Minecraft RTX GPUs Benchmarked: Which Runs It Best in 2023?

Every ray tracing capable Nvidia, AMD, and Intel GPU put to the test (Updated)

Minecraft RTX was officially released to the public in April 2020, after first being teased at Gamescom in August 2019. Since then, a lot has changed, with regular updates to the game and a whole slew of ray tracing capable graphics cards that didn't exist in early 2020. The best graphics cards now all support DXR (DirectX Raytracing) and VulkanRT, and you can see how the various GPUs stack up in other games by checking out our GPU benchmarks hierarchy. But how does Minecraft RTX run these days?

To be clear, we're not talking about regular Minecraft here. That can run on a potato, even at high resolutions. For example, the Intel Arc A380 (which, as you'll see later, falls well short of a playable 30 fps with ray tracing on) plugs along happily at 93 fps at 4K without DXR, using 4xMSAA and a 24 block rendering distance. Put another way, performance is about ten times higher than what you'll get with ray tracing enabled.

We retested all of the Intel Arc GPUs with the latest 31.0.101.4369 drivers. The driver notes indicate up to a 35% improvement in performance with Minecraft RTX. In our test sequence, we saw gains of up to 43%. As impressive as that might seem, there's probably still room for additional improvements.

So why would anyone want to turn on ray tracing in the first place, if the performance hit is so severe? First: Look at all the shiny surfaces! Minecraft RTX is a completely different looking game compared to vanilla Minecraft. Second, with cards like the Nvidia RTX 4090 now available, you can still get very good performance even at maxed out settings.

We've now tested Minecraft RTX on every DXR-capable graphics card, ranging from the lowly AMD RX 6400 and Nvidia RTX 3050 up to the chart topping 4090. We're using the latest drivers as well: AMD 23.2.2, Intel 31.0.101.4369, and Nvidia 528.49. (The Intel drivers are the main change with this update, as the latest release has improved Minecraft RTX performance quite a bit.) We'll have more details on the ray tracing implementation of Minecraft below, but let's get to the benchmarks first, since that's likely why you're here.

Minecraft RTX Testing Details

We've been using Minecraft RTX — or just Minecraft, as it's normally referred to these days — for a while now in our graphics card reviews. If you want to see just how punishing DXR calculations can be, it's great for stressing lesser GPUs. We're now running on a state of the art test system, with a Core i9-13900K with 32GB of DDR5-6600 memory, connected to a 4K 240Hz Samsung Neo G8 32 monitor. Full details on our test PC are in the boxout.

TOM'S HARDWARE TEST PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

Samsung Neo G8 32

GRAPHICS CARDS

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 6000-Series

Intel Arc A770 16GB

Intel Arc A770 8GB

Intel Arc A750

Intel Arc A380

Nvidia RTX 4090

Nvidia RTX 4080

Nvidia RTX 4070 Ti

Nvidia RTX 30-Series

Nvidia RTX 20-Series

We previously tested Minecraft RTX using other CPUs and different memory conditions. While those do matter to some extent, especially if you're using something like an RTX 4090, we feel that anyone trying to run Minecraft RTX will likely have at least 16GB of memory and a reasonably capable CPU. For these tests, we're only looking at the impact of the GPU, and we've more or less maxed out all of the other hardware to eliminate bottlenecks as much as possible.

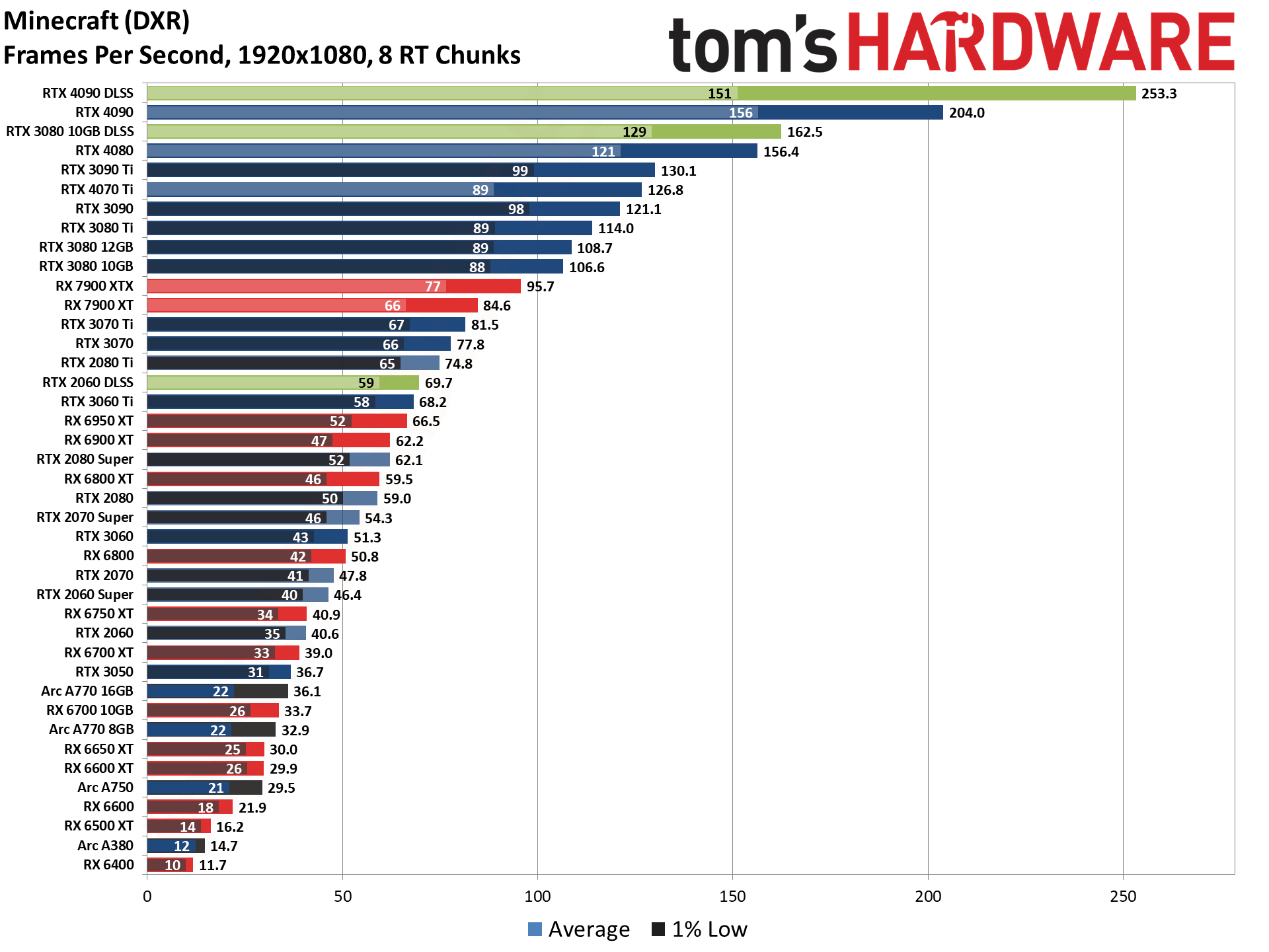

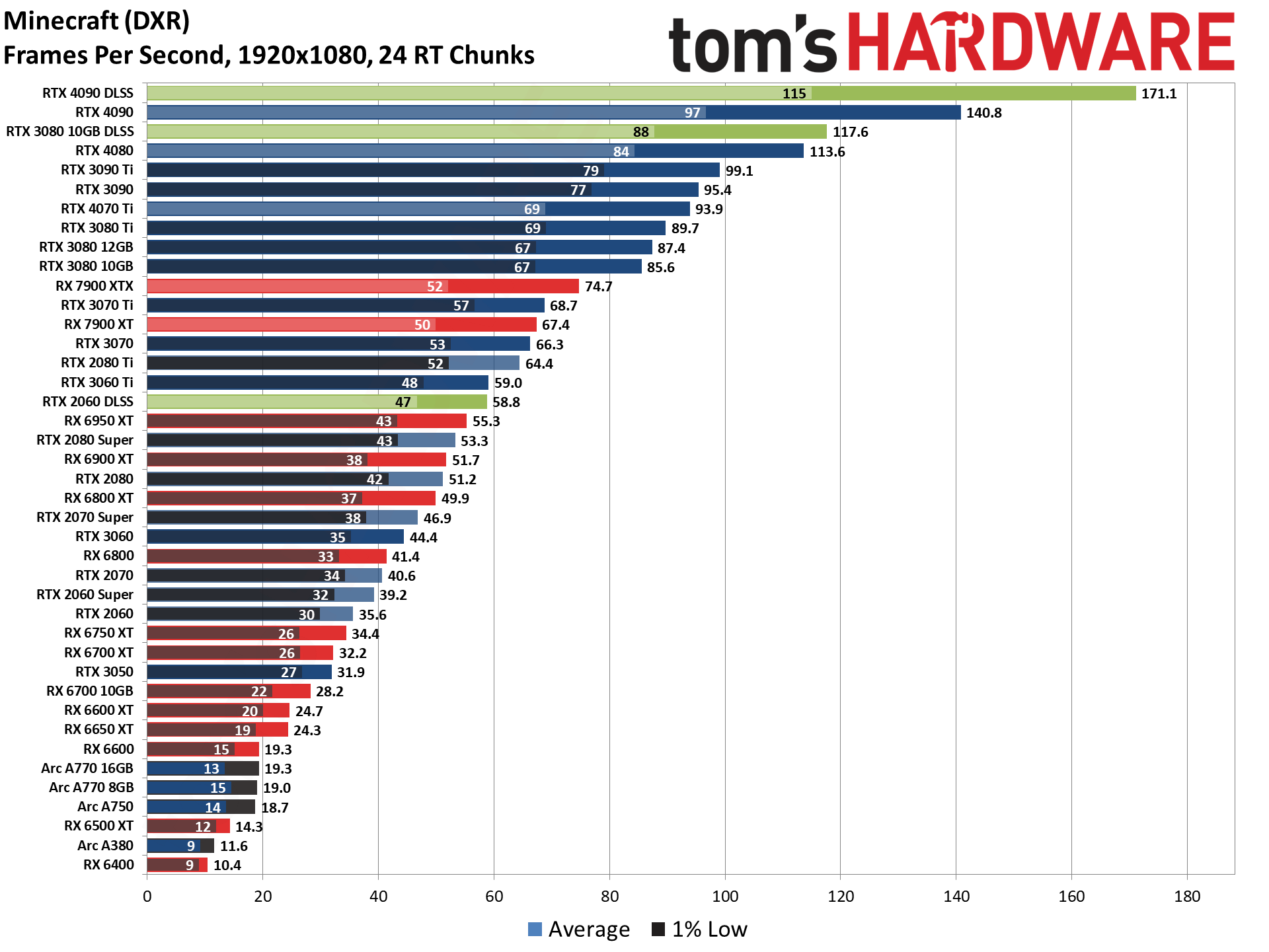

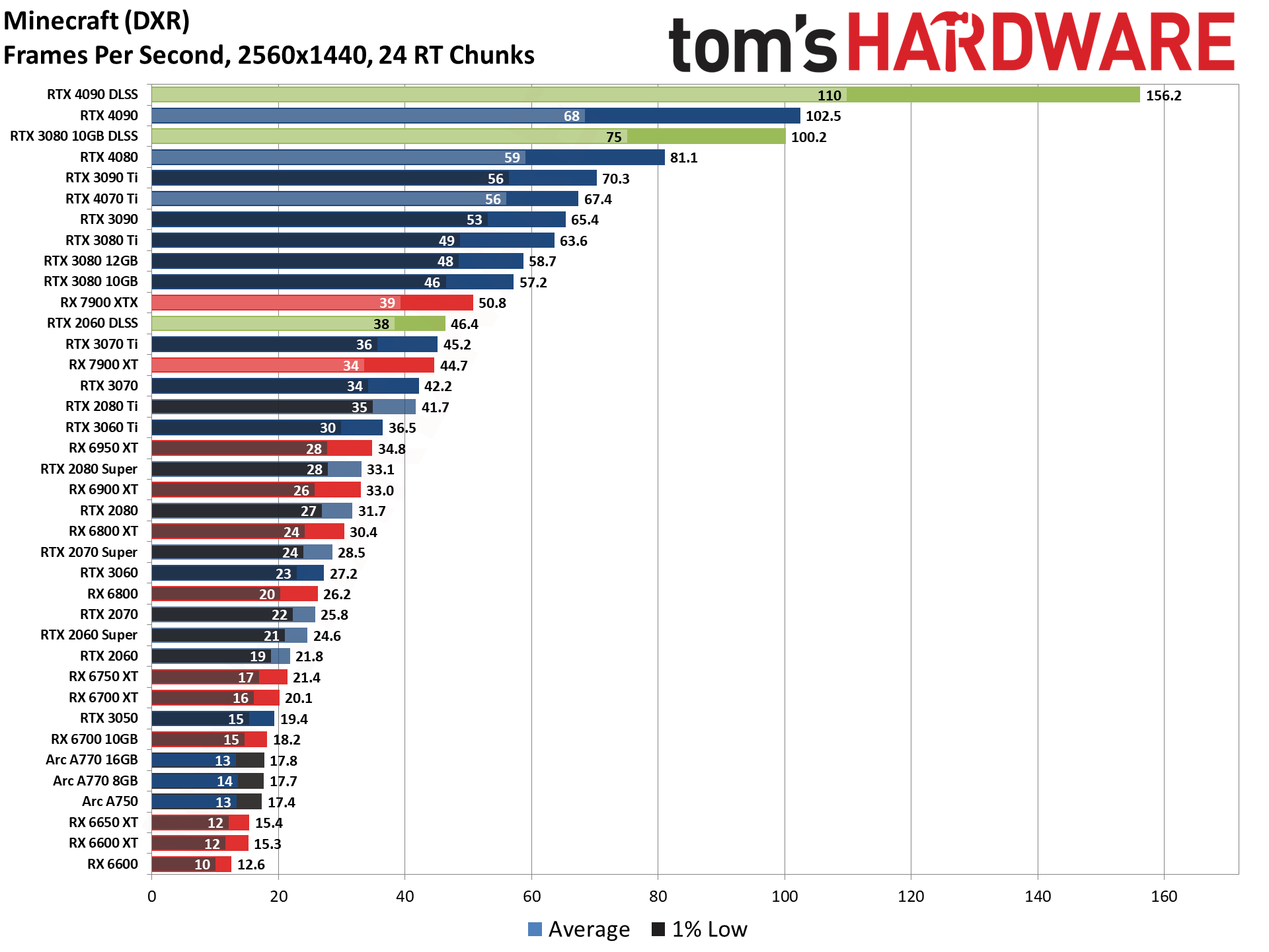

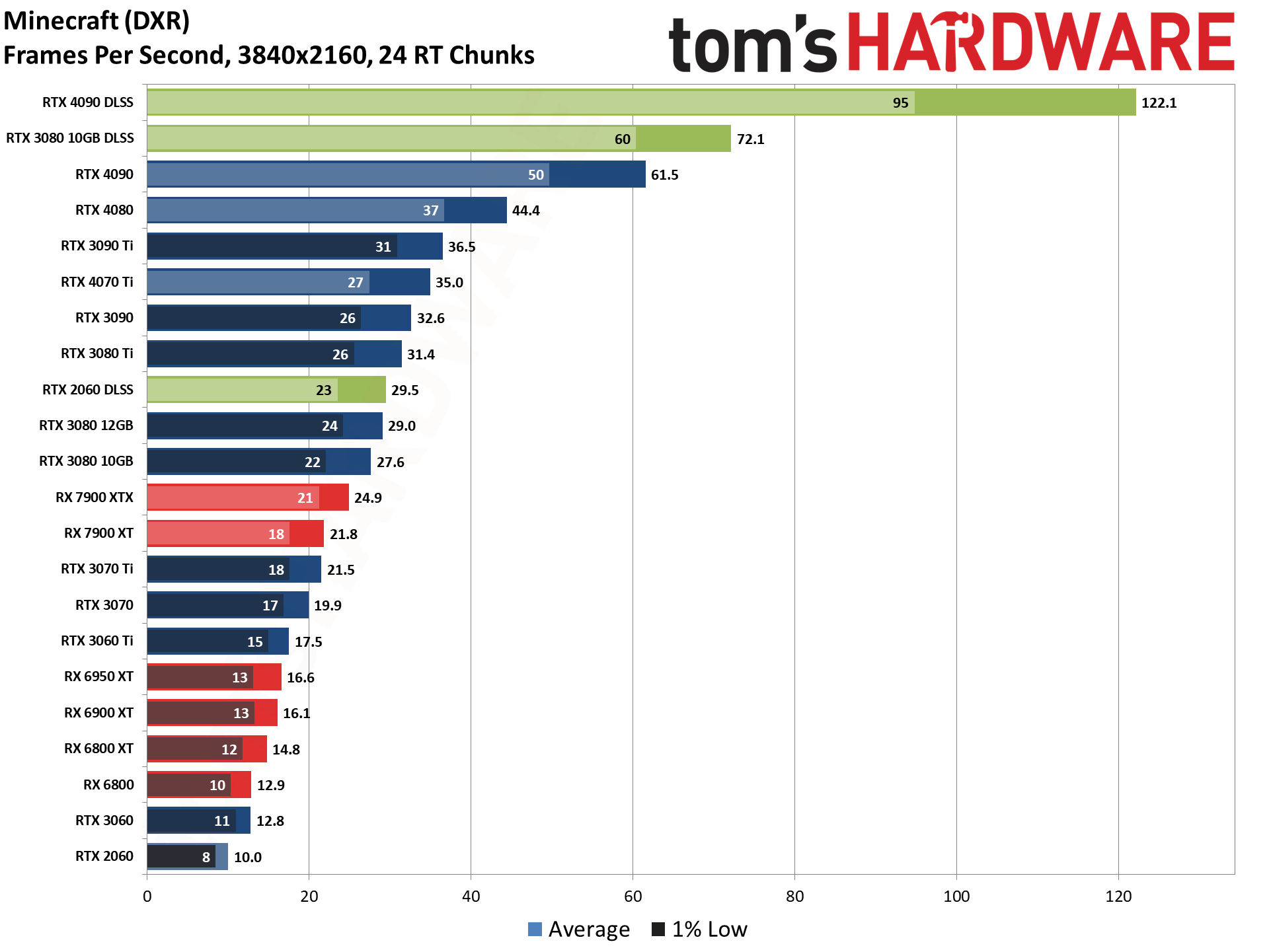

We're testing at four settings: 1920x1080 with 8 RT Chunks rendering distance, and 1920x1080, 2560x1440, and 3840x2160 with a 24 RT Chunks rendering distance — the maximum available. We're focusing on native rendering for the most part, but we do have a couple of DLSS results in the charts to show how that can affect things.

Note that in vanilla Minecraft, the rendering distance can be set as high as 96 chunks (it used to be 160, or perhaps that varies by map). Higher rendering distances will put more of a strain on the CPU, but certainly running with 64 chunks isn't uncommon.

Our test map is Portal Pioneers RTX, which includes a helpful benchmarking setup. You can find various generic RTX texture packs to use for your own maps, though you can't simply enable ray tracing effects without such a pack. That's because the ray traced rendering requires additional information on the various blocks to tell the engine how they should be rendered — e.g. are they reflective, do they glow, etc.

One other important note is that DLSS upscaling can't be tuned: it's either on or off with an Nvidia GeForce RTX card. As far as we can tell, 1080p and lower resolutions use 2x upscaling (Quality mode), 1440p uses 3x upscaling (Balanced mode), and 4K and above use 4x upscaling (Performance mode). The blocky nature of Minecraft does lend itself rather well to DLSS upscaling, however, so even 4x upscaling at 4K still looks very good.
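To make those factors concrete, here is a rough sketch of the internal render resolution each one would imply. It assumes the 2x/3x/4x figures describe the ratio of output pixels to rendered pixels (so each axis shrinks by the square root of the factor), which is an interpretation rather than anything the game exposes. The function name `internal_resolution` is made up for illustration.

```python
import math

def internal_resolution(out_width, out_height, pixel_factor):
    """Estimate the pre-upscale render size for a given output size.

    Assumes pixel_factor is the ratio of output pixels to rendered pixels,
    so each axis is scaled down by sqrt(pixel_factor).
    """
    axis_scale = 1.0 / math.sqrt(pixel_factor)
    return round(out_width * axis_scale), round(out_height * axis_scale)

# Factors as described in the text: 2x at 1080p, 3x at 1440p, 4x at 4K.
modes = [((1920, 1080), 2), ((2560, 1440), 3), ((3840, 2160), 4)]
for (width, height), factor in modes:
    rendered = internal_resolution(width, height, factor)
    print(f"{width}x{height} with {factor}x upscaling renders at roughly {rendered}")
```

Under that assumption, 4K with 4x upscaling works out to a 1920x1080 internal render, which helps explain why the performance gains grow with resolution.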

Minecraft RTX Graphics Card Performance

We've tested virtually every card at every setting that could possibly make sense, including plenty of settings that don't make sense at all! Basically, we stopped testing higher resolutions and settings once a card dropped below 20 fps, though we did test all of the cards at both 1080p settings just for the sake of completeness. Let's start with the "easiest" setting, 1920x1080 and an 8 RT chunk render distance.

If you've seen results like this before, you won't be surprised to see that Minecraft RTX runs far better on Nvidia RTX hardware than on anything else — and that's not even factoring in DLSS upscaling. AMD does offer several GPUs that can now break 60 fps, but even the RX 7900 XTX falls behind the RTX 3080, and the fastest previous gen RX 6950 XT trails the RTX 3060 Ti.

To get performance above a steady 60 fps (meaning, the 1% minimum fps is also above 60), you'll need at least an RTX 2080 Ti, or alternatively an RTX 2060 basically gets there if you turn on DLSS. If you're only looking to clear 60 fps average performance, the RTX 2080 Super and above will suffice. For a bare minimum playable experience of over 30 fps (average), the RX 6650 XT and above will suffice.
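For anyone who wants to apply the same "steady 60 fps" test to their own captures, the sketch below computes an average fps and a 1% low from a list of frame times. The definition of the 1% low used here (average fps across the slowest 1% of frames) is one common convention and an assumption on our part, since capture tools differ. The function names and sample data are hypothetical.

```python
def fps_summary(frame_times_ms):
    """Return (average fps, 1% low fps) from per-frame render times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds

    # Assumed definition: average fps over the slowest 1% of frames.
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst_seconds = sum(slowest_first[:count]) / 1000.0
    low_fps = count / worst_seconds
    return average_fps, low_fps

def is_steady(frame_times_ms, target_fps=60.0):
    """True when both the average and the 1% low clear the target."""
    average_fps, low_fps = fps_summary(frame_times_ms)
    return average_fps > target_fps and low_fps > target_fps

# Hypothetical capture: 990 frames at 12.5 ms plus 10 slow frames at 20 ms.
sample = [12.5] * 990 + [20.0] * 10
average, low = fps_summary(sample)
print(f"average {average:.1f} fps, 1% low {low:.1f} fps, steady 60: {is_steady(sample)}")
```

In this made-up capture the average is well above 60 fps, but the slow frames pull the 1% low under the target, so the run would not count as a steady 60.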

Intel's Arc Alchemist GPUs have had problems with Minecraft RTX since they first gained support. The latest 4369 drivers help quite a bit, though some oddities remain and we suspect there's still room for improvement. At least 1080p with an 8 chunk RT render distance is now playable on the A750 and above. The Arc A750 usually lands pretty close to the RTX 3060, whereas here even the A770 16GB ends up being quite a bit slower.

Finally, let's talk about DLSS upscaling, with the three sample cards of RTX 2060, RTX 3080, and RTX 4090. The RTX 2060 performance gets a massive boost of 72%, taking it from a borderline 41 fps to a very playable 70 fps. The RTX 3080 sees a decent 52% jump, going from 107 fps to 162 fps. There are limits to what DLSS can do, however, and while the RTX 4090 does improve its average fps by 24%, the 1% lows actually drop a few percent due to the DLSS overhead.
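The uplift percentages are simple ratios of upscaled to native fps. A minimal sketch, using only the rounded averages quoted above (the helper name `percent_gain` is ours):

```python
def percent_gain(native_fps, upscaled_fps):
    """Percentage increase going from native to upscaled performance."""
    return (upscaled_fps / native_fps - 1.0) * 100.0

# Rounded 1080p, 8 RT Chunks averages quoted in the text: (native, with DLSS).
quoted = {"RTX 2060": (41, 70), "RTX 3080": (107, 162)}
for card, (native, dlss) in quoted.items():
    print(f"{card}: {percent_gain(native, dlss):.1f}% faster with DLSS")
```

Working from the rounded fps figures gives results about a point below the quoted 72% and 52%, which is what you would expect if the published percentages were calculated from unrounded results.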

Maxing out the RT Chunks render distance at 24 increases the demands on the GPU a decent amount, at least in areas where you have a relatively unobstructed view. Because the BVH tree — Bounding Volume Hierarchy, used to help optimize the ray tracing calculations — gets constructed on the CPU and passed over to the GPU, some of the hardest hit cards with the increased view distance are the fastest cards. The RTX 4090 performance drops by 31% for example, while the RTX 3070 only loses 15% compared to 8 RT Chunks.
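For readers unfamiliar with a BVH, the idea is to wrap groups of geometry in boxes so that a ray can skip everything inside a box it misses. The sketch below is a generic illustration of that idea, not how Minecraft or any GPU driver actually implements it. The `Node` layout, function names, and block labels are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    lo: tuple                                      # minimum corner (x, y, z)
    hi: tuple                                      # maximum corner (x, y, z)
    children: list = field(default_factory=list)   # nested boxes
    blocks: list = field(default_factory=list)     # leaf payload, e.g. block names

def ray_hits_box(origin, direction, lo, hi):
    """Slab test: does origin + t * direction (t >= 0) pass through the box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < a or o > b:       # parallel to this slab and outside it
                return False
            continue
        t0, t1 = (a - o) / d, (b - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:           # slab intervals no longer overlap
            return False
    return True

def candidate_blocks(node, origin, direction):
    """Collect blocks whose enclosing boxes the ray passes through."""
    if not ray_hits_box(origin, direction, node.lo, node.hi):
        return []                    # the whole subtree is skipped
    found = list(node.blocks)
    for child in node.children:
        found += candidate_blocks(child, origin, direction)
    return found

left = Node((0, 0, 0), (1, 1, 1), blocks=["glass"])
right = Node((4, 0, 0), (5, 1, 1), blocks=["lava"])
root = Node((0, 0, 0), (5, 1, 1), children=[left, right])
print(candidate_blocks(root, (0.5, 0.5, -1.0), (0.0, 0.0, 1.0)))
```

A longer render distance means more blocks and a bigger tree to build and update, which is consistent with the fastest GPUs losing the most performance when that work lands on the CPU.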

If you're looking for at least a 60 fps average, the RTX 2080 Ti now marks the bare minimum GPU you'll need, while keeping minimums at 60 fps basically requires an RTX 3080 or faster. On the AMD side, only the RX 7900 XTX/XT cards can break 60 fps, and in both cases minimums fall quite a bit short of that mark. Dropping your fps target to 30 fps opens the door to more cards, but you'll still need at least an RTX 2060 to get minimums above 30, or an RTX 3050 to get the average fps to 30.

This is another good example of how much faster Nvidia's GPUs are at ray tracing calculations compared to AMD. In traditional games, the RTX 3050 delivers about 23% lower performance than an RX 6600, but in Minecraft RTX it basically ties the RX 6700 XT — a card that's 94% faster in our rasterization GPU benchmarks!

Intel's ray tracing hardware normally looks much better than this as well, for example the Arc A750 lands between the RX 6700 XT and RX 6750 XT in Cyberpunk 2077, one of the most demanding DXR games around (outside of Minecraft). Right now, Intel's Arc A700 cards come in just behind the RX 6600. That's with the updated drivers, but when you look at the 1440p results below, you'll see that something still seems off.

Last, let's talk DLSS again, which is still in Quality mode here. The gains on the RTX 2060 are 65% now, slightly lower than above, so perhaps other bottlenecks are becoming more of a factor. The RTX 4090 at the other extreme sees a 22% increase, again a touch less than before. Finally, our "middle of the road" RTX 3080 gets a 65% improvement. In other words, the DLSS performance increase appears to be mostly resolution dependent in Minecraft RTX, even though the ray tracing workload increased.

Stepping up to 2560x1440 with maxed out settings at 24 RT Chunks, we've dropped the three slowest GPUs from our benchmarks (A380, RX 6400, and RX 6500 XT), but we certainly could have skipped testing a bunch of the other cards. Roughly half of the tested cards can't keep the 1% lows above 30 fps, and only a handful of cards can even average 60 fps — six to be precise, at least when we're not using DLSS. And we're not even looking at a worst-case scenario, as there are certainly more demanding maps available in Minecraft RTX.

If you want to fully clear 60 fps, the only card that can do that at native 1440p is the RTX 4090. To average 60 fps or more, you'll want at least an RTX 3080 Ti. Also note how close the RTX 3080 10GB and RTX 3080 12GB are in performance, which indicates Minecraft RTX hits the RT Cores and GPU shaders far more than it depends on memory bandwidth. We'll see that pattern continue even at 4K below.

At least there are 22 cards that can still break 30 fps average, which is much better than when Minecraft RTX first became available. Back then, only the RTX 2070 Super, RTX 2080, RTX 2080 Super, and RTX 2080 Ti were able to reach "playable" performance (and the Titan RTX as well, if you want to include that status symbol of GPU-dom). But in our updated tests, the RTX 2070 Super now falls short and you'll need an RX 6800 XT or faster.

Intel's Arc A700 cards are barely slower at 1440p than at 1080p as well. Now they land between the RX 6650 XT and the RX 6700 10GB, which seems pretty reasonable overall. The fact that they're only 1–2 fps slower than at 1080p means there's room for improvement in the 1080p results, though we wouldn't expect too much change at 1440p.

DLSS now switches to the "Balanced" algorithm with 3X upscaling, which means it can provide an even more significant boost to performance. The RTX 2060 is basically unplayable at just 22 fps native, but with DLSS it more than doubles performance to hit 46 fps. Even the behemoth RTX 4090 gets a 52% increase in performance thanks to DLSS, going from 102 fps to 156 fps. The RTX 3080 plays middle fiddle again with a 75% increase, jumping from 57 fps to 100 fps and delivering a far better gaming experience.

Wrapping things up with our 4K testing, we've naturally dropped most of the GPUs from testing. Where we had 38 GPUs in total at 1080p, we're down to 19 now, and half of those are again struggling — unless you want to turn on DLSS, which you should if you have an Nvidia card. We just wish you could decide whether to use Performance, Balanced, or Quality mode manually rather than the game devs deciding what's appropriate.

As expected, the RTX 4090 mostly breezes along with just over 60 fps, and it's the only card to break that barrier. Even 30 fps is a difficult target without upscaling, with the RTX 3080 Ti and above proving sufficient. An interesting aside is that the two RTX 3080 cards, one with 10GB on a 320-bit interface and the other with 12GB on a 384-bit interface, end up with very similar performance. Minecraft RTX at least doesn't seem to strongly depend on memory bandwidth based on those results. Meanwhile, none of AMD's GPUs can break 30 fps, and the fastest RX 6000-series cards fall in the high teens.

With DLSS now using Performance mode and 4X upscaling, enabling it gives a huge boost to framerates. The RTX 2060 nearly triples its performance and is mostly playable at just a hair under 30 fps. The RTX 3080 improves by 160%, with its upscaled performance passing the native RTX 4090. And the RTX 4090 effectively doubles its performance.

While you might think 4X upscaling will leave noticeable artifacts, at 4K and with a game like Minecraft — meaning it's very blocky by default, and even the text is intentionally pixelated looking — the resulting output still looks almost as good as native rendering. Considering how demanding 4K would otherwise be, DLSS is basically a prerequisite to get good performance out of that resolution in Minecraft. And at least the frames rendered in Minecraft are true frames and not the DLSS 3 Frame Generation frames.

Ray Tracing vs. Path Tracing

That wraps up our performance testing, but to understand why Minecraft RTX is so demanding, we need to briefly describe how it differs from other RTX enabled games. Nvidia says that Minecraft RTX uses 'path tracing,' similar to what it did with Quake II RTX, whereas most other games like Control and Cyberpunk 2077 only use 'ray tracing.' If you fully understand the difference between ray tracing and path tracing, you probably just rolled your eyes hard. That's because Nvidia has co-opted the terms to mean something new and different.

In short, Nvidia's 'path tracing' in Minecraft RTX just means doing more ray tracing calculations—bouncing more rays—to provide more effects and a higher quality result. Actually, Nvidia also now lists Portal RTX as the only game with "full RT" if you check out Nvidia's list of RTX enabled games (which can mean games with DXR, DLSS, DLAA, or some combination of those).

Path tracing as used in Hollywood films typically means casting a number of rays per pixel into a scene, finding an intersection with an object and determining the base color, then randomly bouncing more rays from that point in new directions. Repeat the process for the new rays until each ray reaches a maximum depth (number of bounces) or fails to intersect with anything, then accumulate the resulting colors and average them out to get a final result.
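The loop described above can be sketched in a few dozen lines. This is a toy illustration only, not how Minecraft RTX or any film renderer works: the scene (one grey sphere resting on a grey ground plane under a uniform white sky), the constants, and all function names are assumptions chosen to keep the example short, and it works with a single brightness value rather than full color.

```python
import math
import random

SKY_RADIANCE = 1.0   # assumed: a uniform white sky is the only light source
ALBEDO = 0.5         # assumed: every surface reflects half the light it receives
MAX_DEPTH = 4        # maximum number of bounces before a path is abandoned

def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center=(0.0, 0.0, -3.0), radius=1.0):
    """Nearest hit on the sphere as (distance, normal), or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    if t < 1e-4:
        return None
    return t, normalize(sub(add(origin, scale(direction, t)), center))

def hit_ground(origin, direction, height=-1.0):
    """Hit on the plane y = height as (distance, normal), or None."""
    if direction[1] >= 0.0:
        return None
    t = (height - origin[1]) / direction[1]
    return (t, (0.0, 1.0, 0.0)) if t >= 1e-4 else None

def random_unit_vector(rng):
    """Uniformly distributed direction on the unit sphere."""
    while True:
        v = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        if dot(v, v) > 1e-12:
            return normalize(v)

def trace_path(origin, direction, rng):
    """Follow one path and return the light it carries back."""
    throughput = 1.0
    for _ in range(MAX_DEPTH):
        hits = [h for h in (hit_sphere(origin, direction),
                            hit_ground(origin, direction)) if h is not None]
        if not hits:
            return throughput * SKY_RADIANCE      # ray escaped to the sky
        distance, normal = min(hits, key=lambda h: h[0])
        origin = add(origin, scale(direction, distance))
        bounce = add(normal, random_unit_vector(rng))  # random diffuse bounce
        direction = normalize(bounce) if dot(bounce, bounce) > 1e-12 else normal
        throughput *= ALBEDO                      # surface absorbs some light
    return 0.0                                    # depth limit reached

def render_pixel(direction, samples, seed=0):
    """Average many random paths through one pixel (camera at the origin)."""
    rng = random.Random(seed)
    direction = normalize(direction)
    total = sum(trace_path((0.0, 0.0, 0.0), direction, rng) for _ in range(samples))
    return total / samples

for samples in (4, 64, 1024):
    print(samples, "samples:", render_pixel((0.0, 0.0, -1.0), samples))
```

Running it with more samples per pixel shows the estimate settling down, which is the grain-to-clean refinement that makes path tracing so expensive.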

That's the simplified version, but the important bit is that it can take thousands of rays per pixel to get an accurate result. A random sampling of rays at each bounce quickly scales the total number of rays needed in an exponential fashion. You do however get a 'fast' result with only a few hundred rays per pixel—this early result is usually grainy and gets refined as additional rays are calculated.
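A quick way to see the exponential growth is to count rays under a simple branching model, where every hit spawns a fixed number of new rays until the depth limit. That model and the function name `rays_per_pixel` are assumptions for illustration; real renderers use various tricks to avoid this worst case.

```python
def rays_per_pixel(primary_rays, branches_per_hit, max_depth):
    """Total rays traced if every hit spawns branches_per_hit new rays."""
    per_primary = sum(branches_per_hit ** depth for depth in range(max_depth + 1))
    return primary_rays * per_primary

# Compare a single bounce ray per hit against four per hit, five bounces deep.
for branches in (1, 2, 4):
    print(f"{branches} rays per hit:", rays_per_pixel(1, branches, 5), "rays per primary ray")
```

With one new ray per hit the cost grows linearly with depth, but at four rays per hit a single primary ray already fans out to more than a thousand rays after five bounces.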

Ray tracing is similar except it doesn't have the random sampling and integral sums of a bunch of rays. Where you might need tens of thousands of samples per pixel to get a good 'final' rendering result with path tracing, ray tracing focuses on calculating rays at each bounce toward other objects and light sources. It's still complex, and often ray and path tracing are used as interchangeable terms for 3D rendering, but there are some technical differences and advantages to each approach.

Doing full real-time path tracing or ray tracing in a game isn't practical yet, especially not with more complex game environments. Instead, games with ray tracing currently use a hybrid rendering approach. Most of the rendering work is still done via traditional rasterization, which our modern GPUs are very good at, and only certain additional effects get ray traced.

Typical ray traced effects include reflections, shadows, ambient occlusion, global illumination, diffuse lighting, and caustics, and each effect requires additional rays. Most games with ray tracing support only use one or two effects, though a few might do three or four. Control uses ray tracing for reflections, contact shadows and diffused lighting, while Hogwarts Legacy can use ray tracing for reflections, shadows, and ambient occlusion.

Compare that with Minecraft RTX, where you get ray tracing for reflections, shadows, global illumination, refraction, ambient occlusion, emissive lighting, atmospheric effects, and more. That's a lot more rays, though it's still not path tracing in the traditional sense. Unless you subscribe to the 'more rays' being synonymous with 'path tracing' mindset.

(Side note: the SEUS PTGI tool is a different take on path tracing. It depends on Minecraft's use of voxels to help speed up what would otherwise be complex calculations, and it can run on GTX and AMD hardware. There's also the RTGI ReShade tool that uses screen space calculations and other clever tricks to approximate path tracing, but it lacks access to much of the data that would be required to do 'proper' path tracing.)

Closing Thoughts

There's a lot to digest with Minecraft and ray tracing. The game in its 'normal' mode can run on everything from potatoes—along with smartphones and tablets—up through the beefiest of PCs. Cranking up the render distance in the past could cause a few oddities and put more of a load on the CPU, but extreme framerates aren't really needed. Minecraft RTX significantly ups the ante in terms of the GPU hardware requirements.

The lighting, reflections, and other graphical enhancements definitely make a big difference, both in performance as well as visuals. Minecraft has never looked so pretty! Nor has it looked so dark when you're mining underground — bring lots of torches. The core survival and exploration gameplay hasn't changed, of course, but makers who spend their time building intricate Minecraft worlds now have a host of new tools available.

It's now almost three years since Minecraft RTX first became available to the public, and a lot has changed in that time. Where formerly only RTX 20-series GPUs were available, we now have the RTX 30- and 40-series cards, AMD's RX 6000- and 7000-series GPUs, and even the handful of Intel Arc offerings. As you can see from our testing, Nvidia's GPUs still generally provide the best experience, and DLSS delivers another level of performance that currently can't be matched. It's too bad the game hasn't been updated with FSR 2 or XeSS support.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

Roland Of Gilead: Never understood the appeal of this game. It's just so boring. Each to their own, I guess. -

doughillman: Roland Of Gilead said: Never understood the appeal of this game. It's just so boring. Each to their own, I guess.

Yeah, it's mostly for people with imagination who can set their own goals. Not for everyone. -

chalabam: Roland Of Gilead said: Never understood the appeal of this game. It's just so boring. Each to their own, I guess.

It's the modern version of playing with plastic bricks. -

PlaneInTheSky: I remember how raytracing was heralded on Tomshardware, and its affiliate site PCGamer, as a revolution in real-time graphics.

And here we are, raytracing...Minecraft..., that most turn off because it looks unrealistic.

But we have reflective puddles though...not because mirror-like puddles look realistic...not because you couldn't do this without raytracing...but because Nvidia paid developers to put them everywhere🤦 -

digitalgriffin: Is RT prime time?

Probably not. And I doubt it ever will be in our lifetimes.

But you need to look at it this way: do you know the first implementation of tessellation was on the ATi 9800? It was Serious Sam 2.

So you know how long it took tessellation to be mainstream?

But the thing is, that tessellation, even selectively applied did enhance the game substantially.

Same with RT. For example Hogwart's floor reflections from the windows and soft shadows are just a thing of beauty. -

PlaneInTheSky: digitalgriffin said: Same with RT. For example Hogwart's floor reflections from the windows and soft shadows are just a thing of beauty.

I much prefer the more defined rasterized shadows, the soft RTX raytraced shadows lack character imo.

And it's not like you need raytracing to do a penumbra, there are plenty of algorithms to soften shadow edges without resorting to raytracing that tanks FPS. Softening shadows just takes away character.

https://i.postimg.cc/jxdfcC1J/fddgdg.jpg

---------------------------------------------------------------------------------------------------------------------

RTX also causes unrealistically saturated colors and light bleeding that reminds me of the horrible bloom effect.

https://i.postimg.cc/jRcdXvYZ/FGDGDGDG.jpg

-----------------------------------------------------------------------------------------------------------------------------------------------

RTX also causes lighting bugs. Developers have confirmed these are not "special effects" but RTX rendering errors.

Minecraft RTX has plenty of these rendering problems too, it's likely linked to the fact that there is a raytrace bounce cutoff to maintain performance, and these incomplete renders result in very weird lighting bugs.

https://i.postimg.cc/B4tgs7M1/dfgdfgdggggg.jpg -

AgentBirdnest: Really nice article! As a 2060 owner, I'm unsatisfied with the performance in RTX Minecraft at 1440p, even with DLSS.

I'm currently saving up for a new graphics card. Hopefully will be able to afford it in the next month or two. RTX Minecraft is actually a big priority for me, and I'd love to be able to play it at 90FPS (I usually can't tell a difference in framerates higher than that.) It's good to know I can get that level of performance with a 3080 or better. : ) -

ohio_buckeye: This isn’t on pc but my 6 year old nephew is a Minecraft junkie. That boy is constantly playing Minecraft on ps4 or trying to watch Minecraft on YouTube. -

digitalgriffin: PlaneInTheSky said: I much prefer the more defined rasterized shadows, the soft RTX raytraced shadows lack character imo.

And it's not like you need raytracing to do a penumbra, there are plenty of algorithms to soften shadow edges without resorting to raytracing that tanks FPS. Softening shadows just takes away character.

https://i.postimg.cc/jxdfcC1J/fddgdg.jpg

---------------------------------------------------------------------------------------------------------------------

RTX also causes unrealistically saturated colors and light bleeding that reminds me of the horrible bloom effect.

https://i.postimg.cc/jRcdXvYZ/FGDGDGDG.jpg

-----------------------------------------------------------------------------------------------------------------------------------------------

RTX also causes lighting bugs. Developers have confirmed these are not "special effects" but RTX rendering errors.

Minecraft RTX has plenty of these rendering problems too, it's likely linked to the fact that there is a raytrace bounce cutoff to maintain performance, and these incomplete renders result in very weird lighting bugs.

https://i.postimg.cc/B4tgs7M1/dfgdfgdggggg.jpg

Penumbras with rasterization have limitations which often lead to banding or stepping. And with dynamic objects (i.e. NPCs and characters) it can lead to stair-stepped jagged edges. You can do more light projections with smaller steps by increasing the recursion limit on the bounding box, but the slowdown in performance is significant.