The five worst Nvidia GPUs of all time: Infamous and envious graphics cards

Nvidia GPUs can flop, too.

Though Nvidia and AMD (and ATI before 2006) have long struggled for supremacy in the world of graphics, it's pretty clear that the green giant has generally held the upper hand. But despite Nvidia's track record and ability to rake in cash, the company has put out plenty of bad GPUs — just like the five worst AMD GPUs. (See also: The five best AMD GPUs — we're covering the good and the bad.) Sometimes Nvidia rests on its laurels for a little too long, or reads the room terribly wrong, or simply screws up in spectacular fashion.

We've ranked what we think are Nvidia's five worst GPUs in the 24 years since the beginning of the GeForce brand in 1999, which helped usher in modern graphics cards. We're working from the best of the worst down to the worst of the worst. These choices are based both on individual cards and whole product lines, which means a single bad GPU in a series of good GPUs won't necessarily make the cut.

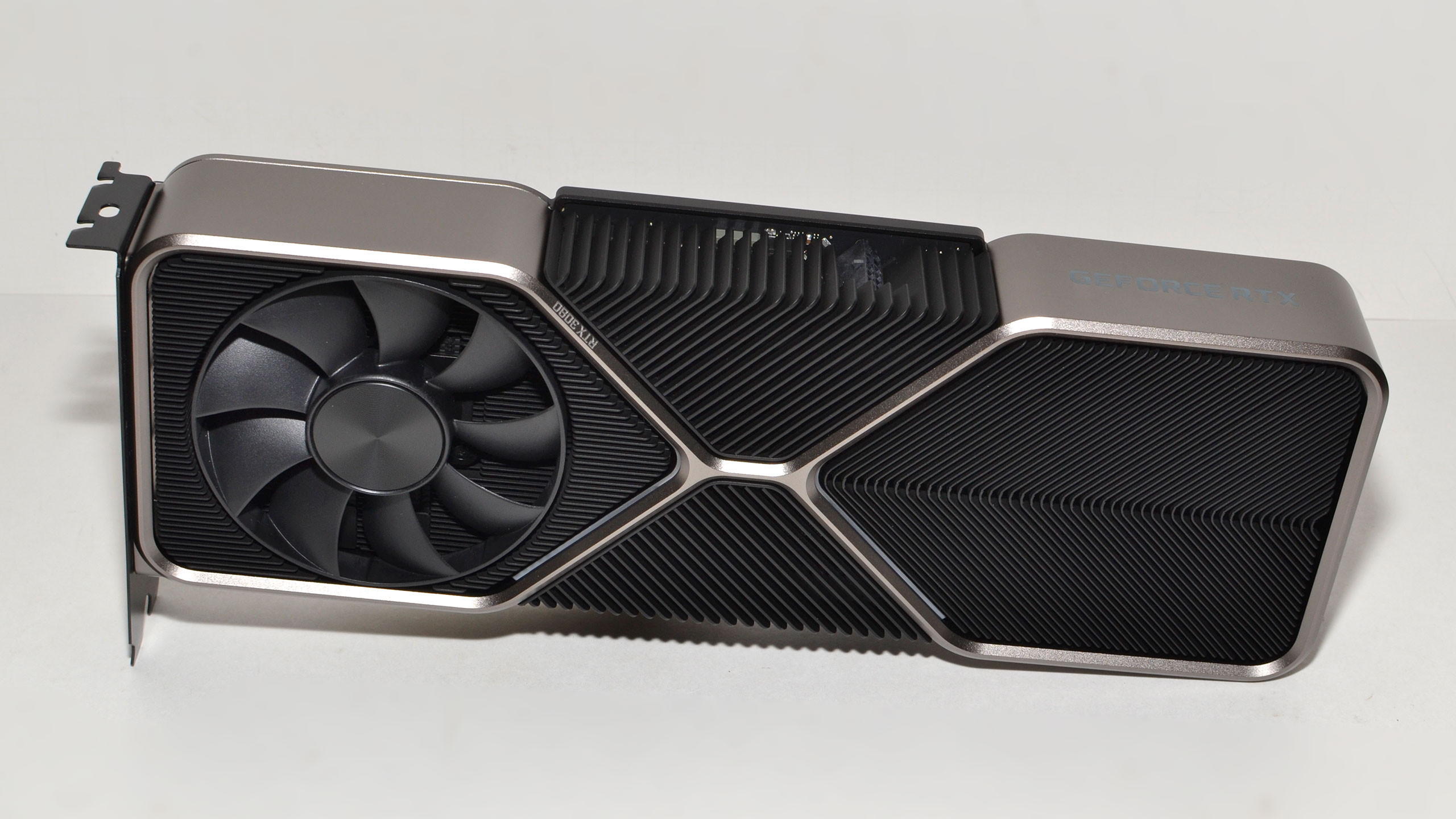

5 — GeForce RTX 3080: The GPU generation you couldn't buy

Nvidia achieved a comfortable lead over AMD with its GTX 10-series in 2016, and going into 2020 Nvidia was still able to maintain that lead. However, AMD was anticipated to launch a GPU that was truly capable of going toe-to-toe with Nvidia's best, which meant Nvidia's upcoming GPUs would need to be fast enough to hold it off. The situation was complicated by the fact that the RTX 20-series neglected traditional rasterization performance in favor of then-niche ray tracing and AI-powered resolution upscaling. For the first time in a long time, Nvidia was caught with its pants down.

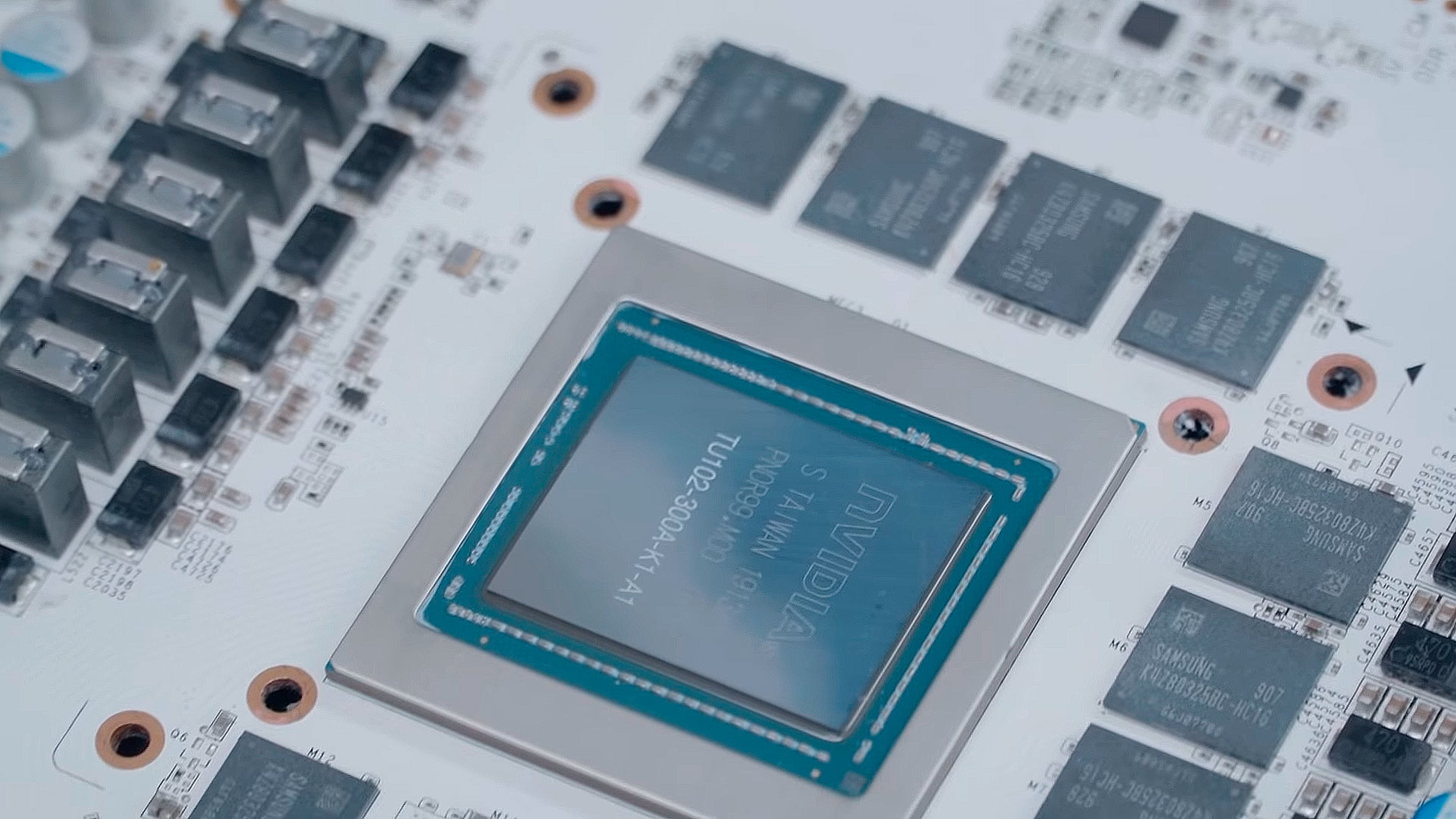

What Nvidia came up with for the RTX 30-series was the Ampere architecture, which addressed many of the issues with Turing-based RTX 20-series chips. Nvidia upgraded from TSMC's 12nm process (essentially its 16nm node but able to make big chips) to Samsung's 8nm, which meant increased density for additional cores and more efficiency. Ampere also turned the dedicated integer execution units into combined floating-point plus integer units, meaning it could trade integer performance for additional floating point compute, depending on the workload. Nvidia advertised this change as a massive increase in core count and TFLOPS, which was a bit misleading.

Launching in September of 2020 with the RTX 3080 and 3090, Nvidia preempted AMD's RDNA 2-powered RX 6000-series. The 3080 at $699 was about on par with the $1,499 3090, and it was easily faster than the RTX 2080 Ti in both ray-traced and non-ray traced games. AMD's $649 RX 6800 XT and RX 6800 showed up in November, with the RX 6900 XT in December, and while they did tie the 3080 and 3090 in non-ray traced scenarios, they lagged behind with ray tracing enabled, and they also lacked a resolution upscaler like DLSS.

We originally rated the 3080 with 4.5 out of 5 stars, so you might be wondering how in the world it could have ended up on this list. Well, it boils down to everything that happened after launch day. The 3080's most immediate problem was that you couldn't realistically buy it at MSRP. A big part of that was the infamous GPU shortage, induced by the Covid pandemic and then massively exacerbated by cryptocurrency miners, which pushed prices well north of $1,500. But even after the shortage technically ended in early 2022, almost no 3080 10GBs went for $699 or less.

Meanwhile, high-end RX 6000 cards like the 6800 XT came out of the GPU shortage with sub-MSRP pricing. The 6800 XT has been going for less than its $649 MSRP for all of this year and part of last year, and today it costs less than $500 brand-new. AMD also now has FSR 2, which doesn't quite match DLSS, but it's a viable resolution upscaling and frame generation alternative. RTX 30 cards don't even get DLSS 3 frame generation and have to rely on FSR 3 frame generation instead.

Then there are the wider problems with the RTX 30-series. Pretty much every 30-series card has much less memory than it should, except for the 3060 12GB and the 3090 with its 24GB of VRAM. On launch day things were fine, but more and more evidence is cropping up that most RTX 30-series cards just don't have enough memory, especially in the latest games. Then there's the RTX 3050, the lowest-end member of the family with an MSRP of $249 and a real price of $300 or more for most of its life. Compare that to the $109 GTX 1050; in just two generations, Nvidia raised the minimum price of its GPUs by $140.

Even without the GPU shortage, it seems that the RTX 30-series was doomed from the start thanks to its limited amount of memory and merely modest advantage in ray tracing and resolution upscaling. The extreme retail prices were enough to make the RTX 3080 and most of its siblings some of Nvidia's worst cards, and directly led to Nvidia increasing prices on its next generation parts.

4 — GeForce RTX 4060 Ti: One step forward, one step back

It might be premature to call a GPU that came out half a year ago one of the worst ever, but sometimes it's obvious that it has to be. Not only is the RTX 4060 Ti a pretty bad graphics card in its own right, but it's something of a microcosm for the entire RTX 40-series and all of its problems.

Nvidia's previous RTX 30 GPUs had some real competition in the form of AMD's RX 6000-series, especially in the midrange. Though the RTX 3060 12GB was pretty good thanks to its increased VRAM capacity, higher-end RTX 3060 Ti and 3070 GPUs came with just 8GB and didn't have particularly compelling price tags, even after the GPU shortage ended. An (upper) midrange RTX 40-series GPU could have evened the playing field.

The RTX 4060 Ti does have some redeeming qualities: It's significantly more power efficient than 30-series cards, and it uses the impressively small AD106 die that's three times as dense as the GA104 die inside the RTX 3070 and RTX 3060 Ti. But there's a laundry list of things that are wrong with the 4060 Ti, and it primarily has to do with memory.

There are two models of the 4060 Ti: one with 8GB of VRAM and the other with 16GB. That the 8GB model of this GPU even exists is problematic, because it's so close in performance to the 3070 that already has memory capacity issues. So, obviously you should get the 16GB model just to make sure you're future-proofed, except the 16GB model costs a whopping $100 extra. With the 4060 Ti, Nvidia is basically telling you that you need to spend $499 instead of $399 to make sure your card actually performs properly 100% of the time.

The 4060 Ti also cuts corners in other areas, particularly when it comes to memory bandwidth. Nvidia gave this GPU a puny 128-bit memory bus, half that of the 256-bit bus on the 3070 and 3060 Ti, and this results in a meager bandwidth of 288GB/s on the 4060 Ti, whereas the previous generation had 448GB/s. Additionally, the 4060 Ti has eight instead of 16 PCIe lanes, which shouldn't matter too much, but it's still yet more penny pinching. A larger L2 cache helps with the bandwidth issue, but it only goes so far.
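The bandwidth figures above follow directly from bus width and memory speed. Here is a minimal sketch of that arithmetic; the per-pin data rates (18 Gbps GDDR6 on the 4060 Ti, 14 Gbps on the 3070 and 3060 Ti) are our assumption and aren't stated above, and the function name is purely illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each pin on the bus moves `data_rate_gbps` gigabits per second,
    so total bandwidth is (bus width in bits / 8 bits per byte) * data rate.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Assumed per-pin data rates (not given in the article text):
# 18 Gbps GDDR6 on the RTX 4060 Ti, 14 Gbps GDDR6 on the RTX 3070 / 3060 Ti.
rtx_4060_ti = memory_bandwidth_gbs(128, 18.0)
rtx_3070 = memory_bandwidth_gbs(256, 14.0)

print(f"RTX 4060 Ti: {rtx_4060_ti:.0f} GB/s")
print(f"RTX 3070:    {rtx_3070:.0f} GB/s")
print(f"4060 Ti has {rtx_4060_ti / rtx_3070:.0%} of the 3070's bandwidth")
```

Under those assumptions, halving the bus width costs more than the faster memory gives back, which is why the 4060 Ti leans so heavily on its larger L2 cache.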

In the end, the RTX 4060 Ti is basically just an RTX 3070 with barely any additional performance, decently better efficiency, and support for DLSS 3 Frame Generation. And sure, the 8GB model costs $399 instead of the 16GB model's $499 (now $449-ish), but it's also vulnerable to the same issues that the 3070 can experience. That means you should probably just buy the 16GB model, which launched at the same $499 price as the outgoing 3070. Either way, you're forced to compromise.

The RTX 4060 Ti isn't uniquely bad, echoing what we've seen out of the RTX 40-series as a whole. To date it's Nvidia's most expensive GPU lineup by far, ranging from the RTX 4060 starting at $299 to the RTX 4090 starting at $1,599 (and often costing much more). The 4060 Ti isn't the only 40-series card with a controversial memory setup, as Nvidia planned to initially launch the RTX 4070 Ti as the RTX 4080 12GB. Even with the name change and a $100 price cut, that graphics card warrants a mention on this list. But frankly, nearly every GPU in the RTX 40-series (other than the halo RTX 4090) feels overpriced and equipped with insufficient memory for 2022 and later.

Let's be clear. What we wanted from the RTX 40-series, and what Nvidia should have provided, was a 64-bit wider interface at every tier, and 4GB more memory at every level — with the exception of the RTX 4090, and perhaps an RTX 4050 8GB. Most of our complaints with the 4060 and 4060 Ti would be gone if they were 12GB cards with a 192-bit bus. The same goes for the 4070 and 4070 Ti: 16GB and a 256-bit bus would have made them much better GPUs. And the RTX 4080 and (presumably) upcoming RTX 4080 Super should be 320-bit with 20GB. The higher memory configurations could have even justified the increase in pricing to an extent, but instead Nvidia raised prices and cut the memory interface width. Which reminds us in all the bad ways of the next entry in this list...
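The link between "64 bits wider" and "4GB more" isn't a coincidence. As a rough sketch, assume each GDDR6/GDDR6X chip sits on its own 32-bit channel and holds 2GB (that chip size is our assumption for illustration, as are the names below), with "clamshell" mode putting two chips on each channel:

```python
CHANNEL_WIDTH_BITS = 32  # each GDDR6/GDDR6X chip connects over a 32-bit channel
CHIP_CAPACITY_GB = 2     # assumption: 2GB (16Gb) memory chips throughout


def vram_capacity_gb(bus_width_bits: int, chips_per_channel: int = 1) -> int:
    """VRAM capacity implied by bus width, for a fixed chip size.

    chips_per_channel=2 models a clamshell layout, where two chips
    share one 32-bit channel (doubling capacity, not bandwidth).
    """
    channels = bus_width_bits // CHANNEL_WIDTH_BITS
    return channels * chips_per_channel * CHIP_CAPACITY_GB


# Bus widths discussed above, from the shipping 128-bit cards up to the wish list.
for width in (128, 192, 256, 320):
    print(f"{width}-bit bus -> {vram_capacity_gb(width)}GB")

# The 16GB RTX 4060 Ti keeps the 128-bit bus and doubles up chips instead.
print(f"128-bit clamshell -> {vram_capacity_gb(128, chips_per_channel=2)}GB")
```

Two extra 32-bit channels means two extra 2GB chips, so under this assumption every 64-bit step up in bus width brings 4GB more memory and proportionally more bandwidth, whereas the clamshell route adds capacity only.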

3 — GeForce RTX 2080 and Turing: New features we didn't ask for and higher prices

Nvidia's influence in gaming graphics was at its peak after the launch of the GTX 10-series. Across the product stack, AMD could barely compete at all, especially in laptops against Nvidia's super efficient GPUs. And when Nvidia had a big lead, it would often follow it up with new GPUs that would offer real upgrades over the existing cards. Prior to the launch of the Turing architecture, the community was excited for what Jensen Huang and his team were cooking up.

In the summer of 2018, Nvidia finally revealed its 20-series of GPUs — and they weren't the GTX 20-series, but instead debuted as the RTX 20-series, with the name change signifying the fact that Nvidia had introduced the world's first real-time ray tracing graphics cards. Ray tracing was and still is an incredible technological achievement, described as the "holy grail" of graphics. That technology had made its way into gaming GPUs, promising to bring about a revolution in fidelity and realism.

But then people started reading the fine print. And let's be clear: More than any other GPUs on this list, this is really one where the entirety of the RTX 20-series family gets the blame.

First, there were the prices: These graphics cards were incredibly expensive (worse in many ways than the current 40-series pricing). The RTX 2080 Ti debuted at $1,199. No top-end Nvidia gaming graphics card using a single graphics chip had ever gotten into four-digit territory before, unless you want to count the Titan Xp (we don't). The RTX 2080 was somehow more expensive than the outgoing GTX 1080 Ti. Nvidia also released the Founders Edition version of cards a month before the third-party models, with a $50 to $200 price premium, and that premium didn't really go away in some cases.

Also, ray tracing was cool and all, but literally no games supported it when the RTX 20-series released. It wasn't like all the game devs could suddenly switch over from traditional methods to ray tracing, ignoring 95% of the gaming hardware market. Were these GPUs really worth buying, or should people just skip the 20-series altogether?

Then the reviews came in. The RTX 2080 Ti by virtue of being a massive GPU was much faster than the GTX 1080 Ti. However, the RTX 2080 could only match the 1080 Ti and they were nominally the same price. Plus, the 2080 had 3GB less memory. The RTX 2070 and RTX 2060 fared a bit better, but they didn't offer mind-boggling improvements like the previous GTX 1070 and GTX 1060 did, and again increased generational pricing by $100. The single game in 2018 that offered ray tracing, Battlefield V, ran very poorly even on the RTX 2080 Ti: It was only able to get an average of 100 FPS in our 1080p testing. To be clear, the 2080 Ti was advertised as a 4K gaming GPU.

But hey, building an ecosystem of ray traced games takes time, and Nvidia had announced 10 additional titles that would get ray tracing. One of these games got cancelled, and seven of the remaining nine added ray tracing. However, one of those games only added ray tracing for the Xbox Series X/S version, and another two were Asian MMORPGs. Even Nvidia's ray tracing darling Atomic Heart skipped ray tracing when it finally came out in early 2023, five years after the initial RT tech demo video.

The whole RTX branding thing was also thrown into even more confusion when Nvidia later launched the GTX 16-series, starting with the GTX 1660 Ti, using the same Turing architecture but without ray tracing or tensor cores. If RT was the future and everyone was supposed to jump on that bandwagon, why was Nvidia backpedaling? We can only imagine what Nvidia could have done with a hypothetical GTX 1680 / 2020: A smaller die size, all the rasterization performance, none of the extra cruft.

To give Nvidia some credit, it did at least try to address the 20-series' pricing issue by launching 'Super' models with the 2080, 2070, and 2060. Those all offered better bang for buck (though the 2080 Super was mostly pointless). However, this also indicated that the original RTX 20-series GPUs weren't expensive because of production costs — after all, they used relatively cheap GDDR6 memory and were fabbed on TSMC's 12nm node, which was basically 16nm but able to make the big chip for the 2080 Ti. AMD shifted to TSMC 7nm for 2019's RDNA / RX 5000-series. RTX 20-series was probably expensive just because Nvidia believed they would sell at those prices.

In hindsight, the real "holy grail" in the RTX 20-series was Deep Learning Super Sampling, or DLSS, which dovetails into today's AI explosion. Although DLSS got off to a rocky start — just like ray tracing, with lots of promises being broken and the quality not being great — today the technology is present in 500 or so games and works amazingly well. Nvidia should have emphasized DLSS much more than ray tracing. Even today, only 37% of RTX 20-series users enable ray tracing, while 68% use DLSS. It really should have been DTX instead of RTX.


2 — GeForce GTX 480: Fermi round one failed to deliver

Although Nvidia was used to being in first place by the late 2000s, in 2009 the company lost the crown to an ATI recently acquired by AMD. The HD 5000-series came out before Nvidia's own next-generation GPUs were ready, and the HD 5870 was the first winning ATI flagship since the Radeon 9700 Pro. Nvidia wasn't far behind though, with its brand-new graphics cards slated for early 2010.

It's important to note how differently Nvidia and AMD/ATI approached GPUs at this time. Nvidia was all about making massive dies on older nodes that were cheap and had good yields, while ATI had shifted to smaller dies on more advanced processes with the HD 3000-series, and kept that design strategy up to the HD 5000-series. Nvidia's upcoming GPUs, codenamed Fermi, would be just as big as its prior generation, though this time Nvidia would be equal in process, using TSMC's 40nm just like the HD 5000 lineup.

Launching in March 2010, the flagship GTX 480 was a winner. In our review, it beat the HD 5870 pretty handily. The top-end HD 5970 still stood as the fastest graphics card overall, but it used two graphics chips in CrossFire, which wasn't always consistent. But the GTX 480 cost $499, much higher than the roughly $400 HD 5870. Nvidia's flagships always charged a premium, so this wasn't too different here... except for one thing: power.

Nvidia's flagships were never particularly efficient, but the GTX 480 was ludicrous. Its power consumption was roughly 300 watts, equal to the dual-GPU HD 5970. The HD 5870 in contrast consumed just 200 watts, meaning the 480 had a 50% higher power draw for maybe 10% to 20% more performance. Keep in mind, this was back when the previous record was 236 watts on the GTX 280, and that was still relatively high for a flagship.
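To put those numbers in efficiency terms, here's a quick back-of-the-envelope calculation using only the rounded figures quoted above (300 watts versus 200 watts, and a 10% to 20% performance lead). The function name is ours, and the result is only as precise as those rough inputs.

```python
def relative_perf_per_watt(perf_ratio: float, power_ratio: float) -> float:
    """Performance per watt of card A relative to card B.

    perf_ratio  = A's performance / B's performance
    power_ratio = A's power draw  / B's power draw
    """
    return perf_ratio / power_ratio


# Rounded figures from the text: GTX 480 at ~300W, HD 5870 at ~200W.
power_ratio = 300 / 200  # 1.5, i.e. 50% higher power draw

# Performance lead of "maybe 10% to 20%" for the GTX 480.
low = relative_perf_per_watt(1.10, power_ratio)
high = relative_perf_per_watt(1.20, power_ratio)

print(f"GTX 480 perf per watt vs. HD 5870: {low:.0%} to {high:.0%}")
```

In other words, by these rough numbers the GTX 480 delivered somewhere around three quarters to four fifths of the HD 5870's performance per watt.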

Of course, power turns into heat, and the GTX 480 was also a super hot GPU. Ironically, the reference cooler sort of looked like a grill, and the 480 was derided with memes talking about "the way it's meant to be grilled," satirizing Nvidia's "The Way It's Meant To Be Played" game sponsorships, and meme videos like cooking an egg on a GTX 480. It was not a great time to be an Nvidia marketer.

In the end, the issues plaguing the GTX 400-series proved to be so troublesome that Nvidia replaced it half a year later with a reworked Fermi architecture for the GTX 500-series. The new GTX 580 still consumed about 300 watts, but it was 10% faster than the 480, and the rest of the 500-series had generally better efficiency than the 400-series.

Nvidia really messed up with the 400-series — you don't replace your entire product stack in half a year if you're doing a good job. AMD was extremely close to overtaking Nvidia's share in the overall graphics market, something that hadn't happened since the mid 2000s. Nvidia still made way more money than AMD in spite of Fermi's failures, so at least it wasn't an absolute trainwreck. It also helped pave the way for a renewed focus on efficiency that made the next three generations of Nvidia GPUs far better.

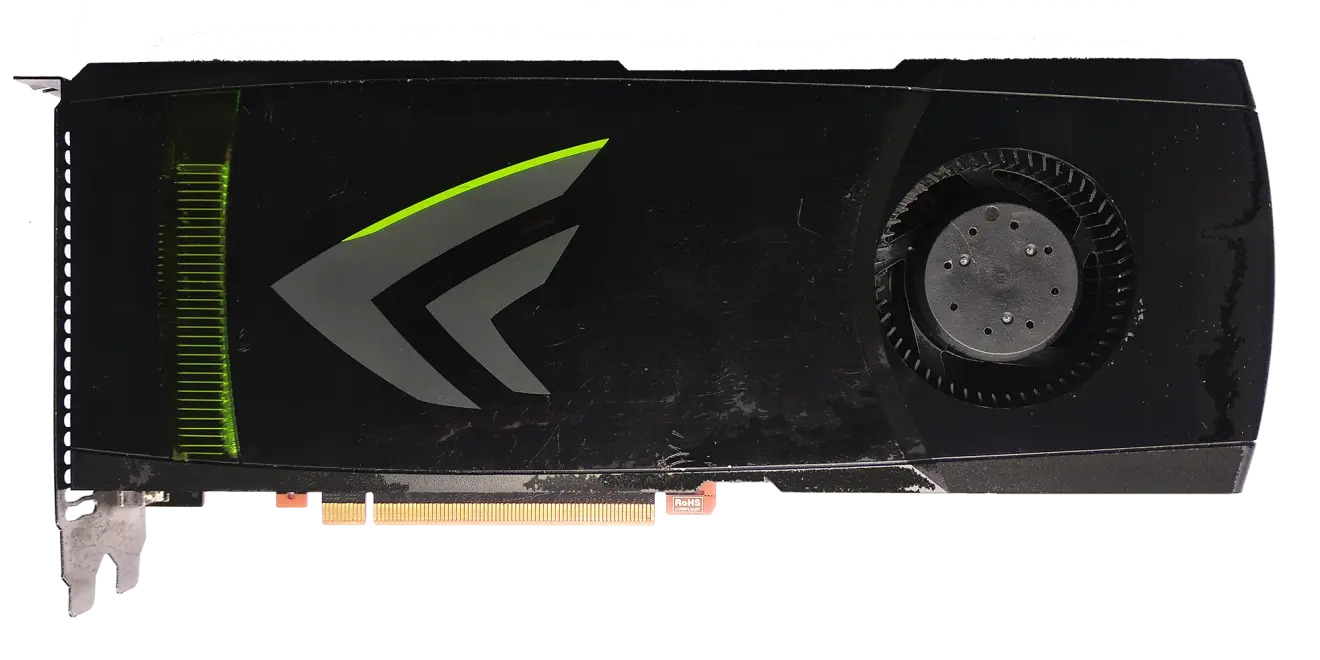

1 — GeForce FX 5800 Ultra: aka, the leaf blower

In the years following the introduction of the GeForce brand with the inaugural GeForce 256 in 1999, Nvidia was on a winning streak. Its last remaining rival was Canadian graphics company ATI Technologies, and although the company still had some fight in it, Nvidia kept winning for several consecutive generations. Then ATI changed the game with the Radeon 9700 Pro in 2002, which decimated the GeForce 4-series thanks to its record-smashing die size.

Nvidia would of course strike back with its own large GPU, which was likely in development before the 9700 Pro. However, if Nvidia had any doubts about making a chip with a big die size, ATI dispelled them. Besides, considering the massive lead ATI had with its Radeon 9000-series, Nvidia didn't really have much of a choice. But with Nvidia moving to the 130nm node while the 9700 Pro was on 150nm, Nvidia definitely had a big technical advantage on its side.

In early 2003, Nvidia fired back with its GeForce FX 5800 Ultra, which was competitively priced at $399, the same price the 9700 Pro launched at. However, the 5800 Ultra was only partially successful in reclaiming the lead, especially at higher resolutions. This was down to the 5800 Ultra's much lower memory bandwidth of 16GB/s compared to the nearly 20GB/s on the 9700 Pro.

There was also an issue where anisotropic filtering just didn't look very good, and that further undermined the performance lead the 5800 Ultra supposedly had. This turned out to be a driver issue (drivers were pretty important for visual quality back then), and although that meant it was fixable, it was nevertheless an issue.

Then there was the power consumption. Although a power draw of 75 watts or so might seem pretty quaint nowadays, back then that was truly massive. By contrast the 9700 Pro in our testing just hit 54 watts, so it was nearly 50% more efficient than Nvidia's much newer flagship. And the noise? "Like a vacuum cleaner," according to our review from two decades ago — others dubbed it the leaf blower.

Nvidia quickly got to work releasing a newer, improved flagship in the form of the GeForce FX 5900 Ultra just half a year later. This new GPU featured a massive 27GB/s worth of bandwidth, fixed drivers, and a much more reasonable power consumption of around 60 watts. Nvidia did bump the price up by $100, but that's fair enough given Nvidia had also improved its performance lead, even though the Radeon 9800 Pro had come out in the meantime.

Note that the GTX 400-series wasn't the first Nvidia GPU to reclaim the performance lead from ATI by a slim margin, while having power consumption issues, and to get replaced half a year later. But what pushes the GeForce FX 5800 Ultra into first (or last?) place is the fact that it was on a much more advanced process and yet still struggled so much against the 9700 Pro. That must have been a very humbling moment for Nvidia's engineers.

Dishonorable Mention: Proprietary technology nonsense

Nvidia isn't just a hardware company, it has software too. And what Nvidia seems to love doing most of all with its hardware plus software ecosystem is making it closed off. The company has introduced tons of features that will only work on Nvidia hardware, and sometimes only on specific types of Nvidia hardware. If that wasn't bad enough, these features also tend to have lifespans comparable to many of Google's products and projects.

Look, we get that sometimes a company wants to move the market forward with new technologies, but these should be the same technologies that the game developers and graphics professionals want, not just arbitrary vendor lock-in solutions — DirectX Raytracing (DXR) is a good example of this approach. Nearly every item in the following list could have been done as an open standard; Nvidia just used its market share to try and avoid going that route, and it often failed to pan out. Here's the short version.

SLI: First introduced by 3dfx, which went bankrupt and was subsequently acquired by Nvidia, SLI was a technology that allowed two (or more) GPUs to render a game. In theory, this meant doubling performance, but it hinged on good driver support, was prone to frame stuttering and visual glitches, and consumed tons of power. It was a staple of Nvidia's mainstream GPUs for years but was effectively discontinued with the RTX 30-series in 2020. RIP SLI and the red herring promise of increased performance from multiple GPUs.

PhysX: PhysX is physics software made for games that eventually ended up in the hands of Ageia. That company created Physics Processing Units (or PPU) cards to accelerate the usage of PhysX, which would have normally run on the CPU. Nvidia acquired Ageia in 2008 and added PhysX support directly into its GeForce GPUs, and there was a big push from Nvidia to convince users to get an extra graphics card just to speed up PhysX.

However, tons of people didn't like GPU-accelerated physics, including legendary game dev John Carmack, who argued physics belonged on the CPU. He was proven correct, as PhysX was eventually opened up for CPUs and then made open source, and it hasn't really been used on GPUs since Batman: Arkham Asylum. PhysX, at least as a proprietary technology, is dead, and good riddance.

3D Vision: Debuting in 2009, 3D Vision sought to capitalize on the 3D vision craze of the 2000s and 2010s. It had two parts: the drivers that enabled stereoscopic vision in DirectX games, and glasses that could actually produce the 3D effect. Although we pondered that 3D Vision might be "the future of gaming," it turns out that people just didn't like 3D, and enthusiasm for 3D died down in the early to mid 2010s. 3D Vision limped along until 2019, when it was axed.

G-Sync: G-Sync, which first came out in 2013, was a piece of hardware installed into gaming monitors that could dynamically alter the refresh rate to match the framerate, which prevented screen-tearing. Although a superior solution to V-Sync, which locked the framerate, G-Sync monitors were super expensive and the feature didn't work with AMD GPUs.

Meanwhile, VESA's Adaptive Sync and AMD's FreeSync (based on Adaptive Sync) did the same thing and didn't really cost anything extra, making G-Sync an objectively worse solution for Nvidia users. In 2019, Nvidia finally allowed its users to use Adaptive Sync and FreeSync monitors, and also introduced G-Sync Compatible branding for monitors based on Adaptive Sync, which work on both Nvidia and AMD cards. There are still proprietary G-Sync monitors, but they're almost exclusively top-end models and seem to represent a dying breed.

VXAO: Basically a high-end version of ambient occlusion that could create more realistic shadows. However, VXAO was very GPU intensive and only ever got into two games, Rise of the Tomb Raider in 2016 and Final Fantasy XV in 2018. The advent of real-time ray tracing has made VXAO redundant, though it was essentially dead on arrival.

DLSS: Deep Learning Super Sampling is one of the premier features of RTX GPUs, and although it's pretty great and works well, it's still proprietary. It doesn't run on AMD GPUs at all, and it doesn't run on any non-RTX cards from Nvidia either. That means even the GTX 16-series, which uses the same Turing architecture as the RTX 20-series, doesn't support DLSS. DLSS 3 Frame Generation is also limited to RTX 40-series cards and later. It would have been nice if Nvidia did what Intel did with XeSS, and offered two versions: one for its RTX GPUs using Tensor cores, and a second mode for everything else.

Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


waltc3 I'll never forget nv30...after a weeks-long Internet "countdown," and more bogus benchmark numbers rumored than you have hair on your head, the "leaf blower" (so named because of the very loud cooler that sounded like a hair dryer) was released, and promptly flopped. Six months after initial shipping to panned reviews everywhere, nVidia pulled the plug and canceled the GPU entirely. 3dfx bankrupted itself on the STB factory in Mexico, which it purchased, apparently without doing due diligence, only to discover that the factory had secured more than $500M in debt that STB did not report to 3dfx. Boom, it was over just that quick. nVidia picked up what was left of 3dfx at a bankruptcy fire sale, and then promptly blamed the failure of nv30 on 3dfx, of course...;)

COLGeek With mostly recent GPUs (3 of 5), this seems to leave out a whole bunch of potential stinkers. Still, great job starting a heated discussion that is sure to follow.

CelicaGT I had a GeForce 256 as my first GPU, back when they were the new hot. Having owned many ATi/AMD and Nvidia GPUs over the years I managed to miss all the stinkers (maaaybe the GTX 970 qualifies with the partitioned memory but it was overall a good card) and get most of the best cards. I just picked up a 4070ti under MSRP on a fire sale, time will tell if this was a mistake. So far, so good.

The Beav

Admin said: Although Nvidia has been the leader in gaming graphics for most of the 21st century, it has still released plenty of terrible GPUs. Here's our take on the worst five Nvidia GPUs of all time.

The five worst Nvidia GPUs of all time: Infamous and envious graphics cards : Read more

DLSS is proprietary because it works specifically with CUDA cores, it can't be done without them. No other cards have anything as advanced.

Also the 3080 was a great card, the only issue was that miners bought too many and there was a pandemic, otherwise it would have been like any other GPU release, and neither of those issues were caused by Nvidia...

stonecarver Around 2005 a dud of a card was the Nvidia 5500. Were the cards in the article being judged on performance of the card or price and availability?

PEnns What a strange article.

So, the 3080 was bad because......................people couldn't buy it???

-Fran- I'll have to pick on the 3080, as it's not a bad GPU per se. I cringe at the 10GB for that price, but they (kind of) made it less horrible with the 12GB version (even less obtainable XD), but they do perform well. I do remember the power issue at launch though, so yeah, not quite perfect. Now, why do I think it's not a good pick for the list? Simply because the 3050 6GB will exist shortly. I know we can't predict the future and all, but... Come on. The other strong contender to that list is any DDR version of a GDDR GPU they've put out. And, if you want a specific model, the 1630. That thing is just trash.

Other than that nitpick, the list is pretty spot on, yes. Another addition to the honorable mention could be the 8000 series that just fell off their PCBs. I think those were G92 dies?

Regards.

atomicWAR

PEnns said: What a strange article.

So, the 3080 was bad because......................people couldn't buy it???

Yeah that one rubbed me a little wrong too. I think I would have put either a 4070 or Ti version in its place as they are starved for ram considering the resolutions they aim for. Heck even the 4080 with its high price and its huge gap trailing the 4090 in performance would have worked for 5th.

But for sure I agree with the 5800 FX ultra being supreme trash earning the top spot (I got a 9700 Pro that gen after four gens of Nvidia on my primary rig). But hey they remembered to bring up the Geforce 256 this time even if in passing...It should have made the list of top 5 best Nvidia GPUs if you asked me.

thestryker A pretty deserving list outside of the 3080. The 400 series was a really weird stop as the 460 was a really good card for its segment.

I don't think the 3080 should be in this list because the only real knock against it is the 10GB VRAM. The market was godawful, but none of that can really be blamed on nvidia so much as miners and then consumers for keeping the costs up when availability got better. I certainly bought a 6800 XT for a second machine this year over anything nvidia because the $/perf was so much better.

I think the instances of releasing the "same" card with meaningfully different specs would be far more deserving. The 30 series example of that would be the 3060 8GB (and likely forthcoming 3050 6GB), because not only did it have less VRAM but the bus width drop caused the performance to plummet. There's also the multiple types of VRAM on the 1030, and the 1060 3GB which had fewer cores on top of less VRAM.