Tech Industry

Latest about Tech Industry

-

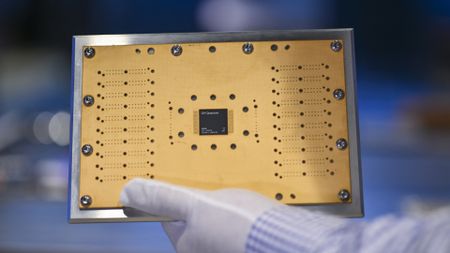

Rapidus explores panel-level packaging on glass substrates for next-generation processors

By Anton Shilov Published

-

Dual-PCB Linux computer with 843 components designed by AI boots on first attempt

By Mark Tyson Last updated

-

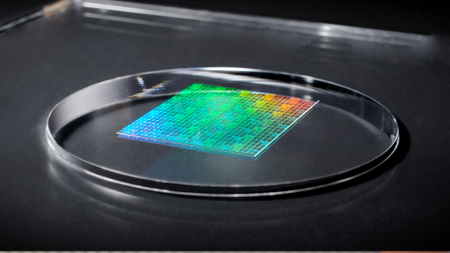

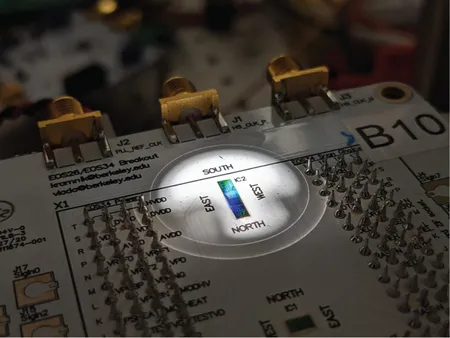

Intel details progress on fabbing 2D transistors a few atoms thick in standard high volume fab production environment

By Anton Shilov Published

-

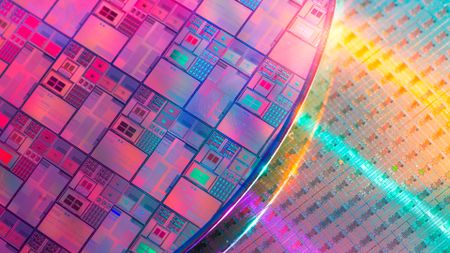

Intel installs industry's first commercial High-NA EUV lithography tool

By Anton Shilov Published

-

Bernie Sanders calls for halt on AI data center construction

By Jowi Morales Published

-

Nearly 7,000 of the world’s 8,808 data centers are built in the wrong climate, analysis finds

By Luke James Published

-

Economic analysis of orbital data centers brings the idea down to Earth

By Bruno Ferreira Published

-

Explore Tech Industry

Artificial Intelligence

-

Dual-PCB Linux computer with 843 components designed by AI boots on first attempt

By Mark Tyson Last updated

-

Bernie Sanders calls for halt on AI data center construction

By Jowi Morales Published

-

Elizabeth Warren, other U.S. senators concerned about big tech pushing up electricity costs

By Jowi Morales Published

-

Oracle reportedly delays several new OpenAI data centers because of shortages

By Anton Shilov Published

-

Premium

Microsoft, Google, OpenAI, and Anthropic join forces to form Agentic AI alliance, according to report

By Jon Martindale Published

-

Basement AI lab captures 10,000 hours of brain scans to train thought-to-text models

By Luke James Published

-

China starts list of government-approved AI hardware suppliers: Cambricon and Huawei are in, Nvidia is not

By Anton Shilov Published

-

Nvidia decries 'far-fetched' reports of smuggling in face of DeepSeek training reports

By Sunny Grimm Published

-

Research commissioned by OpenAI and Anthropic claims that workers are more efficient when using AI

By Sunny Grimm Published

-

Big Tech

-

Economic analysis of orbital data centers brings the idea down to Earth

By Bruno Ferreira Published

-

Cloudflare says it has fended off 416 billion AI bot scrape requests in five months

By Jowi Morales Published

-

Nvidia says it’s ‘delighted’ with Google’s success, but backhanded compliment says it is ‘the only platform that runs every AI model’

By Jowi Morales Published

-

Trump administration touts Genesis Mission to try and win the AI race—White House compares scope of its initiative to the Manhattan Project

By Bruno Ferreira Published

-

Elon Musk claims he will 'build chips at higher volumes ultimately than all other AI chips combined'

By Bruno Ferreira Published

-

ASML allegedly offered to spy on China for the US

By Jowi Morales Published

-

Yesterday's global internet outage caused by single file on Cloudflare servers

By Jowi Morales Published

-

Apple and Broadcom job listings suggest potential Intel Foundry collaborations

By Aaron Klotz Published

-

Microsoft to appeal ruling in favor of reselling perpetual Windows licenses

By Hassam Nasir Published

-

Cryptocurrency

-

Bitcoin creator Satoshi disappeared on this day 15 years ago, leaving a final public message

By Mark Tyson Published

-

South Korean crypto exchange Upbit reports $30 million theft

By Jowi Morales Published

-

Hobbyist solo miner scores a full Bitcoin block worth $270,000 despite 1 in 180 million odds

By Luke James Published

-

Homeland Security thinks Chinese firm's Bitcoin mining chips could be used for espionage or to sabotage the power grid

By Jowi Morales Published

-

Bitcoin price plunges, wipes $1 trillion from value weeks after it hit all-time high

By Jowi Morales Published

-

Major Bitcoin mining firm pivoting to AI, plans to fully abandon crypto mining by 2027 as miners convert to AI en masse

By Jowi Morales Published

-

'Bitcoin Queen' who laundered $5.6 billion in illicit funds through crypto gets nearly 12 years in prison

By Jowi Morales Published

-

China accuses Washington of stealing $13 billion worth of Bitcoin in alleged hack

By Jowi Morales Published

-

Crypto fraud and laundering ring that stole $689 million busted by European authorities

By Bruno Ferreira Published

-

Cybersecurity

-

Germany summons Russian ambassador over GRU-linked cyberattacks

By Luke James Published

-

February report from researcher found Chinese KVM had undocumented microphone and communicated with China-based servers, but many of the security issues are now addressed [Updated]

By Luke James Published

-

Critical flaws found in AI development tools dubbed an 'IDEsaster'

By Luke James Published

-

Five convicted for helping North Korean IT workers pose as Americans and secure jobs at U.S. firms

By Jowi Morales Published

-

Anthropic says it has foiled the first-ever AI-orchestrated cyber attack, originating from China

By Jowi Morales Published

-

Google sues China-based hackers it says stole $1 billion

By Jowi Morales Last updated

-

Laid-off Intel employee allegedly steals 'Top Secret' files, vanishes

By Jowi Morales Published

-

Louvre heist reveals museum used ‘LOUVRE’ as password for its video surveillance, still has workstations with Windows 2000

By Jowi Morales Published

-

37 years ago this week, the Morris worm infected 10% of the Internet within 24 hours

By Mark Tyson Last updated

-

Manufacturing

-

Rapidus explores panel-level packaging on glass substrates for next-generation processors

By Anton Shilov Published

-

Intel details progress on fabbing 2D transistors a few atoms thick in standard high volume fab production environment

By Anton Shilov Published

-

Intel installs industry's first commercial High-NA EUV lithography tool

By Anton Shilov Published

-

Premium

New 1.4nm nanoimprint lithography template could reduce the need for EUV steps in advanced process nodes

By Luke James Published

-

Qualcomm’s Ventana acquisition points to a long-term RISC-V strategy to complement its Arm lineup

By Luke James Published

-

First truly 3D chip fabbed at US foundry, features carbon nanotube transistors and RAM on a single die

By Luke James Published

-

Nvidia weighs expanding H200 production as new China orders rush in, report claims

By Luke James Published

-

Huawei's latest mobile is armed with China's most advanced process node to date despite using blacklisted chipmaker

By Anton Shilov Published

-

Photonic latch memory could enable optical processor caches that run up to 60 GHz, twenty times faster than standard caches

By Luke James Published

-

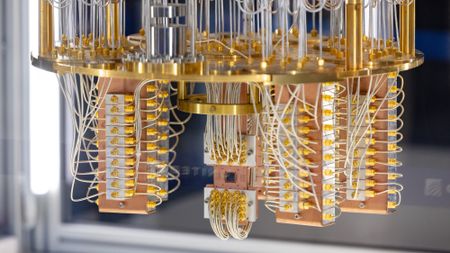

Quantum Computing

-

Premium

IBM and Cisco agree to lay the foundations for a quantum internet

By Luke James Published

-

New Chinese optical quantum chip allegedly 1,000x faster than Nvidia GPUs for processing AI workloads

By Aaron Klotz Published

-

IBM's boffins run a nifty quantum error-correction algorithm on conventional AMD FPGAs

By Bruno Ferreira Published

-

Trump administration to follow up Intel stake with investment in quantum computing, report claims

By Anton Shilov Published

-

Google's Quantum Echo algorithm shows world's first practical application of Quantum Computing — Willow 105-qubit chip runs algorithm 13,000x faster than a supercomputer

By Bruno Ferreira Published

-

Harvard researchers hail quantum computing breakthrough with a machine that can run for two hours

By Jowi Morales Published

-

Quantum internet is possible using standard Internet protocol

By Sunny Grimm Published

-

Quantum machine learning unlocks new efficient chip design pipeline

By Jon Martindale Published

-

The world's first hybrid chip combining photonics and electronics with quantum computing is here, and it's built like a normal silicon SoC

By Hassam Nasir Published

-

Supercomputers

-

AMD and Eviden unveil Europe's second exascale system

By Anton Shilov Published

-

Nvidia to build seven AI supercomputers for the U.S. gov't with over 100,000 Blackwell GPUs

By Anton Shilov Published

-

Nvidia unveils Vera Rubin supercomputers for Los Alamos National Laboratory

By Anton Shilov Published

-

U.S. Department of Energy and AMD cut a $1 billion deal for two AI supercomputers

By Bruno Ferreira Published

-

China's supercomputer breakthrough uses 37 million processor cores to model complex quantum chemistry at molecular scale

By Anton Shilov Last updated

-

Start-up hails world's first quantum computer made from standard silicon

By Luke James Published

-

Nvidia GPUs and Fujitsu Arm CPUs will power Japan's next $750M zetta-scale supercomputer

By Hassam Nasir Published

-

AMD's massive GPU VRAM on its Instinct cards has broken Linux's hibernation feature

By Hassam Nasir Published

-

AMD supercomputers take gold and silver in latest Top500 as Chinese HPC remains shrouded in secrecy

By Anton Shilov Published

-

Superconductors

-

New 3D printing process could improve superconductors

By Ash Hill Published

-

New research shows naturally occurring mineral is an 'unconventional superconductor' when purified

By Christopher Harper Published

-

New research reignites the possibility of LK-99 room-temperature superconductivity

By Francisco Pires Published

-

U.S. Govt and researchers seemingly discover new type of superconductivity in an exotic, crystal-like material

By Francisco Pires Published

-

Nature Retracts Controversial Room Temperature Superconductor Paper (But Not LK-99)

By Francisco Pires Published

-

What is a Superconductor?

By Francisco Pires Published

-

MIT's Superconducting Qubit Breakthrough Boosts Quantum Performance

By Francisco Pires Published

-

LK-99 Research Continues, Paper Says Superconductivity Could be Possible

By Francisco Pires Published

-

Is LK-99 a Superconductor After All? New Research and Updated Patent Say So

By Francisco Pires Published

-

More about Tech Industry

-

Economic analysis of orbital data centers brings the idea down to Earth

By Bruno Ferreira Published

-

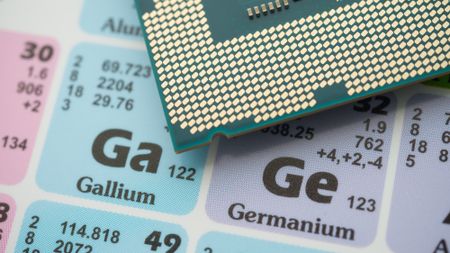

Trump secures deal with Korea Zinc to build rare earths processing facility in Tennessee

By Jowi Morales Published

-

Premium

New 1.4nm nanoimprint lithography template could reduce the need for EUV steps in advanced process nodes

By Luke James Published

-