Gaming Shoot-Out: 18 CPUs And APUs Under $200, Benchmarked

Now that Piledriver-based CPUs and APUs are widely available (and the FX-8350 is selling for less than $200), it's a great time to compare value-oriented chips in our favorite titles. We're also breaking out a test that conveys the latency between frames.

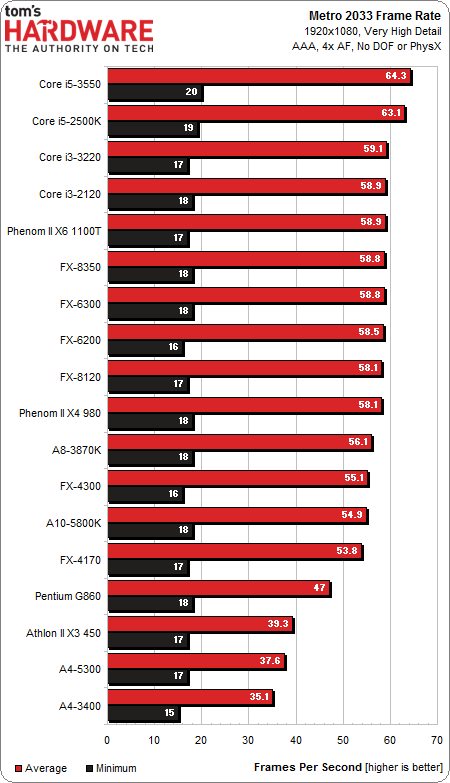

Results: Metro 2033

Metro 2033 is an older title, but it still presents modern hardware with a taxing workload. It's notorious for being GPU-limited, so we're not expecting CPU performance to make a massive difference in this game's outcome (at least, based on the data we've generated up until now).

Most of our CPUs maintain an average of at least 50 FPS. The dual-core A4s and the Athlon II X3 fall under 40 FPS, while the Pentium G860 sits in between with a 47 FPS average.

The minimums are similar across the board, suggesting that something other than the varying CPUs is dictating the performance floor.

Compare these results to what we saw in Picking A Sub-$200 Gaming CPU: FX, An APU, Or A Pentium? a year ago. You'll notice that the average frame rates are up on almost all of the CPUs except for Intel's Pentium G860 and AMD's Athlon II X3. At the time, the Radeon HD 7970 we used was brand new, and its drivers were decidedly immature, which may help explain why a similar-performing GeForce GTX 680 fares better today.

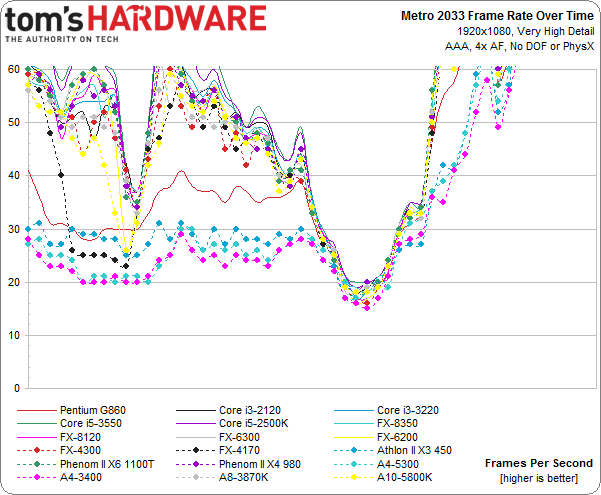

It's quite clear that the processors we're testing are the bottleneck through most of the first half of this benchmark, while the graphics load picks up about two-thirds of the way in, pulling every test bed below 20 FPS.

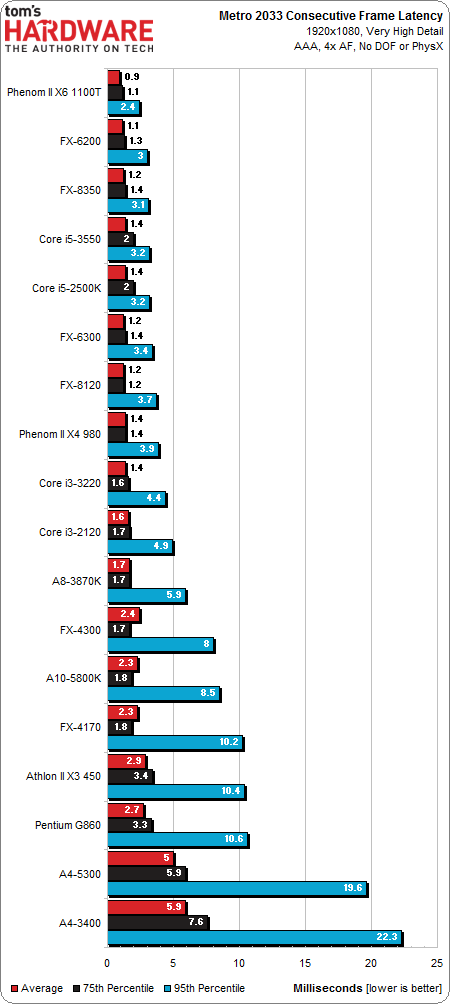

In general, the results are very good news for folks with inexpensive processors, since we're seeing low latencies between consecutive frames. The dual-core A4s are the worst performers by far, with a 95th percentile lag time of about 20 milliseconds.
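
To make the 95th percentile figure concrete, here is a minimal Python sketch of one way to compute it. It assumes "lag" means the absolute difference between consecutive frame times and uses a nearest-rank percentile. Both choices are our assumptions for illustration (the article's own method is explained on its page 2), and the frame-time list is made-up data, not results from these tests.

```python
import math


def lag_times_ms(frame_times_ms):
    """Absolute change in render time between each pair of consecutive frames.

    Assumption: this is what "lag between consecutive frames" means here.
    """
    return [abs(cur - prev) for prev, cur in zip(frame_times_ms, frame_times_ms[1:])]


def percentile_nearest_rank(values, pct):
    """Smallest sample such that at least pct percent of samples are <= it."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Made-up frame times (ms): steady 30 ms frames with a 50 ms hitch every 20th frame
frames = ([30] * 19 + [50]) * 5
lags = lag_times_ms(frames)
print(len(lags), percentile_nearest_rank(lags, 95))  # 99 lags; 95th percentile is 20 ms
```

Each hitch produces two large deltas (into the slow frame and back out), so about 9% of the samples here are 20 ms, enough to set the 95th percentile. A single isolated hitch in 100 frames would not register at that percentile at all, which is one reason the percentile is only a rough stand-in for how often a stutter occurs.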

How about a bit of perspective? On average, the A4 APUs run at about 35 FPS, or roughly 28.6 milliseconds per frame. A 20-millisecond lag stretches a single frame to about 48.6 milliseconds, the equivalent of around 20 FPS. If that happened once every 100 frames at 35 FPS, it would happen about once every three seconds. That's an oversimplification because the percentile is drawn from the entire course of the benchmark, but it's a useful way of imagining what's going on.
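
The same arithmetic, written out. This treats the 20-millisecond lag as simply added to one average-length frame, which is the simplification the paragraph describes:

$$
\begin{aligned}
t_{\text{frame}} &= \frac{1000\ \text{ms}}{35} \approx 28.6\ \text{ms} \\
\text{FPS}_{\text{equiv}} &= \frac{1000\ \text{ms}}{28.6\ \text{ms} + 20\ \text{ms}} \approx 20.6 \\
t_{\text{between hitches}} &= \frac{100\ \text{frames}}{35\ \text{frames/s}} \approx 2.9\ \text{s}
\end{aligned}
$$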


On the other hand, the Pentium G860 and Athlon II X3 450 experience a roughly 10-millisecond lag at the 95th percentile, which represents a single frame dropping from 40 FPS to the equivalent of about 30 FPS. That's a much smaller hitch. Of the remaining CPUs, only the FX-4300, A10-5800K, and FX-4170 have a 95th percentile lag of more than eight milliseconds. The rest of the field stays under six, which we consider negligible.
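
A small Python helper reproduces these "equivalent FPS" figures. It assumes, as the text does, that the 95th percentile lag is added to a single frame of average length; `equivalent_fps` is a hypothetical name used only for illustration.

```python
def equivalent_fps(avg_fps: float, lag_ms: float) -> float:
    """Instantaneous FPS of one frame that takes lag_ms longer than average.

    Simplifying assumption: the lag is added to an average-length frame.
    """
    avg_frame_ms = 1000.0 / avg_fps  # e.g. 40 FPS -> 25 ms per frame
    return 1000.0 / (avg_frame_ms + lag_ms)


cases = [
    ("dual-core A4", 35, 20),
    ("Pentium G860 / Athlon II X3 450", 40, 10),
]
for name, fps, lag in cases:
    print(f"{name}: {fps} FPS + {lag} ms lag -> {equivalent_fps(fps, lag):.1f} FPS")
```

For the 40 FPS case, the helper gives about 28.6 FPS (25 ms + 10 ms = 35 ms per frame), which the text rounds to 30 FPS.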

Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

-

esrever: Wow. Frame latencies are completely different from the results on The Tech Report. Weird.

ingtar33: So... the AMD chips test as well as the Intel chips (sometimes better) in your latency test, yet your conclusion is yet again based on average FPS?

What is the point of running the latency tests if you're not going to use them in your conclusion?

shikamaru31789: I was hanging around on the site hoping this would finally get posted today. Looks like I got lucky. I'm definitely happy that newer titles are using more threads, which finally puts AMD back in the running, in the budget range at least. Even APUs look like a better buy now; I can't wait to see some Richland and Kaveri APU tests. If one of them has a built-in 7750, you could have a nice budget system, especially if you paired it with a discrete GPU for CrossFire.

hero1 (quoting ingtar33): "So... the AMD chips test as well as the Intel chips (sometimes better) in your latency test, yet your conclusion is yet again based on average FPS? What is the point of running the latency tests if you're not going to use them in your conclusion?"

Nice observation. I was wondering the same thing. It's time you provided a conclusion based on what you intended to test, not on something else. You could state the FPS part after the fact.

Anik8: I like this review. It's been a while, and at last we get to see some nicely rounded-up benchmarks from Tom's. I wish the GPU or game-specific benchmarks were conducted in a similar fashion instead of focusing so heavily on bandwidth, AA, or settings that favor only one company.

cleeve (quoting ingtar33): "So... the AMD chips test as well as the Intel chips (sometimes better) in your latency test, yet your conclusion is yet again based on average FPS? What is the point of running the latency tests if you're not going to use them in your conclusion?"

We absolutely did take latency into account in our conclusion.

I think the problem is that you totally misunderstand the point of measuring latency, and the impact of the results. Please read page 2, and the commentary next to the charts.

To summarize, latency is only relevant if it's significant enough to notice. If it's not significant (and really, it wasn't in any of the tests we took except maybe in some dual-core examples), then, obviously, the frame rate is the relevant measurement.

*IF* the latency *WAS* horrible, say, with a high-FPS CPU, then latency would be taken into account in the recommendations. But the latencies were very small, so they don't factor in much. Any CPU that could handle at least four threads did great; its latencies are imperceptible, so they don't matter.


cleeve (quoting esrever): "Wow. Frame latencies are completely different from the results on The Tech Report. Weird."

Not really. We just report them a little differently in an attempt to distill the result. Read page 2.

cleeve (quoting Anik8): "I wish the GPU or game-specific benchmarks were conducted in a similar fashion instead of focusing so heavily on bandwidth, AA, or settings that favor only one company."

I'm not sure what you're referring to. When we test games, we use a number of different settings and resolutions.


znakist: Well, it's good to see AMD back in the game. I'm an Intel fan, but with the recent update to the FX lineup I have more options. Good work, AMD.