xAI

Latest about xAI

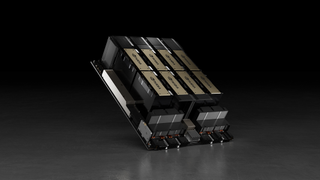

Faulty Nvidia H100 GPUs and HBM3 memory caused half of failures during Llama 3 training: one failure every three hours for Meta's 16,384-GPU training cluster

By Anton Shilov

In a 16,384 H100 GPU cluster, something breaks down every few hours or so. In most cases, H100 GPUs are to blame, according to Meta.

Elon Musk powers new 'World's Fastest AI Data Center' with gargantuan portable power generators to sidestep electricity supply constraints

By Jowi Morales

Elon Musk deployed 14 mobile generators at the xAI Memphis Supercluster to generate 35 MWe to power 32,000 H100 GPUs.

Elon Musk reveals photos of Dojo D1 Supercomputer cluster — roughly equivalent to 8,000 Nvidia H100 GPUs for AI training

By Jowi Morales

Elon Musk says that he'll have 90,000 Nvidia H100s, 40,000 AI4 chips, and the equivalent of 8,000 H100 GPUs in Dojo D1 processors by the end of 2024.

Elon Musk fires up 'the most powerful AI cluster in the world' to create the 'world's most powerful AI' by December

By Mark Tyson

Memphis Supercluster training started at ~4:20am local time.

Elon Musk's liquid-cooled data centers get big plug from Supermicro CEO

By Sunny Grimm

The massive data centers use liquid cooling for top performance.

Elon Musk wants to purchase 300,000 Blackwell B200 Nvidia AI GPUs — Hardware upgrades to improve X's Grok AI bot

By Aaron Klotz

Nvidia's B200 GPUs will be used to boost the X platform's AI capabilities.

Elon Musk's xAI plans to build 'Gigafactory of Compute' with 100,000 H100 GPUs

By Anton Shilov

xAI plans to build another massive supercomputer by late 2025, surprisingly based on Nvidia's H100 GPU.

Elon Musk says the next-generation Grok 3 model will require 100,000 Nvidia H100 GPUs to train

By Anton Shilov

GPU shortages and power are the two main obstacles for AI development.
