The Server Primer, Part 2

Blade Servers

Blade servers are a sub-category of rackmount servers. They are even smaller and thinner systems that are typically installed into so-called blade enclosures, which are either mounted in 19" racks or are individual racks themselves. Blades are designed for high-density computing with reduced space requirements, and to lower the maintenance effort for the whole solution through simplified cabling, modularity and easy deployment. While rackmount servers have to be connected to power, display, network and other cables, blade servers slide into hot-plug connectors. Also, blade servers normally do not carry any fans; they’re ventilated by the cooling solution of their host enclosure. A full-size 42U 19" rack can accommodate up to 84 blades, which translates into 336 processing cores with single-socket, quad-core processors. With dual-socket blades or eight-core processors, that computing power can easily be doubled in the future.
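The core-count arithmetic above can be written out as a small sketch. The function name `cores_per_rack` and its parameters are illustrative only; the figures (84 blades, single or dual socket, four or eight cores) are the ones quoted in the text.

```python
def cores_per_rack(blades_per_rack, sockets_per_blade, cores_per_socket):
    """Total processing cores in one rack (hypothetical helper for illustration)."""
    return blades_per_rack * sockets_per_blade * cores_per_socket


# Figures from the text: 84 blades in a 42U rack.
baseline = cores_per_rack(84, sockets_per_blade=1, cores_per_socket=4)
dual_socket = cores_per_rack(84, sockets_per_blade=2, cores_per_socket=4)
eight_core = cores_per_rack(84, sockets_per_blade=1, cores_per_socket=8)

print(baseline, dual_socket, eight_core)  # 336 672 672
```

Either upgrade path (a second socket or twice the cores per socket) doubles the baseline of 336 cores.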

Blade servers can even operate without hard drives, as the concept provides options to boot operating systems via a Storage Area Network (SAN), in which a network connection is used to transport a storage protocol, often SCSI, for example as iSCSI. In such a scenario, the hard drives are located within other systems, possibly even at a different location.

Virtualization

Virtualization is a buzzword that affects not only hardware, but also software applications. It is best characterized as a way to make better use of existing system resources. Many of you are probably familiar with the term through VMware, a software virtualization solution. It was designed to run a guest operating system under a host system using its Virtual Machine Manager (VMM). The VMM is responsible for allocating existing resources efficiently, and it does so by offering virtual hardware that doesn’t physically exist. As a result, a fully-featured Linux system can be run on a Windows server.

AMD and Intel support hardware virtualization at the processor level: the latest CPUs are capable of providing these virtual resources to the system themselves, which allows the VMM to run multiple operating system instances on a single CPU. Hardware virtualization has become so interesting because of the increasing CPU core count. With two or four processing cores per processor, there is plenty of computing power to run two or more operating system instances on a single computer.
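As a rough illustration of what "support at the processor level" looks like from software: on Linux, the CPU feature flags listed in /proc/cpuinfo include `vmx` for Intel's virtualization extensions and `svm` for AMD's. The sketch below only parses such text; the function name and the sample input are made up for this example, and the approach assumes a Linux host.

```python
def hw_virtualization_flags(cpuinfo_text):
    """Return the hardware virtualization flags found in /proc/cpuinfo-style text.

    'vmx' indicates Intel virtualization extensions, 'svm' indicates AMD's.
    """
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found |= set(value.split()) & {"vmx", "svm"}
    return found


# Made-up sample input; on a real Linux system you would read /proc/cpuinfo instead.
sample = "processor\t: 0\nflags\t\t: fpu vme de pse tsc msr vmx sse sse2\n"
print(hw_virtualization_flags(sample))
```

An empty result means that neither flag is advertised, in which case a VMM has to fall back on software-only techniques.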

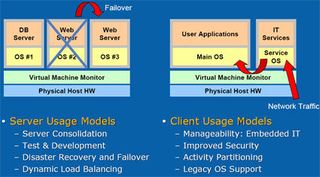

There are different usage models for hardware-supported virtualization, on client computers as well as on servers. In both cases, you can run multiple operating systems on a single system, which opens up new application models. For client computers, Intel considers a service operating system to be an important addition to your existing main operating system. The service OS could take care of all network traffic, providing increased security and IT services to manage systems from remote locations. In the server world, it makes a lot of sense to split the individual applications across separate operating system partitions.

In the example below you will see a system that runs one database server partition and two additional partitions with web servers, which work redundantly. In this example, the database server runs decoupled from the web servers, which provides a level of security that otherwise only two physical machines could provide. The redundant web servers allow for better uptime, because if one of them becomes unavailable, the second one will still be there to take over the load. Alternatively, think of one of the web servers as a test environment that can be used to try software changes before they eventually go live.
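The failover idea behind the two redundant web server partitions can be sketched in a few lines. This is a minimal illustration, not how any particular VMM or load balancer implements it; the partition names and the `is_up` health check are hypothetical.

```python
def pick_web_server(partitions, is_up):
    """Return the first partition that passes the health check, or None."""
    for name in partitions:
        if is_up(name):
            return name
    return None


# Hypothetical state: the first web server partition has become unavailable.
status = {"web-1": False, "web-2": True}
active = pick_web_server(["web-1", "web-2"], lambda name: status[name])
print(active)  # web-2
```

As long as at least one web server partition is healthy, requests still have somewhere to go, which is the uptime benefit described above.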

Load balancing is another aspect that looks interesting. However, this may hit the limits of the remaining hardware: although I/O components are provided virtually as well, there is no hardware support for this type of application yet. Both AMD and Intel are working on this issue and will release products with extended virtualization support for I/O devices, meaning that DMA remapping and I/O assignment can soon be supported in hardware.

Source: Intel / IDF presentation.
