AMD Big Navi and RDNA 2 GPUs: Everything We Know

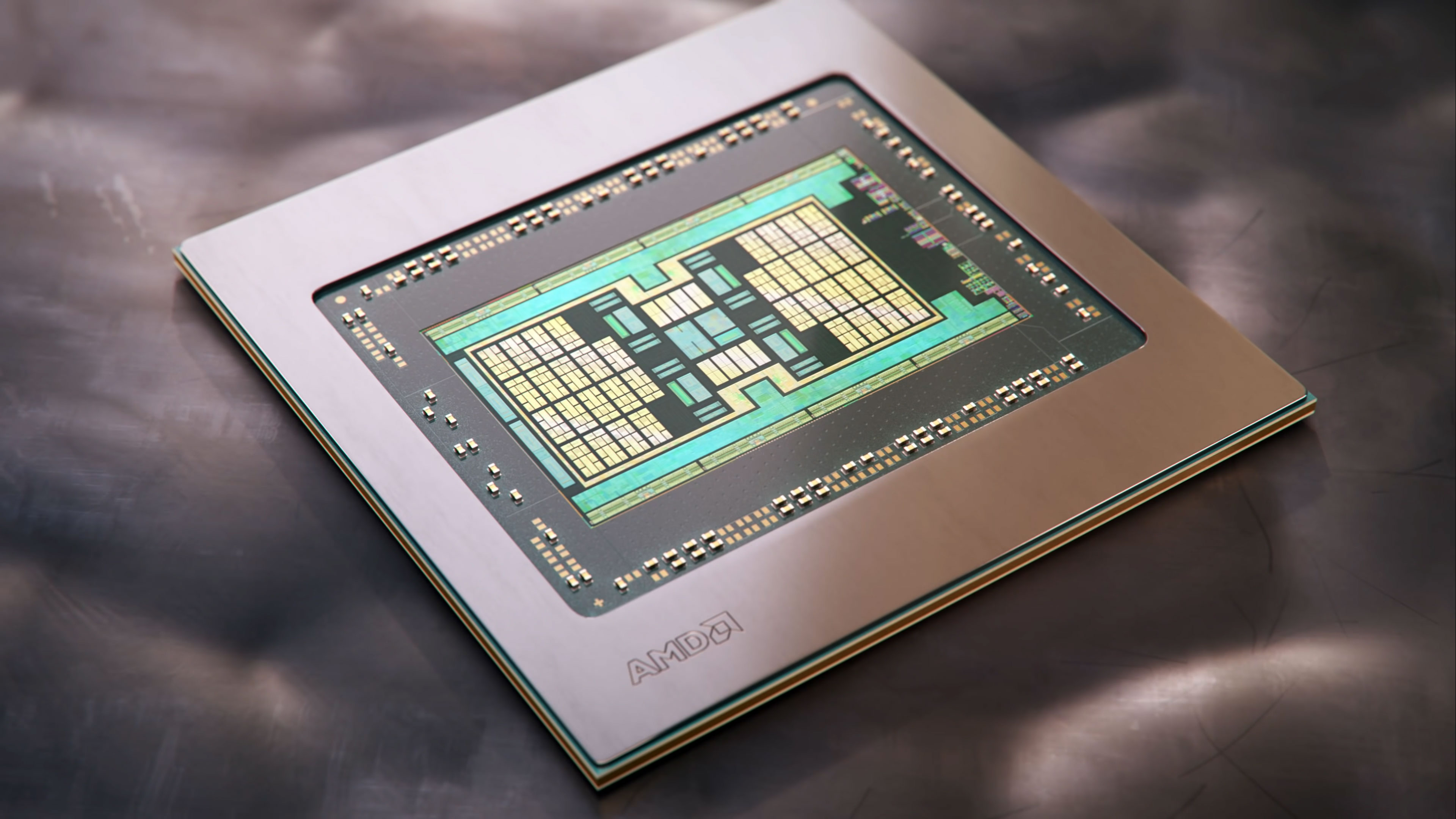

The AMD Big Navi / RDNA 2 architecture powers the latest consoles and high-end graphics cards.

AMD Big Navi, RX 6000, Navi 2x, RDNA 2. Whatever the name, AMD's latest GPUs promise big performance and efficiency gains, along with feature parity with Nvidia in terms of ray tracing support. Team Red finally puts up some serious competition in our GPU benchmarks hierarchy and provides several of the best graphics cards, going head to head with the Nvidia Ampere architecture.

AMD officially unveiled Big Navi on October 28, 2020, including specs for the RX 6900 XT, RX 6800 XT, and RX 6800. The Radeon RX 6800 XT and RX 6800 launched first, followed by the Radeon RX 6900 XT. In March 2021, AMD released the Radeon RX 6700 XT, then the Radeon RX 6600 XT came out in August 2021. A few other variations on the Navi 22 and Navi 23 GPUs arrived over time, and finally AMD closed out the RDNA 2 series with Navi 24 and the RX 6500 XT and RX 6400. We've updated this article with revised details over time, but outside of integrated solutions we don't expect any future RDNA2 products to be revealed.

Big Navi has finally put AMD's high graphics card power consumption behind it. Or at least, Big Navi is no worse than Nvidia's RTX 30-series cards, considering the 3080 and 3090 have the highest Nvidia TDPs for single GPUs ever. Let's start at the top, with the new RDNA2 architecture that powers RX 6000 / Big Navi / Navi 2x. Here's everything we know about AMD Big Navi, including the RDNA 2 architecture, specifications, performance, pricing, and availability.

Big Navi / RDNA2 at a Glance

- Up to 80 CUs / 5120 shaders

- 50% better performance per watt

- Launched November 18, 2020 (RX 6800 series) and December 8, 2020 (RX 6900 XT)

- Pricing of $159 to $1,099 for RX 6400 to RX 6950 XT

- Full DirectX 12 Ultimate support

The RDNA2 Architecture in Big Navi

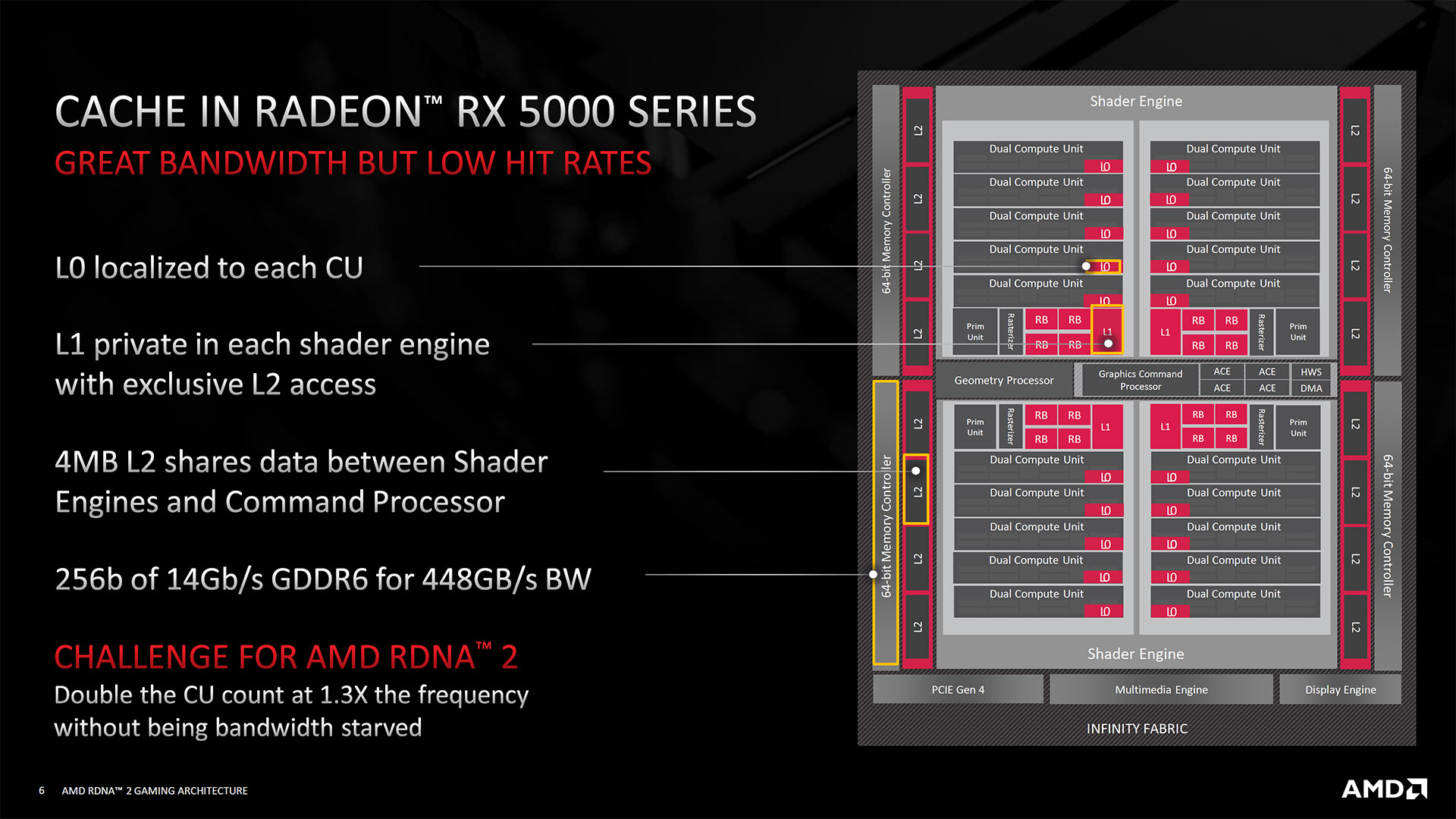

Every generation of GPUs is built from a core architecture, and each architecture offers improvements over the previous generation. It's an iterative and additive process that never really ends. AMD's GCN architecture went from first generation for its HD 7000 cards in 2012 up through fifth gen in the Vega and Radeon VII cards in 2017-2019. The RDNA architecture that powers the RX 5000 series of AMD GPUs arrived in mid 2019, bringing major improvements to efficiency and overall performance. RDNA2 doubled down on those improvements in late 2020.

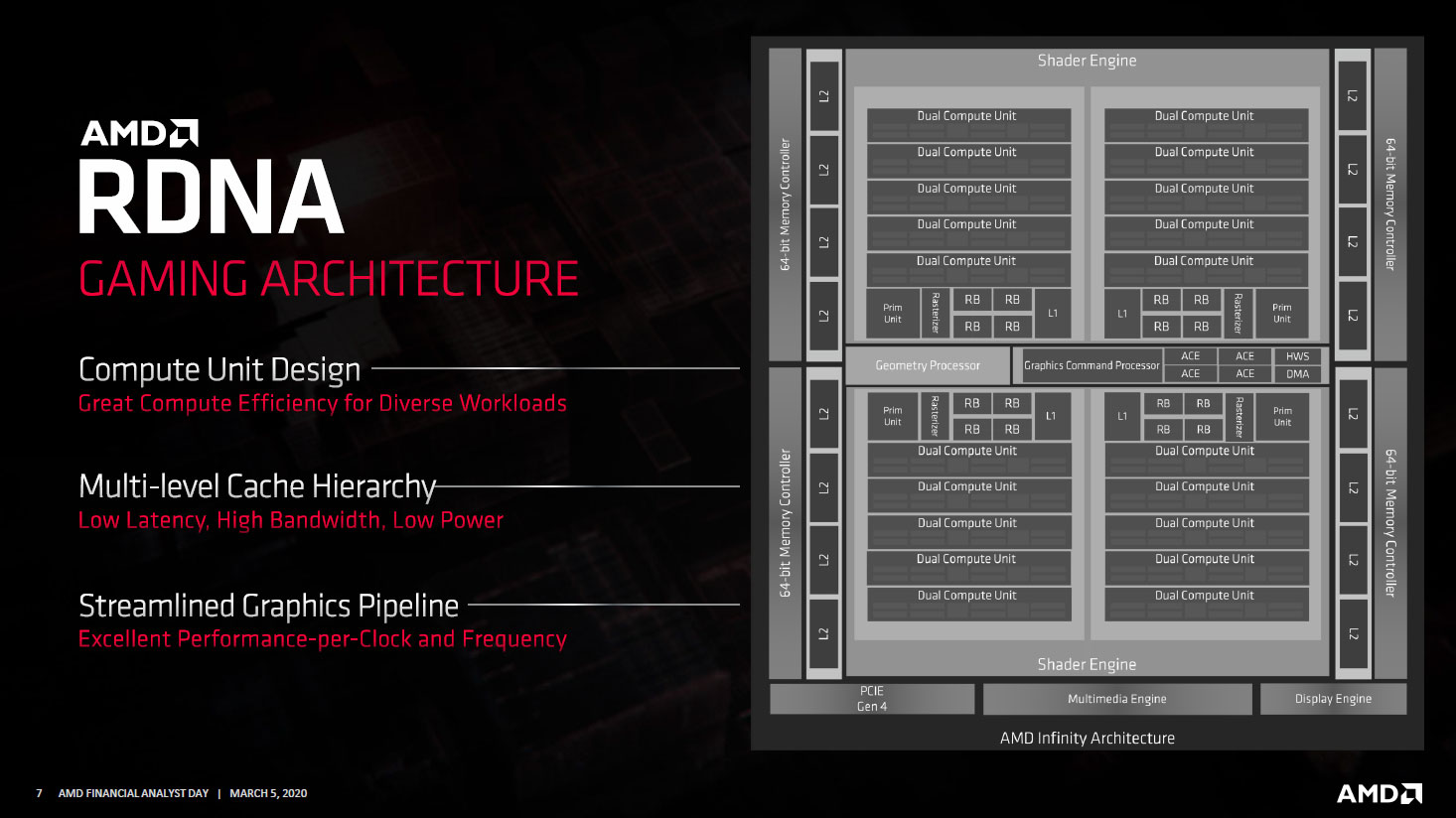

First, a quick recap of RDNA 1 is in order. The biggest changes with RDNA 1 over GCN involve a redistribution of resources and a change in how instructions are handled. In some ways, RDNA doesn't appear to be all that different from GCN. The instruction set is the same, but how those instructions are dispatched and executed has been improved. RDNA also added working support for primitive shaders, something present in the Vega GCN architecture that never got turned on due to complications.

Perhaps the most noteworthy update is that the wavefronts—the core unit of work that gets executed—have been changed from being 64 threads wide with four SIMD16 execution units, to being 32 threads wide with a single SIMD32 execution unit. SIMD stands for Single Instruction, Multiple Data; it's a vector processing element that optimizes workloads where the same instruction needs to be run on large chunks of data, which is common in graphics workloads.

This matching of the wavefront size to the SIMD size helps improve efficiency. GCN issued one instruction per wave every four cycles; RDNA issues an instruction every cycle. GCN used a wavefront of 64 threads (work items); RDNA supports 32- and 64-thread wavefronts. GCN has a Compute Unit (CU) with 64 GPU cores, 4 TMUs (Texture Mapping Units) and memory access logic. RDNA implements a new Workgroup Processor (WGP) that consists of two CUs, with each CU still providing the same 64 GPU cores and 4 TMUs plus memory access logic.
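To make the issue-rate difference concrete, here is a minimal sketch of the arithmetic. It assumes a deliberately simplified model in which one SIMD needs wavefront width divided by SIMD width cycles to run a single instruction across the whole wavefront; the function name is our own, and real hardware scheduling is more involved than this.

```python
import math


def cycles_per_instruction(wave_width: int, simd_width: int) -> int:
    """Cycles for one SIMD to run one instruction across a whole wavefront."""
    return math.ceil(wave_width / simd_width)


# GCN: 64-wide wavefront executed on a 16-lane SIMD.
gcn_wave64 = cycles_per_instruction(wave_width=64, simd_width=16)
# RDNA: 32-wide wavefront executed on a 32-lane SIMD.
rdna_wave32 = cycles_per_instruction(wave_width=32, simd_width=32)
# RDNA also supports 64-wide wavefronts; in this simple model they take two passes.
rdna_wave64 = cycles_per_instruction(wave_width=64, simd_width=32)

print(f"GCN wave64 on SIMD16:  {gcn_wave64} cycles per instruction")
print(f"RDNA wave32 on SIMD32: {rdna_wave32} cycle per instruction")
print(f"RDNA wave64 on SIMD32: {rdna_wave64} cycles per instruction")
```

Under these assumptions the model reproduces the four-cycle versus one-cycle figures quoted above.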

How much do these changes matter when it comes to actual performance and efficiency? It's perhaps best illustrated by looking at the Radeon VII, AMD's last GCN GPU, and comparing it with the RX 5700 XT. Radeon VII has 60 CUs, 3840 GPU cores, 16GB of HBM2 memory with 1 TBps of bandwidth, a GPU clock speed of up to 1750 MHz, and a theoretical peak performance rating of 13.8 TFLOPS. The RX 5700 XT has 40 CUs, 2560 GPU cores, 8GB of GDDR6 memory with 448 GBps of bandwidth, and clocks at up to 1905 MHz with peak performance of 9.75 TFLOPS.

On paper, Radeon VII looks like it should come out with an easy victory. In practice, across a dozen games that we've tested, the RX 5700 XT is slightly faster at 1080p gaming and slightly slower at 1440p. Only at 4K is the Radeon VII able to manage a 7% lead, helped no doubt by its memory bandwidth. Overall, the Radeon VII only has a 1-2% performance advantage, but it uses 300W compared to the RX 5700 XT's 225W.

In short, AMD was able to deliver roughly the same performance as the previous generation, with a third fewer cores, less than half the memory bandwidth and using 25% less power. That's a very impressive showing, and while TSMC's 7nm FinFET manufacturing process certainly warrants some of the credit (especially with regard to power), the performance uplift is mostly thanks to the RDNA architecture.
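Those ratios follow directly from the spec numbers quoted above. The short sketch below only redoes that arithmetic; the dictionary layout and helper name are ours, and the 1 TBps figure is taken as 1,000 GBps for simplicity.

```python
# Spec figures as quoted in the text (1 TBps treated as 1000 GBps).
radeon_vii = {"cores": 3840, "bandwidth_gbps": 1000, "power_w": 300, "tflops": 13.8}
rx_5700_xt = {"cores": 2560, "bandwidth_gbps": 448, "power_w": 225, "tflops": 9.75}


def reduction(old: float, new: float) -> float:
    """Fractional reduction going from the old value to the new one."""
    return 1 - new / old


core_cut = reduction(radeon_vii["cores"], rx_5700_xt["cores"])
power_cut = reduction(radeon_vii["power_w"], rx_5700_xt["power_w"])
tflops_cut = reduction(radeon_vii["tflops"], rx_5700_xt["tflops"])
bandwidth_ratio = rx_5700_xt["bandwidth_gbps"] / radeon_vii["bandwidth_gbps"]

print(f"Cores:     {core_cut:.0%} fewer")
print(f"Power:     {power_cut:.0%} less")
print(f"TFLOPS:    {tflops_cut:.0%} lower on paper")
print(f"Bandwidth: {bandwidth_ratio:.0%} of Radeon VII")
```

The paper TFLOPS deficit is nearly as large as the core deficit, which is why the near-identical gaming results point to the architecture rather than raw throughput.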

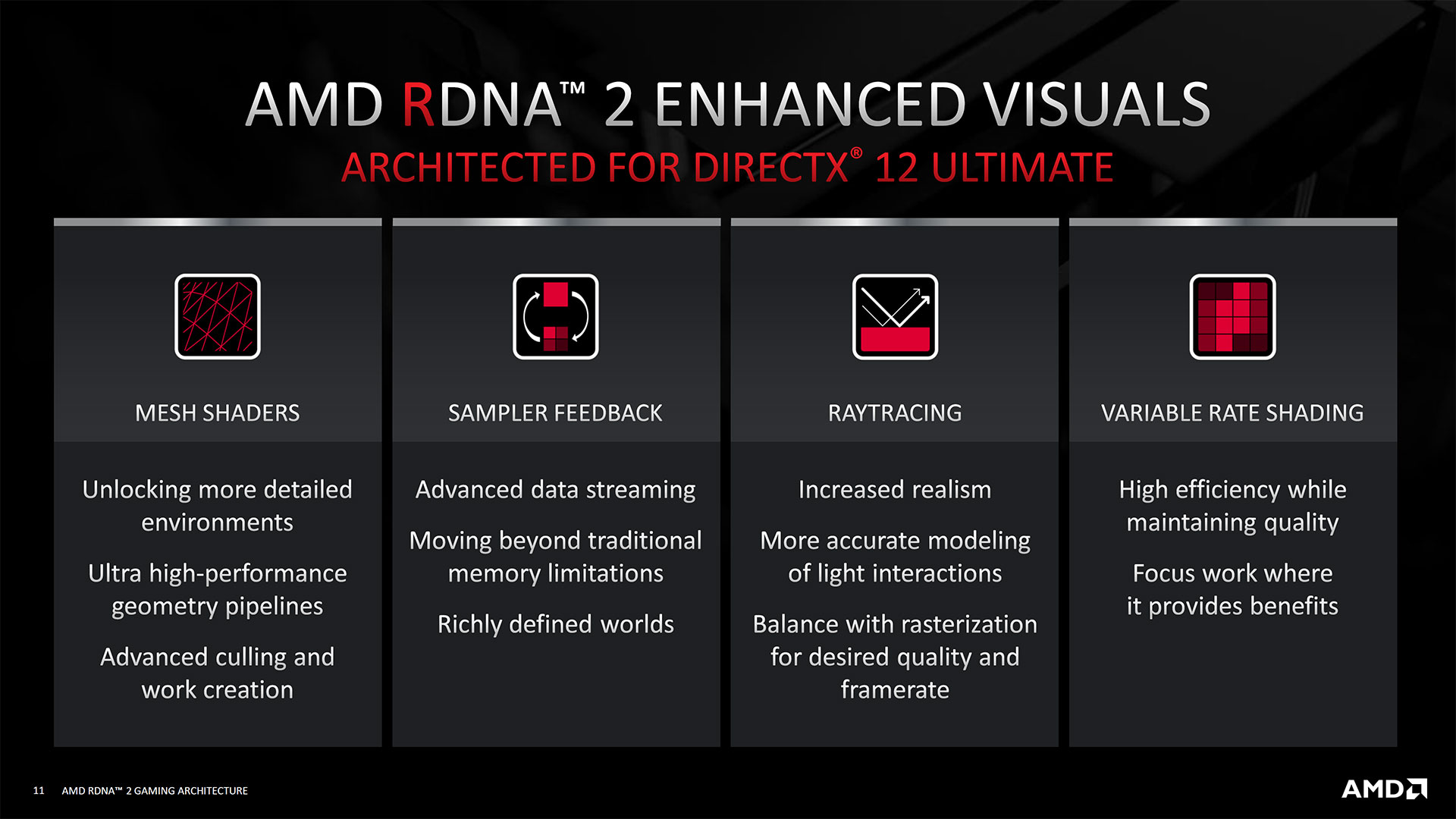

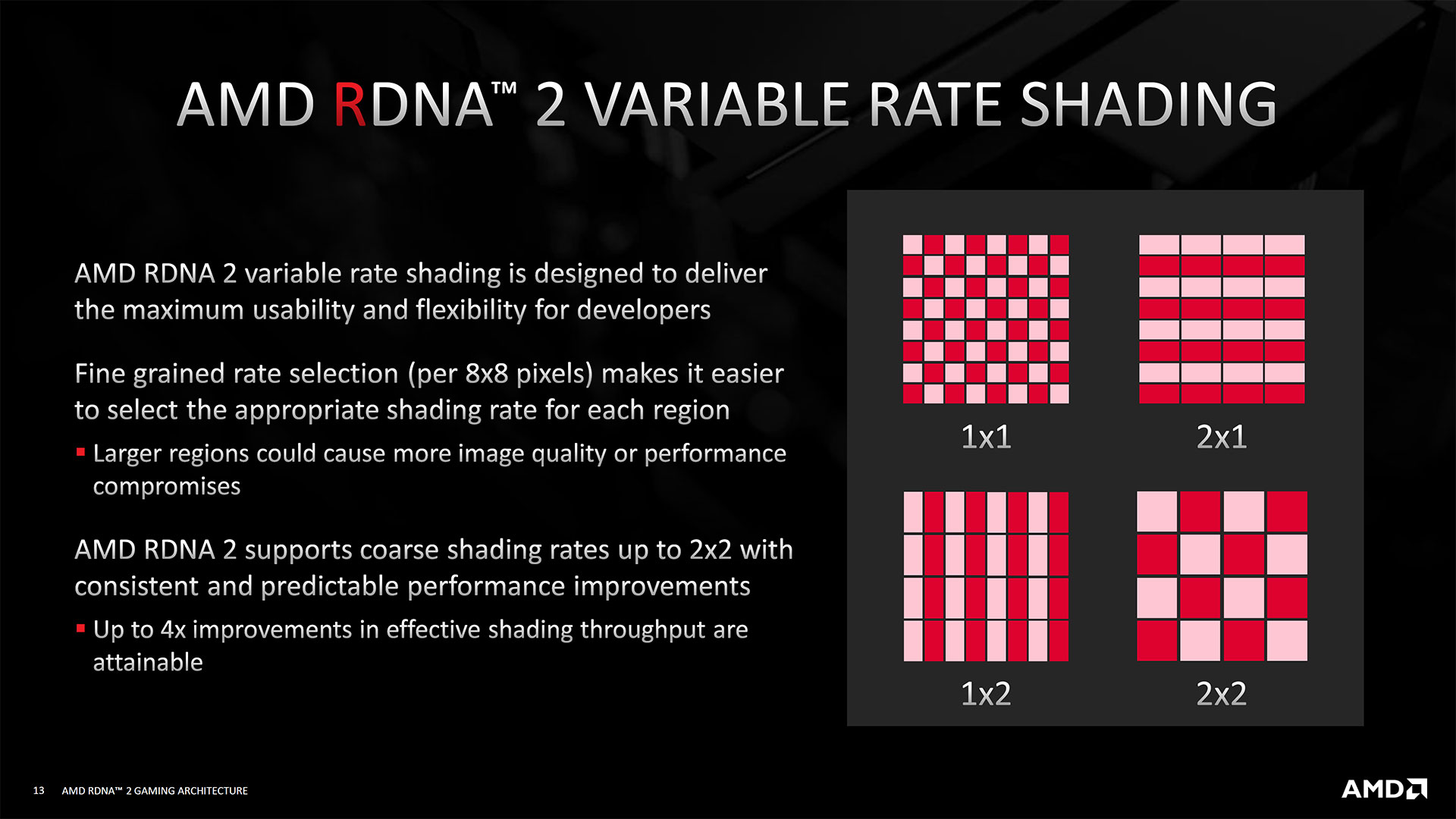

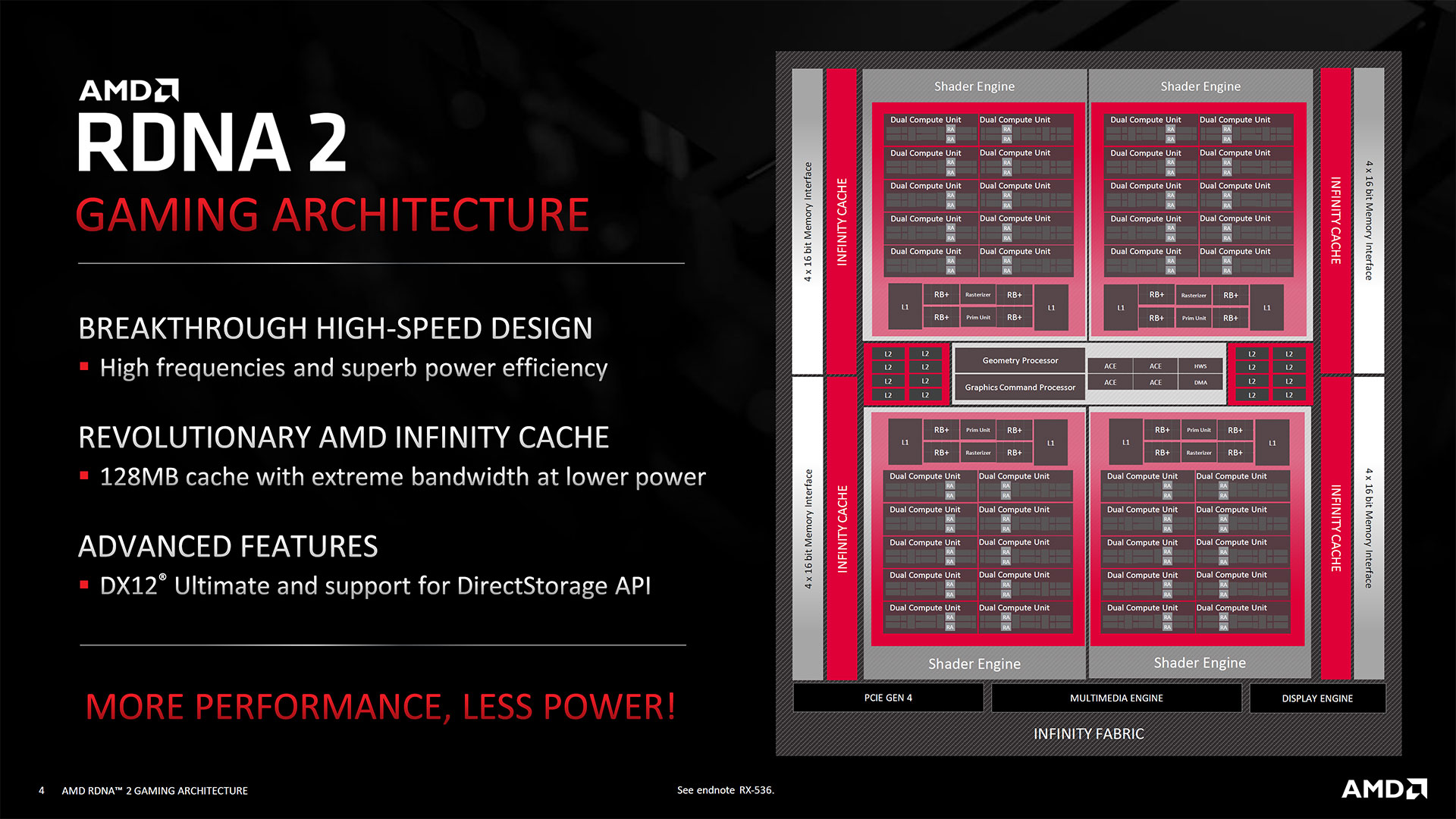

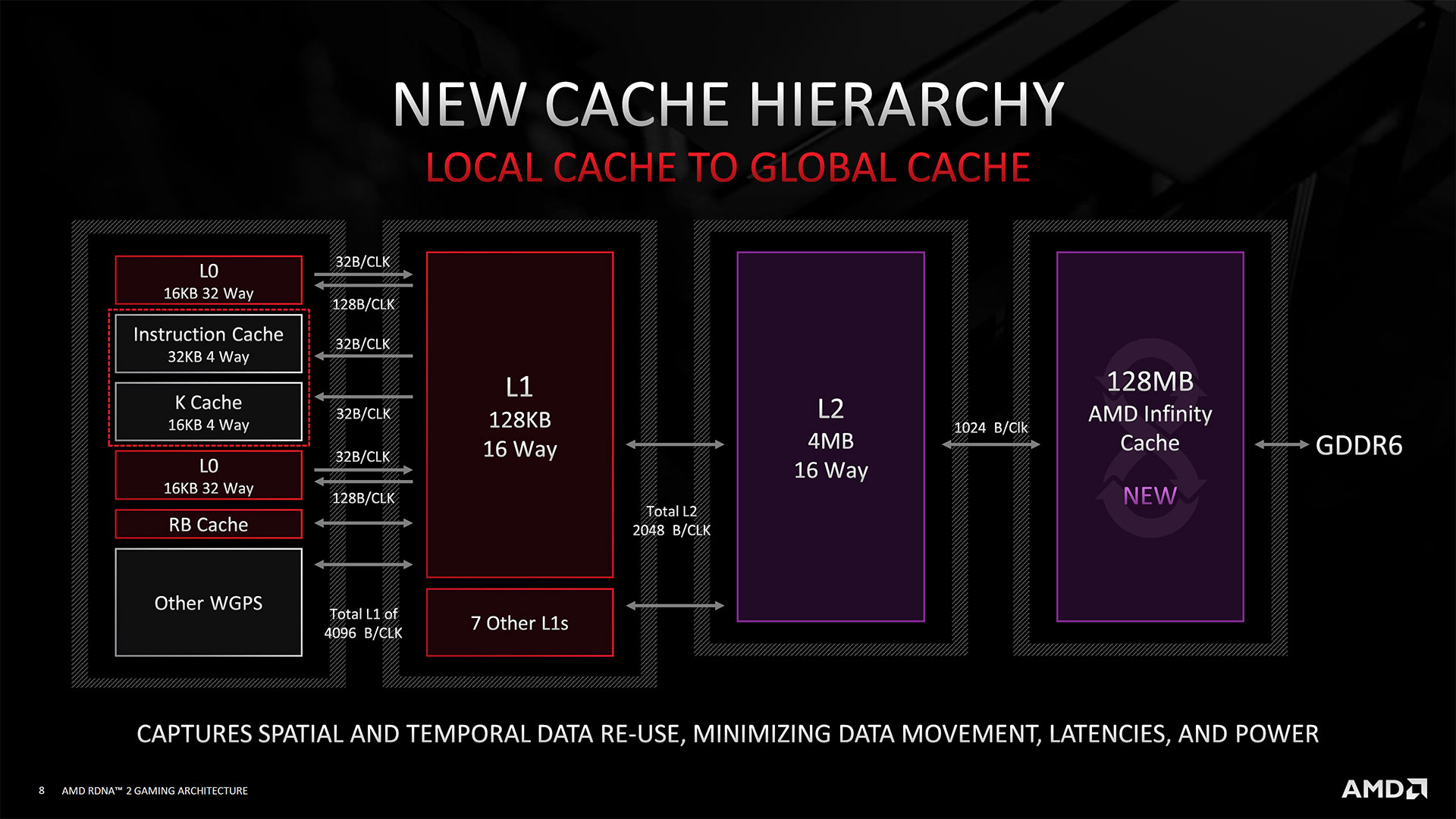

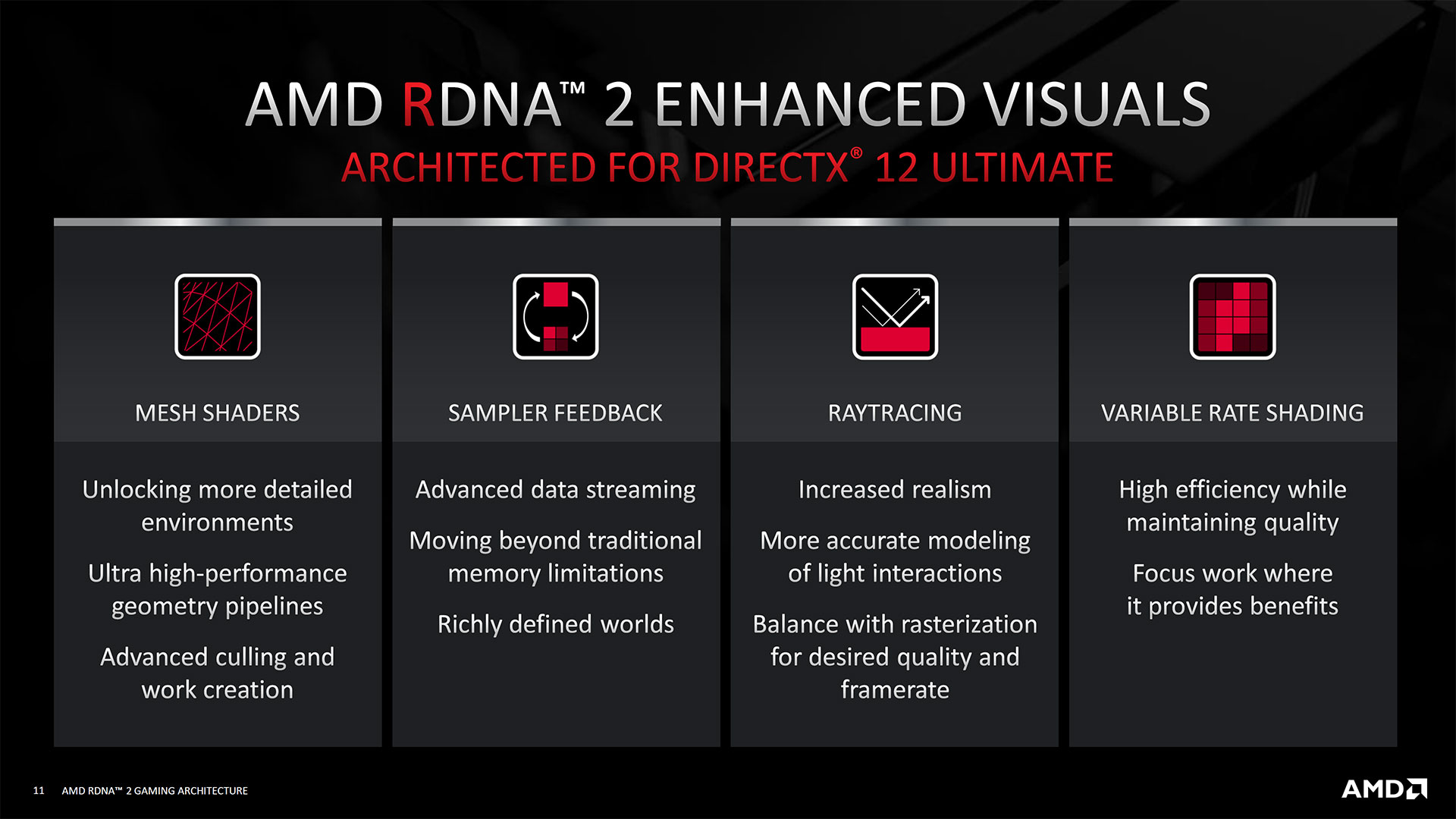

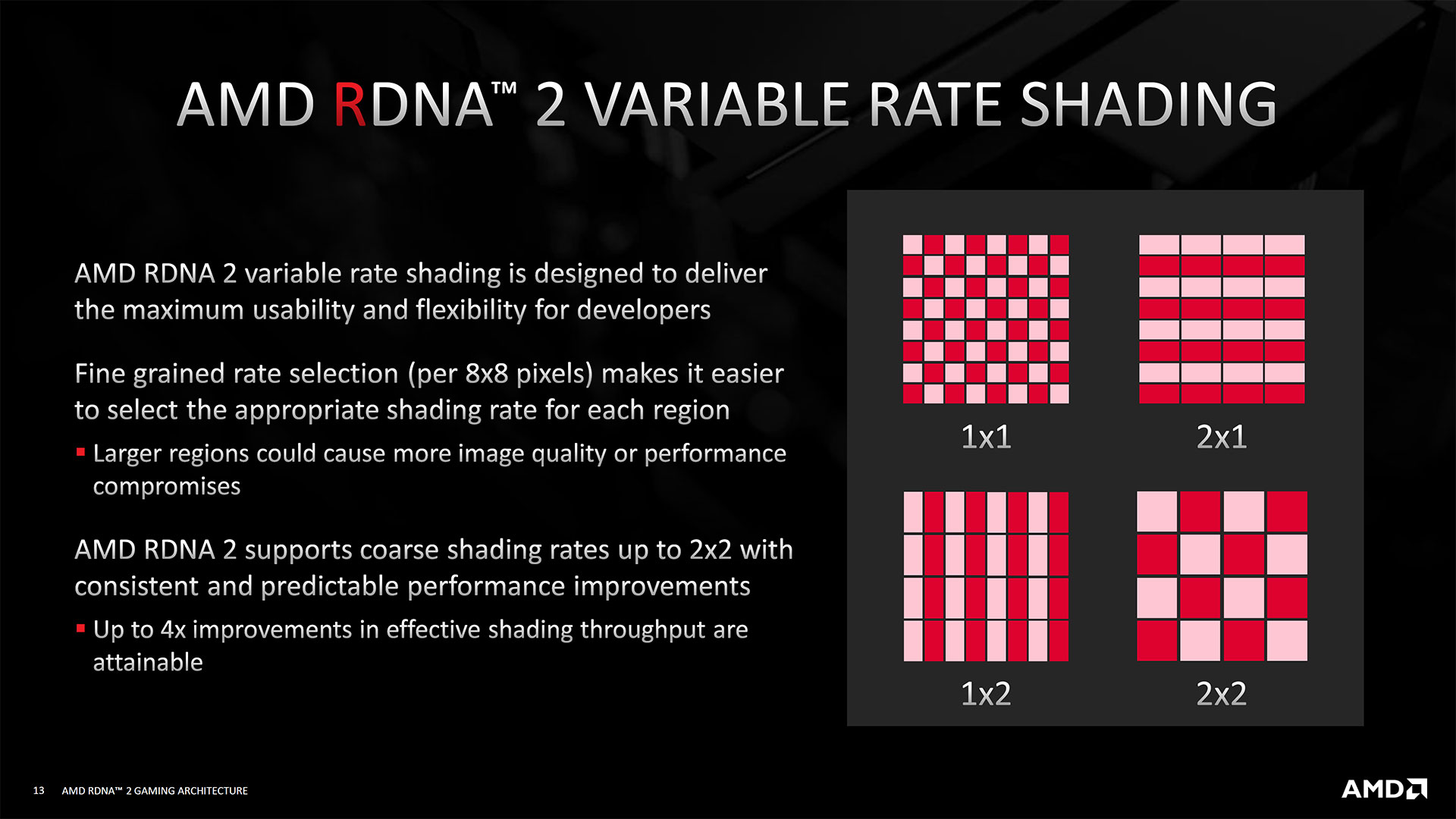

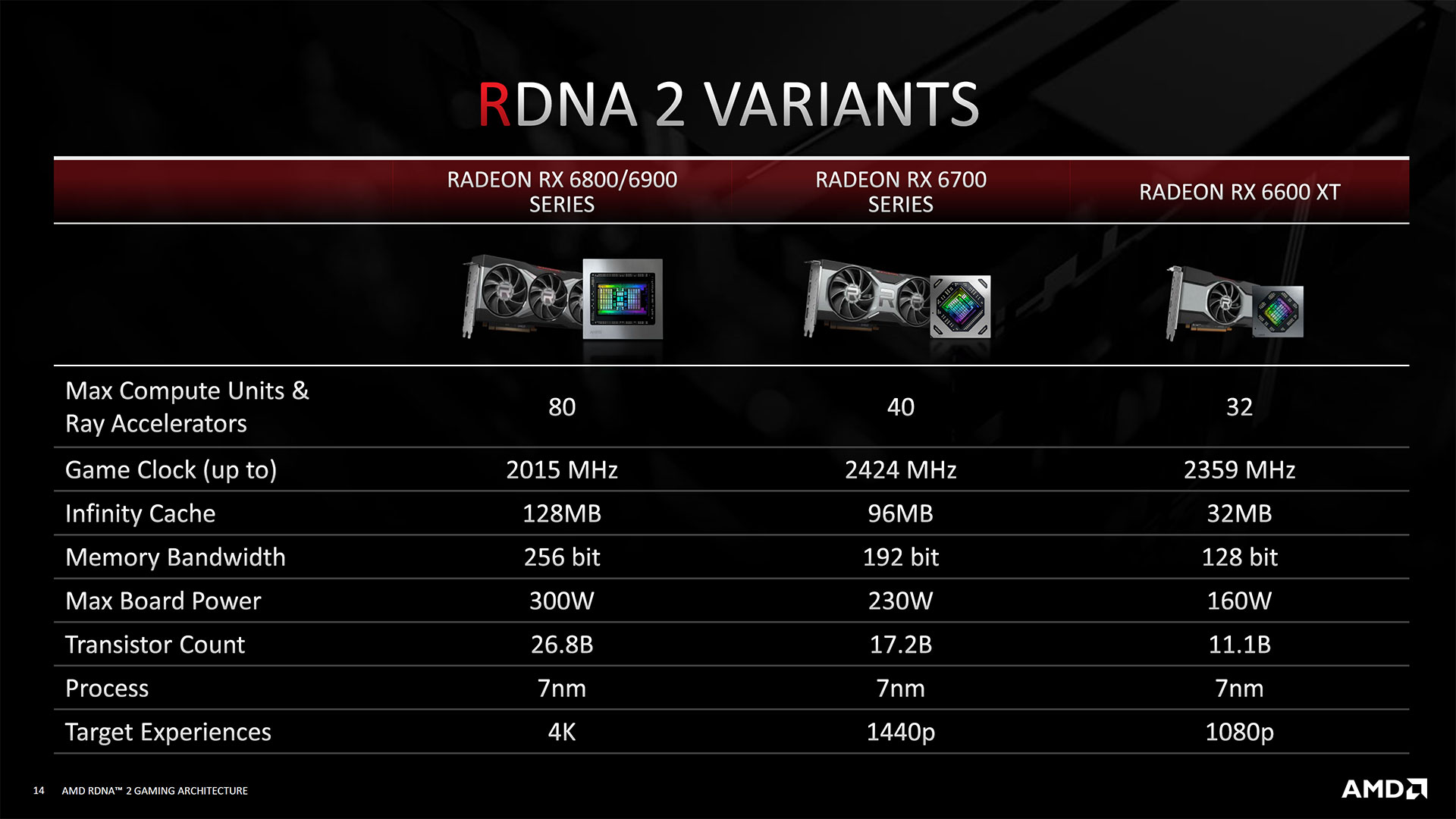

That's a lot of RDNA discussion, but it's important because RDNA2 carries all of that forward, with several major new additions. First is support for ray tracing, Variable Rate Shading (VRS), and everything else that's part of the DirectX 12 Ultimate spec. The other big addition is, literally, big: a 128MB Infinity Cache that helps optimize memory bandwidth and latency. (Navi 22 has a 96MB Infinity Cache and Navi 23 gets by with a 32MB Infinity Cache.)

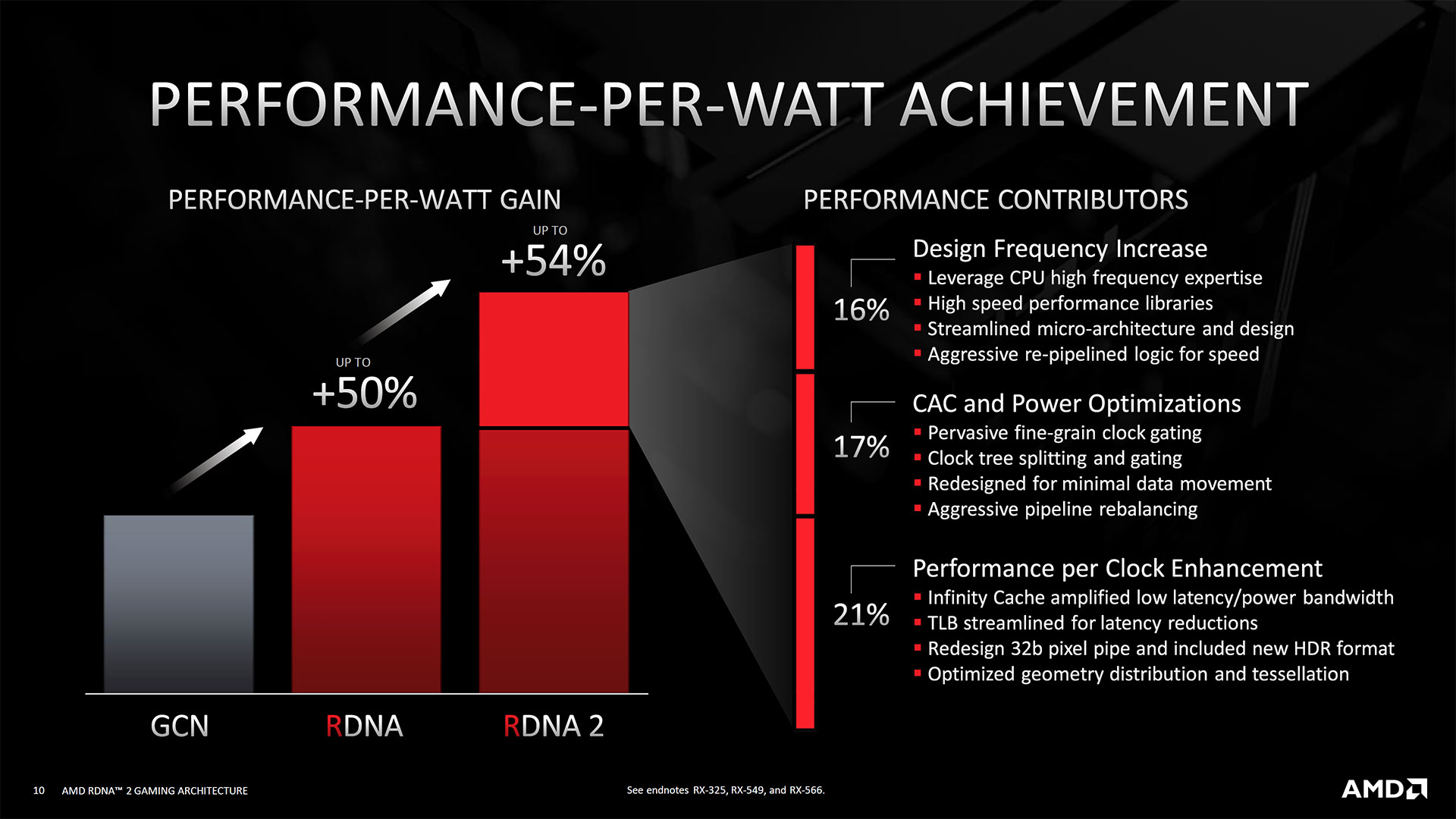

There are other tweaks to the architecture, but AMD made some big claims about Big Navi / RDNA2 / Navi 2x when it comes to performance per watt. Specifically, AMD said RDNA2 would offer 50% more performance per watt than RDNA 1, which is frankly a huge jump—the same large jump RDNA 1 saw relative to GCN. Even more importantly, AMD mostly succeeded. The RX 6600 XT ended up delivering slightly higher overall performance than the RX 5700 XT, while using 30% less power. Alternatively, the RX 6700 XT has the same 40 CUs as the RX 5700 XT, and it's about 30% faster than the older card while using a similar amount of power.
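As a rough sanity check on the "mostly succeeded" verdict, the two comparisons above can be turned into performance-per-watt gains. This is a sketch using the rounded figures from the text, treating the RX 6600 XT as exactly matching the RX 5700 XT in performance (a conservative assumption, since it was slightly faster) and the RX 6700 XT as using exactly the same power.

```python
def perf_per_watt_gain(relative_perf: float, relative_power: float) -> float:
    """Gain in performance per watt versus a baseline card (1.0 = baseline)."""
    return relative_perf / relative_power - 1.0


# RX 6600 XT vs RX 5700 XT: about the same performance at 30% less power.
rx_6600_xt_gain = perf_per_watt_gain(relative_perf=1.0, relative_power=0.70)
# RX 6700 XT vs RX 5700 XT: about 30% faster at similar power.
rx_6700_xt_gain = perf_per_watt_gain(relative_perf=1.30, relative_power=1.0)

print(f"RX 6600 XT: {rx_6600_xt_gain:.0%} better performance per watt")
print(f"RX 6700 XT: {rx_6700_xt_gain:.0%} better performance per watt")
```

With these round numbers both cards land below the 50% target, at roughly 43% and 30%, which fits "mostly" rather than "fully" delivering on the claim.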

The other major change with RDNA2 involves tuning the entire GPU pipeline to hit substantially higher clock speeds. Previous generation AMD GPUs tended to run at substantially lower clocks than their Nvidia counterparts, and while RDNA started to close the gap, RDNA2 turns the tables and blows past Nvidia with the fastest clocks we've ever seen on a GPU. Game Clocks across the entire RX 6000 range are above 2.1GHz, and cards like the RX 6700 XT and RX 6600 XT (and later variants) can average speeds of around 2.5GHz while gaming. Clock speeds aren't everything, but all else being equal, higher clocks are better, and the >20% boost in typical clocks accounts for a large chunk of the performance improvements we see with RDNA2 vs. RDNA GPUs.

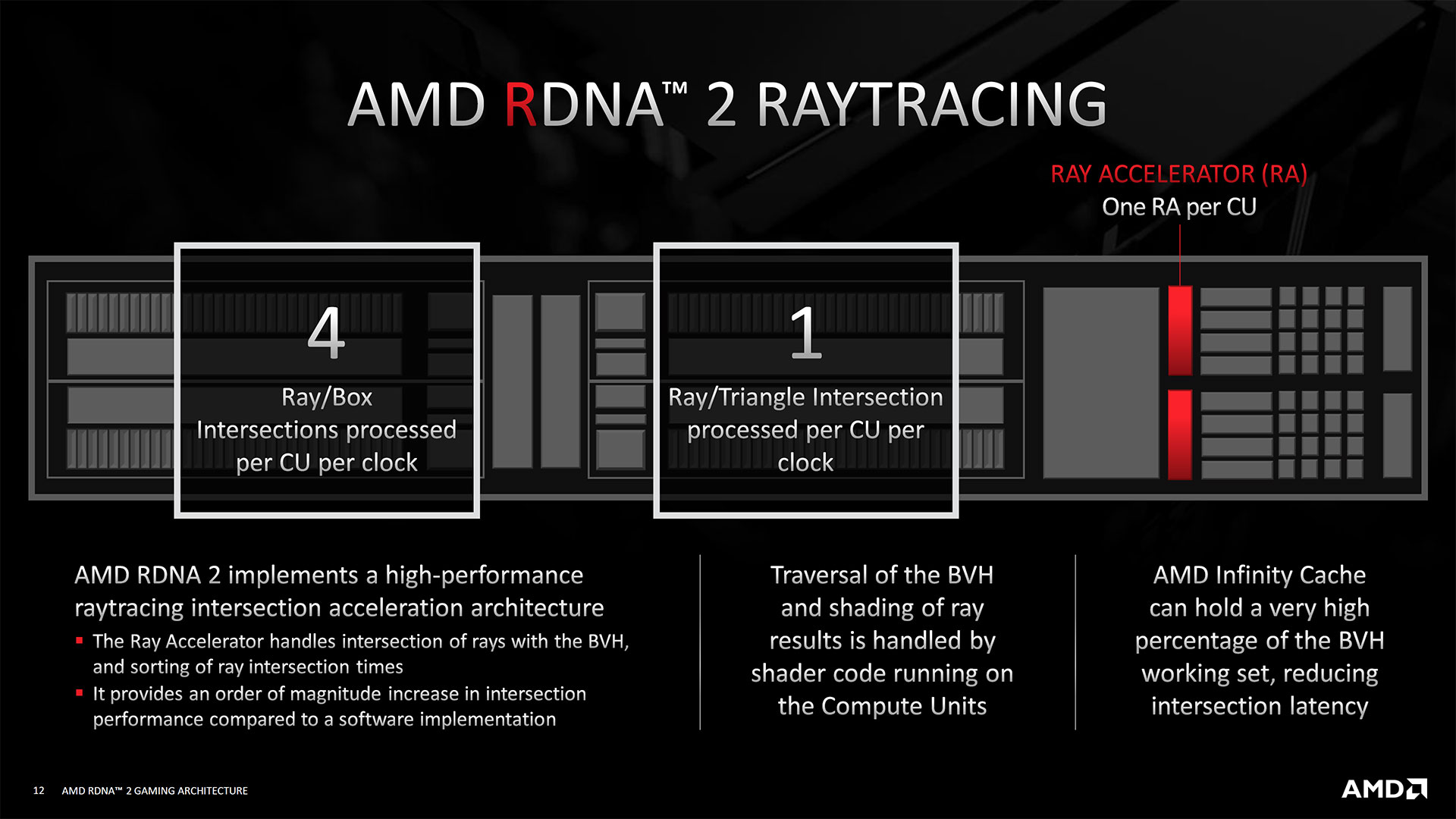

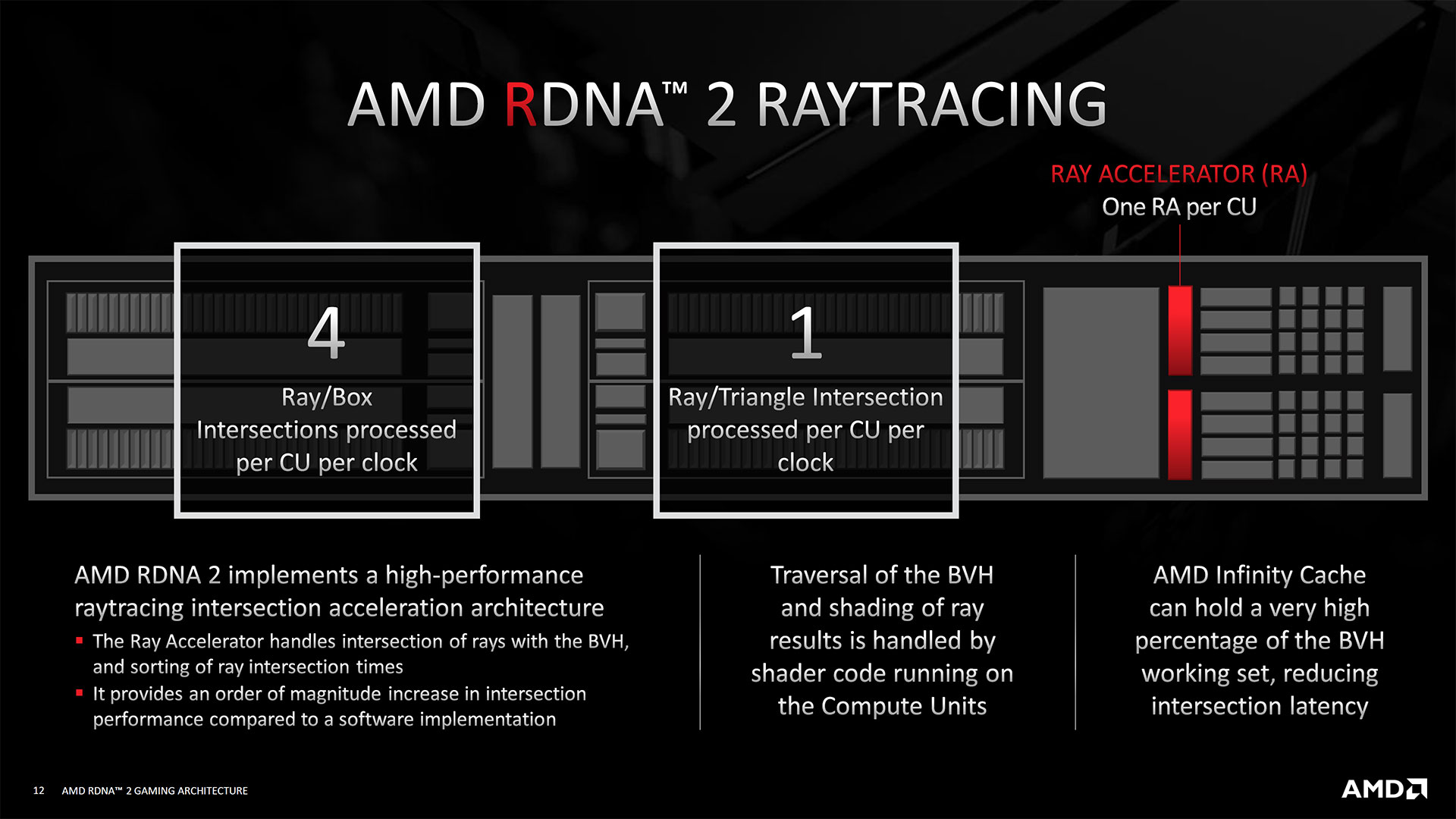

RDNA2 / Big Navi / RX 6000 GPUs support ray tracing, via DirectX 12 Ultimate or VulkanRT. That brings AMD up to feature parity with Nvidia. AMD uses the same BVH approach to ray tracing calculations as Nvidia — it has to, since it's part of the API. If you're not familiar with the term BVH, it stands for Bounding Volume Hierarchy and is used to efficiently find ray and triangle intersections; you can read more about it in our discussion of Nvidia's Turing architecture and its ray tracing algorithm.
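For readers who want to see the idea in code, below is a minimal BVH traversal sketch. It is a generic textbook illustration, not AMD's or Nvidia's hardware implementation: the `Node` layout, the function names, and the tiny two-leaf scene are all assumptions made for the example. Each node's box is checked with a standard "slab" test, and only the triangles in leaves whose boxes the ray actually enters become candidates for ray/triangle tests.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3  # minimum corner of the bounding box
    hi: Vec3  # maximum corner of the bounding box
    children: List["Node"] = field(default_factory=list)
    triangles: List[int] = field(default_factory=list)  # leaf payload (triangle ids)


def ray_hits_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: clip the ray's parameter range against each axis of the box."""
    t_near, t_far = 0.0, float("inf")
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            # Ray is parallel to this axis: it must already lie inside the slab.
            if o < a or o > b:
                return False
            continue
        t0, t1 = (a - o) / d, (b - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def traverse(root: Node, origin: Vec3, direction: Vec3) -> Tuple[List[int], int]:
    """Return (candidate triangle ids, number of ray/box tests performed)."""
    candidates: List[int] = []
    box_tests = 0
    stack = [root]
    while stack:
        node = stack.pop()
        box_tests += 1
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue  # skip this whole subtree
        if node.children:
            stack.extend(node.children)
        else:
            candidates.extend(node.triangles)
    return candidates, box_tests


# Hypothetical scene: two leaves, each holding two triangles.
left = Node(lo=(0, 0, 0), hi=(1, 1, 1), triangles=[0, 1])
right = Node(lo=(3, 0, 0), hi=(4, 1, 1), triangles=[2, 3])
root = Node(lo=(0, 0, 0), hi=(4, 1, 1), children=[left, right])

hits, tests = traverse(root, origin=(0.5, 0.5, -1.0), direction=(0.0, 0.0, 1.0))
missed, miss_tests = traverse(root, origin=(10.0, 0.5, -1.0), direction=(0.0, 0.0, 1.0))
print(hits, tests)          # candidate triangles and box tests for the first ray
print(missed, miss_tests)   # a ray that misses the root is rejected after one test
```

The payoff of the hierarchy is visible even in this toy scene: a ray that misses the root box is discarded after a single box test, without touching any triangles.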

AMD's RDNA2 chips contain one Ray Accelerator per CU, which is similar to what Nvidia has done with its RT cores. Even though AMD sort of takes the same approach as Nvidia, the comparison between AMD and Nvidia isn't clear cut. The BVH algorithm depends on both ray/box intersection calculations and ray/triangle intersection calculations. AMD's RDNA2 architecture can do four ray/box intersections per CU per clock, or one ray/triangle intersection per CU per clock.
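Those per-CU rates translate into a theoretical peak once multiplied by CU count and clock speed. The sketch below does that for a fully enabled Navi 21 using the RX 6900 XT's 80 CUs and 2250 MHz boost clock from the specifications table; the function name is ours, and real-world rates will be lower because traversal is rarely limited by intersection throughput alone.

```python
def peak_intersections_per_second(cus: int, clock_mhz: int, per_cu_per_clock: int) -> float:
    """Theoretical peak intersection tests per second across the whole GPU."""
    return cus * per_cu_per_clock * clock_mhz * 1e6


# RX 6900 XT: 80 CUs at a 2250 MHz boost clock.
box_rate = peak_intersections_per_second(cus=80, clock_mhz=2250, per_cu_per_clock=4)
tri_rate = peak_intersections_per_second(cus=80, clock_mhz=2250, per_cu_per_clock=1)

print(f"Ray/box:      {box_rate / 1e9:.0f} billion tests per second")
print(f"Ray/triangle: {tri_rate / 1e9:.0f} billion tests per second")
```

That works out to a peak of 720 billion ray/box or 180 billion ray/triangle tests per second, strictly as an upper bound on paper.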

There's an important distinction here that we do need to point out. AMD apparently does the ray/box intersections using modified texture units. While a rate of four ray/box intersections per clock might sound good, we don't have exact details of how that compares with Nvidia's RTX hardware. What we do know is that, in general, Nvidia's ray tracing performance is better. Also note that Intel's Arc Architecture does up to 12 ray/box intersections per clock.

From our understanding, Nvidia's Ampere architecture can do up to two ray/triangle intersections per RT core per clock, plus some additional extras, but it's not clear what the ray/box rate is. In testing, Big Navi RT performance generally doesn't come anywhere close to matching Ampere, though it can usually keep up with Turing RT performance. That's likely due to Ampere's RT cores doing more ray/box and ray/triangle intersections per clock.

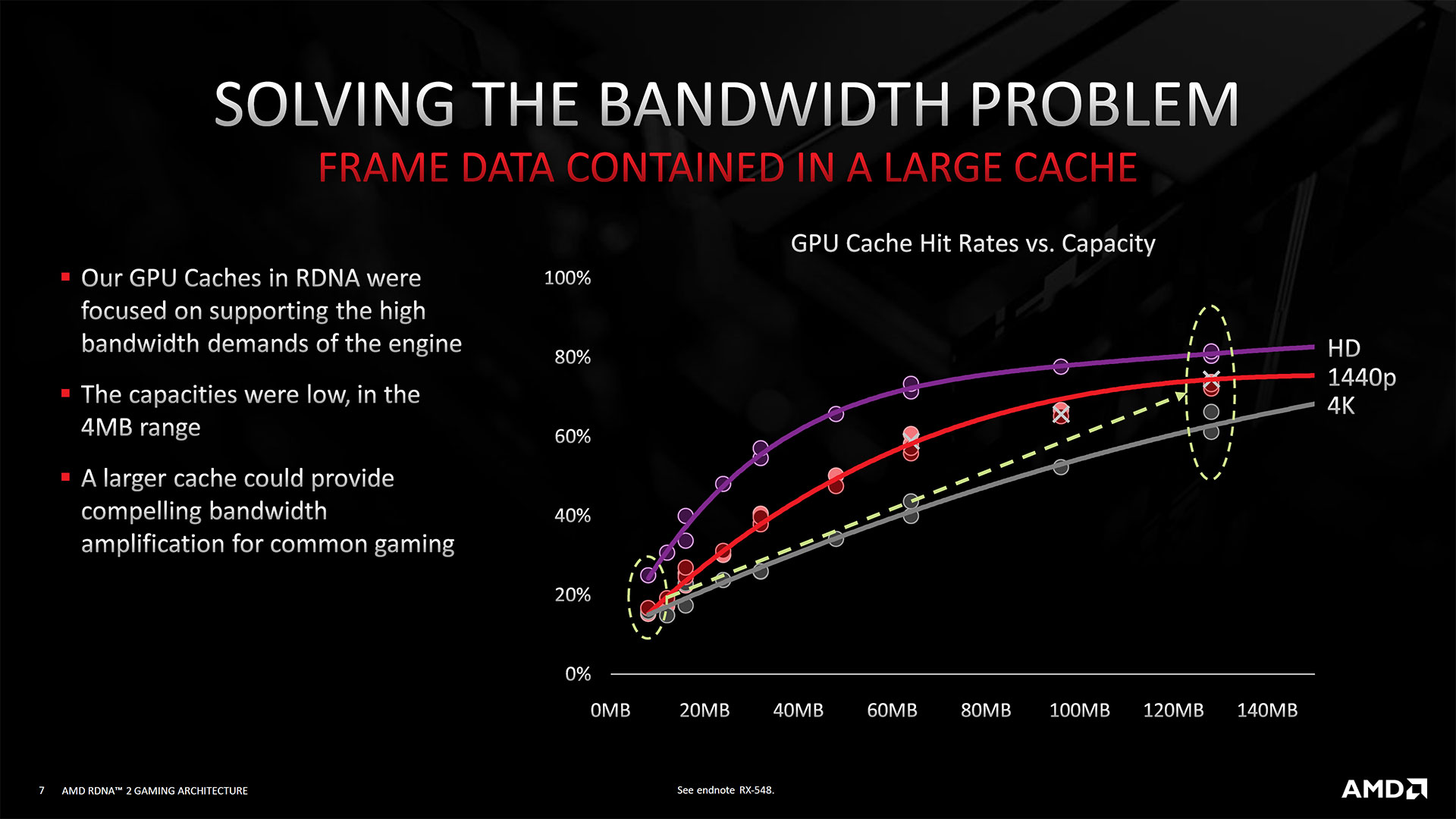

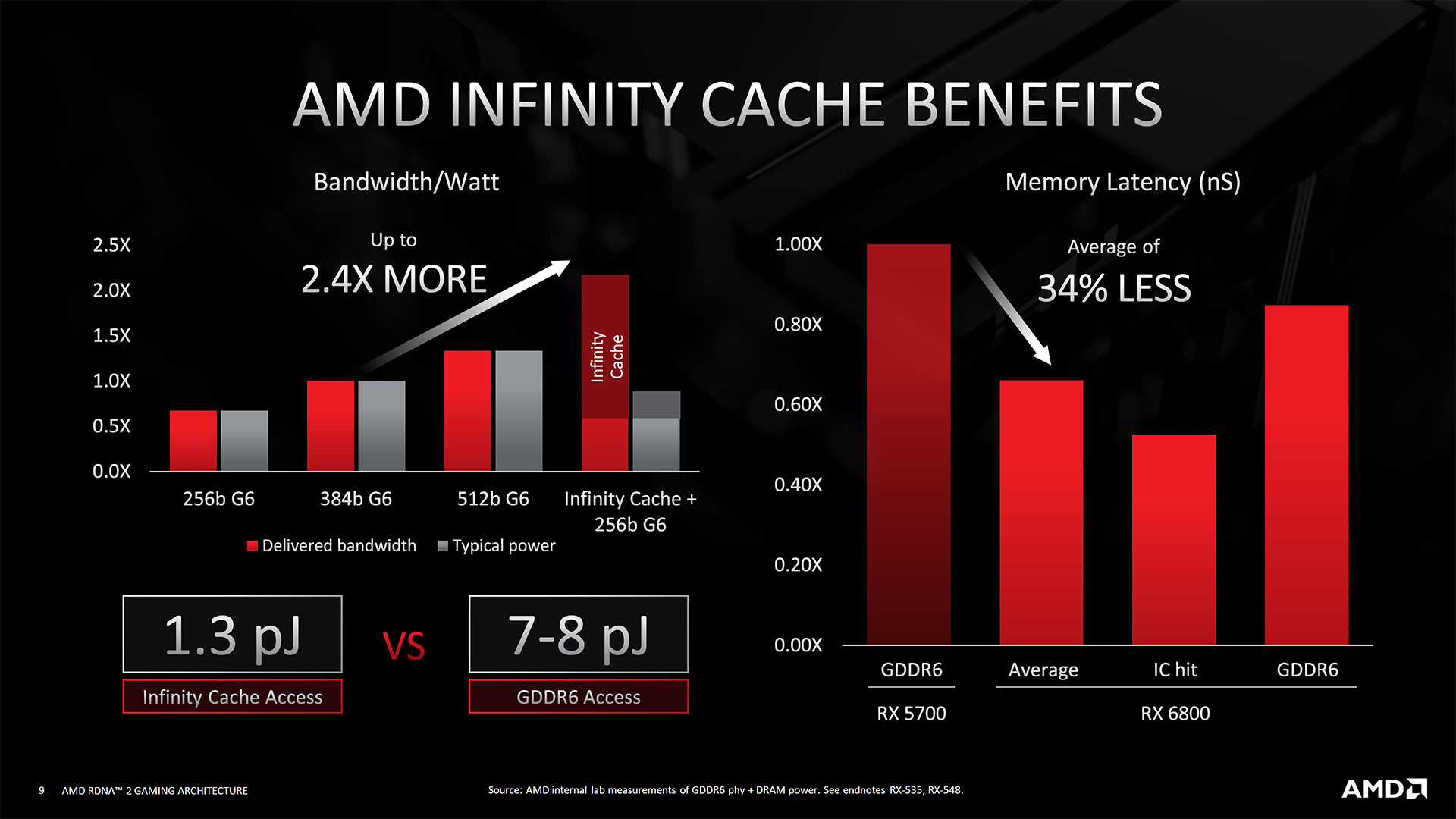

The Infinity Cache is perhaps the most interesting change. By including a whopping 128MB cache (L3, but with AMD branding), AMD should be able to keep basically all of the framebuffer cached, along with the z-buffer and some recent textures. That will dramatically reduce memory bandwidth use and latency, and AMD claims the Infinity Cache allows the relatively tame GDDR6 16 Gbps memory to deliver an effective bandwidth that's 2.17 times higher than the raw numbers would suggest.
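Two quick calculations show why those claims are plausible. The sketch below assumes a 4K render target with 32-bit color and a 32-bit depth buffer (our assumption; real games use a variety of formats and multiple render targets), and applies AMD's 2.17x multiplier to Navi 21's 512 GBps of raw bandwidth.

```python
INFINITY_CACHE_MIB = 128
RAW_BANDWIDTH_GBPS = 512      # 256-bit bus with 16 Gbps GDDR6
AMD_CLAIMED_MULTIPLIER = 2.17


def buffer_mib(width: int, height: int, bytes_per_pixel: int) -> float:
    """Size of a single full-screen buffer in MiB."""
    return width * height * bytes_per_pixel / 2**20


# Assumed formats: 4 bytes per pixel for color and for depth.
color_mib = buffer_mib(3840, 2160, bytes_per_pixel=4)
depth_mib = buffer_mib(3840, 2160, bytes_per_pixel=4)
left_over_mib = INFINITY_CACHE_MIB - (color_mib + depth_mib)

effective_gbps = RAW_BANDWIDTH_GBPS * AMD_CLAIMED_MULTIPLIER

print(f"4K color + depth: {color_mib + depth_mib:.1f} MiB, leaving {left_over_mib:.1f} MiB")
print(f"Effective bandwidth per AMD's claim: {effective_gbps:.0f} GBps")
```

Under those assumptions a 4K color and depth buffer together occupy about half of the cache, and the claimed effective bandwidth comes out at roughly 1.1 TBps.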

The Infinity Cache also helps with ray tracing calculations. We've seen on Nvidia's GPUs that memory bandwidth can impact RT performance on the lower tier cards like the RTX 2060, but it might also be memory latency that's to blame. We can't test AMD Big Navi performance in RT without the Infinity Cache, however, and all we know is that RT performance tends to lag behind Nvidia.

The Infinity Cache propagates down to the lower tier RDNA2 chips, but in different capacities. 128MB is very large, and based on AMD's image of the die, it's about 17 percent of the total die area on Navi 21. The CUs by comparison are only about 31 percent of the die area, with memory controllers, texture units, video controllers, video encoder/decoder hardware, and other elements taking up the rest of the chip. Navi 22, Navi 23, and Navi 24 have far lower CU counts, and less Infinity Cache as well. Navi 22 has a 96MB L3 cache, Navi 23 trims that all the way down to just 32MB, and Navi 24 has 16MB. Interestingly, even with only one fourth the Infinity Cache, the RX 6600 XT still managed to outperform the RX 5700 XT at 1080p and 1440p, despite having 43% less raw bandwidth.
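The figures in that paragraph can be checked with a few lines of arithmetic. This sketch uses only numbers from the article (cache sizes, the 519mm square Navi 21 die, 256 GBps for the RX 6600 XT, and 448 GBps for the RX 5700 XT); the area results are approximations derived from AMD's die image percentages, not measured values.

```python
infinity_cache_mb = {"Navi 21": 128, "Navi 22": 96, "Navi 23": 32, "Navi 24": 16}

# Cache size of each chip relative to Navi 21.
relative_cache = {gpu: mb / infinity_cache_mb["Navi 21"] for gpu, mb in infinity_cache_mb.items()}

# Approximate die area spent on cache and CUs for Navi 21 (519 mm^2 total).
navi21_die_mm2 = 519
cache_area_mm2 = 0.17 * navi21_die_mm2
cu_area_mm2 = 0.31 * navi21_die_mm2

# Raw bandwidth deficit of the RX 6600 XT (256 GBps) vs the RX 5700 XT (448 GBps).
bandwidth_deficit = 1 - 256 / 448

for gpu, share in relative_cache.items():
    print(f"{gpu}: {infinity_cache_mb[gpu]} MB ({share:.0%} of Navi 21)")
print(f"Navi 21 cache area: ~{cache_area_mm2:.0f} mm^2, CU area: ~{cu_area_mm2:.0f} mm^2")
print(f"RX 6600 XT raw bandwidth deficit: {bandwidth_deficit:.0%}")
```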

One big difference between AMD and Nvidia is that Nvidia also has Tensor cores in its Ampere and Turing architectures, which are used for deep learning and AI computations, as well as DLSS (Deep Learning Super Sampling). AMD doesn't have a Tensor core equivalent, though its FidelityFX Super Resolution does offer somewhat analogous features and works on just about any GPU. Meanwhile, Intel's future Arc Alchemist architecture will also have tensor processing elements, and XeSS will have a fall-back mode that runs using DP4a code on other GPUs.

AMD already has a dozen Navi 2x products, using the four GPUs we've discussed. The RDNA2 architecture is also being used in some smartphone chips like the Samsung Exynos 2200, likely without any Infinity Cache and with far different capabilities in terms of performance. AMD's RX 6000 cards span the entire gamut, from budget to midrange to extreme performance categories.

RX 6000 / Big Navi / Navi 2x Specifications

That takes care of all the core architectural changes. Now let's put it all together and look at all of the announced RDNA2 / RX 6000 / Big Navi GPUs. AMD basically doubled down on Navi 10 when it comes to CUs and shaders, shoving twice the number of both into the largest Navi 21 GPU. At the same time, Navi 10 is relatively small at just 251mm square, and Big Navi is more than double that in its largest configuration. AMD also more than doubled the number of graphics card models with Big Navi. Here's the full rundown.

| Graphics Card | RX 6950 XT | RX 6900 XT | RX 6800 XT | RX 6800 | RX 6750 XT | RX 6700 XT | RX 6700 | RX 6650 XT | RX 6600 XT | RX 6600 | RX 6500 XT | RX 6400 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Architecture | Navi 21 | Navi 21 | Navi 21 | Navi 21 | Navi 22 | Navi 22 | Navi 22 | Navi 23 | Navi 23 | Navi 23 | Navi 24 | Navi 24 |
| Process Technology | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N7 | TSMC N6 | TSMC N6 |
| Transistors (Billion) | 26.8 | 26.8 | 26.8 | 26.8 | 17.2 | 17.2 | 17.2 | 11.1 | 11.1 | 11.1 | 5.4 | 5.4 |
| Die size (mm^2) | 519 | 519 | 519 | 519 | 336 | 336 | 336 | 237 | 237 | 237 | 107 | 107 |
| Compute Units | 80 | 80 | 72 | 60 | 40 | 40 | 36 | 32 | 32 | 28 | 16 | 12 |
| GPU Cores (Shaders) | 5120 | 5120 | 4608 | 3840 | 2560 | 2560 | 2304 | 2048 | 2048 | 1792 | 1024 | 768 |
| Ray Accelerators | 80 | 80 | 72 | 60 | 40 | 40 | 36 | 32 | 32 | 28 | 16 | 12 |
| Boost Clock (MHz) | 2310 | 2250 | 2250 | 2105 | 2600 | 2581 | 2450 | 2635 | 2589 | 2491 | 2815 | 2321 |
| VRAM Speed (Gbps) | 18 | 16 | 16 | 16 | 18 | 16 | 16 | 18 | 16 | 14 | 18 | 16 |
| VRAM (GB) | 16 | 16 | 16 | 16 | 12 | 12 | 10 | 8 | 8 | 8 | 4 | 4 |
| VRAM Bus Width (bits) | 256 | 256 | 256 | 256 | 192 | 192 | 160 | 128 | 128 | 128 | 64 | 64 |
| ROPs | 128 | 128 | 128 | 96 | 64 | 64 | 64 | 64 | 64 | 64 | 32 | 32 |
| TMUs | 320 | 320 | 288 | 240 | 160 | 160 | 144 | 128 | 128 | 112 | 64 | 48 |
| TFLOPS FP32 (Boost) | 23.7 | 23.0 | 20.7 | 16.2 | 13.3 | 13.2 | 11.3 | 10.8 | 10.6 | 8.9 | 5.8 | 3.6 |
| TFLOPS FP16 (Boost) | 47.4 | 46 | 41.4 | 32.4 | 26.6 | 26.4 | 22.6 | 21.6 | 21.2 | 17.8 | 11.6 | 7.2 |
| Bandwidth (GBps) | 576 | 512 | 512 | 512 | 432 | 384 | 320 | 288 | 256 | 224 | 144 | 128 |
| TDP (watts) | 335 | 300 | 300 | 250 | 250 | 230 | 175 | 180 | 160 | 132 | 107 | 53 |
| Launch Date | May 2022 | Dec 2020 | Nov 2020 | Nov 2020 | May 2022 | Mar 2021 | Mar 2021 | Jun 2022 | Aug 2021 | Oct 2021 | Jan 2022 | Jan 2022 |
| Launch Price | $1,099 | $999 | $649 | $579 | $549 | $479 | $479 | ? | $379 | $329 | $199 | $159 |
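The TFLOPS and bandwidth rows in the table are derived values rather than independent specs. As a minimal sketch, the snippet below recomputes them for a handful of cards using the usual peak formulas: shaders times two operations per clock (one fused multiply-add) times boost clock for FP32, and bus width times per-pin data rate divided by eight for bandwidth. The function and variable names are ours.

```python
# name: (shaders, boost clock in MHz, bus width in bits, VRAM speed in Gbps)
cards = {
    "RX 6950 XT": (5120, 2310, 256, 18),
    "RX 6800 XT": (4608, 2250, 256, 16),
    "RX 6700 XT": (2560, 2581, 192, 16),
    "RX 6600": (1792, 2491, 128, 14),
    "RX 6400": (768, 2321, 64, 16),
}


def fp32_tflops(shaders: int, boost_mhz: int) -> float:
    """Peak FP32 throughput: one FMA (two operations) per shader per clock."""
    return shaders * 2 * boost_mhz / 1e6


def bandwidth_gbps(bus_width_bits: int, vram_gbps: int) -> float:
    """Raw memory bandwidth in GB/s: bus width times per-pin rate, bits to bytes."""
    return bus_width_bits * vram_gbps / 8


results = {
    name: (round(fp32_tflops(shaders, clock), 1), bandwidth_gbps(bus, speed))
    for name, (shaders, clock, bus, speed) in cards.items()
}

for name, (tflops, bandwidth) in results.items():
    print(f"{name}: {tflops} TFLOPS FP32, {bandwidth:.0f} GBps")
```

The FP16 row in the table is simply double the FP32 figure for each card.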

The highest spec parts all use the same Navi 21 GPU, just with differing numbers of enabled functional units. Navi 21 has 80 CUs and 5120 GPU cores, and is more than double the size (519mm square) of the previous generation Navi 10 used in the RX 5700 XT. But a big chip means lower yields, so AMD has parts with 72 and 60 CUs as well. The same goes for the other chips, though Navi 22 has mostly been used in fully enabled variants for the desktop — Sapphire is the only company doing an RX 6700 10GB card that we're aware of.

All the RDNA2 GPUs were hard to come by, up until mid-2022. Look at our GPU price index and you can see how many of each card have been sold (and resold) on eBay during the past year and more. These days, all of the GPUs are in stock at retail, with prices now below AMD's official MSRPs. However, Nvidia's RTX 30-series GPUs still appear to have vastly outsold their AMD counterparts.

What's interesting is how the die sizes and other features line up. Big Navi / RDNA2 adds support for ray tracing and other DX12 Ultimate features, which requires quite a few transistors. The very large Infinity Cache is also going to use up a huge chunk of die area, but it also helps overcome potential bandwidth limitations caused by the somewhat narrow 256-bit bus width on Navi 21. Ultimately, Navi 23 ended up with a slightly smaller die than Navi 10, but with similar performance and the additional new features.

AMD's budget-friendly Navi 24 unfortunately scaled things back too far. Originally intended as a mobile-only solution, where it would have been paired with an integrated GPU, AMD decided to use it for some desktop cards. It has an x4 PCIe link, a 64-bit memory interface with 16MB of Infinity Cache, and a maximum of 16 CUs. AMD also removed all support for video encoding (part of the mobile design), and it only has H.264/H.265 decode support. It's possible to give the GPU 8GB of VRAM if the GDDR6 is set up in clamshell mode (VRAM on both sides of the PCB), but it's still going to be an underpowered chip.


One thing that has truly impressed with RDNA2 is the Infinity Cache. Not only does AMD mostly give plenty of VRAM for the various models, but the Infinity Cache truly does help with real-world performance. The RX 6700 XT as an example has less bandwidth than the RTX 3060 Ti and yet still keeps up with it in gaming performance, and the same goes for the RX 6800 XT vs. the RTX 3080.

The RX 6500 XT marks the transition between competitive and lackluster GPUs. Nvidia doesn't bother making a budget RTX 30-series chip, perhaps for good reason. The RX 6500 XT and RX 6400 end up competing with Nvidia's previous generation GTX 16-series cards, and basically only match the performance of the GTX 1650 Super and GTX 1650, respectively. Navi 24 would have been far more interesting if it were a 6GB card with a 96-bit interface.

Big Navi / Navi 2x Performance

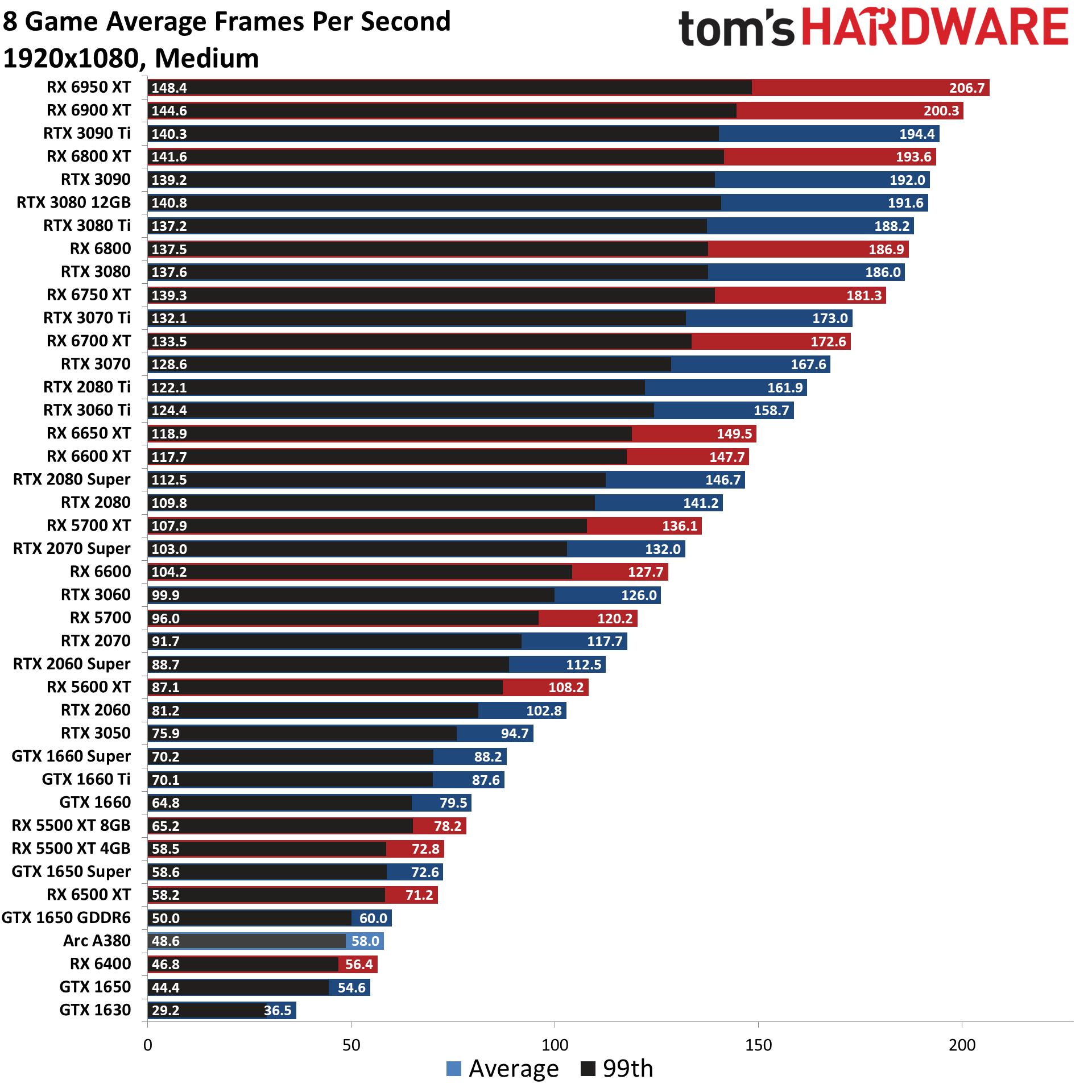

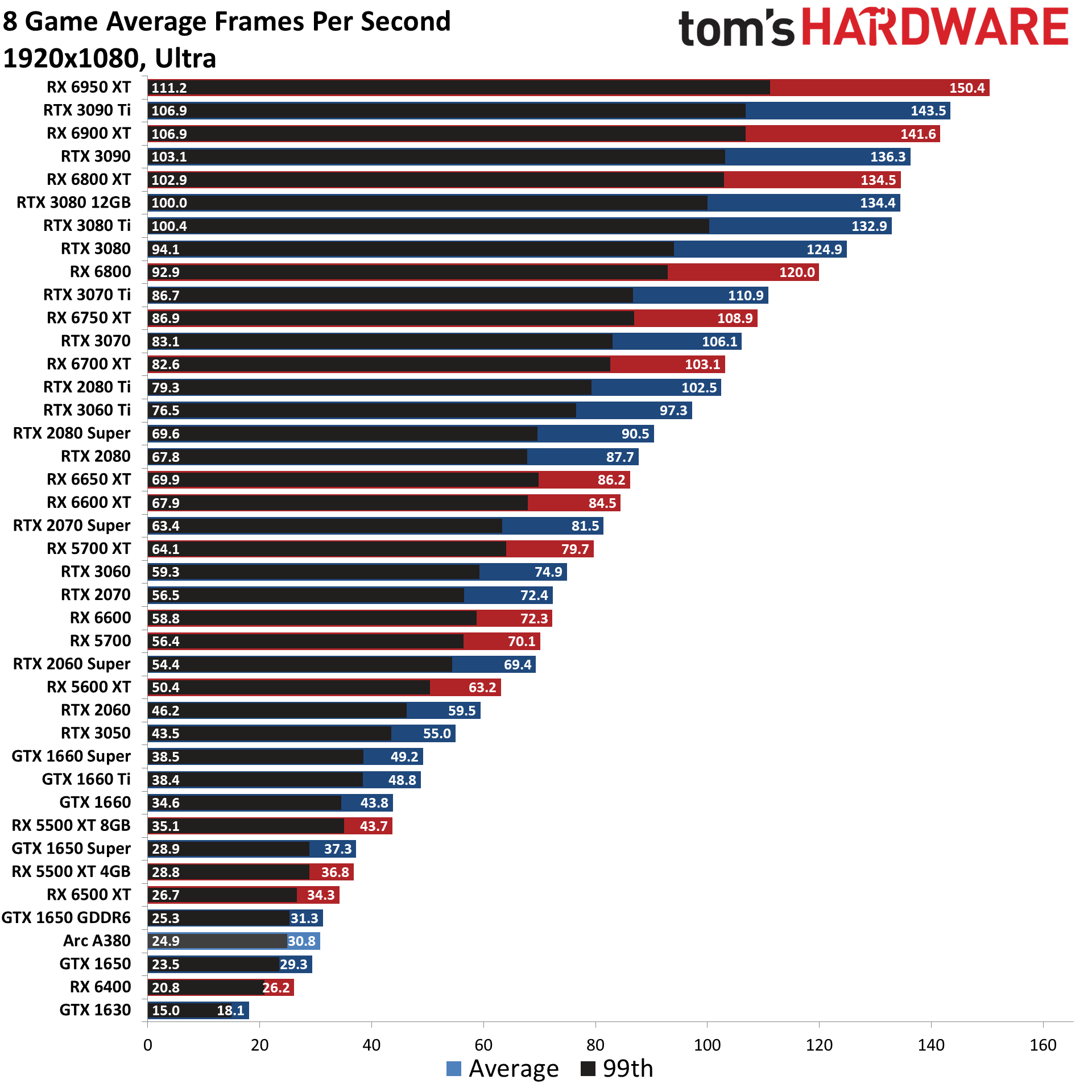

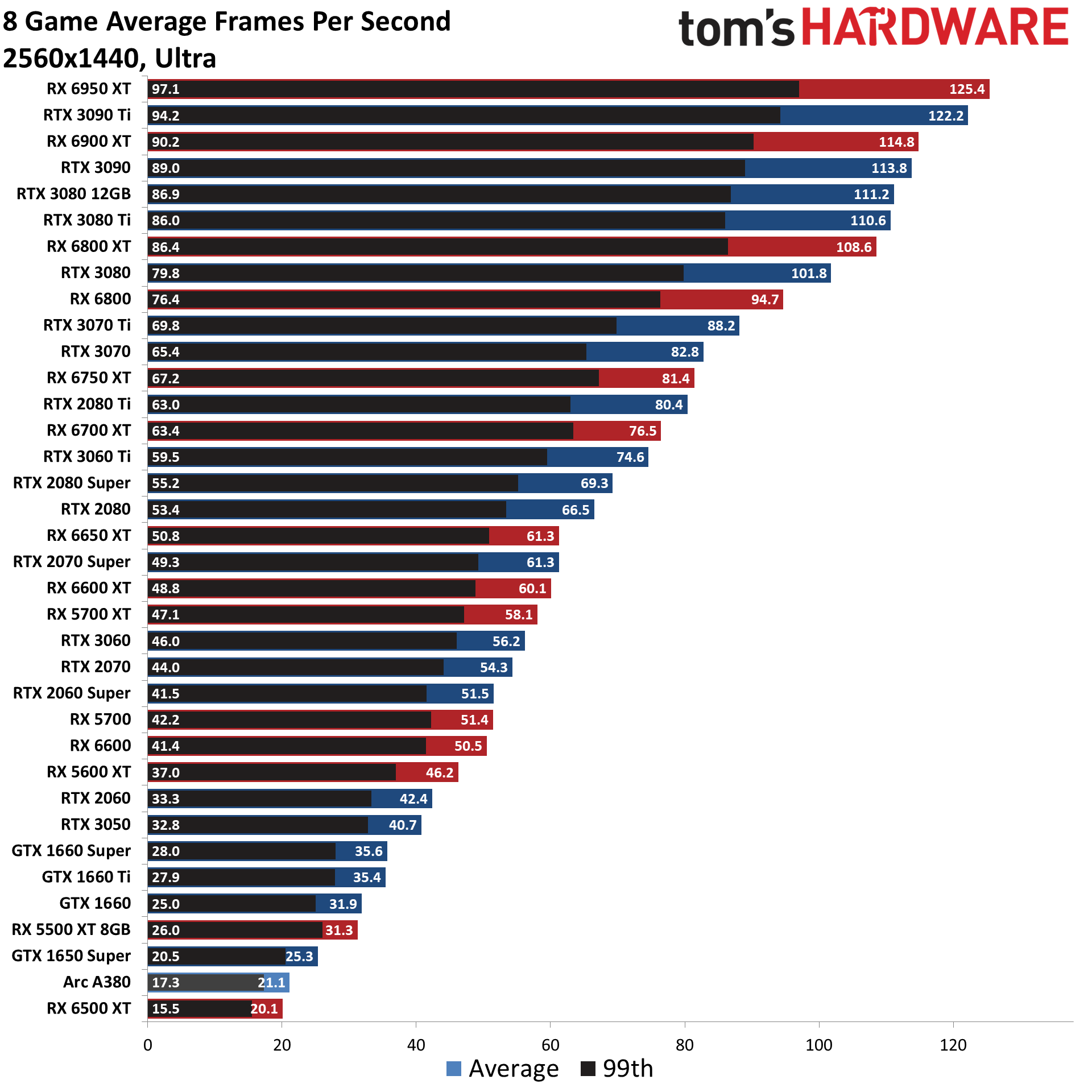

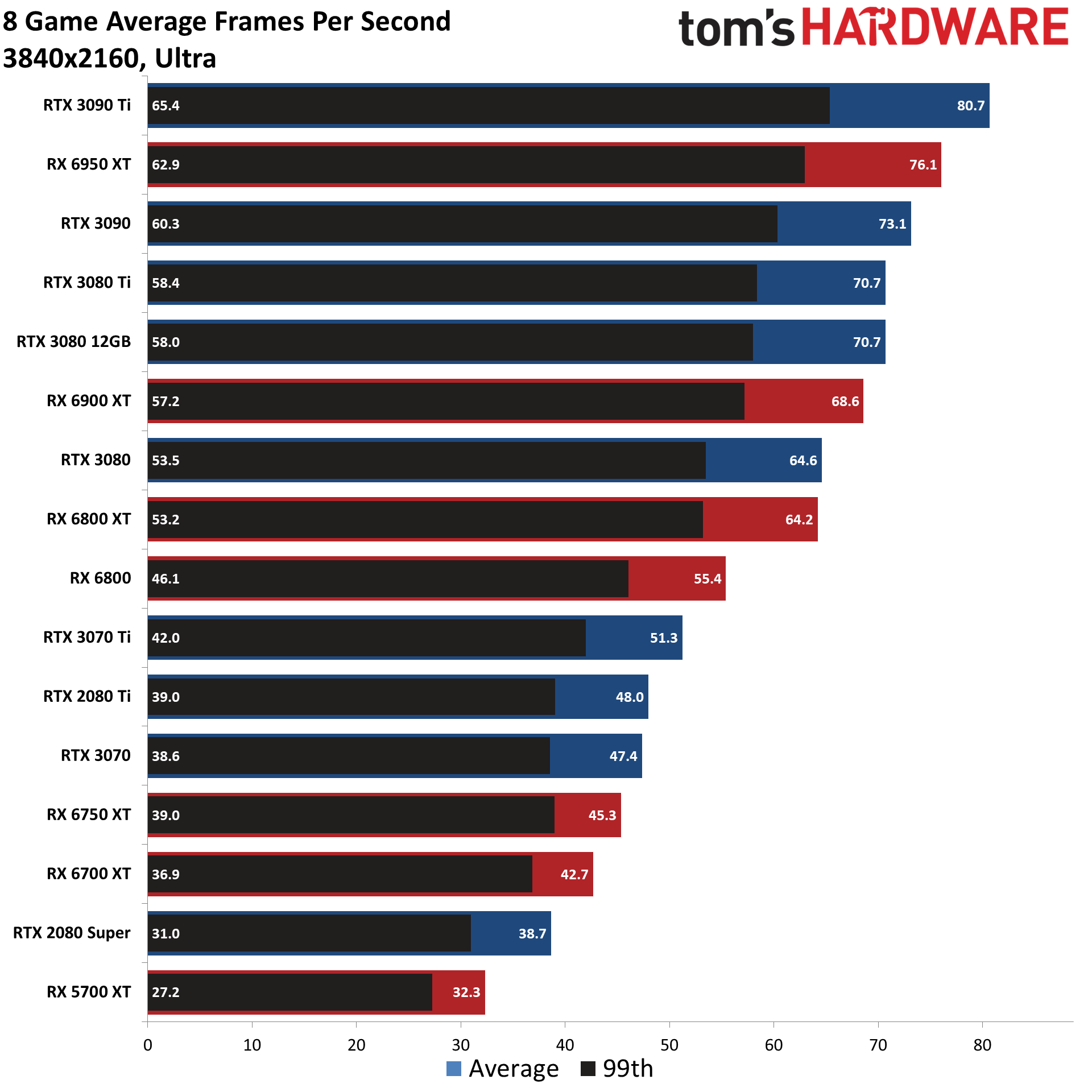

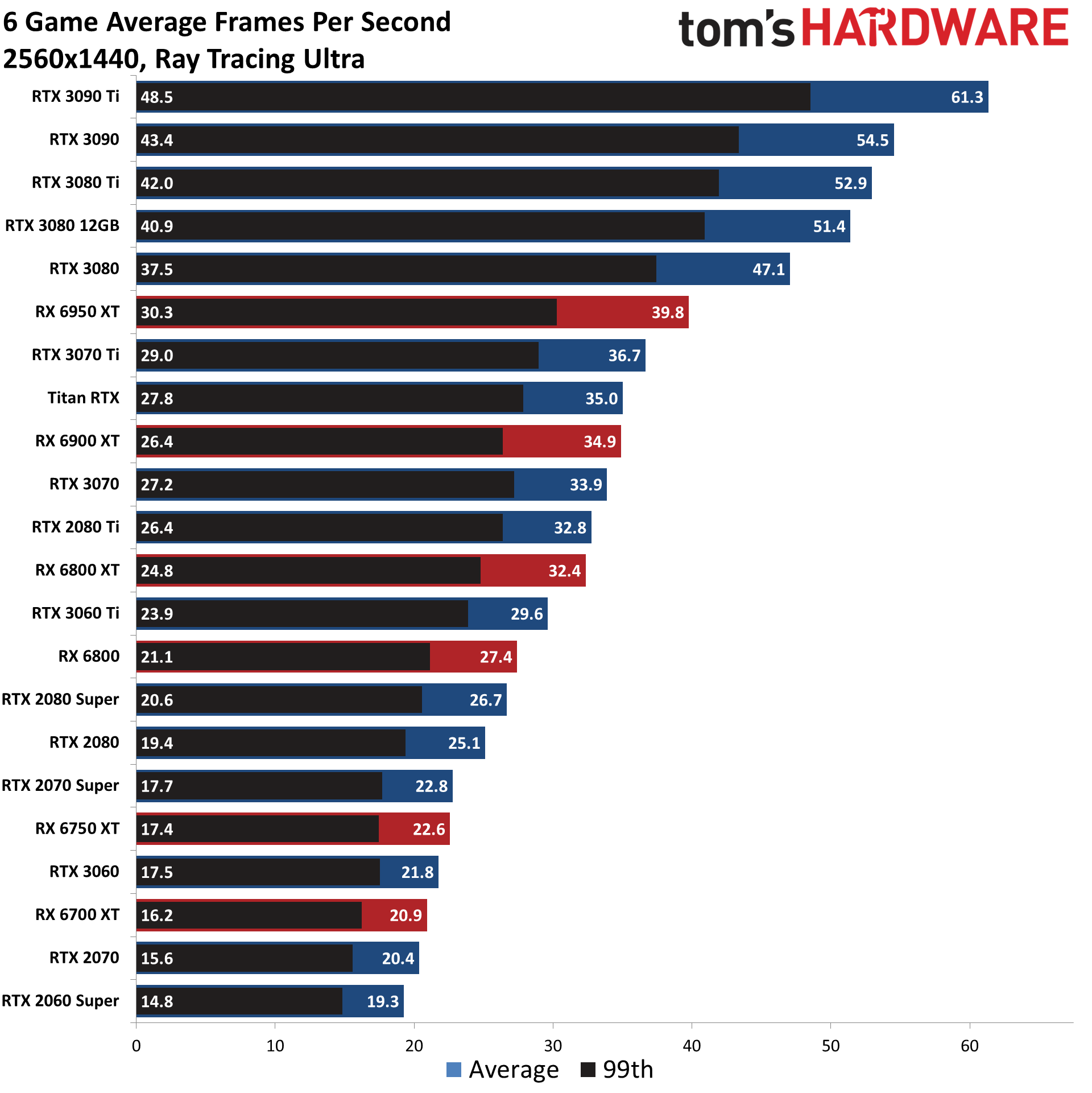

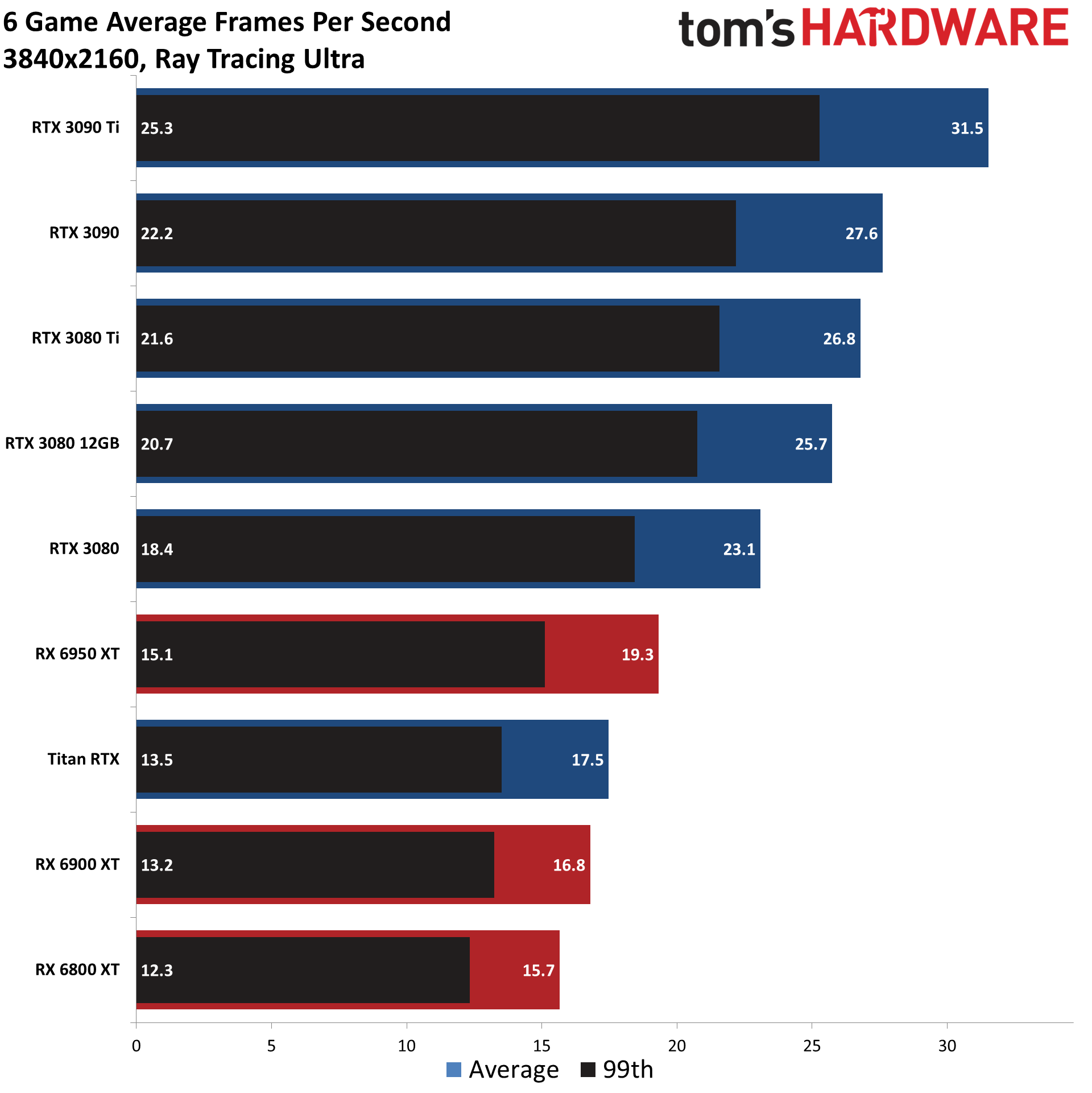

With the official launches now complete, we have created the above charts using our own suite of 14 games (six of which use DirectX Raytracing, aka DXR) run at three resolutions. All of the testing was done on a Core i9-12900K setup, with resizable BAR support enabled in the BIOS. The performance of RDNA2 and RX 6000 cards is good to great in rasterization games, but AMD generally comes up short of the competition in ray tracing workloads.

At the top, the RX 6950 XT goes up against the RTX 3090 Ti and RTX 3080 Ti. AMD leads at 1080p and 1440p in our standard suite, where the Infinity Cache benefits it the most, while Nvidia still leads at 4K. The RX 6800 XT meanwhile takes down the RTX 3080, and the RX 6700 XT splits the difference between the RTX 3070 and RTX 3060 Ti, and so on down the charts. Things do get a bit sketchy with the budget GPUs, however.

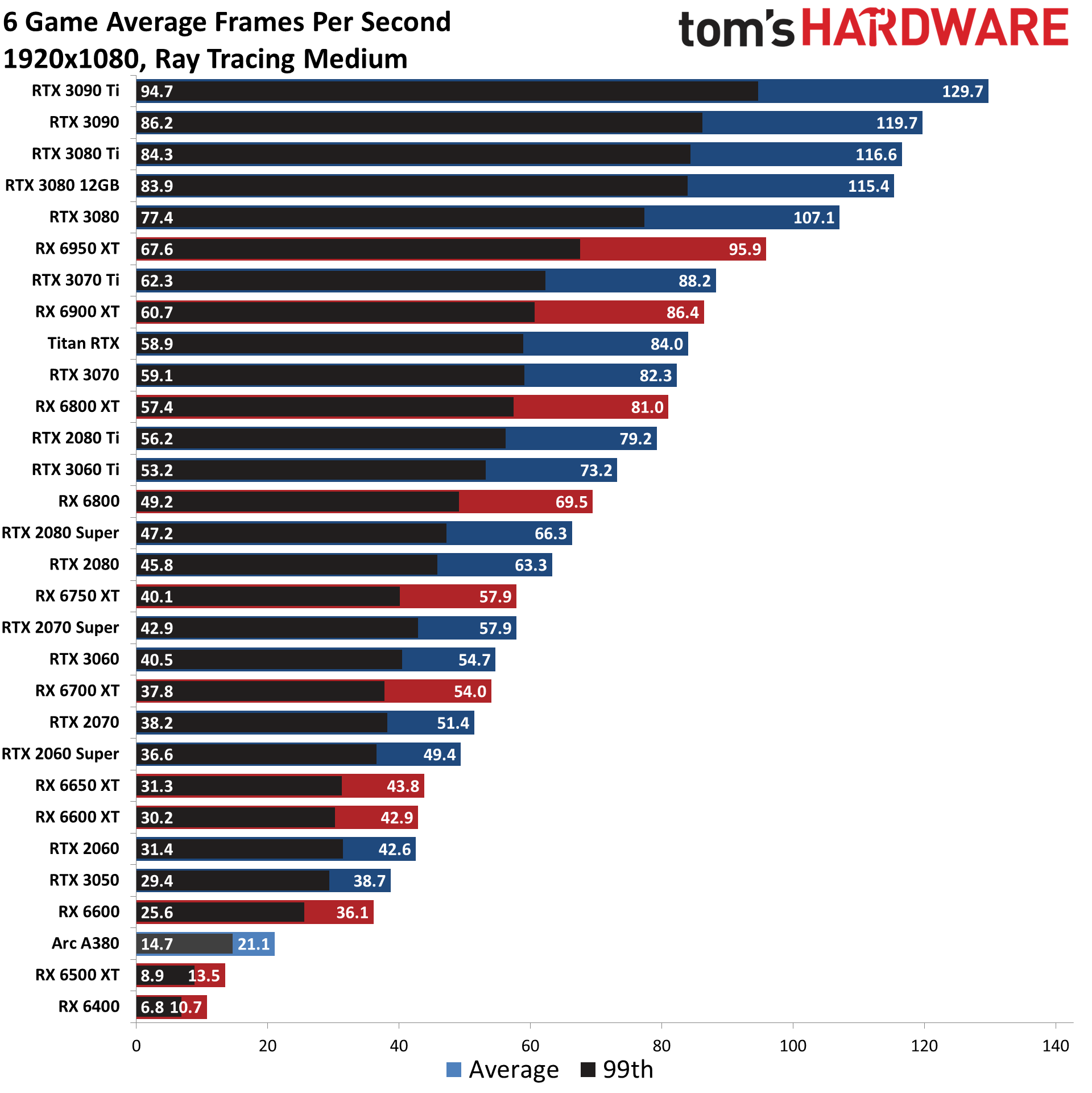

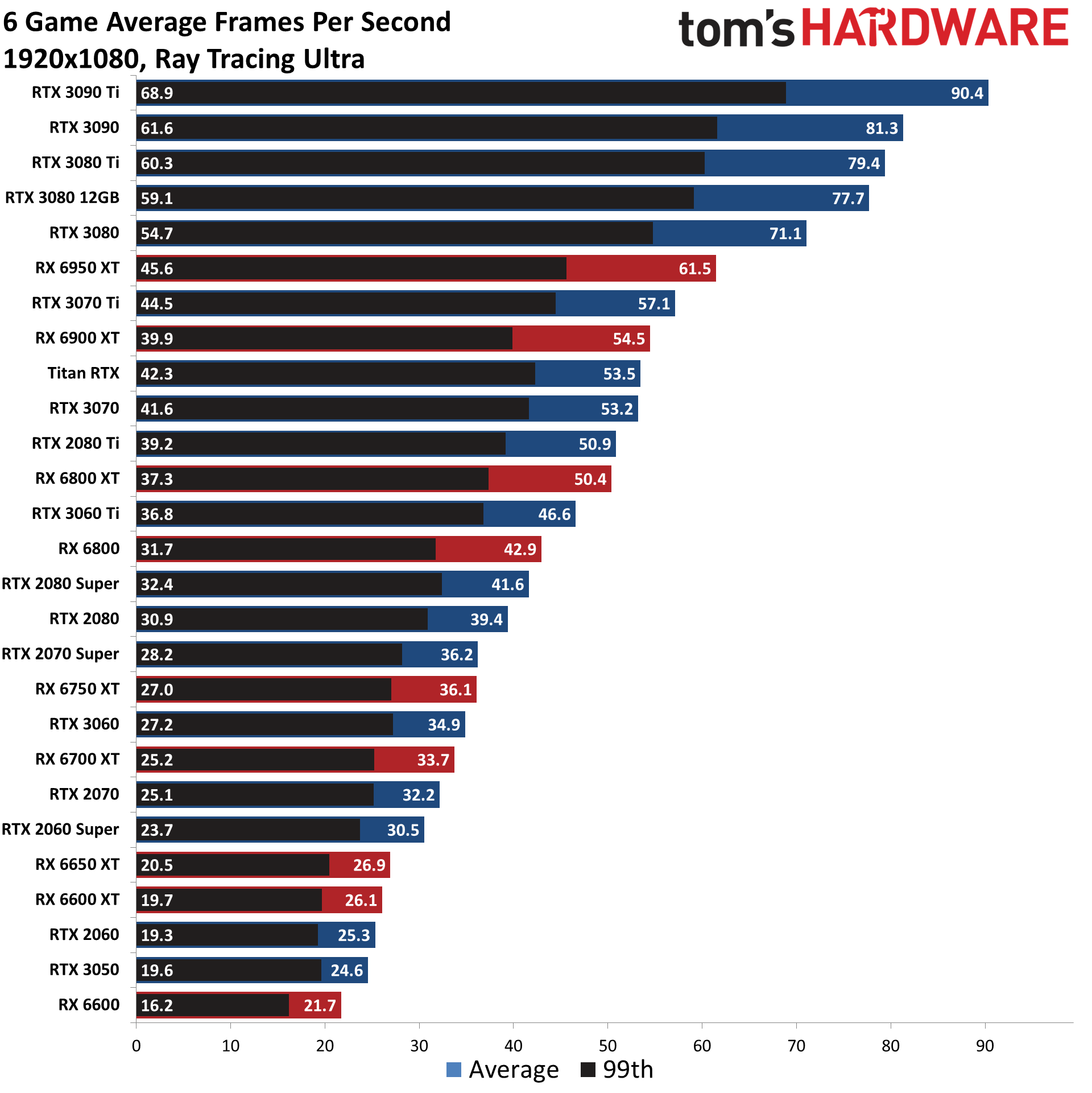

Of course, that's only in traditional rasterization games. We've used a different suite of games with ray tracing enabled, and we've run benchmarks for each of the major GPU launches of the past year. Here are the overall summary charts for 1080p, 1440p, and 4K, running natively (without DLSS).

That... doesn't look good for AMD. The Navi 24 cards can't even manage 1080p medium with DXR, and even the RX 6600 barely breaks 30 fps. AMD's fastest GPU, the RX 6950 XT, takes sixth place overall, trailing even the RTX 3080. The RX 6800 XT meanwhile falls behind the RTX 3070, the RX 6700 XT ranks below the RTX 3060, and the RX 6600 XT is just a hair faster than the old RTX 2060. Turn on DLSS and it would be a complete slaughterfest, and FSR 2.0 only partially closes the gap, since it's in far fewer games.

Note also that as more RT effects get used, Nvidia's Ampere GPUs tend to widen their performance advantage. We didn't show the individual game charts, but Minecraft as an example uses "full path tracing" and even the RTX 3090 Ti only manages 35 fps at 4K. The RX 6950 XT is half that fast, and even the RTX 3070 can outpace it in such a workload.

Big Navi and RX 6000 Closing Thoughts

AMD provided a deeper dive into the RDNA2 architecture at Hot Chips 2021. We've used several of the slides in our latest updates, but the full suite is in the above gallery for reference.

AMD had a lot riding on Big Navi, RDNA2, and the Radeon RX 6000 series. After playing second fiddle to Nvidia for the past several generations, AMD is taking its shot at the top. AMD has to worry about more than just PC graphics cards, though. RDNA2 is the GPU architecture that powers the next generation of consoles, which tend to have much longer shelf lives than PC graphics cards. Look at the PS4 and Xbox One: both launched in late 2013 and are still in use today.

If you were hoping for a clear win from AMD, across all games and rendering APIs, that didn't happen. Big Navi performs great in many cases, but with ray tracing it looks decidedly mediocre. Higher performance in games that don't use ray tracing might be more important today, but a year or two down the road, that could change. Then again, the consoles have AMD GPUs and are more likely to see AMD-specific optimizations, so AMD isn't out of the running yet.

Just as important as performance and price, though, we need actual cards for sale. There's clearly demand for new levels of performance, and every Ampere GPU and Big Navi GPU sold out at launch and continued to do so until mid-2022, when supply finally started to catch up with demand. Now we're getting ready for the next generation RDNA 3 and Ada Lovelace. There's only so much silicon to go around, and while Samsung couldn't keep up with demand for Ampere GPUs, TSMC had a lot more going on. Based on what we've seen in our GPU price index and the latest Steam Hardware Survey, Nvidia probably sold ten times as many Ampere GPUs as AMD has sold RDNA2 cards, at least in sales to gamers that might run Steam.

The bottom line is that if you're looking for a new high-end graphics card, Big Navi is a good competitor. But if you want something that can run every game at maxed out settings, even with ray tracing, at 4K and 60 fps? Not even the RTX 3090 Ti can manage that, but maybe the next-gen GPUs will clear that hurdle. And maybe their pricing and availability won't suck for two whole years.

Save us, Lovelace and RDNA3. You're our only hope! Thankfully, Ethereum mining will no longer be a thing in the near future (though a different coin might take its place).

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

animalosity said:

Unless my math is wrong; 80 Compute Units * 96 Raster Operations * 1600 MHz clock = 12.28 TFLOPS of single precision floating point (FP32).

Not bad AMD. Not bad. Let's see what that translates to in the real world, though with the advances of DX12 and now Vulkan being implemented I expect AMD to be on a more even level playing field with high end Nvidia. I might be inclined to head back to team Red, especially if the price is right.

JarredWaltonGPU said (in reply to animalosity):

Your math is wrong. :-)

FLOPS is simply FP operations per second. It's calculated as a "best-case" figure, so FMA instructions (fused multiply add) count as two operations, and each GPU core in AMD and Nvidia GPUs can do one FMA per clock (peak theoretical performance). So FLOPS ends up being:

GPU cores * 2 * clock

For the tables:

80 CUs * 64 cores/CU * 2 * clock (1600 MHz) = 16,384 GFLOPS.

ROPs and TMUs and some other functional elements of GPUs might do work that sort of looks like an FP operation, but they're not programmable or accessible in the same way as the GPU cores, so any instructions run on the ROPs or TMUs generally aren't counted as part of the FP32 performance.

animalosity said (in reply to JarredWaltonGPU):

Ah yes, I knew I was forgetting Texture Mapping Units. Thank you for the correction. I am assuming you meant 16.3 TFLOPS vice GigaFLOPS. I knew what you were trying to convey. Either way, that's some pretty impressive theoretical compute performance. Excited to see how that translates to real world performance versus some pointless synthetic benchmark.

JamesSneed said:

Speaking of FLOPS we also should note that AMD gutted most of GCN that was left especially the parts that helped compute. I fully expect the same amount of FLOPS from this architecture to translate into more FPS since they are no longer making general gaming and compute GPU but a dedicated gaming GPU.

JarredWaltonGPU said (in reply to animalosity):

Well, 16384 GFLOPS is the same as 16.384 TFLOPS if you want to do it that way. I prefer the slightly higher precision of GFLOPS instead of rounding to the nearest 0.1 TFLOPS, but it would be 16.4 TFLOPS if you want to go that route.

JarredWaltonGPU said (in reply to JamesSneed):

I'm not sure that's completely accurate. If you are writing highly optimized compute code (not gaming or general code), you should be able to get relatively close to the theoretical compute performance. Or at least, both GCN and Navi should end up with a relatively similar percentage of the theoretical compute. Which means:

RX 5700 XT = 9,754 GFLOPS

RX Vega 64 = 12,665 GFLOPS

Radeon VII = 13,824 GFLOPS

For gaming code that uses a more general approach, the new dual-CU workgroup processor design and change from 1 SIMD16 (4 cycle latency) to 2 SIMD32 (1 cycle latency) clearly helps, as RX 5700 XT easily outperforms Vega 64 in every test I've seen. But with the right computational workload, Vega 64 should still be up to 30% faster. Navi 21 with 80 CUs meanwhile would be at least 30% faster than Vega 64 in pure compute, and probably a lot more than that in games.

JamesSneed said (in reply to JarredWaltonGPU):

"Navi 21 with 80 CUs meanwhile would be at least 30% faster than Vega 64 in pure compute, and probably a lot more than that in games."

Was my point ^ We will see more FPS in games than the FLOPS is telling us. It is not a case of FLOPS being 30% higher so we can expect that much more gaming performance; it won't be linear this go around.

JarredWaltonGPU said (in reply to JamesSneed):

I agree with that part, though it wasn't clear from your original post that you were saying that. Specifically, the bit about "AMD gutted most of GCN that was left especially the parts that helped compute" isn't really accurate. AMD didn't "gut" anything -- it added hardware and reorganized things to make better use of the hardware. And ultimately, that leads to better performance in nearly all workloads.

Interesting thought:

If AMD really does an 80 CU Navi 2x part, at close to the specs I listed, performance should be roughly 60% higher than RX 5700 XT. Considering the RTX 2080 Ti is only about 30% faster than RX 5700 XT, that would actually be a monstrously powerful GPU. I suspect it will be a datacenter part first, if it exists, and maybe AMD will finally get a chance to make a Titan killer. Except Nvidia can probably get a 40-50% boost to performance over Turing by moving to 7nm and adding more cores, so I guess we wait and see.

jeremyj_83 said (in reply to JarredWaltonGPU):

Looking at the numbers AMD could get an RX 5700XT performance part in a 150W envelope if their performance/watt numbers can be believed. Having a 1440p GPU in the power envelope of a GTX 1660 would be a killer product.

JamesSneed said:

I am expecting INT8 performance to not move much from the RX5700XT. Shall see though as they do need to handle ray tracing.