Ashes Of The Singularity Beta: Async Compute, Multi-Adapter & Power

DirectX 12 has been available since Windows 10 launched, but there aren't any games for it yet, so we're using the Ashes of the Singularity beta to examine DX12 performance.

Introduction And Test System

We've benchmarked unfinished games several times in our reviews, most recently in our Radeon R9 Nano launch article. Back then, we found some driver issues and, even worse, hardware problems on Nvidia graphics cards. It didn't take that company long to respond with new software that not only helped overcome those technical shortcomings, but even turned the performance story around, enabling a small lead over the competition.

This time around was a bit different. Since AMD provided us with an optimized launch driver, we asked Nvidia if it had one for us as well. Company reps didn't seem too pleased with the question and pointed us to its current WHQL-certified driver, version 361.91. That was all Nvidia would say on the matter.

As a game, Ashes of the Singularity is really the perfect showcase for DirectX 12. It features many small AI-controlled entities moving across a large area. This cuts both ways: it shows off the API's benefits nicely, but we also have to recognize that those gains will probably be less pronounced in titles that don't exploit DirectX 12's new features as heavily.

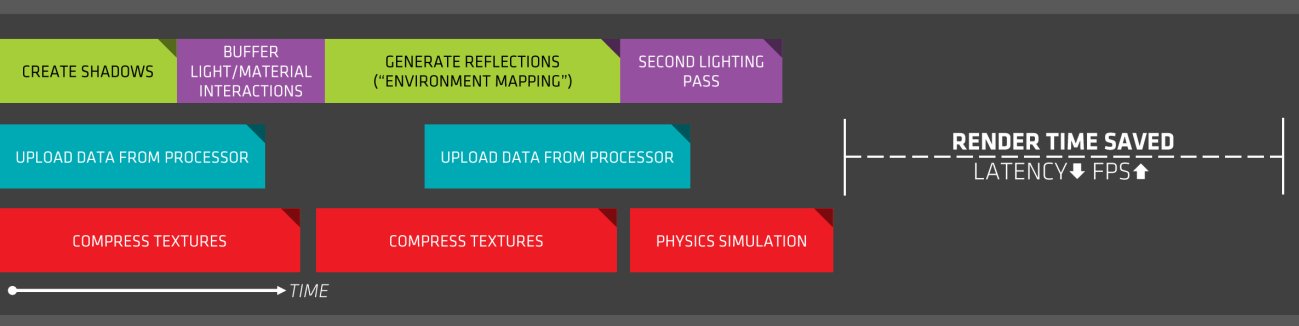

The capability making headlines lately is called Asynchronous Shading/Compute. It allows graphics tasks (shading) and general calculations (compute) to execute in parallel and asynchronously, meaning independently of each other's order. If correctly implemented in hardware and software, the technology can significantly reduce latency, which in turn results in higher performance.

Let's review a diagram of how tasks are handled in DirectX 11. They are executed one by one in a fixed order. This order can't be changed, and the only way to achieve efficiency is to split up the queue and keep it relatively short.

In DirectX 12, the queue can be split so that tasks are completed at the same time and somewhat offset from each other. This works fairly well for the benchmark we're using today, so we'll actually see its potential in practice. Just remember that how much of a difference this feature makes depends on how much the tasks in a particular game benefit from being executed in parallel. There won't be any benefit if tasks depend on other tasks' results, or if the overhead of managing all the tasks outweighs the gains from asynchronous execution.
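The trade-off described above can be made concrete with a toy scheduling model. The sketch below is not how Direct3D 12 or any driver schedules work: the `Task` type, the queue names and all durations are made up for illustration, and it assumes two queues can overlap perfectly, which real GPUs only approximate because the queues share execution units.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    queue: str               # hypothetical queue label: "graphics" or "compute"
    duration_ms: float       # made-up cost, for illustration only
    depends_on: tuple = ()   # names of tasks that must finish first


def serial_time(tasks):
    """Single-queue model: every task waits for the one before it."""
    return sum(t.duration_ms for t in tasks)


def async_time(tasks, overhead_ms=0.0):
    """Multi-queue model: queues overlap, but a task still waits for
    earlier work on its own queue and for every dependency.
    Tasks must be listed in submission order (dependencies first)."""
    finish = {}       # task name -> completion time
    queue_free = {}   # queue name -> time the queue becomes idle
    for t in tasks:
        start = queue_free.get(t.queue, 0.0)
        for dep in t.depends_on:
            start = max(start, finish[dep])
        end = start + t.duration_ms + overhead_ms
        finish[t.name] = end
        queue_free[t.queue] = end
    return max(finish.values())


# Four tasks with no dependencies between them.
independent = [
    Task("gbuffer", "graphics", 5.0),
    Task("particles", "compute", 4.0),
    Task("shading", "graphics", 3.0),
    Task("ai_sim", "compute", 3.0),
]

# Same work, but each task needs the previous task's result.
chained = [
    Task("gbuffer", "graphics", 5.0),
    Task("particles", "compute", 4.0, ("gbuffer",)),
    Task("shading", "graphics", 3.0, ("particles",)),
    Task("ai_sim", "compute", 3.0, ("shading",)),
]

print(serial_time(independent))                   # 15.0
print(async_time(independent))                    # 8.0
print(async_time(chained))                        # 15.0
print(async_time(independent, overhead_ms=4.0))   # 16.0
```

With independent work, the two-queue model finishes in 8 ms instead of 15 ms. The chained list gains nothing, and a 4 ms per-task management cost makes the split slower than the serial run.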

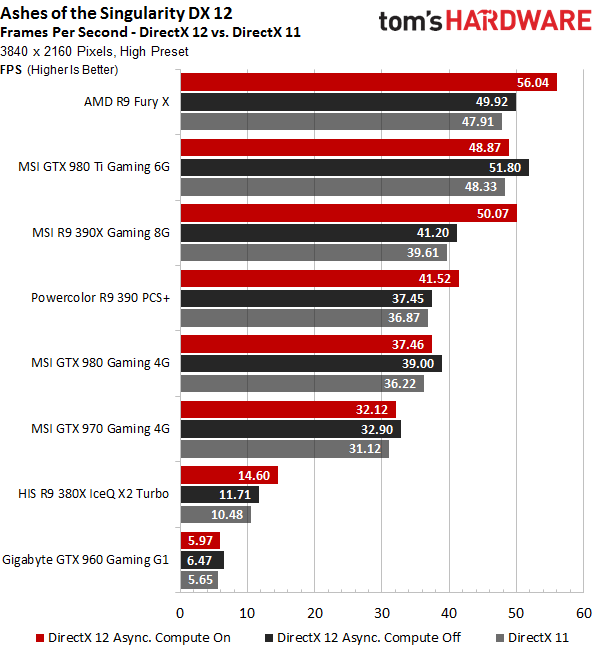

Several revisions of AMD's GCN architecture include provisions for this functionality, so its Tonga, Hawaii and Fiji GPUs fare the best with asynchronous shading/compute. Nvidia's Kepler and Maxwell architectures are having a much harder time. The company is trying to compensate with software-based solutions instead.


The following graph clearly shows that Nvidia isn't there yet. After activating the new DirectX 12 feature in the .ini file, AMD's performance increases markedly, whereas Nvidia's actually gets slightly worse. The option to switch this setting on and off convinced us to leave DirectX 11 alone completely and focus all of our efforts on DirectX 12 and asynchronous compute. To keep things fair, we're testing all graphics cards with the setting that works best for them.


Test System

We're using the same test system we've relied on for quite a while, without any changes:

| Technical Specifications | |
|---|---|
| Test System | Intel Core i7-5930K at 4.2GHz<br>Alphacool Water Cooler (NexXxos CPU Cooler, VPP655 Pump, Phobya Balancer, 24cm Radiator)<br>Crucial Ballistix Sport, 4x 4GB DDR4-2400<br>MSI X99S XPower AC<br>1x Crucial MX200, 500GB SSD (System)<br>1x Corsair Force LS 960GB SSD (Applications, Data)<br>be quiet! Dark Power Pro, 850W PSU<br>Windows 10 Pro (All Updates) |
| Drivers | AMD: Radeon Software 15.301 B35 (Press Beta Driver, February 2016)<br>Nvidia: ForceWare 361.91 WHQL |
| Gaming Benchmarks | Ashes of the Singularity Beta 2 (Press) |

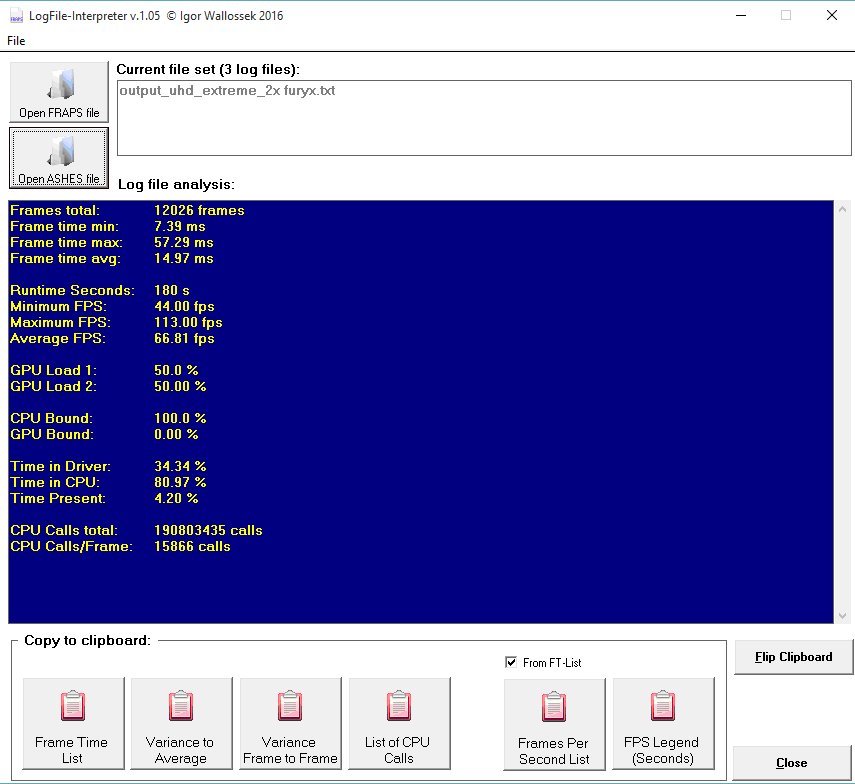

Since the benchmark provides us with a very interesting log file that contains a lot of details (and not just the overall results), we updated our interpreter to take full advantage of it by looking at important things like frame times.
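As a rough illustration of why frame times matter beyond the overall result, the sketch below derives average FPS and a 99th-percentile frame time from a list of per-frame render times. The benchmark's actual log format is not described here, so the input is simply assumed to be a list of frame times in milliseconds; `frame_time_stats` and the sample values are hypothetical, and this is not the interpreter used for the charts.

```python
import math


def frame_time_stats(frame_times_ms):
    """Summarize per-frame render times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    ordered = sorted(frame_times_ms)
    count = len(ordered)
    total_ms = sum(ordered)
    # Nearest-rank percentile: the smallest value that has at least
    # 99% of all frames at or below it.
    rank = math.ceil(0.99 * count)
    return {
        "avg_fps": count / (total_ms / 1000.0),
        "avg_ms": total_ms / count,
        "p99_ms": ordered[rank - 1],
        "worst_ms": ordered[-1],
    }


# Made-up sample: nine smooth frames and one long one.
sample = [16.0] * 9 + [40.0]
stats = frame_time_stats(sample)
print(stats)
```

In this made-up sample the average works out to roughly 54 FPS, yet one frame took 40 ms, a stutter that the average alone would hide.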

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

-

In other words DX12 is business gimmick which doesn't translate to squat in real game scenario and I am glad I stayed on Windows 7...running crossfire R9 390x.

-

FormatC: Especially Hawaii / Grenada can benefit from asynchronous shading / compute (and your energy supplier). :)

-

17seconds: An AMD sponsored title that shows off the one and only part of DirectX 12 where AMD cards have an advantage. The key statement is: "But where are the games that take advantage of the technology?" Without that, Async Compute will quickly start to take the same road taken by Mantle, remember that great technology?

-

FormatC:

> An AMD sponsored title

Really? Sponsoring and knowledge sharing are two pairs of shows. Nvidia was invited too. ;)

> Async Compute will quickly start to take the same road taken by Mantle

Sure? The design of the most current titles and engines was started long time before Microsoft started with DirectX 12. You can find the DirectX 12 render path in first steps now in a lot of common engines and I'm sure that PhysX will faster die than DirectX12. Mantle was the key feature to wake up MS, not more. And: it's async compute AND shading :)

-

turkey3_scratch: Well, there's no denying that for this game the 390X sure is great performing.

-

James Mason:

> > An AMD sponsored title
>
> Really? Sponsoring and knowledge sharing are two pairs of shows. Nvidia was invited too. ;)
>
> > Async Compute will quickly start to take the same road taken by Mantle
>
> Sure? The design of the most current titles and engines was started long time before Microsoft started with DirectX 12. You can find the DirectX 12 render path in first steps now in a lot of common engines and I'm sure that PhysX will faster die than DirectX12. Mantle was the key feature to wake up MS, not more. And: it's async compute AND shading :)

Geez, Phsyx has been around for so long now and usually only the fanciest of games try and make use of it. It seems pretty well adopted, but it's just that not all games really need to add an extra layer of physics processing "just for the lulz."

-

Wisecracker: Thanks for the effort, THG! Lotsa work in here.

What jumps out at me is how the GCN Async Compute frame output for the R9 380X/390X barely moves from 1080p to 1440p ---- despite 75% more frames. That's sumthin' right there.

It will be interesting to see how Pascal responds ---- and how Polaris might *up* AMD's GPU compute.

Neat stuff on the CPU, too. It would be interesting to see how i5 ---> i7 hyperthreads react, and how the FX 8-cores (and 6-cores) handle the increased emphasis on parallelization.

You guys don't have anything better to do .... right? :)

-

For someone who runs Crossfire R9 390x (three cards) DX12 makes no difference in term of performance. For all BS Windows 10 brings not worth *downgrading to considering that lot of games under Windows 10 are simply broken or run like garbage where no issue under Windows 7.

-

ohim:

> An AMD sponsored title that shows off the one and only part of DirectX 12 where AMD cards have an advantage. The key statement is: "But where are the games that take advantage of the technology?" Without that, Async Compute will quickly start to take the same road taken by Mantle, remember that great technology?

Instead of making random assumptions about the future of DX12 and Async shaders you should first be mad at Nvidia for stating they have full DX12 cards and that`s not the case, and the fact that Nvidia is trying hard to fix this issues trough software tells a lot.

PS: it`s so funny to see the 980ti being beaten by 390x :)

-

cptnjarhead:

> For someone who runs Crossfire R9 390x (three cards) DX12 makes no difference in term of performance. For all BS Windows 10 brings not worth *downgrading to considering that lot of games under Windows 10 are simply broken or run like garbage where no issue under Windows 7.

There are no DX12 games yet for review, so why would you assume that you should see better performance in games made for windows 7 DX11, in win10 DX12? Especially in "tri-Fire". DX12 has significant advantages over DX11 so you should wait till these games actually come out before making assumptions on performance, or the validity of DX12's ability to increase performance.

My games, FO4, GTAV and others run better in Win10 and i have had zero problems. I think your issue is more Driver related, which is on AMD's side, not MS's operating system.

I'm on the Red team by the way.