Larrabee, CUDA And The Quest For The Free Lunch

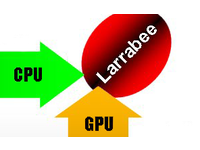

Opinion - Intel unveiled some key details about its upcoming Larrabee accelerator/discrete graphics architecture earlier this week, sparking speculation about how this new technology will stack up against what is already on the market. We had some time to digest the information and talk to developers, and it appears clear that there is more to Larrabee than meets the eye. For example, we are convinced that Larrabee is much more than a graphics card and will debut as a graphics card for one particular reason. While consumers may be interested in graphics performance, developers are more interested in typical versus peak performance and in how much performance they get in exchange for how much coding effort: If Larrabee can deliver what Intel claims, and if Intel can convince developers to work with this new architecture, then general computing could see its most significant performance jump yet.

Intel engineers are visibly excited that they could finally disclose some details about Larrabee this week. And while we agree that it is a completely new computing product that will help usher in a new phase of high-performance computing on professional and consumer platforms, the obvious questions remain whether Larrabee can succeed and what Intel will have to do to make it succeed. If you look at these questions closely, the bottom line is very simple: It comes down to people purchasing the product and developers writing software for it.

It is one of those chicken-and-egg problems. Without applications, there is no motivation for consumers to buy Larrabee cards. Without Larrabee cards being bought by consumers, there is no motivation for developers to build applications. So, how do you get consumers to buy Larrabee and how do you get developers to write for Larrabee?

The answer to the first question is relatively simple. Intel is positioning Larrabee in a market that already exists and has plenty of applications: Gaming. Intel told us that Larrabee will support all existing APIs (and more), which should enable gamers to run games on it. How well Larrabee can run games is unknown to us and, according to Intel, a "secret". But it would be strategic and financial suicide for Intel if Larrabee were not competitive with the best graphics cards available at the time of its debut. Nvidia, for example, believes that Larrabee needs to be a "killer product" in order to be successful. Will that be the case? No one knows. Not even Intel, unless the company has spies in the right places within Nvidia.

However, graphics appears to be only the first phase for Larrabee. The extra features, which Intel says will allow developers to innovate, are the more interesting part. In the end, Larrabee is a potent accelerator board that just happens to be able to run game graphics and play back video. But Intel wants developers to do much more with it: Like GPGPUs, Larrabee could ignite a whole new wave of floating-point accelerated (visual computing) applications far beyond gaming. If Intel can get tens of millions of Larrabee cards into the market by selling Larrabee as a graphics card, the company may be able to motivate developers to go beyond games and take advantage of Larrabee's advanced cGPU features.

That, of course, is a milestone Nvidia has already reached. There are more than 70 million GeForce GPUs in consumer PCs that can be used as general-purpose GPUs (GPGPUs) and run applications developed and compiled with CUDA. Nvidia has been actively educating students and lecturers on CUDA around the country: It has developed course material, and Nvidia employees have taught CUDA classes themselves for several years at numerous U.S. universities. Nvidia undeniably has time on its side. By the time the first Larrabee card is sold, Nvidia will have sold more than 100 million CUDA-capable cards. The company has learned its lessons and has established developer relations. Intel will have to catch up, and it plans to do so by selling Larrabee as a graphics card to consumers and promoting it as a product that can be programmed as easily as your average x86 processor. If Larrabee is a success with consumers, developers will suddenly have a choice. (We leave ATI’s stream processors out of consideration, since AMD still has work to do: It needs to create mainstream appeal for its stream processor cards, improve its software platform and promote its Radeon chips as GPGPU engines.) Which way will they go?

After our initial conversations with a few developers, the trend appears to be the quest for the free lunch. In other words, developers are looking for a platform that is easily approachable and that, ideally, lets them run and scale their applications in a multi-threaded environment without having to understand the hardware. In a way, this is exactly what Nvidia is promising with CUDA, and it is what Intel is saying about Larrabee. Nvidia has always said that CUDA is just a set of C++ extensions, and Intel says that Larrabee runs plain C++ the way an x86 processor would run it. If you ask Nvidia about Larrabee, you will hear that the company doubts this is the case and argues that applications will not scale as easily as Intel says, at least not without the proper hardware knowledge. If you ask Intel about CUDA, you may hear that the C++ explanation is an oversimplification and that you do need knowledge of the hardware (the memory architecture, for example) to exploit the horsepower of the GPGPU.


Our developer sources partially confirmed and partially denied those claims. On Nvidia’s side, it appears that even a carelessly programmed CUDA application still runs faster than what you would get from a CPU, but you do need sufficient knowledge of the GPU hardware, such as the memory architecture, to get to the heart of the acceleration. The same is true for Intel’s Larrabee: The claim that developers simply need x86 knowledge to program Larrabee applications is not entirely correct. While Larrabee may accelerate even applications that are not written for it, the point of Larrabee is to tap its full potential, and that is only possible through the vector units, which require vectorized code. Without hand vectorization, you will have to rely on the compiler to vectorize for you, and it is no secret that this automated approach rarely works as well as hand-crafted code. Long story short: Both CUDA and Larrabee development benefit from an understanding of the hardware. Both platforms promise decent performance without fine-tuning and without knowledge of the hardware. But there seems to be little doubt at this time that developers who understand the devices they are developing for will have a big advantage.
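To make the vectorization point concrete, here is a minimal C++ sketch. It uses x86 SSE intrinsics from `<xmmintrin.h>` as a stand-in, because SSE runs on any ordinary x86 CPU. This is not Larrabee's own vector instruction set. The function names `add_scalar` and `add_sse` are hypothetical examples of ours. The scalar loop adds one pair of floats per iteration. The hand-vectorized loop adds four floats at a time in a 128-bit register and then finishes any leftover elements with a scalar tail.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>
#include <xmmintrin.h>  // SSE intrinsics: x86/x86-64 compilers only (an assumption of this sketch)

// Scalar loop: one float addition per iteration.
void add_scalar(const std::vector<float>& a, const std::vector<float>& b,
                std::vector<float>& out) {
    assert(a.size() == b.size() && b.size() == out.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        out[i] = a[i] + b[i];
    }
}

// Hand-vectorized loop: each _mm_add_ps adds four floats at once.
void add_sse(const std::vector<float>& a, const std::vector<float>& b,
             std::vector<float>& out) {
    assert(a.size() == b.size() && b.size() == out.size());
    const std::size_t n = a.size();
    std::size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        __m128 va = _mm_loadu_ps(&a[i]);             // load 4 floats, no alignment required
        __m128 vb = _mm_loadu_ps(&b[i]);
        _mm_storeu_ps(&out[i], _mm_add_ps(va, vb));  // store 4 sums
    }
    // Scalar tail: handles the last n % 4 elements.
    for (; i < n; ++i) {
        out[i] = a[i] + b[i];
    }
}
```

Both functions produce identical results. GCC and Clang will often vectorize a loop this simple on their own at higher optimization levels. The gap between compiler output and hand-written code tends to show up in less regular code, where the compiler cannot prove that vectorizing is safe. Intel's paper describes Larrabee's vector unit as 16 lanes wide rather than SSE's four, so the difference between the two approaches is larger on Larrabee.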

Interestingly, we talked to developers who believed that Larrabee will not be able to handle essential x86 capabilities, such as system calls. In fact, Intel’s published Larrabee document clearly supports this conclusion. However, Intel confirmed that Larrabee can do everything an x86 CPU can do, and that some of these features are actually achieved through a micro OS running on top of the architecture. We got the impression that developers will closely watch how Larrabee supports essential x86 features and how well it handles them.

A key Intel criticism of CUDA is its lack of flexibility, in particular the fact that its compiler is tied to GPUs. This claim may be true at this time, but it could evaporate within a matter of days. Nvidia says CUDA code can run on any multi-core platform. To prove its point, the company is about to roll out an x86 CUDA compiler. Our sources indicate that the software will be available as a beta by the time the company’s Nvision08 trade show opens its doors. In that case, CUDA could be considered much more flexible than Larrabee, as it would support x86 as well as GPUs (and possibly even AMD/ATI GPUs). Although Intel often describes x86 programming as the root of all programming, the company will have to realize that CUDA may have an edge at this point. The question will be how well CUDA code runs on x86 processors.

There is no doubt that Intel will put all of its marketing and engineering might behind Larrabee. In the end, we perceive it as a product that bridges into the company’s many-core future. It will be critical for the company to succeed on all fronts and to win all of its battles. The hardware needs to deliver, and the company will have to convince developers of the benefits of Larrabee’s vector units, just as it convinced developers to adopt SSE in its x86 CPUs. There are roadblocks, and Intel’s success is not a done deal.

And, of course, Nvidia is not sitting still. The green team is more powerful than ever and has the leading product on the market right now. I doubt that Nvidia will simply hand the market over to Intel. However, AMD has learned what an enormous impact Intel’s massive resources can have. Nvidia should not take this challenge lightly and should accelerate its development efforts in this space. I have said it before and I will say it again: GPGPU and cGPU computing is the most exciting opportunity in computing hardware I have seen in nearly two decades, and there is no doubt in my mind that it will catch fire in corporate and consumer markets as soon as one company gets it right.

Personally, I don’t care whether it will be Nvidia or Intel (or AMD?). The fact is that competition is great, and I would hate to see only one company in this segment. Intel’s entry into this market will drive innovation, and it will be interesting to see two of the most powerful semiconductor firms compete in this relatively new market.

So, who will offer free lunch?

Wolfgang Gruener is an experienced professional in digital strategy and content, specializing in web strategy, content architecture, user experience, and applying AI in content operations within the insurtech industry. His previous roles include Director, Digital Strategy and Content Experience at American Eagle, Managing Editor at TG Daily, and contributing to publications like Tom's Guide and Tom's Hardware.


Mr_Man: It seems to me that AMD/ATI would be the best place to look for this kind of thing. It's interesting that Intel and nVidia are the front runners right now. Maybe AMD/ATI will have a different perspective? Selling platforms, perhaps?

Blessedman: For CUDA to work as Nvidia wishes, it will need to support AMD/ATI cards and CPUs, which is probably why Nvidia is helping that kid port CUDA for use with ATI chipsets.

falchard: Larrabee looks disappointing as a video card. It really isn't a discrete video card but a processor on a PCI-e port, much like the Cell Processor right now. If it comes to competing with discrete graphics cards over rendering, I think the graphics cards win because they are more specialized to their task. Processors right now really aren't capable of rendering simple scenes at a playable frame rate.

I think this also applies to simulations.