Radeon HD 5850: Knocking Down GTX 295 In CrossFire

Power Consumption And Noise

We already saw in our Radeon HD 5870 review the effects of ATI’s power-saving efforts on this generation of hardware.

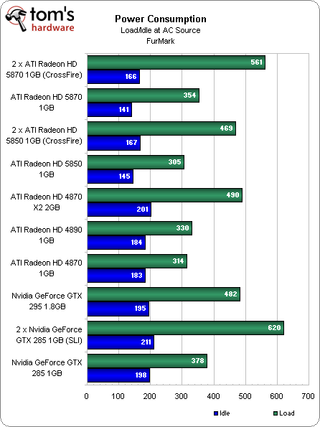

Those results carry over fairly evenly here. As expected, a single Radeon HD 5850 and 5870 idle at roughly the same power levels. And as a load is applied, the lower clocks and disabled sections of the GPU help shave off as much as 49W, even more than the 37W ATI told us to expect. Those savings nearly double when a second card is added: a Radeon HD 5850 CrossFire setup uses 92W less than a 5870 CrossFire arrangement under load.
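For readers who want to check the arithmetic behind those load figures, here is a minimal Python sketch. It uses only the three numbers quoted above (49W, 92W, and ATI's 37W estimate). The function name and the idea of averaging the CrossFire savings per card are illustrative assumptions, since the measurements are taken at the system level rather than per board.

```python
# Figures quoted in the text above (watts saved under load versus the Radeon HD 5870).
SINGLE_CARD_SAVINGS_W = 49    # one 5850 vs. one 5870 (the "as much as" figure)
CROSSFIRE_SAVINGS_W = 92      # two 5850s vs. two 5870s
ATI_EXPECTED_SAVINGS_W = 37   # savings ATI said to expect for one card


def per_card_savings(total_savings_w: float, card_count: int) -> float:
    """Average load-power savings per card (hypothetical helper, simple division)."""
    return total_savings_w / card_count


crossfire_per_card = per_card_savings(CROSSFIRE_SAVINGS_W, 2)
scaling = CROSSFIRE_SAVINGS_W / SINGLE_CARD_SAVINGS_W
margin_over_estimate = SINGLE_CARD_SAVINGS_W - ATI_EXPECTED_SAVINGS_W

print(f"Per-card savings in CrossFire: {crossfire_per_card:.0f} W")
print(f"Savings scale by {scaling:.2f}x with a second card")
print(f"Single-card savings exceed ATI's estimate by {margin_over_estimate} W")
```

In other words, the second card adds slightly less than a full extra helping of savings, which is why the CrossFire gap lands just short of double the single-card gap.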

Incidentally, the two 5850s also use less energy than a single Radeon HD 4870 X2, a single GeForce GTX 295, and two GeForce GTX 285s. We’d hesitate to call the Radeon HD 5850 a “green” graphics card, but it is the most energy-efficient high-end board ATI has released since the original Radeon HD 4870.

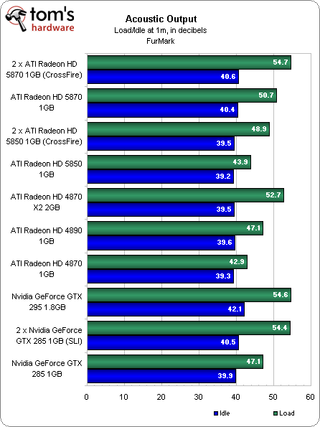

Here’s something special. While there’s little difference between any of these new 5800-series cards at idle, one Radeon HD 5850 under load is almost as quiet as a Radeon HD 4870, and two under load are quieter than a single Radeon HD 5870 at full tilt.

Perhaps more impressive was the fact that the two Radeon HD 5850s never broke 90 degrees Celsius under load, whereas the Radeon HD 5870s were easily made to throttle at 100 degrees. Cooler, quieter, and more power-friendly. Nice combination.


duckmanx88 Another great article. Can you guys add these to your 2009 charts, please? And the new i5 and i7 CPUs too, please! =)

jj463rd Quote "ATI has two cards that are faster than its competitor’s quickest single-GPU board. My, how times have changed." LoL

Yep I was looking at the Radeon 5850 especially CF'd for a build.

The Radeon 5870's seem a bit pricey to me so I'd prefer 2 5850's.

I can wait till they become available.

Thanks for the great review. Very impressive scores from the 5850.

coonday Ball's in your court now, Nvidia. Time to stop whining and bring some competition to the table.

Annisman Hi, very very good article. It's nice to see my two 5870's at the top of every chart destroying every game out there!

I hope you guys will go into more detail about how you run your benchmarks for games. When I compare my own results, sometimes I wonder if you are using in-game FRAPS results or a benchmark tool, such as the one in Crysis, to get your results. This is very important for me to know. Please dedicate a small portion of reviews to letting us know exactly what part of the game you benched, and in what fashion; it will be very helpful. Also, it would be great to see exactly what settings were used in games. For example, you state that you set GTA4 to the 'highest' settings. However, without 2GB of VRAM, the texture settings can only be set to Med. unless you are compromising in the view distance category or somewhere else. So maybe a screenshot of the settings you used should be included. I would like to see this become regular in Tom's video card reviews. Great article, and please consider my requests.

Kl2amer Solid review. Now we just have to wait for aftermarket coolers/designs to get them quieter and even cooler.

megamanx00 Glad that 5850 is shorter, but I'll probably wait till Sapphire or Asus put out cards with a cooler better than the reference. Damn I want one now though :D.

JohnnyLucky Another interesting article. I'm almost tempted to get a 5850. I'm just wondering how power consumption during Furmark, which is a rigorous stress test, compares to power consumption during gaming. Am I correct in assuming power consumption during a typical gaming session would be less? If I'm not mistaken, ATI is recommending a 600 watt power supply with 40 amps on the 12 volt rail(s) for a system with two 5850's in CrossFire mode.

cangelini @Annisman Usually try to include them on a page in the review. Anything more detailed you'd like, feel free to let me know and I'm happy to oblige!

SchizoFrog It does seem that the 5850 is a great £200 card and definitely the option to go for if you are buying today. I pride myself on getting good performance at great value, and the test of this is to try to get my GPU to last 2 years and still be playing high-end games. My current O/C 9600GT 512MB, which cost me a huge £95 18 months ago, is doing just that right now. So, for a £200 DX11 GPU, the 5850 is on its own and a great buy by default. However, and this is a big however! While Windows 7 will support DX11 and a few upcoming games will use a few visual effects based on DX11, nothing else does, and certainly there are no true DX11 games and won't be for some time, as nearly all games released these days are developed with the console market in mind. So I for one will wait. I will wait for nVidia to decide it is time to launch their DX11 GPU's. Either their GPU's will push them firmly back to the top or at least drive ATi's prices down.
