MIT CSAIL Researchers Show How Vulnerable AI Is To Hacking

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have shown that even the most advanced artificial intelligence, such as Google’s image recognition AI, can be fooled by hackers. Their new method also performs the hack 1,000x faster than existing approaches.

Changing What The AI Sees

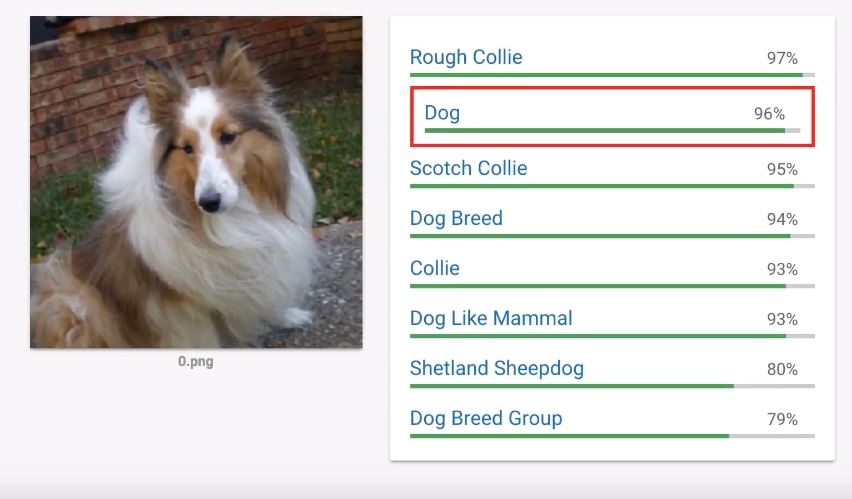

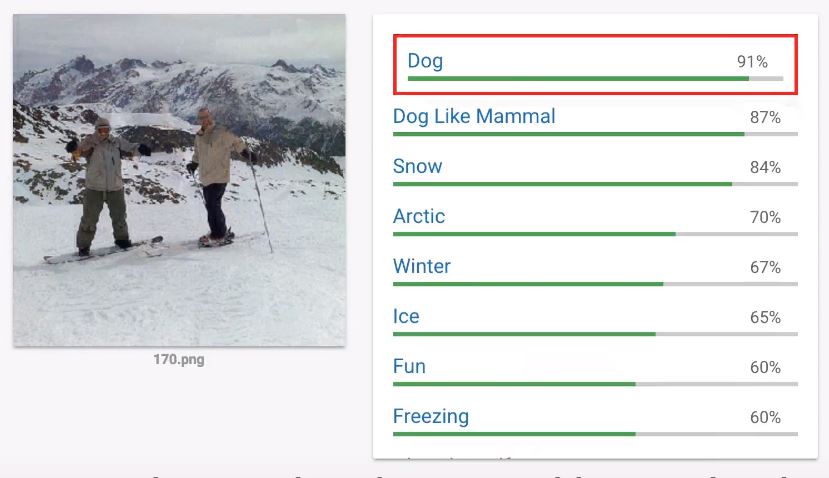

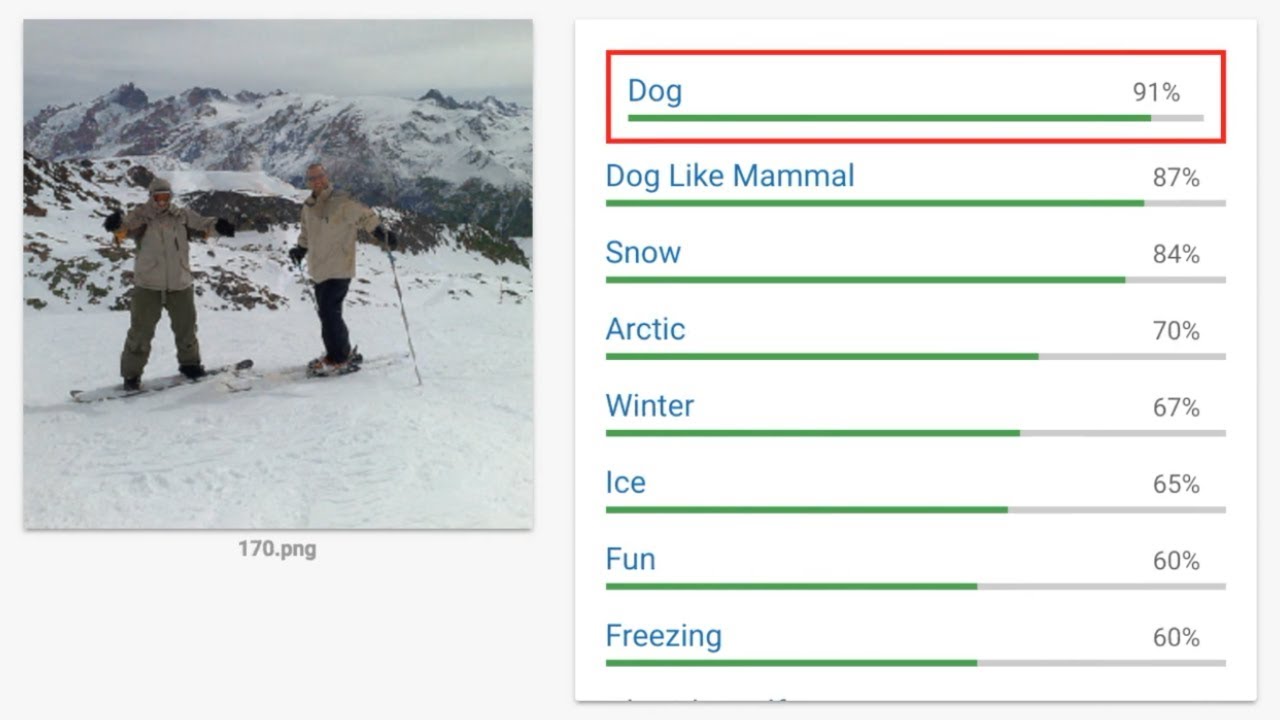

The students were able to change an image pixel by pixel into a completely different object while still maintaining the original classification of the object in the picture. For instance, the researchers could turn a picture of a dog into one showing two skiers on a mountain, while the AI algorithm would still believe the picture is one of a dog.

The team tested this on Google’s Cloud Vision API, but they said the hack could work against image recognition algorithms from Facebook or other companies.

The MIT researchers warned that this type of technology could be used to trick TSA’s own threat recognition algorithms into thinking that, for instance, a turtle is a gun, or vice-versa. Such hacks could also be employed by terrorists to disguise their bombs from TSA’s image recognition systems.

This type of hack could also work against self-driving cars. There is already a danger that self-driving cars could be fooled by physical attacks, in which malicious people alter road signs or other road elements to make autonomous cars cause accidents. This AI hack could take it one step further by fooling cars into “seeing” things that aren’t there at all.

Carmakers are in a hurry to prove that their cars can reach “Level 5” autonomy, but even if they can achieve that soon, and their cars can drive perfectly on any road, not many seem to be taking into account all the security issues that could appear. This new type of AI hack is just another way in which self-driving cars could be forced to cause accidents, beyond all the expected exploits of software and server-side vulnerabilities.

Hacking AI 1,000x Faster

The MIT CSAIL researchers were themselves surprised by how much faster and more efficient their new method is than existing approaches to hacking machine learning systems. They found that it is 1,000x faster than existing methods for so-called “black-box” AI systems, that is, systems whose internal structure the attacker cannot see.


What this means is that the researchers could hack the AI without knowing exactly how the AI “sees” an image. There have been other methods to fool AI systems into seeing something else by changing the image pixel by pixel. However, those methods are typically limited to low-resolution pictures, such as 50-by-50 pixel thumbnails, and become impractical for higher-resolution images.
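One plausible way to see why pixel-by-pixel methods stall at higher resolutions is to count classifier queries. The sketch below assumes a naive attack that probes every color value twice per gradient estimate. That estimator, the 299-by-299 size, and the function name are illustrative assumptions, not details taken from the CSAIL work.

```python
def queries_per_gradient(width, height, channels=3, probes_per_value=2):
    """Queries needed if every color value is probed separately (assumed scheme)."""
    return width * height * channels * probes_per_value

# A 50-by-50 thumbnail, the size mentioned in the article.
thumbnail_queries = queries_per_gradient(50, 50)

# A 299-by-299 image, used here only as an example of a larger input.
full_size_queries = queries_per_gradient(299, 299)

print(thumbnail_queries, full_size_queries)
```

Under these assumptions the larger image costs roughly 36 times as many queries for every single update, which adds up quickly when each query is a call to a remote API.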

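The CSAIL team used an algorithm called “natural evolution strategies” (NES), which looks at many slightly altered versions of an adversarial image, checks how the classifier responds to each, and then changes the pixels of the image in the direction that makes it look more like the desired object.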
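The core idea behind NES-style attacks can be sketched in a few lines: the attacker never sees the model, only the score it returns, and averages the responses to randomly perturbed copies of the image to estimate which direction raises that score. The code below is a minimal illustration under stated assumptions, not the researchers' implementation. `toy_score` is a hypothetical stand-in for a real classifier's confidence, and `sigma` and `n_pairs` are arbitrary choices.

```python
import random

def nes_gradient(score, x, sigma=0.1, n_pairs=50, rng=None):
    """Estimate the gradient of score() at x using only queries to score()."""
    rng = rng or random.Random(0)
    grad = [0.0] * len(x)
    for _ in range(n_pairs):
        # Draw one Gaussian direction and query the classifier on both sides of x.
        noise = [rng.gauss(0.0, 1.0) for _ in x]
        plus = score([xi + sigma * ni for xi, ni in zip(x, noise)])
        minus = score([xi - sigma * ni for xi, ni in zip(x, noise)])
        # Directions that raised the score get positive weight.
        for i, ni in enumerate(noise):
            grad[i] += (plus - minus) * ni
    scale = 2.0 * sigma * n_pairs
    return [g / scale for g in grad]

def toy_score(pixels):
    # Hypothetical black box: a fixed weighted sum standing in for "dog" confidence.
    weights = [1.0, -2.0, 0.5, 0.0]
    return sum(w * p for w, p in zip(weights, pixels))

estimate = nes_gradient(toy_score, [0.2, 0.4, 0.6, 0.8], n_pairs=2000)
print([round(g, 2) for g in estimate])
```

For this toy scorer the estimate should land close to the hidden weights, even though the function only ever sees the returned scores. The number of queries is set by `n_pairs`, not by the number of pixels.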

In the dog-turned-into-skiers example, the algorithm implements two changes: it first tries to make the image look more like a dog, from the AI’s perspective, and then it changes the RGB values of the pixels to make the image look more like the two skiers.
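The two alternating steps described above can be sketched with a toy setup. Everything here is an assumption made for illustration: the six-value "images", the linear `dog_score` standing in for the black-box classifier, and the `margin`, `blend`, and `lr` settings. It is not the procedure the researchers ran against Cloud Vision.

```python
import math
import random

# Hypothetical black box: a weighted sum standing in for "dog" confidence.
WEIGHTS = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]

def dog_score(pixels):
    return sum(w * p for w, p in zip(WEIGHTS, pixels))

def nes_gradient(score, x, rng, sigma=0.1, n_pairs=50):
    """Query-only gradient estimate from mirrored Gaussian perturbations."""
    grad = [0.0] * len(x)
    for _ in range(n_pairs):
        noise = [rng.gauss(0.0, 1.0) for _ in x]
        diff = (score([a + sigma * n for a, n in zip(x, noise)])
                - score([a - sigma * n for a, n in zip(x, noise)]))
        for i, n in enumerate(noise):
            grad[i] += diff * n
    return [g / (2.0 * sigma * n_pairs) for g in grad]

def distance(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def disguise(start, target, margin=0.5, blend=0.1, lr=0.05, rounds=40, seed=0):
    rng = random.Random(seed)
    x = list(start)
    for _ in range(rounds):
        # Move the pixel values a little toward the target picture.
        x = [a + blend * (t - a) for a, t in zip(x, target)]
        # Push the "dog" score back above the margin using estimated gradients.
        tries = 0
        while dog_score(x) < margin and tries < 100:
            grad = nes_gradient(dog_score, x, rng)
            x = [min(1.0, max(0.0, a + lr * g)) for a, g in zip(x, grad)]
            tries += 1
    return x

dog = [0.9, 0.8, 0.9, 0.1, 0.2, 0.1]      # toy "dog" picture
skiers = [0.1, 0.2, 0.1, 0.9, 0.8, 0.9]   # toy "skiers" picture
result = disguise(dog, skiers)
print(dog_score(result) >= 0.5, distance(result, skiers) < distance(dog, skiers))
```

The result ends up closer to the toy "skiers" values than the starting picture was, while the stand-in classifier still scores it as a dog.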

AI Hacking - Just The Beginning?

MIT CSAIL’s research shows not only that hacking AI is possible but that it could be relatively easy to do, at least until the AI developers can significantly improve their algorithms. However, it’s already starting to look like this will be a game of cat and mouse, similar to the race between security professionals and hackers in the software industry.

If there is this much potential to hack AI systems, we’re likely going to see much more research in this area in the coming years, as well as potentially some real-world attacks against systems that are managed by artificial intelligence.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


derekullo On the other hand, maybe the AI sees a dog that was recently buried in the snow and is in need of rescue?

STOP POSING AND START DIGGING!!!!

alextheblue I wouldn't ski with these two guys... there's a very good chance one or both of them is a werewolf. The AI concurs.

jasonkaler It's like the guy at the bank told me: Computers don't make mistakes.

So at least one of those two people is actually a dog.

Wisecracker Though I tend toward the positive side of advancing AI, my dark side is thinking, "Whhhhhoa ..." We don't know what we don't know.

The havoc wreaked by certain levels of insidious hackery is near unimaginable and inconceivable. It's like we need to advance secure 'good' AI to protect us from the evolution of really nasty 'bad' AI. The potential damage is boundless.

On the road these days I'd trust an autonomous vehicle more than most drivers. It is not difficult to envision automobile hackery -- even that of tens of thousands in a single 'global incident.' The problems I have are with the truly disgusting demented sicko's we can't imagine, with their monumental, societal long-term douchery.

Example: CSAIL researchers are making wonderful advances using AI in the diagnosis of early cancers. Combined with enhanced imagery techniques these advances are of incredible benefit to us all ...

but if the AI is vulnerable and not highly secure, some sick, aggrieved whack-job could 'worm' their way in --- using nasty AI to spoof medicos into thinking third-stage malignancies are benign fatty tissues. Literally, years down the road you have thousands dying from cancer-ridden bodies.

It just seems to me that secure must be conjoined with AI as a global priority (s-AI ?) --- or we are preordained to 'Hacker with Mental Illness Crashes Subway Trains In Catastrophic Disaster' headlines.

The industry must learn to police themselves and highly secure their AI, because our fearless politicos are simply too dumb or indifferent (or bought off...).


DavidC1 All this is going to do is make internet usage worse by using more annoying ReCaptcha-like verification procedures.

bit_user I wonder how difficult it would be to fool two parallel, independent classifiers.

I do think the real-world relevance of this is somewhat limited, but it's definitely a tool that can be used for mischief, harm, and theft.

One consequence I find rather troubling is that, given this exploit requires access to the classifier, some will undoubtedly be tempted to seek security via obscurity by simply trying to lock up their models and APIs.

BTW, let's not forget there are some rather famous optical illusions that hack the human visual system to fool us into seeing vastly different interpretations.

michaelzehr I had some of those same thoughts, bit_user, first about the multiple classifiers, and also about real world applications. But two classifiers can be thought of as one better classifier (except if one says skiers and the other says cat, which one does the black box decide on?). If you have 1 million pictures of cats, and a 99% accurate classifier, then 10,000 of those pictures will be wrongly classified, and this is a fast way to create such pictures. But... it's at the pixel level of control, so I don't see it working on cars (since the angle and distance will change -- it isn't at all clear that a printed picture, i.e. sign, that's categorized wrong from one angle will be categorized wrong at another angle). (The original paper didn't suggest it would.)

Here's the real security point (in my mind, for the amount of time I've had to think about it): if you have a classifier or evaluator (aka "AI"), and it is wrong part of the time, assume an adversary will be able to quickly find examples of input that fail.

And, as you alluded, bit_user, painting over a sign already "fools" human drivers.

bit_user

20508868 said: I had some of those same thoughts, bit_user, first about the multiple classifiers, and also about real world applications. But two classifiers can be thought of as one better classifier (except if one says skiers and the other says cat, which one does the black box decide on?).

If the two classifiers use different architectures or operational principles, then the paper's method might have difficulty converging. Neural networks are designed to have a relatively smooth error surface to facilitate training. But the joint error surface from two different kinds of classifiers might have lots of discontinuities.

20508868 said: If you have 1 million pictures of cats, and a 99% accurate classifier, then 10,000 of those pictures will be wrongly classified, and this is a fast way to create such pictures.

GANs are another. In fact, I wonder if there's some relationship.

https://en.wikipedia.org/wiki/Generative_adversarial_network

20508868 said: But... it's at the pixel level of control, so I don't see it working on cars (since the angle and distance will change -- it isn't at all clear that a printed picture, i.e. sign, that's categorized wrong from one angle will be categorized wrong at another angle).

Exactly my thoughts - this is constructing a still image, whereas most real-world applications involve video and objects moving in 3D space. The false image is probably pretty fragile (although did you see the article about the 3D printed turtle?). Generally speaking, it would be impractical to spoof self-driving cars' classifiers without putting things in the environment (i.e. on or near roadways) that look obviously out-of-place.

Furthermore, I expect 3D self-driving cars will use depth to help them ignore things like billboards and advertisements affixed to other vehicles. With depth information, they'll also be much more difficult to fool.

20508868 said: And, as you alluded, bit_user, painting over a sign already "fools" human drivers.

Humans are wrong in so many cases that it hardly even registers. I can't even count the number of times I've had to do a double-take after misinterpreting some shapes in a darkened room. Or maybe some bushes give the impression that a figure is standing among them. Heck, people see shapes in the clouds, a man in the moon, and various faces and likenesses in everything from water stains to pieces of burnt toast and misshapen Cheetos.

Olle P This won't work in real life.

What they did was to first have the original picture correctly interpreted as a dog. Then they not only changed the pixels, but also the AI's "knowledge", by making small changes to the picture and having the AI interpret every single iteration!

The AI would not have interpreted the final image as "dog" if it was shown that one alone, or just after the original image.

bit_user

20529456 said: This won't work in real life. What they did was to first have the original picture correctly interpreted as a dog. Then they not only changed the pixel, but also the AI's "knowledge", by making small changes to the picture and having the AI interpret every single iteration!

It did require access to be able to run the classifier and inspect the output, but they didn't change the classifier as you seem to imply.

The real-world significance is that if someone builds a product using a standard classifier, like this one from Google, then other people can use the method to create images that spoof the classifier without having access to its source code or weights. It does mean they either have to figure out which classifier it's using, or at least find some way to directly access whatever classifier it's using.

I agree that it's of limited usefulness - mostly for spoofing web services, IMO. Not a big threat for self-driving cars or video surveillance cameras, for instance.