Roundup: Three 16-Port Enterprise SAS Controllers

Results: Sequential Throughput

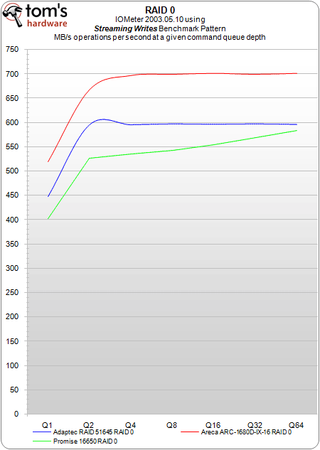

RAID 0

Areca’s card is clearly the fastest at simple sequential reads without pending commands. It starts at 680 MB/s and peaks at 820 MB/s once deeper command queues come into play. Adaptec’s maximum is essentially the same. Promise starts at only 390 MB/s and reaches 800 MB/s, but only with long command queues.

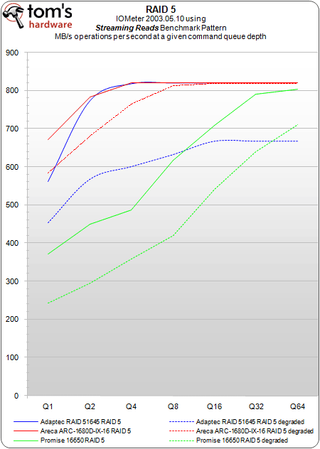

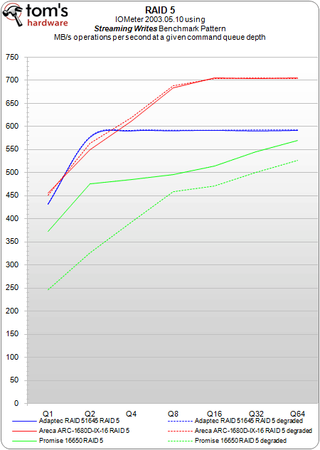

RAID 5

With all member drives available, RAID 5 read throughput is very close to the RAID 0 numbers. Once a drive is missing, however, the controllers must reconstruct its data from parity on the fly, and performance drops. Areca maintains its performance level best, while Adaptec and Promise are both hurt by the missing drive.
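The degraded-read penalty comes from that on-the-fly reconstruction: every read that touches the failed drive forces the controller to fetch all surviving chunks of the stripe and XOR them together. Below is a minimal sketch, assuming simple byte-wise XOR parity with one parity chunk per stripe. Real controllers rotate parity across drives and use much larger stripe units, and the function names here are hypothetical, not any vendor's firmware.

```python
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """XOR equal-length byte blocks together (RAID 5 parity arithmetic)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_chunks: List[bytes]) -> List[Optional[bytes]]:
    """Return the data chunks followed by one XOR parity chunk."""
    return data_chunks + [xor_blocks(data_chunks)]


def degraded_read(stripe: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing chunk (marked None) from the survivors."""
    missing = [i for i, chunk in enumerate(stripe) if chunk is None]
    if len(missing) > 1:
        raise ValueError("single parity tolerates only one missing chunk")
    rebuilt = list(stripe)
    if missing:
        # The lost chunk equals the XOR of every surviving chunk, parity included.
        survivors = [chunk for chunk in stripe if chunk is not None]
        rebuilt[missing[0]] = xor_blocks(survivors)
    return rebuilt


# Usage: simulate a failed drive holding the second data chunk.
data = [b"ABCD", b"EFGH", b"IJKL"]
stripe = make_stripe(data)
stripe[1] = None
print(degraded_read(stripe)[:3] == data)
```

On a healthy array the parity chunk is simply skipped on reads, which is why healthy RAID 5 reads look like RAID 0; the extra reads and XOR work only appear once a drive is gone.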

For sequential writes, Areca and Adaptec deliver the same performance on degraded arrays as on healthy ones, while the Promise card drops noticeably once one RAID 5 drive is missing.

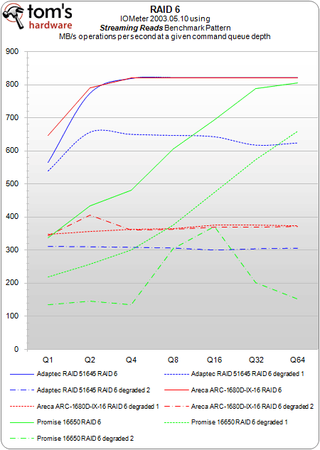

RAID 6

RAID 6, with its double redundancy, is important for mission-critical systems. Again, read throughput on a healthy array is similar to the excellent RAID 0 results. Removing one drive (to simulate a failure) has only a small impact on Adaptec’s performance, but makes a larger difference for Areca and Promise. Once two drives fail, Areca maintains the same performance level as with a single failed drive, while Adaptec and Promise lose even more performance.
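Why a second failure can cost more becomes clearer with a minimal sketch of RAID 6 P+Q dual parity. This assumes the Reed-Solomon scheme over GF(2^8) with polynomial 0x11D and generator 2 that Linux md uses; the controllers tested here may implement RAID 6 differently, and all function names are hypothetical. P is plain XOR parity as in RAID 5, while Q weights each data chunk by a distinct field element. Losing two data drives leaves two equations per byte that must be solved with Galois-field multiplications rather than a single XOR.

```python
from typing import List, Optional, Tuple

# GF(2^8) antilog/log tables for x^8+x^4+x^3+x^2+1 (0x11D), generator g = 2.
# Assumption: the field used by Linux md RAID 6; hardware controllers may differ.
EXP = [0] * 512
LOG = [0] * 256
_x = 1
for _i in range(255):
    EXP[_i] = _x
    LOG[_x] = _i
    _x <<= 1
    if _x & 0x100:
        _x ^= 0x11D
for _i in range(255, 512):
    EXP[_i] = EXP[_i - 255]  # duplicate so EXP[LOG[a] + LOG[b]] needs no modulo


def gf_mul(a: int, b: int) -> int:
    """Multiply two field elements via the log tables."""
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def gf_inv(a: int) -> int:
    """Multiplicative inverse of a non-zero field element."""
    return EXP[255 - LOG[a]]


def compute_pq(data: List[bytes]) -> Tuple[bytes, bytes]:
    """P = XOR of all data chunks; Q = XOR of g^i * D_i."""
    p = bytearray(len(data[0]))
    q = bytearray(len(data[0]))
    for i, chunk in enumerate(data):
        for k, byte in enumerate(chunk):
            p[k] ^= byte
            q[k] ^= gf_mul(EXP[i], byte)
    return bytes(p), bytes(q)


def recover_two_data(data: List[Optional[bytes]], p: bytes, q: bytes,
                     x: int, y: int) -> List[Optional[bytes]]:
    """Rebuild data chunks x and y (x != y, both None) from survivors, P and Q."""
    pxy = bytearray(p)
    qxy = bytearray(q)
    for i, chunk in enumerate(data):
        if i in (x, y):
            continue
        for k, byte in enumerate(chunk):
            pxy[k] ^= byte
            qxy[k] ^= gf_mul(EXP[i], byte)
    # Now pxy = Dx ^ Dy and qxy = g^x*Dx ^ g^y*Dy; solve per byte for Dx, then Dy.
    denom_inv = gf_inv(EXP[x] ^ EXP[y])
    dx = bytes(gf_mul(qxy[k] ^ gf_mul(EXP[y], pxy[k]), denom_inv)
               for k in range(len(p)))
    dy = bytes(pxy[k] ^ dx[k] for k in range(len(p)))
    rebuilt = list(data)
    rebuilt[x], rebuilt[y] = dx, dy
    return rebuilt


# Usage: two of four data drives fail.
data = [b"RAID", b"six!", b"P+Q?", b"demo"]
p, q = compute_pq(data)
damaged: List[Optional[bytes]] = list(data)
damaged[1] = None
damaged[3] = None
print(recover_two_data(damaged, p, q, 1, 3) == data)
```

A single data-drive failure can still be repaired with P alone, exactly as in RAID 5; the two-drive path is the most computation-heavy case, which is where firmware and parity-engine efficiency show up.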

In RAID 6, Adaptec and Areca deliver the same sequential write performance whether the array is healthy, single-degraded, or double-degraded. Unfortunately, the Promise card’s write performance drops by almost 50% once one or two drives of a RAID 6 array are missing.