Qualcomm At The Network Edge

The main theme of Qualcomm’s Uplinq developer conference in San Francisco, as CEO Steve Mollenkopf discussed in the opening keynote presentation, is growth and computing at the network edge. With over one billion shipped devices already running Snapdragon SoCs, Qualcomm is looking to expand into new markets beyond smartphones and tablets. Wearables are one obvious application, with Snapdragon powering several of the Android-based smartwatches currently available, such as the LG G Watch and ASUS ZenWatch.

After many years of slow development, augmented reality glasses are finally becoming a non-virtual reality, and Qualcomm wants its silicon to power them. As an incentive to OEMs and developers, Qualcomm is announcing the new Digital Eyewear SDK for Vuforia, which will help streamline the development of augmented reality apps. The SDK is already being used by several digital eyewear devices, including the Epson BT-200, Samsung Gear VR and ODG R-7, which you can read more about here.

Another application for Qualcomm’s Vuforia mobile vision platform and edge computing is robotics. The Smart Terrain feature, which uses 3D depth-sensing cameras to build a three-dimensional map of a physical space, was put to use by three different robots during the opening keynote. The Snapdragon Rover, equipped with a Snapdragon 600 SoC, dual cameras and force-feedback actuators, is capable of detecting and classifying objects, picking them up and placing them in color-coded bins.

The Snapdragon Micro Rover is a 3D-printed robot that uses a smartphone with a Bluetooth link for brains. Those who would like to build their own Micro Rover can download the plans and source code from Qualcomm’s robotics page.

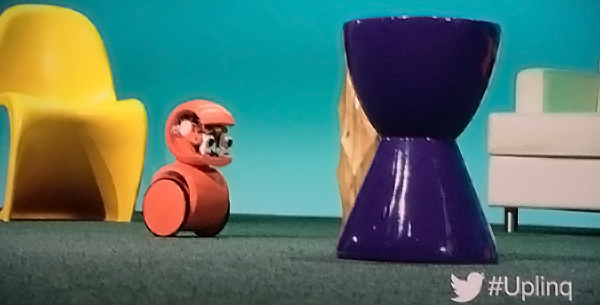

The third robot that appeared onstage was Brain Corporation’s eyeRover, a two-wheeled, self-balancing robot with stereo vision. Running BrainOS, which uses a combination of machine learning and neural networks, it can be trained to do simple tasks in much the same way as an animal. During the demonstration, it learned to navigate a course around several objects and to come when summoned by a hand gesture.

A common technology shared by these robots is machine learning, a capability that can be extended to smartphones and tablets. By learning about us and how we interact with them, these devices could perform common tasks autonomously and thereby simplify the user interface. Google Now is a simple cloud-based version of this idea, giving us contextual information when we need it most. But with powerful CPUs onboard, our devices can learn and perform actions on their own or even on our behalf.

Another feature we may see in the near future is Snapdragon Sense Audio. Assuming the power consumption issue can be solved, your phone would always be listening and ready to respond to commands, unlike today, when you usually need to press a button before using a voice command. One use for this feature that was demonstrated onstage was an always-on, Shazam-powered music recognition app leveraging the Snapdragon DSP. Running in the background, the app would listen and log all of the music you hear throughout the day. Later in the evening, you could review all the songs and build a playlist of your day. The biggest hurdle I see for this technology isn’t power consumption, but the rather scary privacy concerns.

Qualcomm’s focus on edge computing and the Internet of Everything will enable devices to interact more, both with us and with each other. For Qualcomm, this means more devices using Snapdragon silicon and a better return on investment by moving yesterday’s high-end technology to today’s lower-end, edge computing devices. For us, hopefully, it means a richer yet simpler relationship with technology.