Qualcomm Announces Inference Accelerator Cloud AI 100 for Data Center

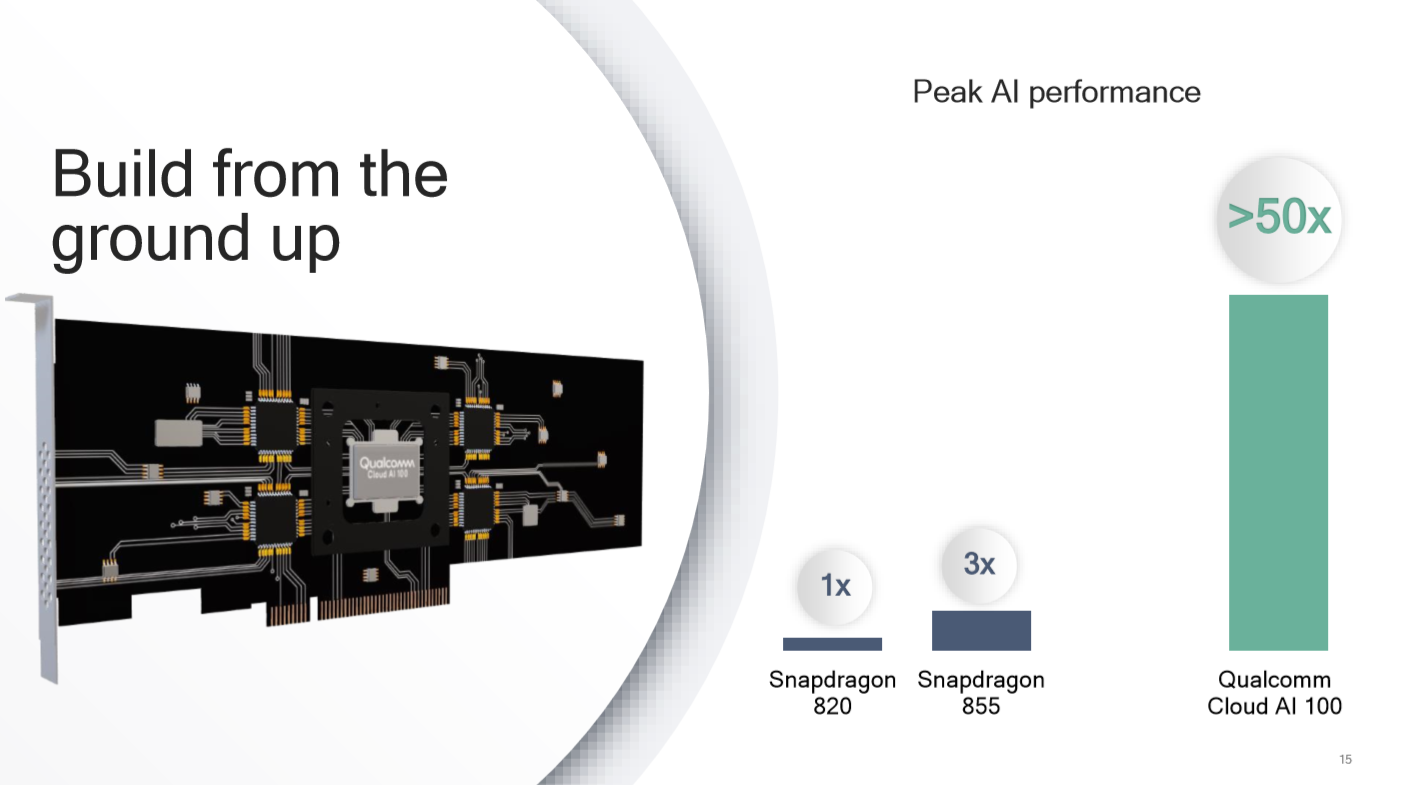

In a major announcement today, Qualcomm said it is entering the cloud AI inference processing market. At the company's AI Day, it unveiled the 7nm Cloud AI 100 chip, which aims to bring Qualcomm's power-efficiency expertise from the mobile space to the data center. Qualcomm designed the new chip for Tier 1 and Tier 2 cloud players and claims a 10x improvement in inference performance over the best solutions currently available.

Qualcomm already sees its Snapdragon processors as a key player in inference on the edge in client devices. The company now wants to bring its capabilities in production scale, leading-edge nodes, power efficiency, and signal processing to the data center. Qualcomm envisions providing lower latency with 5G and bringing much-increased performance and efficiency to the cloud and edge with the Qualcomm Cloud AI 100, which is built on TSMC's 7nm node.

While Qualcomm is not yet ready to discuss specifics of performance or architecture, it says the Cloud AI 100 offers "more than 10x performance over the industry's most advanced AI inference solutions available today." Qualcomm is also committed to supporting the leading software stacks, such as PyTorch, Glow, TensorFlow, Keras, and ONNX.

This is quite an early announcement, as the company doesn't have working silicon yet. Qualcomm is working with partners such as Facebook, Microsoft, and ODMs to develop different form factors and power levels. The company is targeting power levels ranging from 20W to 75W, a range that lets the chip fit form factors as small as M.2. The Cloud AI 100 will start sampling late this year, and full production is planned for 2020.

Weighing the Market

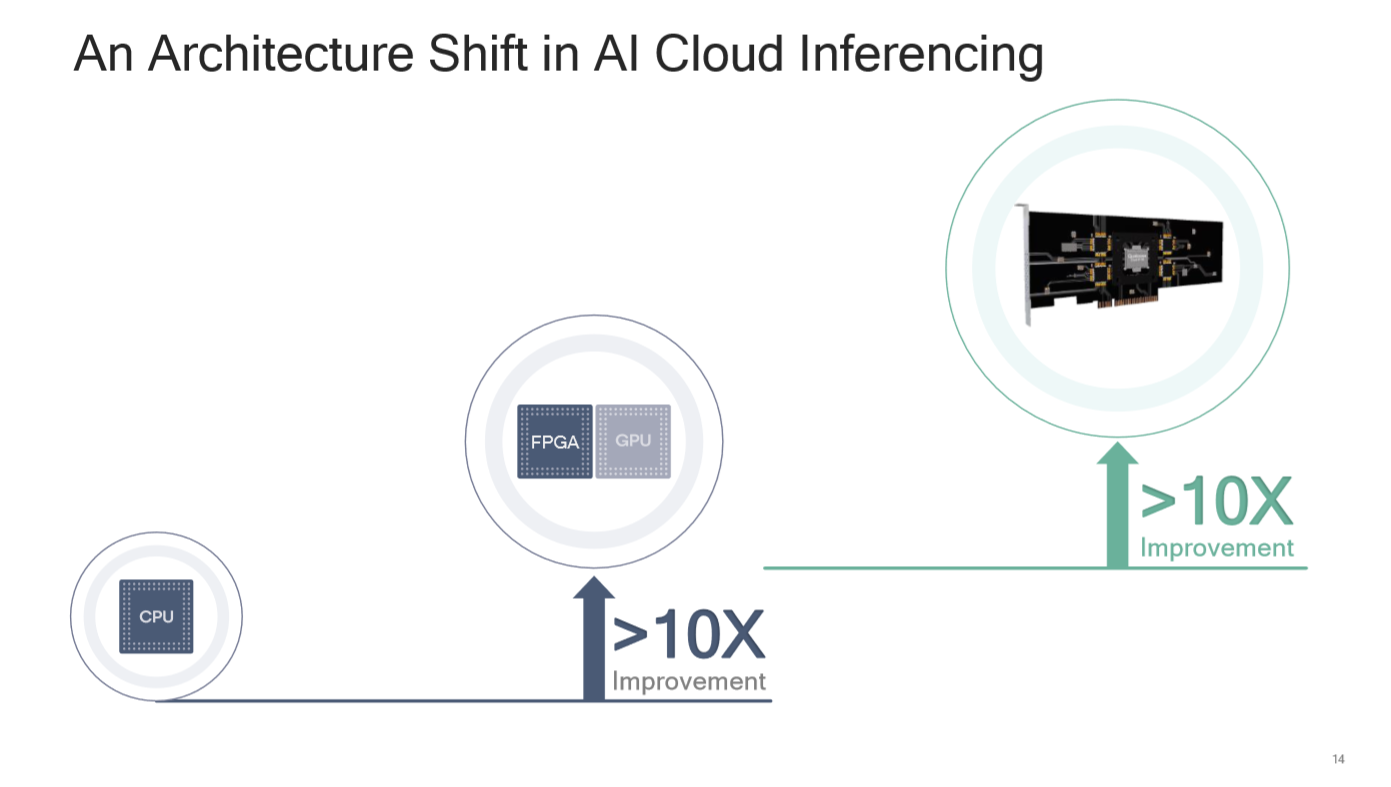

Deep learning is split between training (building the model) and inference (using the model), and the initial AI growth came from training workloads, where Nvidia's Tesla GPUs reigned supreme. Nvidia's data center business generated $2B in revenue in 2017 and $3B last year.
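For a sense of scale, the two rounded figures quoted for Nvidia's data center revenue imply roughly 50% year-over-year growth. This is a back-of-the-envelope calculation using only those rounded numbers, not Nvidia's exact reported results, so the true rate may differ somewhat:

$$\text{growth} = \frac{\$3\text{B} - \$2\text{B}}{\$2\text{B}} = 0.5 = 50\%$$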

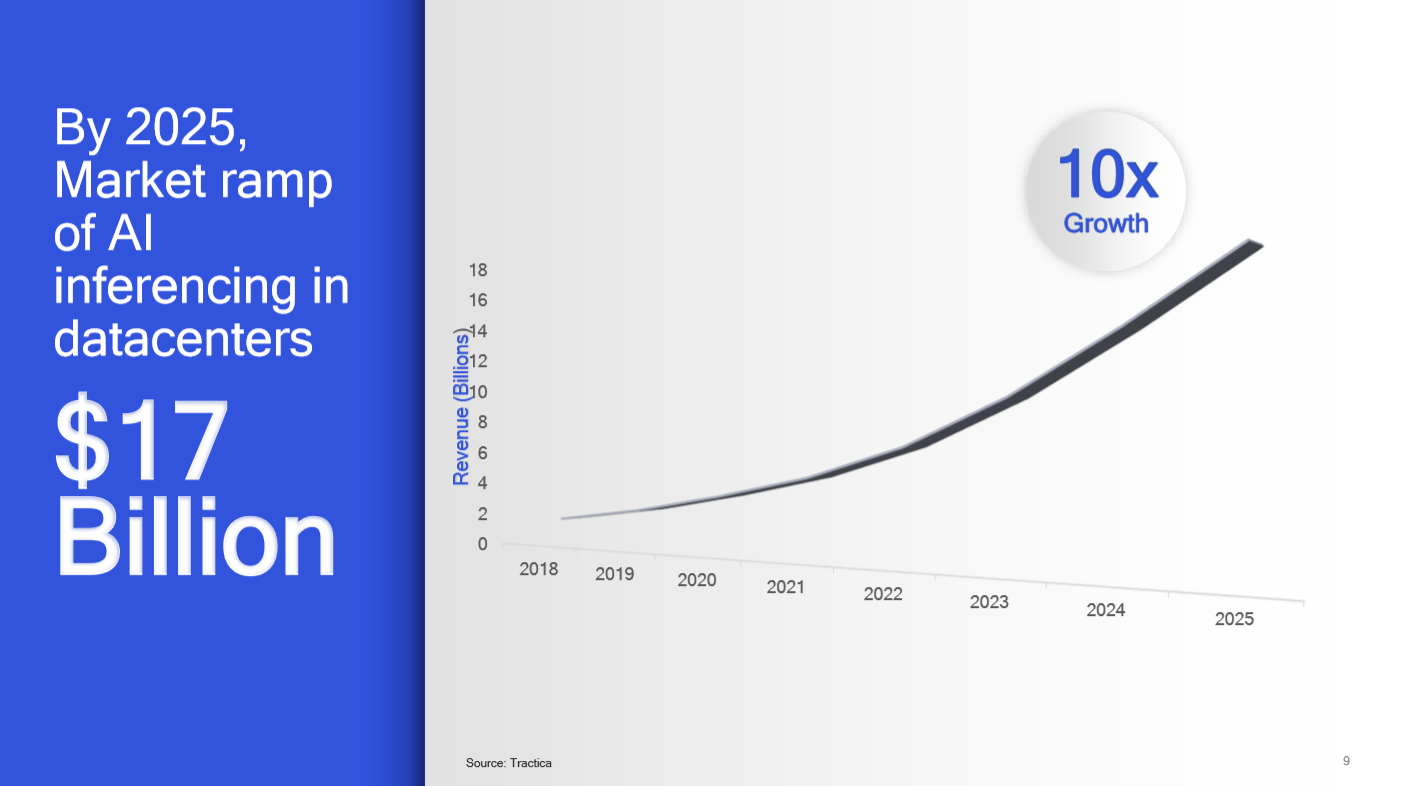

The initial hype around deep learning has cooled somewhat, but the AI market is still expected to grow much larger. That prospect has drawn both big companies and startups vying for a share, especially as the focus shifts toward inference, which over time is expected to become a much larger market than training.


Inference is also seen as more attractive because it can run on diverse types of compute, such as CPUs and FPGAs, and inference at the edge is becoming more important as well. Intel recently reported $1B in revenue from AI running on Xeon, and Qualcomm now expects data center inference to become a $17B market by 2025. That outlook is consistent with Intel's own forecast of a $10B deep learning market by 2022.

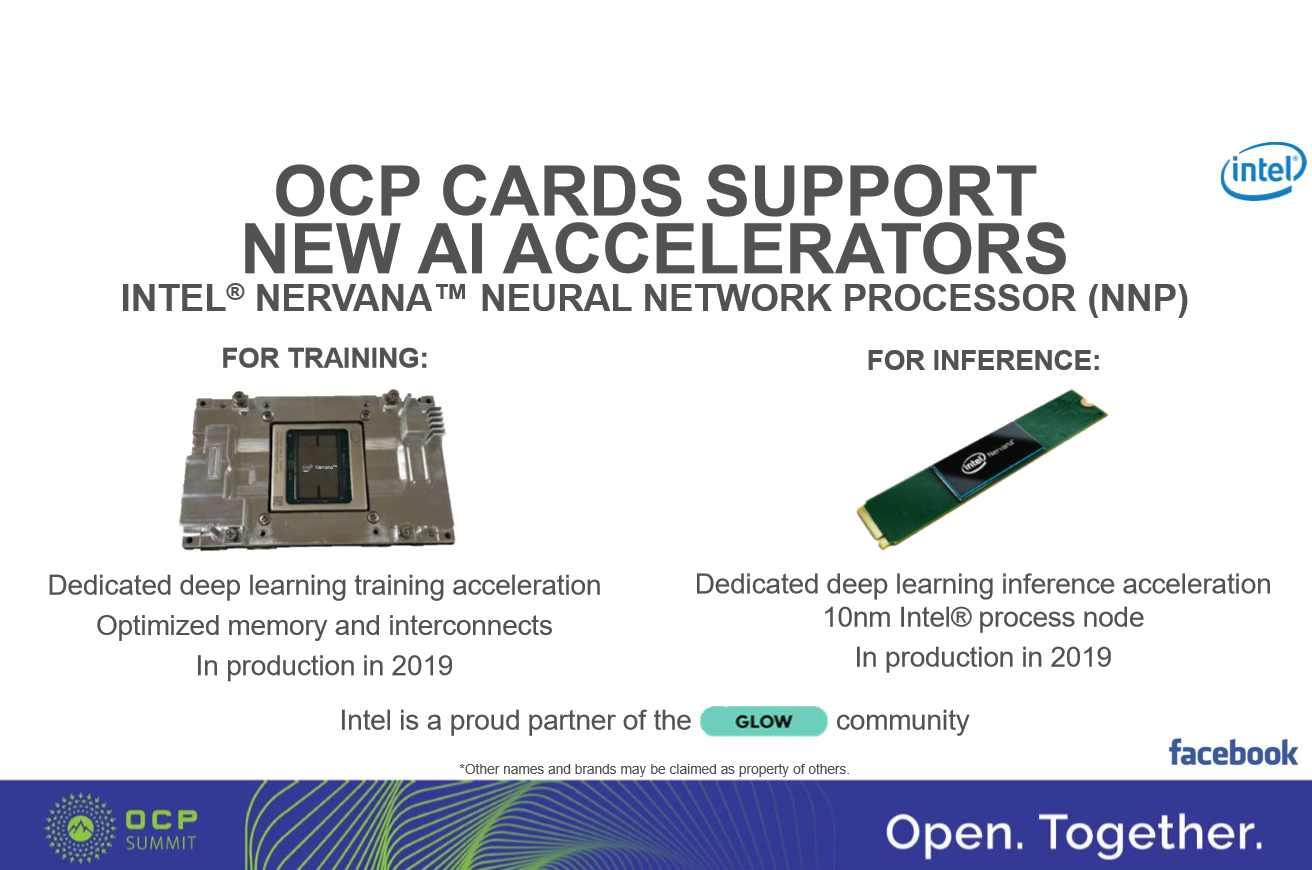

Last year, Nvidia launched the Turing-based Tesla T4 for inference, and the company will likely have moved to 7nm as well by 2020. At CES, Intel announced the Nervana NNP-I for inference, with production starting in 2019. Intel's Naveen Rao confirmed that it will be built on 10nm and will include Sunny Cove cores. Intel, too, said performance per watt is the focus, and like the Cloud AI 100, the Nervana NNP-I will fit in the M.2 form factor. Given these similarities, the two should be close competitors. The NNP-I should not be confused with the NNP-L 1000, which is designed for training and manufactured on TSMC's 16nm process.

This announcement also comes on the heels of reports that Qualcomm is leaving the data center CPU market, which it had tried to enter with its Centriq processors.

Image Credits: Qualcomm