Centaur Creates First x86 SoC with Integrated AI Co-Processor

The VIA subsidiary is offering deep learning inference performance without the need for a GPU.

People typically think of x86 processors as coming from Intel and AMD, but there is a third architectural license holder: VIA. This week, an Austin, Texas-based subsidiary of the Taipei-headquartered company announced it's demonstrating an x86 processor that comes with an integrated artificial intelligence (AI) co-processor.

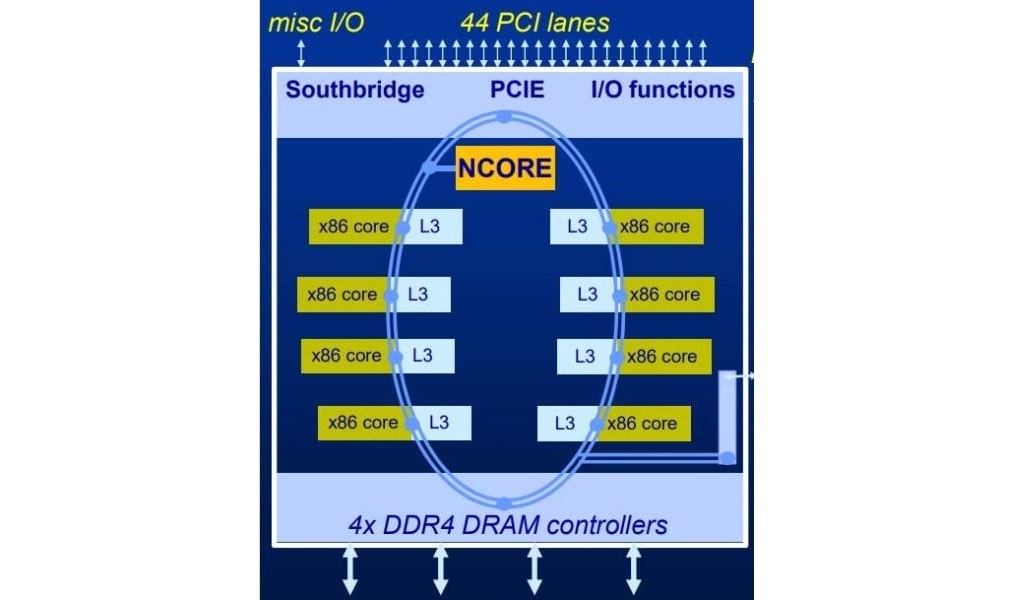

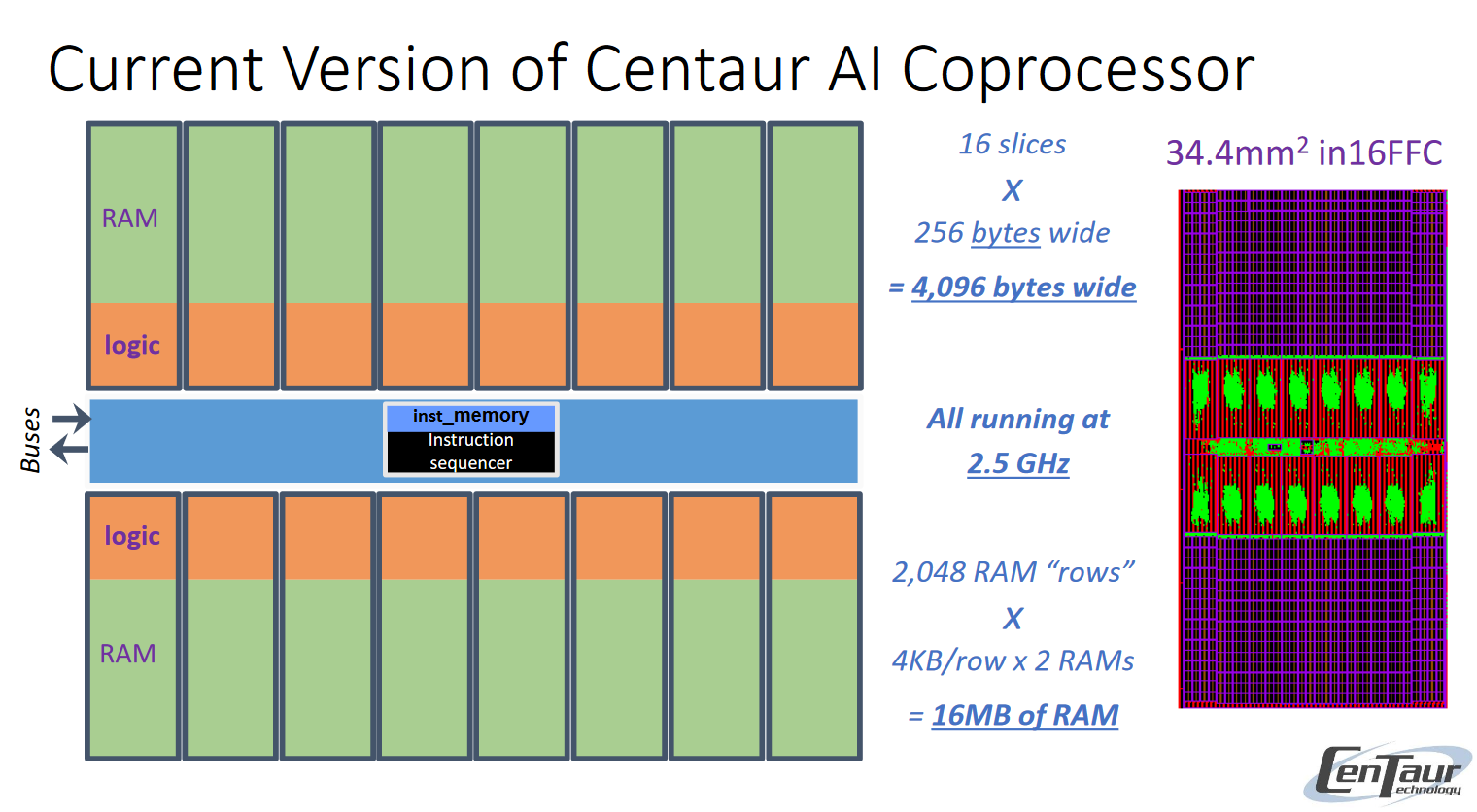

VIA's Centaur Technology is a small CPU design company. The unnamed processor it's developing is built on the 16nm fabrication process and manufactured by TSMC. It's a complete system-on-chip (SoC) with eight cores, 16MB of L3 cache and an AI co-processor. In total, it has a die size of 195 square millimeters, which isn’t all that big. For comparison, a Ryzen 5 chip with one CCD and one IOD has a combined die area of 199 square millimeters.

Centaur’s new chip isn't meant for consumer PCs. Rather, it's aimed at enterprise systems built for deep learning and other industrial applications.

Centaur is developing this processor to tackle the challenge of x86 processors needing external inference acceleration (such as a GPU with Nvidia’s Tensor cores). It wants to integrate this feature into one chip and, consequently, reduce power consumption for deep learning tasks.

In addition to its eight x86 cores, 16MB of L3 cache and 20 TOPS AI co-processor, Centaur’s chip comes with a total of 44 PCIe lanes and four DDR4 memory channels. Therefore, if a user wanted to further improve a supporting system’s inference performance, they'd also be able to add GPUs into the mix.

Currently, the reference platform runs at 2.5 GHz. It also comes with the AVX-512 instruction set, which, thus far, has been implemented in only a select few processors.

The AI co-processor also has access to 16MB of memory, which it can communicate with at up to 20 TBps. According to Centaur, that has led to the lowest latency for image classification in the MLPerf benchmark, at just 330 microseconds.
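To put those figures in perspective, here is a rough back-of-envelope sketch in Python. It uses only the numbers quoted in this article (330 microseconds, 20 TOPS, 20 TBps) and assumes that images are processed strictly one after another and that both throughput figures are peak values. The function names are purely illustrative and don't come from Centaur or MLPerf.

```python
# Back-of-envelope arithmetic using only figures quoted in the article.
# Assumption: the TOPS and TBps numbers are peak values, and images are
# processed back-to-back with no batching or pipelining.
LATENCY_US = 330   # quoted MLPerf image classification latency, in microseconds
PEAK_TOPS = 20     # quoted AI co-processor throughput, in tera-operations per second
PEAK_TBPS = 20     # quoted memory bandwidth, in terabytes per second


def images_per_second(latency_us: float) -> float:
    """Rate achieved if each image takes latency_us and images run serially."""
    return 1_000_000 / latency_us


def bytes_per_op(tbps: float, tops: float) -> float:
    """Peak bytes of memory traffic available per peak operation."""
    return tbps / tops


if __name__ == "__main__":
    print(f"{images_per_second(LATENCY_US):.0f} images per second")
    print(f"{bytes_per_op(PEAK_TBPS, PEAK_TOPS):.1f} bytes per operation")
```

Under those assumptions, a 330-microsecond latency works out to roughly 3,000 images per second from a single stream, and the quoted bandwidth could supply about one byte of data for every operation at peak. Real-world results would depend on the model, the precision used and how the workload is scheduled.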


“We set out to design an AI co-processor with 50 times the inference performance of a general-purpose CPU. We achieved that goal. Now we are working to enhance the hardware for both high-performance and low-power systems, and we are disclosing some of our technology details to encourage feedback from potential customers and technology partners,” Glenn Henry, Centaur’s Chief Architect of the AI co-processor, said in a statement.

Centaur’s x86 CPU with its AI co-processor isn’t ready for prime time yet but will be demonstrated at ISC East on November 20 and 21, with technical details to be published on December 2.

Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and PC builds.