Nvidia As x86 Manufacturer

There is usually some truth behind every rumor. For years, there were rumors of Apple’s Marklar division, a team dedicated to porting OS X from PowerPC to x86. Though this seemed crazy during the Intel Pentium 4 era, this was a rumor that never died. Turns out, it wasn’t a rumor.

Along those same lines, I don’t believe it is simply an unsubstantiated rumor that Nvidia is making an x86 CPU. This has come up too often, and the company's recruiting of x86 validation engineers and licensing of "other Transmeta technologies" besides LongRun hint at a bigger picture.

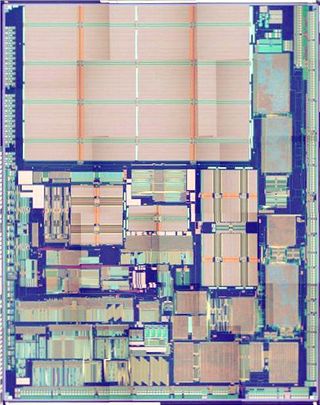

Without having any inside information, I see two areas where the x86 investment could prove worthwhile. First, I’ll tell you what it’s not: it’s not a high-end CPU. Although AMD and Intel are both working on integrating CPUs and GPUs on the same die, neither company will be able to integrate flagship-performance graphics that way. Combining a high-end GPU and CPU enlarges the die, and because yield falls as die area grows, manufacturing cost rises far faster than the area itself. Thermal management for such a large chip is a further engineering challenge (Ed.: just look at the time Nvidia is already having with GF100, and that's a GPU-only part).
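To make the die-size argument concrete, here is a rough sketch using the textbook Poisson yield model. The wafer cost and defect density are placeholder assumptions chosen for illustration, not figures from any foundry, and the function names are hypothetical.

```python
import math

def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Approximate gross dies per wafer, with a common edge-loss correction."""
    radius = wafer_diameter_mm / 2.0
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return gross - edge_loss

def poisson_yield(die_area_mm2, defects_per_mm2):
    """Fraction of dies expected to have zero defects (Poisson model)."""
    return math.exp(-defects_per_mm2 * die_area_mm2)

def cost_per_good_die(die_area_mm2, wafer_cost=5000.0, defects_per_mm2=0.002):
    """Wafer cost spread over the dies that actually work.

    wafer_cost and defects_per_mm2 are assumed placeholder values.
    """
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(
        die_area_mm2, defects_per_mm2
    )
    return wafer_cost / good_dies

if __name__ == "__main__":
    for area in (100, 200, 400):
        print(f"{area} mm^2 die: {cost_per_good_die(area):.2f} per good die")
```

Under these assumptions, each doubling of die area more than doubles the cost per working die, and the penalty gets worse as the die gets larger.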

The first option is that Nvidia is continuing development of the Transmeta Crusoe CPU. Though the Crusoe was not a commercial success, on a performance-per-watt basis the CPU was highly competitive against even today’s Intel Atom. A newer version of the Crusoe's VLIW architecture, augmented by improvements in manufacturing technology and in the code-morphing algorithms, could be a competitive low-power device. When combined with an embedded GPU, Nvidia would have a product that competes against AMD Fusion and Intel embedded products. This could be a desktop version of Tegra.

The second option, which is more likely, is that Nvidia will incorporate a simple CPU on future versions of the Tesla or Quadro. Currently, one of the most inefficient parts of GPGPU computing is transferring data back and forth between the graphics card and the rest of the system. By incorporating a true general-purpose CPU on the graphics card itself, "housekeeping tasks" can be performed on the card using local graphics memory, thereby improving performance. It could also act as an intermediary that better manages asynchronous data transfers between the GPU and this mini CPU. This device would not need to run x86; it could apply code morphing to work with Nvidia PTX instructions or have some efficient combination that makes it worthwhile.
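A back-of-the-envelope model shows why keeping housekeeping work on the card could matter. This is only a sketch: the latencies, the on-card bandwidth, the kernel time, and the step count are all assumed placeholder values, and the function names are hypothetical. The 8 GB/s host figure is the theoretical per-direction peak of a PCIe 2.0 x16 link.

```python
def transfer_time_s(num_bytes, latency_s, bandwidth_bytes_per_s):
    """Time to move one buffer: fixed latency plus size over bandwidth."""
    return latency_s + num_bytes / bandwidth_bytes_per_s

def pipeline_time_s(steps, kernel_s, bytes_per_step, latency_s, bandwidth):
    """Each step runs a kernel, ships results out for housekeeping, and
    ships them back before the next kernel can start."""
    round_trip = 2 * transfer_time_s(bytes_per_step, latency_s, bandwidth)
    return steps * (kernel_s + round_trip)

if __name__ == "__main__":
    steps = 1000               # assumed number of kernel launches
    kernel_s = 50e-6           # assumed 50 microseconds of GPU work per step
    buf = 1024 * 1024          # assumed 1 MiB exchanged per step

    # Housekeeping on the host CPU, across the expansion bus.
    host = pipeline_time_s(steps, kernel_s, buf, latency_s=10e-6, bandwidth=8e9)
    # Housekeeping on a hypothetical on-card CPU using local graphics memory.
    local = pipeline_time_s(steps, kernel_s, buf, latency_s=1e-6, bandwidth=100e9)

    print(f"host round trips:    {host:.3f} s")
    print(f"on-card round trips: {local:.3f} s")
```

With these made-up numbers the host round trips dominate the run time. Real workloads overlap transfers with computation, so treat this as an upper bound on the benefit rather than a prediction.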

Hardware REYES Acceleration?

Remember all that talk about Pixar-class graphics? Pixar’s films are rendered using RenderMan, a software implementation of the REYES architecture. In traditional 3D graphics, large triangles are sorted, drawn, shaded, lit, and then textured. REYES instead dices curved surfaces into micropolygons that are smaller than a pixel, and uses stochastic sampling to prevent aliasing. It’s a different way of rendering. At SIGGRAPH 2009, a GPU implementation of a REYES renderer was demonstrated using a GeForce GTX 280. Though more work will need to be done, Nvidia appears to be headed in this direction with Bill Dally in the position of VP of research. I’d be surprised if we didn’t see an Nvidia implementation of REYES in the future.
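The two ideas in that description, dicing into sub-pixel micropolygons and stochastic sampling, can be sketched in a few lines. This is a toy illustration, not RenderMan's implementation: it dices a flat bilinear patch already in screen space rather than a curved surface, and every function name and parameter here is hypothetical.

```python
import math
import random

def dice_rate(width_px, height_px, shading_rate=0.25):
    """Pick grid dimensions so each micropolygon covers at most roughly
    `shading_rate` pixels of area (0.25 means half-pixel edges)."""
    edge = math.sqrt(shading_rate)
    nu = max(1, math.ceil(width_px / edge))
    nv = max(1, math.ceil(height_px / edge))
    return nu, nv

def dice_patch(p00, p10, p01, p11, nu, nv):
    """Bilinearly interpolate four screen-space corners into a grid of
    (nu + 1) x (nv + 1) vertices; each grid cell is one micropolygon."""
    grid = []
    for j in range(nv + 1):
        v = j / nv
        row = []
        for i in range(nu + 1):
            u = i / nu
            w00, w10 = (1 - u) * (1 - v), u * (1 - v)
            w01, w11 = (1 - u) * v, u * v
            x = w00 * p00[0] + w10 * p10[0] + w01 * p01[0] + w11 * p11[0]
            y = w00 * p00[1] + w10 * p10[1] + w01 * p01[1] + w11 * p11[1]
            row.append((x, y))
        grid.append(row)
    return grid

def jittered_samples(px, py, n, rng):
    """Stochastic sampling: split pixel (px, py) into n x n cells and
    place one random sample inside each cell."""
    return [
        (px + (i + rng.random()) / n, py + (j + rng.random()) / n)
        for j in range(n)
        for i in range(n)
    ]

if __name__ == "__main__":
    nu, nv = dice_rate(10, 4)  # a patch covering 10 x 4 pixels
    grid = dice_patch((0, 0), (10, 0), (0, 4), (10, 4), nu, nv)
    print(f"{nu * nv} micropolygons for a 40-pixel patch")
    print(jittered_samples(3, 7, 2, random.Random(0)))
```

A full REYES pipeline would also bound and split primitives before dicing, shade the grid vertices, and test each sample against the micropolygons. The sketch covers only the two steps named in the text.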

In fact, Nvidia already has an investment in Hollywood. Late last year, it announced iRay, hardware-accelerated ray tracing for use with the mental ray suite. Mental ray is a global illumination/ray tracing engine that competes against RenderMan/REYES, and has been used by feature films such as Spider-Man 3, Speed Racer, and The Day After Tomorrow. Oh, and Mental Images is a wholly owned subsidiary of Nvidia.

Nvidia’s Outlook

Nvidia’s corporate philosophy and track record are consistent with the goal of providing hardware-accelerated graphics to consumers, hardware-accelerated rendering to Hollywood, and throughput computing to the scientific community. The hardware and software expertise required to produce this is available within Nvidia’s walls. Whereas AMD has the track record with CPU and GPU hardware, and Intel has the deepest pockets, Nvidia has built the strongest portfolio of software technology. Software is what made the iPod. Software is what made the iPhone. Nvidia’s vision is coherent, but the company’s success requires timely execution of both its hardware and software milestones (Ed.: notable, then, that this is currently an issue for the company).

Conclusion

The next few years will be an exciting time for computing. We have a bona fide three-horse race with AMD, Intel, and Nvidia. Perhaps more important, each company has non-overlapping talents and a unique approach toward success. The next generation of products will not simply be "me too" launches, but instead reflect a world of new ideas and paradigms. These technologies will enable new areas of entertainment, science, and creativity. And games will look pretty sweet, too. At least, that’s the way I see it.

-

anamaniac Alan Dang wrote: "And games will look pretty sweet, too. At least, that’s the way I see it." After several pages of technology mumbo jumbo jargon, that was a perfect closing statement. =)

Wicked article Alan. Sounds like you've had an interesting last decade indeed.

I'm hoping we all get to see another decade of constant change and improvement to technology as we know it.

Also interesting is that you almost seemed to be attacking every company, you still managed to remain neutral.

Everyone has benefits and flaws, nice to see you mentioned them both for everybody.

Here's to another 10 years of success everyone! -

"Simply put, software development has not been moving as fast as hardware growth. While hardware manufacturers have to make faster and faster products to stay in business, software developers have to sell more and more games"

Hardware is moving so fast and game developers just can't keep pace with it. -

Ikke_Niels What I miss in the article is the following (well, it's partly told):

I have suspected for a long time that video cards are going to surpass CPUs.

You already see it at the moment: video cards get cheaper, while CPUs keep getting pricier for the relative performance.

In the past I had the problem of upgrading my video card, only to push my CPU to the limit and thus not use the full potential of the video card.

In my view we're at that point again: you buy a system, and if you upgrade your video card after a year or a year and a half, you're most likely pushing your CPU to the limits, at least in the high-end part of the market.

Of course in the lower regions these problems are smaller, but still, it "might" happen sooner than we think, especially if the Nvidia design is as astonishing as they say and at the same time the major development of CPUs slowly breaks up.

-

lashton One of the most interesting and informative articles from Tom's Hardware. What about another story about the smaller players, like Intel Atom and VLIW chips and so on? -

JeanLuc Out of all 3 companies Nvidia is the one that's facing the most threats. It may have a lead in the GPGPU arena but that's rather a niche market compared to consumer entertainment, wouldn't you say? Nvidia are also facing problems at the low end of the market, with Intel now supplying integrated video on their CPUs, which makes low-end video cards practically redundant, and no doubt AMD will be supplying a similar product with Fusion at some point in the near future. -

jontseng "This means that we haven’t reached the plateau in "subjective experience" either. Newer and more powerful GPUs will continue to be produced as software titles with more complex graphics are created. Only when this plateau is reached will sales of dedicated graphics chips begin to decline."

I'm surprised that you've completely missed the console factor.

The reason why devs are not coding newer and more powerful games is nothing to do with budgetary constraints or lack thereof. It is because they are coding for an XBox360 / PS3 baseline hardware spec that is stuck somewhere in the GeForce 7800 era. Remember only 13% of COD:MW2 units were PC (and probably less as a % sales given PC ASPs are lower).

So your logic is flawed, or rather you have the wrong end of the stick. Because software titles with more complex graphics are not being created (because of the console baseline), newer and more powerful GPUs will not continue to be produced.

Or to put it in more practical terms, because the most graphically demanding title you can possibly get is now three years old (Crysis), then NVidia has been happy to churn out G92 respins based on a 2006 spec.

Until the next generation of consoles comes through there is zero commercial incentive for a developer to build a AAA title which exploits the 13% of the market that has PCs (or the even smaller bit of that which has a modern graphics card). Which means you don't get phat new GPUs, QED.

And the problem is the console cycle seems to be elongating...

J -

Swindez95 I agree with jontseng above ^. I've already made a point of this a couple of times. We will not see an increase in graphics intensity until the next generation of consoles comes out, simply because consoles are where the majority of game sales are. And as stated above, developers are simply coding games and graphics for much older and less powerful hardware than the PC currently has available, because these last-generation consoles are still the most popular venue for consumers.
