Larrabee: Intel's New GPU

An Overview

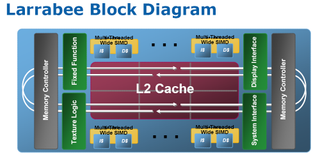

The first thing to point out about Larrabee is that it’s not like other GPUs. In fact, it’s not really a GPU at all. As the diagram shows, Larrabee is a group of very simple x86 processors that execute instructions in order, each extended with a vector floating-point unit (FPU) operating on 512-bit vectors (16 single-precision floating-point numbers or 32-bit integers, or eight double-precision floating-point numbers). Each core has 256 KB of cache memory, and a ring bus handles communication between the processors as well as cache coherence. The only components that suggest this isn’t just a highly parallel CPU like the Cell are the texture units and the display interface.
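To make the vector width concrete, here is a minimal Python sketch of the lane arithmetic. It only models the numbers quoted above; the names `VECTOR_BITS`, `lanes`, and `vector_add` are hypothetical and chosen for illustration, and nothing here reflects Larrabee's actual instruction set.

```python
# Illustrative model only: names are hypothetical, not Larrabee instructions.
VECTOR_BITS = 512  # vector width quoted in the article


def lanes(element_bits: int) -> int:
    """Return how many elements of the given width fit in one vector."""
    if element_bits <= 0 or VECTOR_BITS % element_bits != 0:
        raise ValueError("element width must evenly divide the vector width")
    return VECTOR_BITS // element_bits


def vector_add(a: list, b: list) -> list:
    """Model a single vector instruction: add corresponding lanes."""
    if len(a) != len(b):
        raise ValueError("both operands must have the same number of lanes")
    return [x + y for x, y in zip(a, b)]


single_lanes = lanes(32)  # single-precision floats or 32-bit integers
double_lanes = lanes(64)  # double-precision floats

# One modeled instruction processes every single-precision lane at once.
a = [float(i) for i in range(single_lanes)]
b = [1.0] * single_lanes
result = vector_add(a, b)
```

The point of the sketch is simply that one vector operation touches 16 single-precision values (or eight double-precision values) where a scalar unit would touch one.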

The Cores In Detail: Something New and Something (Very) Old

Like IBM with its Cell architecture, Intel started from the following observation: the applications that demand the most processing power generally have a very linear flow of instructions. They contain few branches and few dependencies between instructions. Consequently, modern processors, which devote a large part of their total die area to control logic (branch prediction and dynamic instruction scheduling), are not really suited to this type of task.
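As a rough illustration of what such a "linear" workload looks like, the sketch below uses a scale-and-add kernel (often called SAXPY). The kernel is our own example, not one taken from Intel's material, and the function names are hypothetical. Each output element depends only on its own inputs, so the loop body has no data-dependent branches and the work can be cut into independent 16-element chunks without changing the result.

```python
# Illustrative sketch: SAXPY is an assumed example kernel, not from the article.
LANES = 16  # single-precision lanes in a 512-bit vector


def saxpy_scalar(alpha: float, xs: list, ys: list) -> list:
    """Reference version: one element at a time."""
    return [alpha * x + y for x, y in zip(xs, ys)]


def saxpy_chunked(alpha: float, xs: list, ys: list, lanes: int = LANES) -> list:
    """Process the same data in independent chunks of `lanes` elements.

    No chunk reads the result of another chunk, so the chunks could be
    handed to separate vector units or cores in any order.
    """
    out = []
    for start in range(0, len(xs), lanes):
        chunk_x = xs[start:start + lanes]
        chunk_y = ys[start:start + lanes]
        out.extend(alpha * x + y for x, y in zip(chunk_x, chunk_y))
    return out


xs = [float(i) for i in range(40)]
ys = [2.0] * 40
chunked = saxpy_chunked(0.5, xs, ys)
```

Because there is no branch to predict and no instruction to reorder around a dependency, the control logic of a large out-of-order core contributes little here, which is the observation the paragraph above describes.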

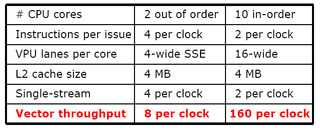

For an equal number of transistors, rather than following the usual design path and integrating two or four of these “big” processors on a die, it makes much more sense for tasks like this to use a large number of small, much simpler processors. Intel has issued a comparative table justifying its choice.

As the chart shows, for an equal die area and comparable power consumption, the in-order cores (not identical to the ones Larrabee uses) offer a vector instruction throughput 20 times greater than a Core 2 Duo at the same clock frequency. Having made that choice, Intel then had to design the new processors themselves. The choice of instruction set was really no choice at all: since Larrabee was to be Intel’s Trojan horse for entering the discrete GPU world with its x86 architecture, the instruction set was already a known quantity.

Still, the choice can seem surprising. The x86 instruction set is complex (it is a CISC design), and supporting all the instructions that have been added over the course of its history increases the number of transistors needed compared with RISC architectures.


thepinkpanther: very interesting, i know nvidia cant settle for being the second best. As always its good for the consumer.

IzzyCraft: Yes interesting, but intel already makes like 50% of every gpu i rather not see them take more market share and push nvidia and amd out although i doubt it unless they can make a real performer, which i have no doubt on paper they can but with drivers etc i doubt it.

Alien_959: Very interesting, finally some more information about Intel upcoming "GPU".

But as I sad before here if the drivers aren't good, even the best hardware design is for nothing. I hope Intel invests more on to the software side of things and will be nice to have a third player.

crisisavatar: cool ill wait for windows 7 for my next build and hope to see some directx 11 and openGL3 support by then.

Stardude82: Maybe there is more than a little commonality with the Atom CPUs: in-order execution, hyper threading, low power/small foot print.

Does the duo-core NV330 have the same sort of ring architecture?

"Simultaneous Multithreading (SMT). This technology has just made a comeback in Intel architectures with the Core i7, and is built into the Larrabee processors."

just thought i'd point out that with the current amd vs intel fight..if intel takes away the x86 licence amd will take its multithreading and ht tech back leaving intel without a cpu and a useless gpu

liemfukliang: Driver. If Intel made driver as bad as Intel Extreme than event if Intel can make faster and cheaper GPU it will be useless.

phantom93: Damn, hoped there would be some pictures :(. Looks interesting, I didn't read the full article but I hope it is cheaper so some of my friends with reg desktps can join in some Orginal Hardcore PC Gaming XD.
