We Overclocked Raspberry Pi 5 to 3 GHz, Up to 25% Perf Boost

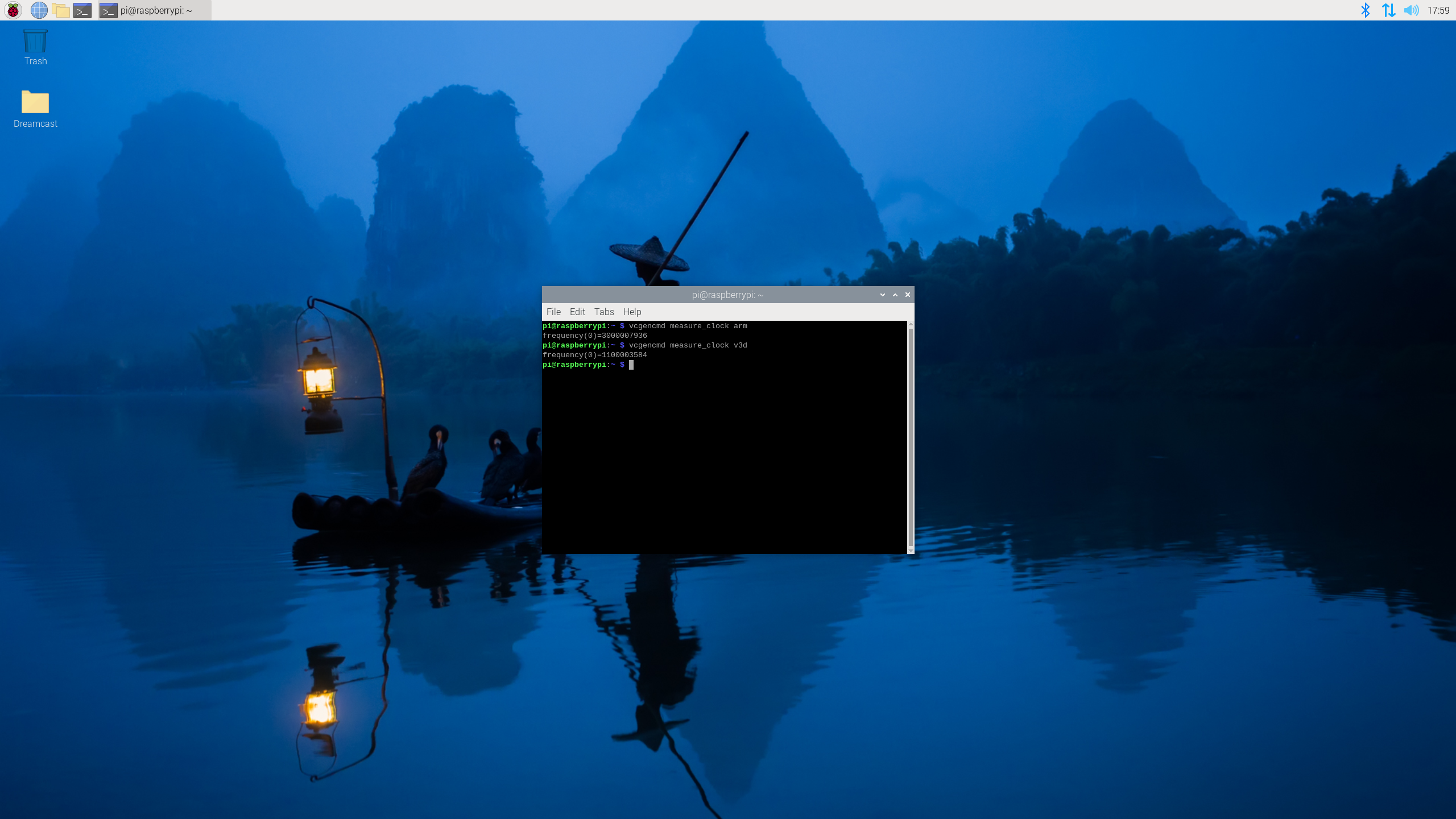

We were also able to push the GPU to 1,100 MHz.

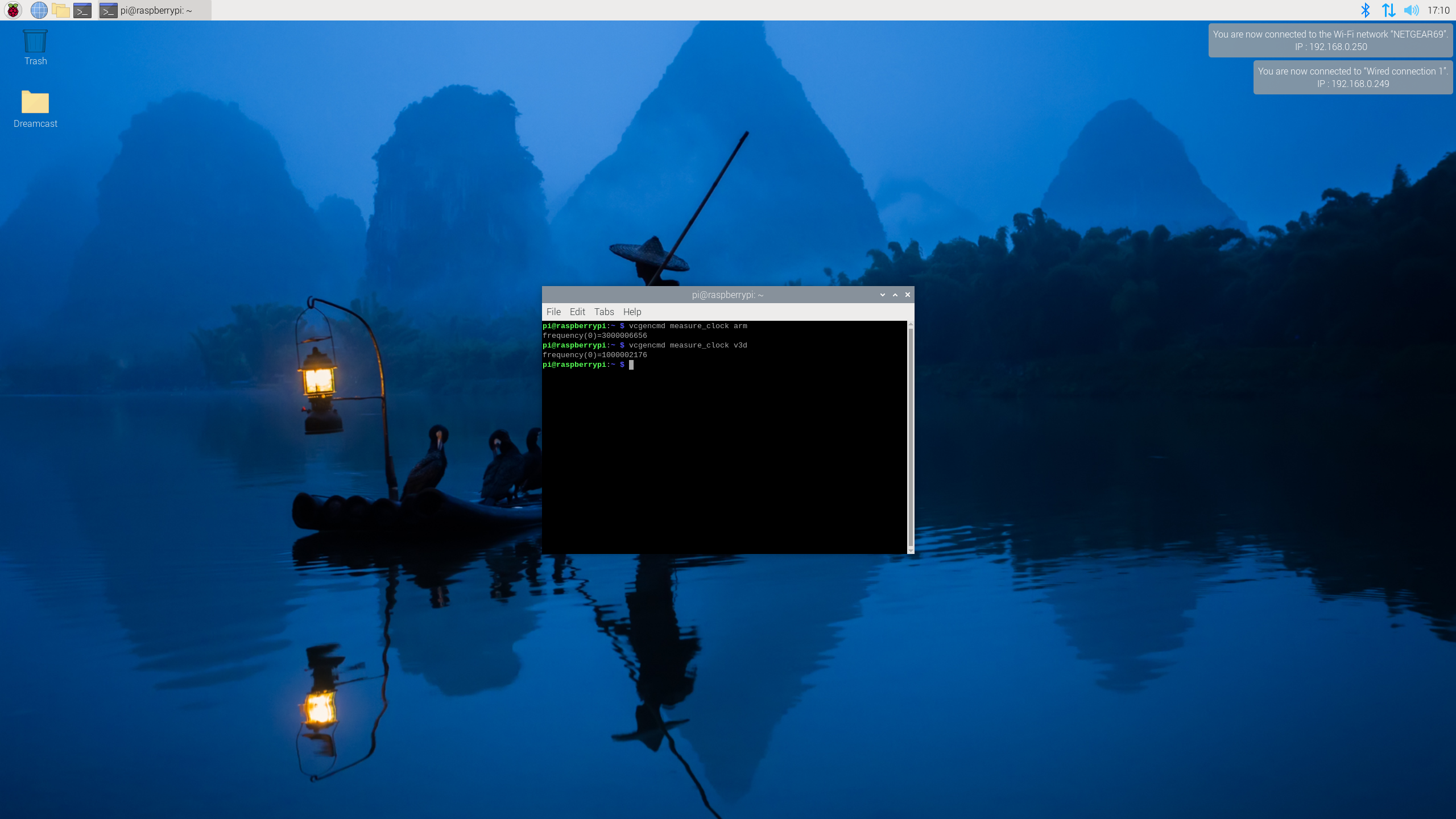

The Raspberry Pi 5 is here and we have already reviewed it, pushing it to its limits, or so we thought. Just how far can you push a Raspberry Pi 5? We set out to overclock both the 2.4 GHz Arm Cortex-A76 64-bit CPU and the new 800 MHz VideoCore VII GPU, and found we could push them to 3 GHz and 1.1 GHz, respectively.

With the overclocks, we got noticeably better performance -- up to 25% in some processor-heavy workloads. However, on graphics-intensive tasks such as video streaming or gaming, we didn't see many gains.

Overclocking CPU and GPU

In testing for our review, we had already proven that our Raspberry Pi 5 could stably overclock to 3 GHz (silicon lottery allowing), but we had never overclocked the VideoCore VII GPU. So after a quick "sanity test" at 900 MHz to see if the GPU was overclockable, we decided to go for broke and pushed the VideoCore VII to 1 GHz. No overvolting, no warranty-breaking hackery, just a few lines of text in the config.txt file, and we had a free speed boost.
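The article does not reproduce the exact config.txt lines, so the following is only a sketch of what they could look like. It assumes the documented `arm_freq` and `gpu_freq` options (values in MHz) are the ones involved; the helper name `overclock_config` is hypothetical, and on current Raspberry Pi OS releases the file is typically found at /boot/firmware/config.txt.

```python
def overclock_config(arm_mhz: int, gpu_mhz: int) -> str:
    """Render config.txt lines for a CPU/GPU overclock.

    Assumption: arm_freq and gpu_freq (both in MHz) are the relevant
    config.txt keys; the article does not list the lines it used.
    """
    if arm_mhz <= 0 or gpu_mhz <= 0:
        raise ValueError("clock targets must be positive MHz values")
    return f"arm_freq={arm_mhz}\ngpu_freq={gpu_mhz}\n"


# The 3 GHz CPU / 1 GHz GPU combination described in the text.
print(overclock_config(3000, 1000), end="")
```

The printed lines would be appended to config.txt and take effect after a reboot. Whether a given board is stable at these values depends on the silicon lottery mentioned above.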

To check that the system was stable, we ran a five-minute Stressberry test. All four CPU cores were pushed to 3 GHz for the full duration. No instability, no graphical corruption, just a snappier desktop experience. During the stress test, the Pi 5 used 10 watts and reached between 69 and 74 degrees Celsius. At idle, we saw a temperature of around 46 degrees Celsius with 3 watts of power consumption.
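For readers who want to log temperatures themselves during a stress run, here is a minimal sketch. It assumes readings come from `vcgencmd measure_temp`, which prints lines shaped like `temp=46.6'C`; the function names are hypothetical, and the sample strings are illustrative inputs built from figures quoted for this test, not a captured log.

```python
import re

# `vcgencmd measure_temp` prints a line shaped like: temp=46.6'C
_TEMP_RE = re.compile(r"temp=([0-9]+(?:\.[0-9]+)?)'C")


def parse_measure_temp(line: str) -> float:
    """Extract the temperature in degrees Celsius from one output line."""
    match = _TEMP_RE.search(line)
    if match is None:
        raise ValueError(f"unrecognised temperature line: {line!r}")
    return float(match.group(1))


def summarise(lines):
    """Return (lowest, highest) temperature seen in a list of output lines."""
    temps = [parse_measure_temp(line) for line in lines]
    return min(temps), max(temps)


# Illustrative input only: strings shaped like the tool's output.
sample = ["temp=46.6'C", "temp=69.2'C"]
print(summarise(sample))
```

On a real Pi, the lines would be collected by running the command at intervals while the stress test is active.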

We used the official Raspberry Pi 5 cooler during our overclocking tests. While you can try to use one of the third-party coolers that were made for the Pi 4, they don't fit perfectly and passive cooling just won't cut it.

We should also say that, while Associate Editor Les Pounder didn't experience any instability with his Pi 5 at 3 GHz, Editor-in-Chief Avram Piltch did see his unit freeze a few times when he attempted to run benchmarks such as Geekbench 5.4. However, the stress test also ran smoothly for him.

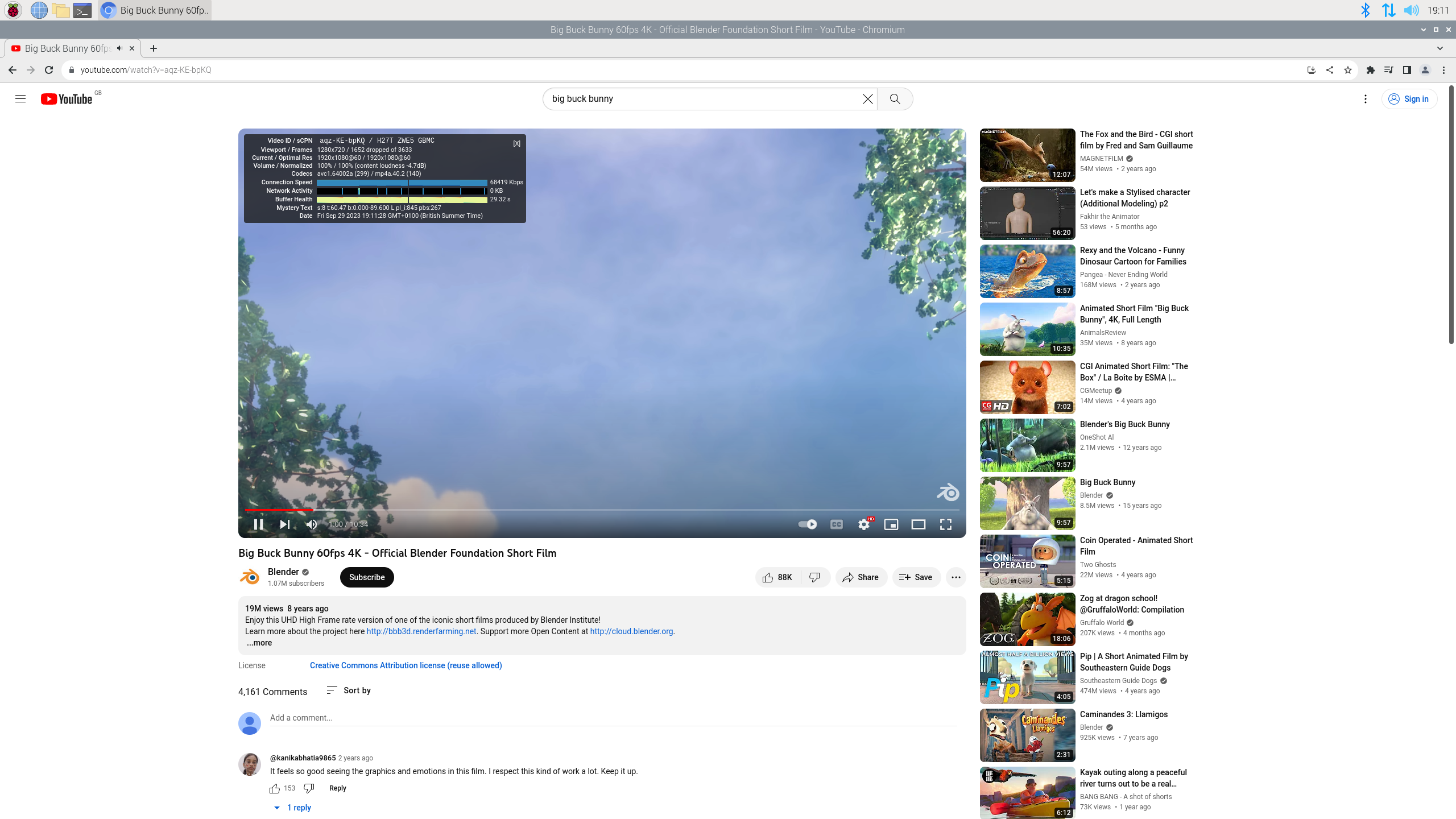

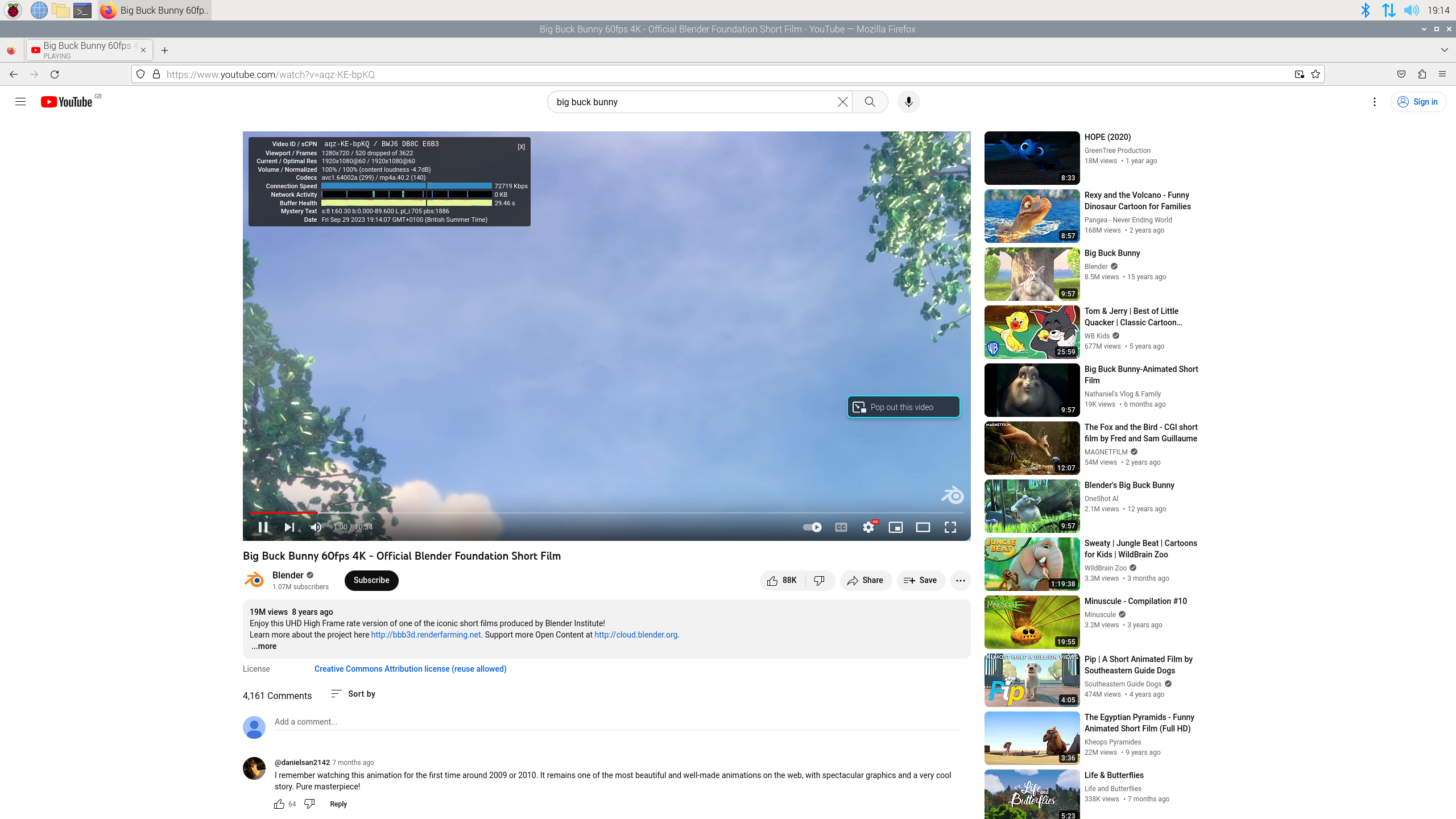

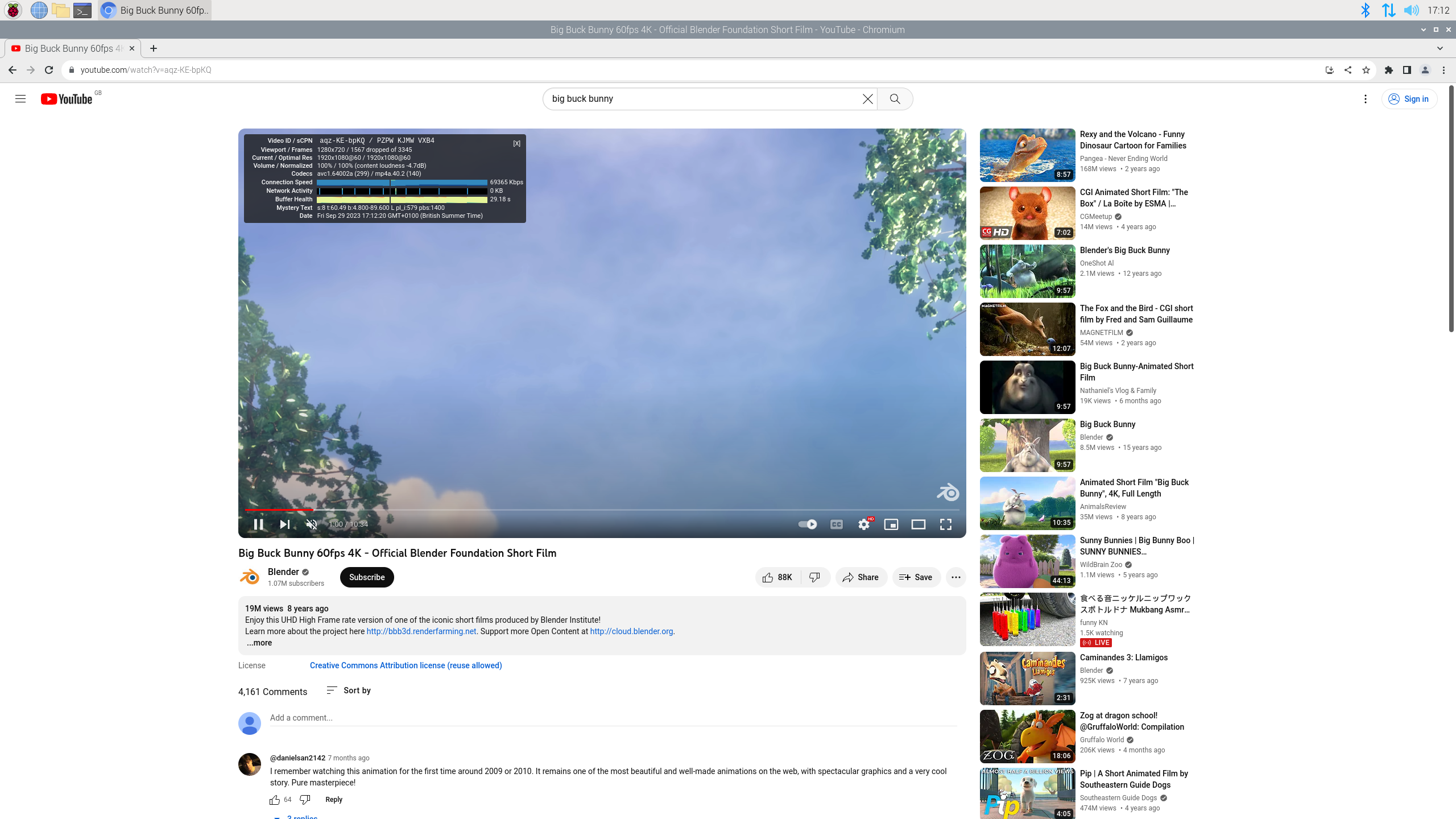

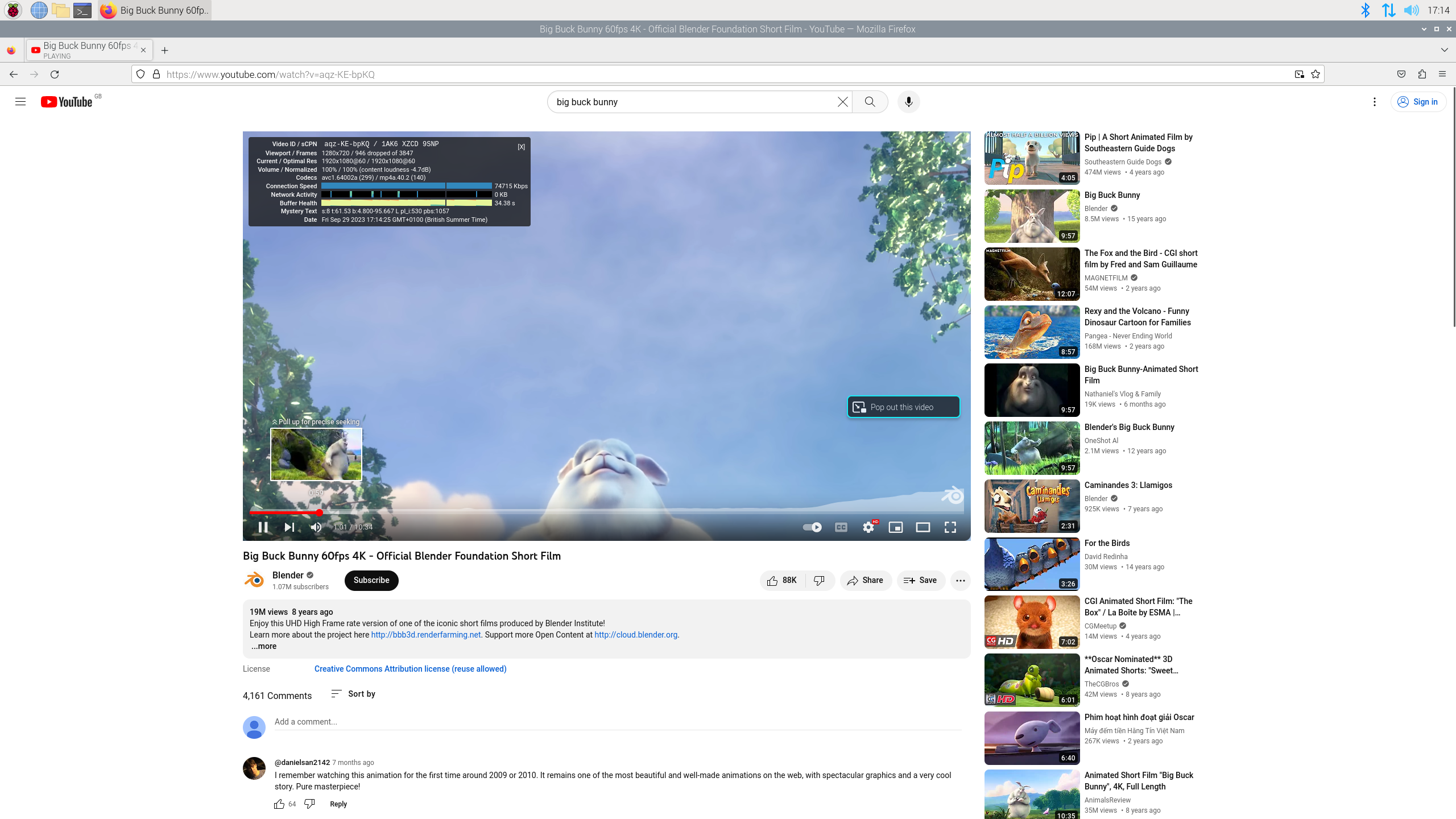

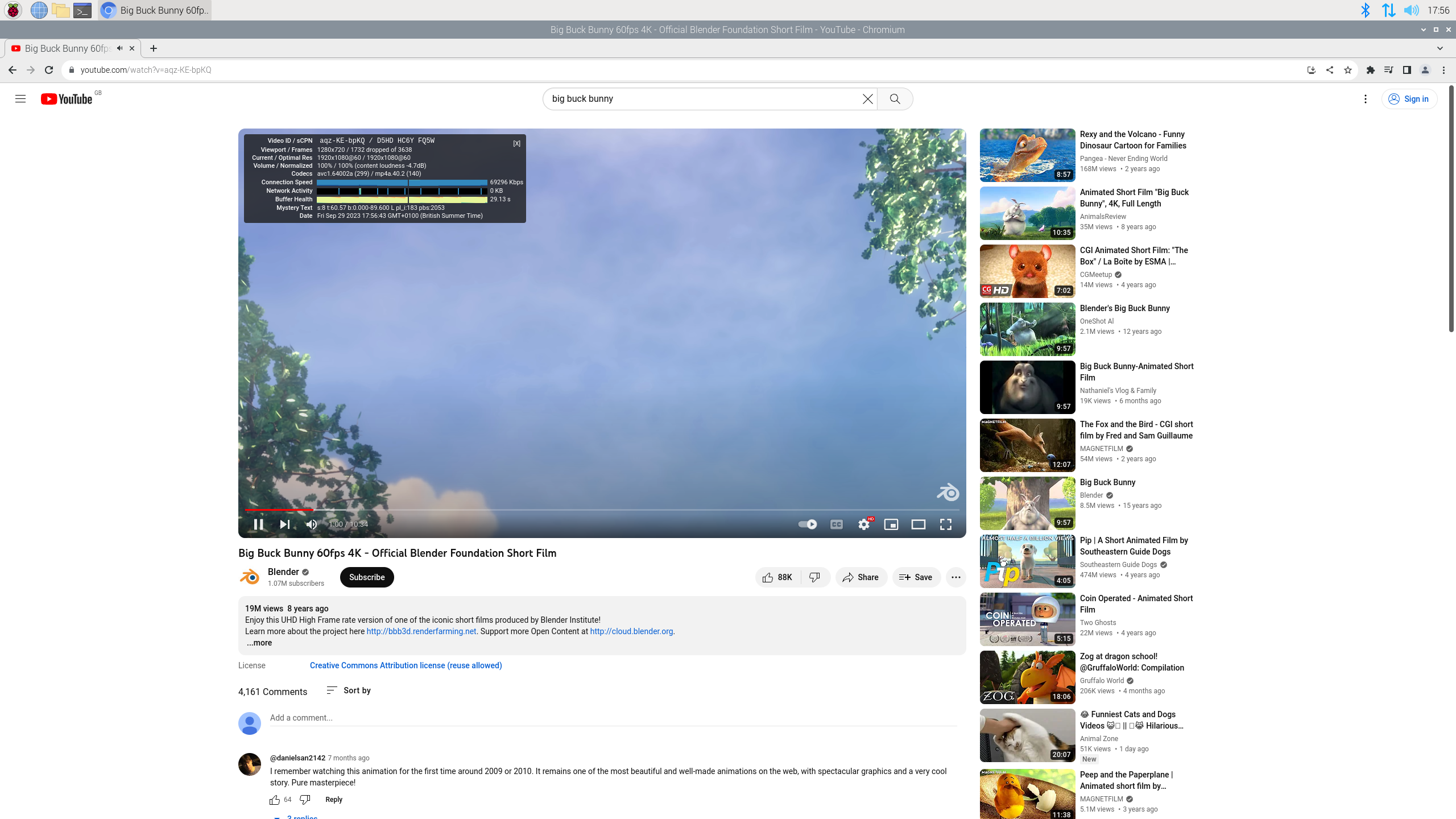

We wanted to test YouTube performance on both Firefox and Chromium, specifically the number of dropped frames. Our desktop resolution was 2560 x 1440, but we kept video playback to 1080p at 60 fps. On each browser we played Big Buck Bunny for one minute and recorded the number of dropped frames at the one-minute mark.

| CPU Speed / GPU Speed | 3 GHz / 1 GHz | 3 GHz / 1.1 GHz | Stock 2.4 GHz / 800 MHz |
| --- | --- | --- | --- |
| Firefox Dropped Frames / Total | 946 / 3847 (24.6%) | 869 / 3649 (23.8%) | 520 / 3622 (14.4%) |
| Chromium Dropped Frames / Total | 1567 / 3345 (46.8%) | 1732 / 3638 (47.6%) | 1652 / 3633 (45.5%) |

It seems that overclocking doesn't really benefit YouTube video playback. In fact, our best performance was at stock speeds using Firefox, where we saw only 14.4% dropped frames in one minute of video. With the GPU at 1 GHz (24.6%) and 1.1 GHz (23.8%), Firefox actually dropped more frames, while Chromium dropped roughly 46 to 48% of frames at every setting. There appears to be no real benefit to stressing out the GPU if all we want to do is watch YouTube videos.
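The percentages in the table can be rechecked from the raw frame counts. The sketch below does only that arithmetic; the dictionary layout and function names are our own, and the counts are copied from the table.

```python
# (dropped, total) frame counts copied from the table above.
RESULTS = {
    "Firefox": {
        "3 GHz / 1 GHz": (946, 3847),
        "3 GHz / 1.1 GHz": (869, 3649),
        "Stock": (520, 3622),
    },
    "Chromium": {
        "3 GHz / 1 GHz": (1567, 3345),
        "3 GHz / 1.1 GHz": (1732, 3638),
        "Stock": (1652, 3633),
    },
}


def dropped_pct(dropped: int, total: int) -> float:
    """Dropped frames as a percentage of all frames, to one decimal place."""
    return round(100 * dropped / total, 1)


def best_setting(browser: str) -> str:
    """Name of the clock setting with the lowest drop rate for a browser."""
    runs = RESULTS[browser]
    return min(runs, key=lambda name: runs[name][0] / runs[name][1])


for browser, runs in RESULTS.items():
    for setting, (dropped, total) in runs.items():
        print(f"{browser:9} {setting:16} {dropped_pct(dropped, total):5.1f}%")
    print(f"{browser}: lowest drop rate at {best_setting(browser)}")
```

Both browsers come out best at stock clocks, which matches the conclusion that the overclock does nothing for video playback.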

What about gaming? We'd already tested Dreamcast emulation via the ReDream emulator, and even at stock speeds it ran beautifully: at 2560 x 1440, it played Jet Set Radio at 59 to 60 fps. Could we get better performance with an overclock? Long story short, no; the fps counter stayed the same. But an overclock may help emulators for other consoles hit the magic 60 fps (we're looking at you, N64, PSP and Sega Saturn).

Where Can Overclocking Be of Benefit?

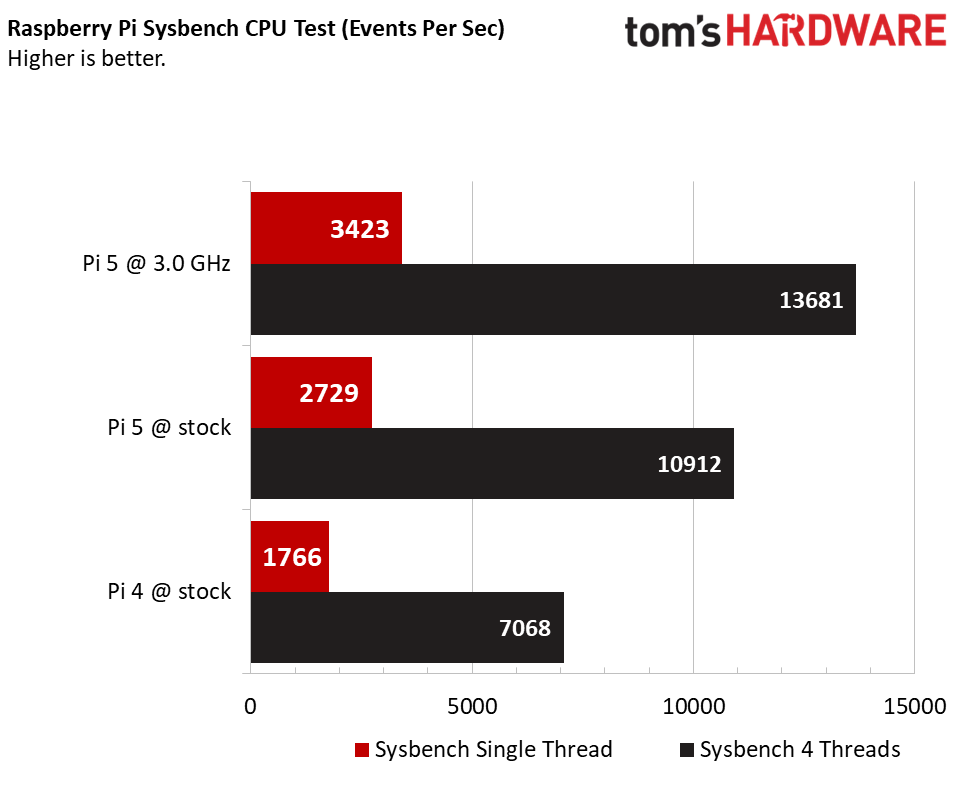

When you need raw computational power, the power to crunch numbers, a little more clock speed can be a good thing. We ran the Sysbench synthetic CPU benchmark and, at stock speeds, we got 2,729 events per second in the single-thread test and 10,921 events per second with four threads. Compare that to the Raspberry Pi 4, which scored only 1,766 and 7,068, respectively.

After overclocking the CPU to 3 GHz and the GPU to 1.1 GHz, we saw a marked improvement: a single-thread score of 3,423 and a four-thread score of 13,681. That is a 25% improvement in both the single- and four-thread tests, in line with the 25% increase in CPU clock speed. Not bad, and all it cost us was a little config file trickery.
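As a quick check on the Sysbench numbers, the gains can be computed next to the change in CPU clock. This is a minimal sketch using only the scores and clock speeds quoted in the text; the helper name `percent_gain` is our own.

```python
def percent_gain(before: float, after: float) -> float:
    """Percentage increase from `before` to `after`, to one decimal place."""
    return round((after / before - 1) * 100, 1)


STOCK_MHZ, OC_MHZ = 2400, 3000  # CPU clock at stock and overclocked

# (stock score, overclocked score) in events per second, from the text.
SYSBENCH = {
    "single thread": (2729, 3423),
    "four threads": (10921, 13681),
}

print(f"CPU clock: +{percent_gain(STOCK_MHZ, OC_MHZ)}%")
for name, (stock, overclocked) in SYSBENCH.items():
    print(f"{name}: +{percent_gain(stock, overclocked)}%")
```

Both Sysbench gains land within half a percentage point of the clock increase, which suggests this benchmark scales almost linearly with CPU frequency.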

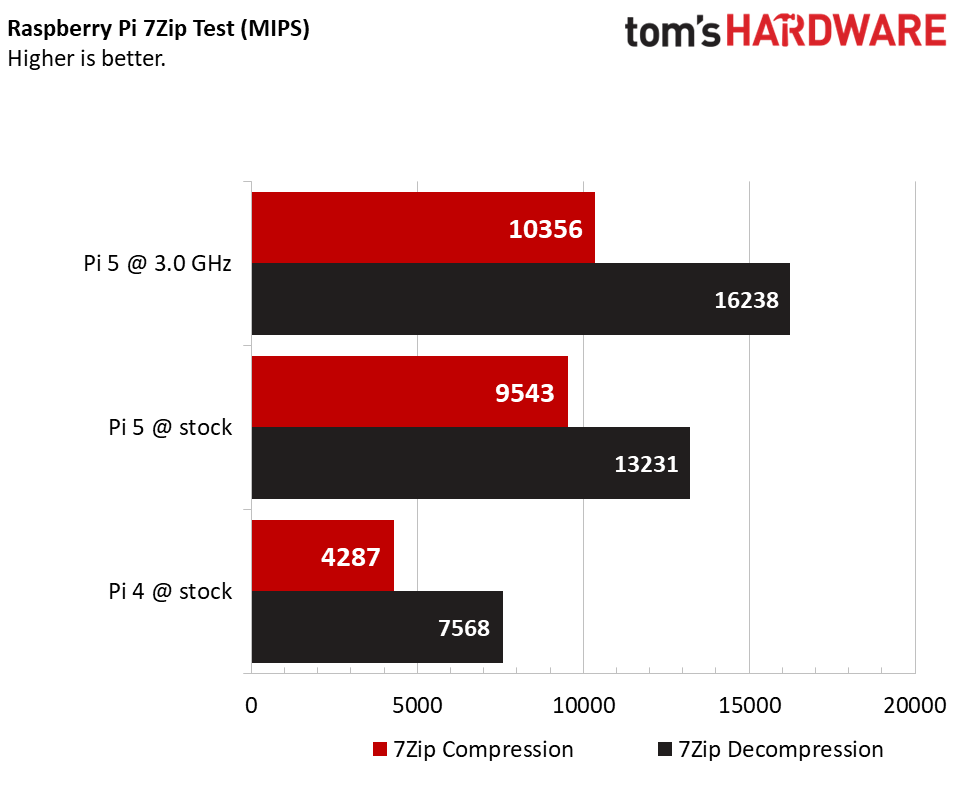

Our final test was the 7-Zip benchmark, which tests hash calculation methods and compression and encryption codecs using 7-Zip. The stock Raspberry Pi 5 performed compression at 9,543 MIPS, over double what the Raspberry Pi 4 (4,287 MIPS) could achieve. For decompression, the Pi 5 managed 13,231 MIPS, about 1.75 times the Pi 4's score of 7,568 MIPS.

What performance boost does overclocking afford us? In compression we saw a score of 10,356 MIPS, an improvement of about 8.5% over stock. For decompression we recorded a score of 16,238 MIPS, a healthy boost of roughly 23%.
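The 7-Zip results involve two comparisons: Pi 5 against Pi 4 at stock, and the overclocked Pi 5 against the stock Pi 5. The sketch below works both out from the MIPS scores quoted in the text; the dictionary keys and the `compare` helper are hypothetical names chosen for illustration.

```python
# 7-Zip scores in MIPS, copied from the text.
SEVEN_ZIP_MIPS = {
    "compression": {"pi4": 4287, "pi5_stock": 9543, "pi5_oc": 10356},
    "decompression": {"pi4": 7568, "pi5_stock": 13231, "pi5_oc": 16238},
}


def compare(scores: dict) -> tuple:
    """Return (Pi 5 stock / Pi 4 ratio, overclock gain in percent)."""
    generational = round(scores["pi5_stock"] / scores["pi4"], 2)
    overclock_pct = round((scores["pi5_oc"] / scores["pi5_stock"] - 1) * 100, 1)
    return generational, overclock_pct


for test, scores in SEVEN_ZIP_MIPS.items():
    ratio, gain = compare(scores)
    print(f"{test}: Pi 5 is {ratio}x Pi 4 at stock; overclock adds {gain}%")
```

Compression gains far less from the overclock than decompression does. One plausible reason is that compression is limited by something other than CPU clock, such as memory, though the article does not test that.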

We've yet to test AI / Machine Vision. How would TensorFlow perform on the Raspberry Pi 5? On the Pi 4 it ran OK, but a low frame rate crippled its performance. Could the Raspberry Pi 5 unlock a lower-cost platform for AI / ML?

Les Pounder is an associate editor at Tom's Hardware. He is a creative technologist and for seven years has created projects to educate and inspire minds both young and old. He has worked with the Raspberry Pi Foundation to write and deliver their teacher training program "Picademy".

- bit_user: Not a word about what cooling solution was used, temperatures, or how it affected power consumption?

  This being Tom's Hardware, I had to check several times! I hope the article gets updated with some of this info.

  : )

- LabRat 891: Now that the CPU is OC'd, take the M.2 HAT and adapt a x4 Uplink PCIe switch/HBA, and start adding GPGPUs.

- bit_user:

  LabRat 891 said: Now that the CPU is OC'd, take the M.2 HAT and adapt a x4 Uplink PCIe switch/HBA, and start adding GPGPUs.

  Toms' Raspberry Pi 5 Live Blog article linked to one where Jeff Geerling tried a variety of things, ultimately working up to dGPUs:

  https://www.jeffgeerling.com/blog/2023/testing-pcie-on-raspberry-pi-5

- apiltch:

  bit_user said: Not a word about what cooling solution was used, temperatures, or how it affected power consumption? This being Tom's Hardware, I had to check several times! I hope the article gets updated with some of this info. : )

  Thanks (and I mean this) for holding our feet to the fire. I'll put some more detail in. I guess we thought it was implied but we should have said that we used the official Raspberry Pi 5 cooler. In our review, we noted that this is really the only cooler that fits right now (you can awkwardly try to put a Raspberry Pi 4 cooler on it but it doesn't fit correctly).

  Les mentioned some of this in the review that we should have put into the breakout overclocking article as well. He said "At 3 GHz the Raspberry Pi 5 had an idle temperature of 46.6°C and consumed 3 Watts. Under stress the Raspberry Pi 5 hit a top temperature of 69.2°C and consumed 10 Watts."

- Giroro: I want to see the Pi 5 performance compared to Pi 4, when neither has a heat sink or fan. Not just thermals, but some performance numbers.

- bit_user:

  apiltch said: I guess we thought it was implied but we should have said that we used the official Raspberry Pi 5 cooler.

  Indeed, that's what I thought most likely, but I hate making assumptions. Furthermore, your Raspberry Pi 5 Live Blog article did feature that 52Pi Ice Tower cooler.

  apiltch said: idle temperature of 46.6°C and consumed 3 Watts. Under stress the Raspberry Pi 5 hit a top temperature of 69.2°C and consumed 10 Watts.

  Fair point. I did read that very shortly after it was posted, having stumbled across the launch coverage just as I was about to go to bed. 😫

  Respect to you and your staff for covering that midnight launch! Actually, when did the embargo lift? Was it like 8 AM UK?

- mechkbfan: How do these benchmarks compare to x86 CPU/GPU? Are we up to Intel Sandy Bridge laptop performance now?

- brandonjclark: This is not an important comment or anything, but I REALLY liked the clip art used for this article.

- bit_user:

  brandonjclark said: This is not an important comment or anything, but I REALLY liked the clip art used for this article.

  Good point. I think I sort of noticed it, but was too eager to get into the content.

  (Image credit: Future / Pexels / Openclipart)

  Ah, that's a WebP. Which reminds me about a recent critical vulnerability. Make sure your browsers are up to date, people!

  "Critical libwebp Vulnerability Under Active Exploitation - Gets Maximum CVSS Score"
  https://thehackernews.com/2023/09/new-libwebp-vulnerability-under-active.html

  I know cybersecurity isn't really Toms' brief, but I am a bit surprised not to see that mentioned here!