Programmer builds homemade GPU, shows it off with 3D graphics and physics engine made from scratch

3D game engines can be built from scratch too, not just graphics cards.

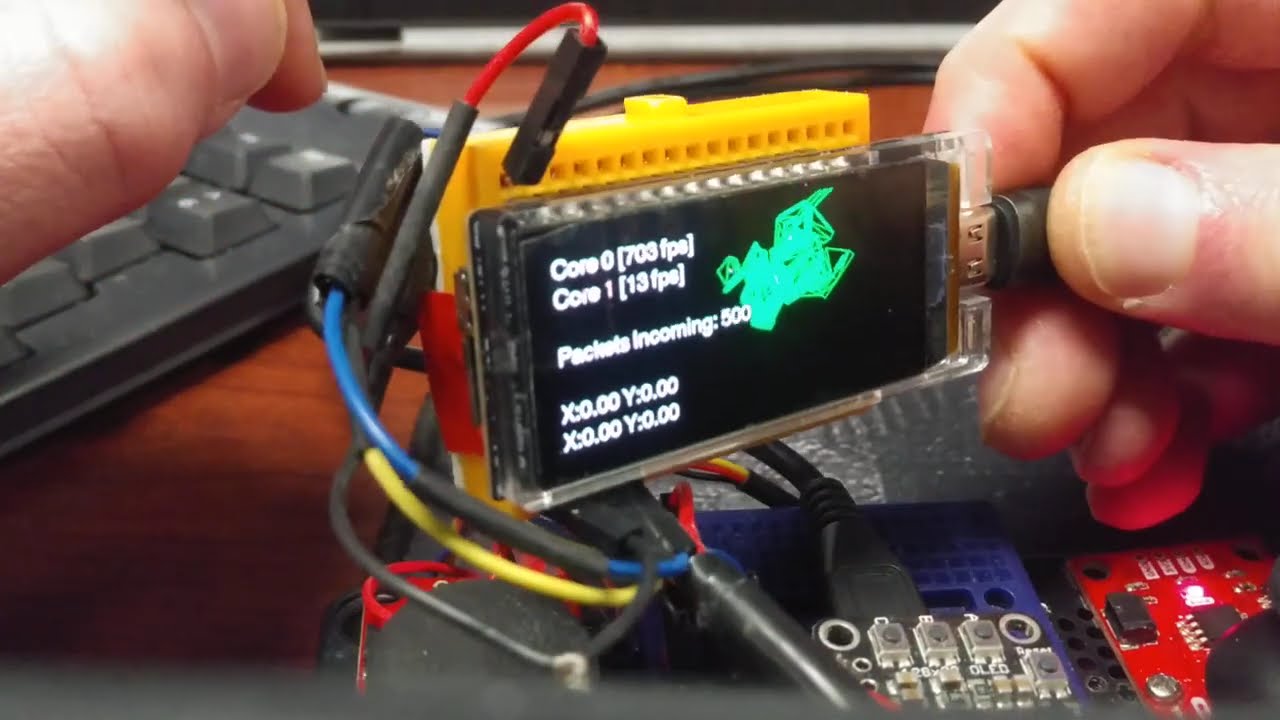

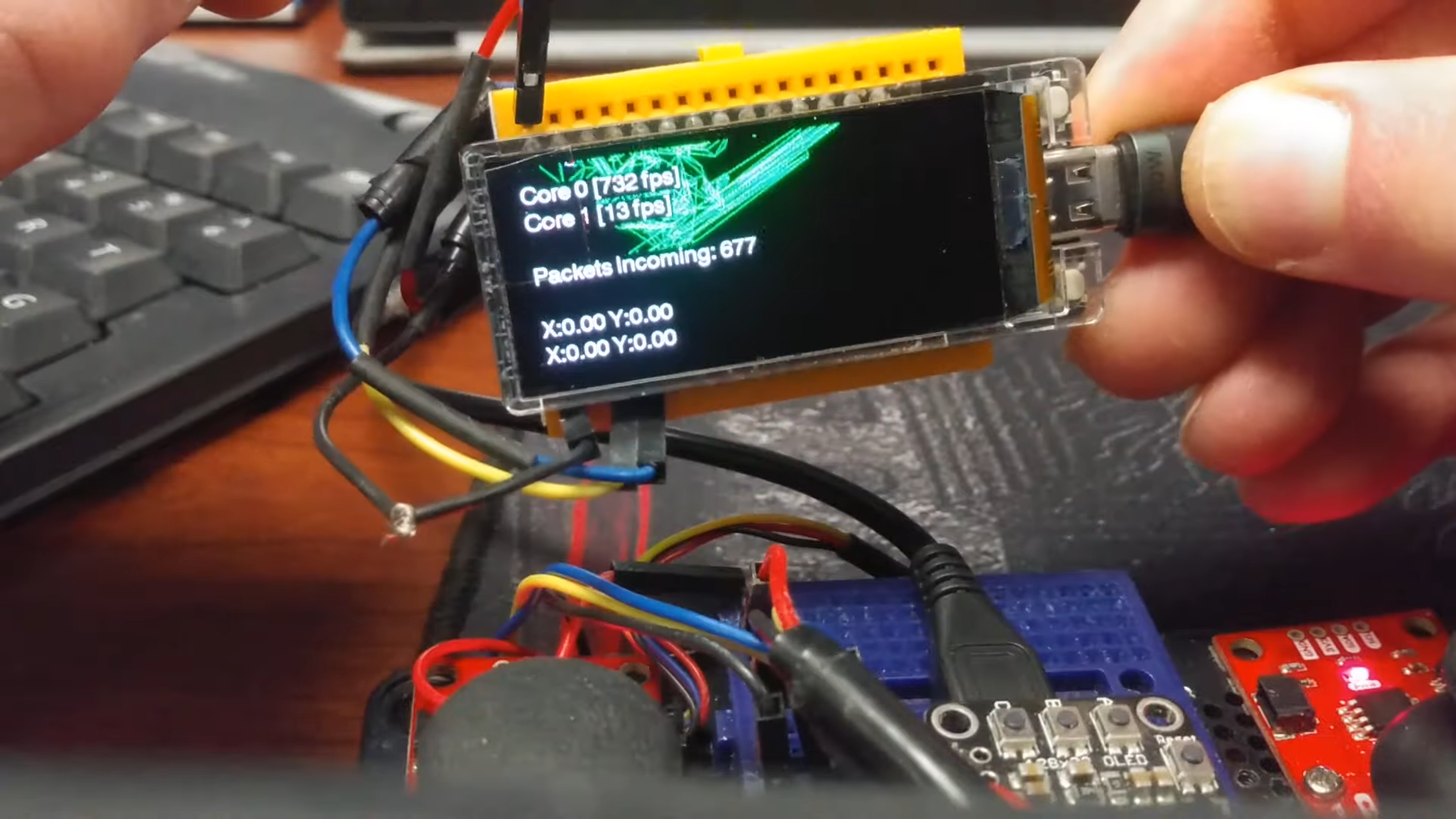

Self-taught computer programmer Alex Fish has published a demonstration of a 3D graphics and physics engine he wrote entirely from scratch, running on a GPU he built from basic parts. The device has a built-in screen and thumbsticks for controlling the on-screen graphics.

This story is similar to the homemade FuryGPU we covered a week ago, but Alex Fish's project is geared toward the software side of GPU development rather than the hardware alone.

The hardware is built from off-the-shelf parts that anyone can buy: a 1.91-inch AMOLED display with a wireless development board attached, SparkFun joysticks, and a SparkFun Qwiic Mux breakout board.

While the hardware isn't completely custom, the 3D engine running on it is. Alex built the 3D graphics and physics engines (dubbed the ESPescado engine) from scratch using C++ and OpenGL. Even the libraries for vector and matrix math were written from scratch. The physics and geometry are computed in 3D; to draw them on a flat display, the engine applies a perspective projection matrix followed by perspective division, which maps 3D points to 2D screen coordinates so that distant objects appear smaller. These are the fundamentals of 3D graphics.
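To make the projection step concrete, here is a minimal C++ sketch of a perspective projection matrix followed by perspective division. It is not taken from the ESPescado source: the names `Vec4`, `Mat4`, `mul`, `perspective`, `perspectiveDivide`, and `toScreen` are hypothetical, and the matrix assumes the common OpenGL-style convention in which the camera looks down the negative Z axis.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical minimal types for illustration only.
struct Vec4 { float x, y, z, w; };
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major: m[row][col]

// Multiply a 4x4 matrix by a column vector.
Vec4 mul(const Mat4& m, const Vec4& v) {
    return {
        m[0][0] * v.x + m[0][1] * v.y + m[0][2] * v.z + m[0][3] * v.w,
        m[1][0] * v.x + m[1][1] * v.y + m[1][2] * v.z + m[1][3] * v.w,
        m[2][0] * v.x + m[2][1] * v.y + m[2][2] * v.z + m[2][3] * v.w,
        m[3][0] * v.x + m[3][1] * v.y + m[3][2] * v.z + m[3][3] * v.w
    };
}

// OpenGL-style perspective matrix (assumed convention). fovY is in radians.
Mat4 perspective(float fovY, float aspect, float zNear, float zFar) {
    const float f = 1.0f / std::tan(fovY * 0.5f);
    Mat4 m{};  // all elements start at zero
    m[0][0] = f / aspect;
    m[1][1] = f;
    m[2][2] = (zFar + zNear) / (zNear - zFar);
    m[2][3] = (2.0f * zFar * zNear) / (zNear - zFar);
    m[3][2] = -1.0f;  // copies -z into w, which drives the divide below
    return m;
}

// Perspective division: clip space to normalized device coordinates (NDC).
Vec4 perspectiveDivide(const Vec4& clip) {
    assert(clip.w != 0.0f);  // points at the camera origin cannot be projected
    return { clip.x / clip.w, clip.y / clip.w, clip.z / clip.w, 1.0f };
}

// Map NDC x and y in [-1, 1] to pixel coordinates (screen y grows downward).
struct Pixel { int x, y; };
Pixel toScreen(const Vec4& ndc, int width, int height) {
    return {
        static_cast<int>((ndc.x * 0.5f + 0.5f) * (width - 1) + 0.5f),
        static_cast<int>((1.0f - (ndc.y * 0.5f + 0.5f)) * (height - 1) + 0.5f)
    };
}
```

Dividing by `w` is what makes distant objects shrink: a point twice as far from the camera ends up with twice the `w`, so its projected x and y are halved.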

The homemade 3D engine also uses meshes. A mesh is built from points (vertices) joined by lines (edges) into triangles, and the triangles together form the mesh. In video-game terms, meshes define the shape of the objects and terrain we see in-game. Each mesh is placed into the "world" with a model-to-world matrix (TRS), which translates, rotates, and scales every point of the mesh from local space to world space.
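As a rough illustration of the mesh and TRS ideas, the sketch below stores a mesh as shared vertices plus index triples and builds a model-to-world matrix as translation times rotation times scale. All names (`Mesh`, `Triangle`, `trs`, `toWorld`, and so on) are hypothetical rather than the engine's own, and rotation is limited to the Y axis to keep the example short.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major: m[row][col]

// A mesh as shared vertices plus one index triple per triangle.
struct Triangle { int a, b, c; };
struct Mesh {
    std::vector<Vec3> vertices;       // positions in local (model) space
    std::vector<Triangle> triangles;  // indices into `vertices`
};

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 translation(const Vec3& t) {
    Mat4 m = identity();
    m[0][3] = t.x; m[1][3] = t.y; m[2][3] = t.z;
    return m;
}

Mat4 scaling(const Vec3& s) {
    Mat4 m = identity();
    m[0][0] = s.x; m[1][1] = s.y; m[2][2] = s.z;
    return m;
}

// Rotation about the Y axis only (an assumption made for brevity).
Mat4 rotationY(float radians) {
    const float c = std::cos(radians), s = std::sin(radians);
    Mat4 m = identity();
    m[0][0] = c;  m[0][2] = s;
    m[2][0] = -s; m[2][2] = c;
    return m;
}

// Model-to-world matrix: points are scaled first, then rotated, then translated.
Mat4 trs(const Vec3& t, float yaw, const Vec3& s) {
    return multiply(translation(t), multiply(rotationY(yaw), scaling(s)));
}

// Transform a point (implicit w = 1) by a 4x4 matrix.
Vec3 transformPoint(const Mat4& m, const Vec3& p) {
    return {
        m[0][0] * p.x + m[0][1] * p.y + m[0][2] * p.z + m[0][3],
        m[1][0] * p.x + m[1][1] * p.y + m[1][2] * p.z + m[1][3],
        m[2][0] * p.x + m[2][1] * p.y + m[2][2] * p.z + m[2][3]
    };
}

// Return the mesh's vertices moved from local space to world space.
std::vector<Vec3> toWorld(const Mesh& mesh, const Mat4& modelToWorld) {
    const int n = static_cast<int>(mesh.vertices.size());
    for (const Triangle& t : mesh.triangles) {
        // Debug check: every triangle must reference existing vertices.
        assert(t.a >= 0 && t.a < n && t.b >= 0 && t.b < n && t.c >= 0 && t.c < n);
    }
    std::vector<Vec3> out;
    out.reserve(mesh.vertices.size());
    for (const Vec3& v : mesh.vertices) out.push_back(transformPoint(modelToWorld, v));
    return out;
}
```

Because the three transforms are folded into one matrix, each vertex costs a single matrix-vector multiply no matter how the object is scaled, turned, or positioned.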

Alex was able to share a live demo of this homemade graphics engine working on his tiny 1.91-inch GPU development kit. A YouTube video shows the GPU displaying a green triangular object on a black background. With the two joysticks he connected to the GPU, he was able to move the object around, similar to a third-person viewpoint in a video game.

The demo itself isn't groundbreaking, but it shows that a working 3D graphics engine can be written from scratch. If Alex has connections with Dylan Barrie, creator of the FuryGPU, we could potentially see a full-blown 3D video game run entirely on homemade hardware and software. If you want to check out Alex's 3D engine, he has created a couple of GitHub pages with details on the engine as well as the hardware he used to run it.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


Eximo: My money is on AI being the next big thing that Microsoft adds to DX. They will probably brand it as Direct AI or something.

TechyInAZ:

-Fran- said: This reminds me... Where's DX13? LOL Regards.

I feel like DirectX has turned into a service, similar to Windows LOL. Because by now, yeah, there would have definitely been a DX13.

vior331: This reads like the article author has never heard of software 3D-renderers before :p This particular one happening to be run on a microcontroller (personally I find calling this a "GPU" a stretch, given the microcontroller just so happens to at the current moment be running software that outputs IO that the screen-side controller can interpret as input).

I should mention that the "written from scratch" part (that isn't commonly written in 3D-renderer implementations these days) really is primarily just a couple-hundred lines of point, line and triangle-fill drawing code (that substitute the original OpenGL calls the creator used previously). (Actually outputting the buffer to the screen was done using a pre-existing library.) The implementation of meshes, transform/projection matrices, a 3D-math library, physics, ... is all basic game/graphics programming that is commonly written for/along-side 3D-renderers (anybody that's followed decent e.g. OpenGL and game-dev tutorials has likely implemented these).

I congratulate the person on succeeding with this project (unironically :)) and the article author for having managed to pass this as TH-tier news.

bit_user:

vior331 said: This particular one happening to be run on a microcontroller (personally I find calling this a "GPU" a stretch, given the microcontroller just so happens to at the current moment be running software that outputs IO that the screen-side controller can interpret as input).

Thank you for looking into this. Imagine my amazement when I read an article about someone building a GPU, and yet the details of the actual GPU are the part conspicuously missing!! Simply beyond ridiculous.

vior331 said: I should mention that the "written from scratch" part ... really is primarily just a couple-hundred lines of point, line and triangle-fill drawing code (that substitute the original OpenGL calls the creator used previously).

Back when I first started dabbling with 3D graphics, this was the only option. OpenGL existed, but not on any hardware I could afford.

DieReineGier: Well, back in the day when CPU clocks were below 100 MHz I painted some shaded surfaces made from meshed triangles. I used homogeneous coordinates to merge all mathematical operations needed to project the model to the 2D screen space into the very same matrix. I wouldn't even have called it the core of a 3D engine.

The article seems to oversell a little, doesn't it?

However, I like the idea of using the acceleration sensor to control the rotation of the model!

bit_user:

DieReineGier said: Well, back in the day when CPU clocks were below 100 MHz I painted some shaded surfaces made from meshed triangles. I used homogeneous coordinates to merge all mathematical operations needed to project the model to the 2D screen space into the very same matrix. I wouldn't have called it even the core of a 3D engine.

Same. I didn't bother with Z-buffering, though. I just depth-sorted my triangles.

Sethius: Totally agree with this. There was a time, obviously before this writer was around, when you just did all this math programming because there was no such thing as a graphics/game engine. Kudos that they assembled a bunch of parts and got it working, but it's hardly something to write home about. What's next? Person discovered who can answer math questions without a calculator?

Peter Cockerell: GPU. You keep using that word. I do not think it means what you think it means.

AkroZ: Since when has making a 3D application with OpenGL become a rarity? His project is more about using motion tracking than 3D rendering. There are people coding rasterisation or ray tracing libraries; we don't talk about it much because it is not so hard to do.

On FuryGPU, he made the hardware, created a Windows driver and a rendering library, and modified Doom to use his own rendering library.

It is to be noted that the original Doom does not use the GPU; it has its own rendering engine for pseudo-3D (based on a BSP tree, similar to ray tracing) and is able to run on a 25 MHz CPU.