SIPEW 2008: All About Benchmarking

Power Efficiency Testing with SPECpower_ssj2008

Power consumption was not a real issue in desktop systems until Intel pushed power requirements to unreasonable levels with its Pentium 4 and Pentium D processors, but it has been taken seriously in server farms for many years. Servers need power to run, and rising power requirements also force data center and server farm operators to spend even more energy on air conditioning. High-density, high-performance computing requires efficiency, so SPEC decided to develop a power efficiency benchmark. SPECpower_ssj2008 was released at the end of 2007. It relates performance to power consumption using the Java benchmark SPECjbb2005.

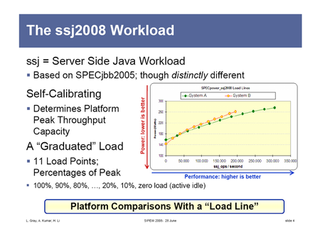

SPECpower_ssj2008 is based on SPECjbb2005, SPEC's Java server benchmark. Because the workload runs in Java, it can be used on all sorts of systems, which makes it a flexible choice. However, the workload had to be modified so extensively that SPECpower_ssj2008 and SPECjbb2005 results cannot be compared with each other.

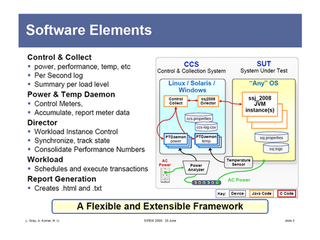

SPEC calls the test system the SUT (System Under Test) and the monitoring system the CCS (Control and Collection System).

To run SPECpower_ssj2008, you need a power meter and a second system that tracks power consumption. SPEC refers to this monitoring system as the Control and Collection System (CCS). As you can see, the benchmark consists of a server part running on the SUT (the ssj_2008 JVM) and a client instance, the ssj2008 Director. The CCS starts the benchmark and handles the measurements using the power meter and a thermometer.
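
A large part of the CCS's measurement job is turning a stream of power meter readings into one average power figure per test interval. The Python sketch below illustrates that step under stated assumptions. The `PowerSample` type and the `average_power` function are hypothetical. The idea that readings arrive as evenly spaced, timestamped samples is also an assumption. None of this reflects SPEC's actual CCS code or data format.

```python
from dataclasses import dataclass


@dataclass
class PowerSample:
    # Hypothetical record format for one power meter reading.
    timestamp_s: float  # seconds since the start of the run
    watts: float        # reading reported by the power meter


def average_power(samples: list[PowerSample], start_s: float, end_s: float) -> float:
    """Mean power over all samples with start_s <= timestamp < end_s.

    Assumes the meter samples at a fixed rate, so a plain mean is adequate.
    """
    window = [s.watts for s in samples if start_s <= s.timestamp_s < end_s]
    if not window:
        raise ValueError("no power samples fall inside the requested interval")
    return sum(window) / len(window)


# Hypothetical readings: one sample per second, rising by 1 W each second.
samples = [PowerSample(float(t), 200.0 + t) for t in range(10)]
print(average_power(samples, 2.0, 6.0))  # 203.5
```

A plain arithmetic mean is only correct if readings are evenly spaced. With irregular sampling, a time-weighted average would be the safer choice.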

Performance Calibration and Measurements

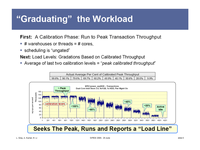

First, the ssj Director runs a peak transaction analysis to calibrate the system under test. Once the peak is known, the benchmark runs all of its tests and reports a load line like the one in the image below.

To calibrate the test system, the ssj2008 Director starts one worker per processing core and determines the peak throughput on the benchmark. It then runs the test suite at 100% load and in 10% steps all the way down to active idle, in which the system is running but waiting for work. SPEC chose this approach because servers typically run at low utilization but almost never sit completely idle. To avoid measuring the system while it is in a transitional state, each measurement starts a few seconds after the workload has begun and ends shortly before that part of the run finishes.
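
The load schedule described above can be sketched in Python as follows. The functions `target_loads`, `target_throughputs`, and `measurement_window` are hypothetical names. The durations in the example are placeholders, not SPEC's actual run-rule values. The sketch only shows two things: how each target load is derived from the calibrated peak, and how the transitional start and end of each level are left out of the measurement.

```python
def target_loads() -> list[int]:
    """Target loads in percent: 100, 90, ..., 10, then 0 for active idle."""
    return list(range(100, 0, -10)) + [0]


def target_throughputs(calibrated_peak_ops: int) -> list[float]:
    """Target throughput (operations per second) for each load level,
    expressed as a fraction of the peak found during calibration."""
    return [calibrated_peak_ops * pct / 100 for pct in target_loads()]


def measurement_window(level_start_s: float, level_duration_s: float,
                       ramp_up_s: float, ramp_down_s: float) -> tuple[float, float]:
    """Return (start, end) of the measured part of one load level,
    skipping its transitional beginning and end."""
    start = level_start_s + ramp_up_s
    end = level_start_s + level_duration_s - ramp_down_s
    if end <= start:
        raise ValueError("ramp-up and ramp-down leave nothing to measure")
    return start, end


# Hypothetical numbers, for illustration only.
print(target_loads())                      # [100, 90, 80, 70, 60, 50, 40, 30, 20, 10, 0]
print(target_throughputs(250_000)[:3])     # [250000.0, 225000.0, 200000.0]
print(measurement_window(0, 120, 10, 10))  # (10, 110)
```

Expressing every level as a fraction of the calibrated peak makes the schedule scale automatically with the system under test. Both a small and a large server run the same 11 intervals, just at different absolute throughputs.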

Java Performance Per Watt for 0-100% Load

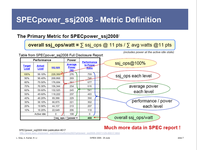

This is how SPECpower_ssj2008 calculates performance per watt: it adds up the performance results (in operations per second) and divides that total by the sum of the average power draw of each of the 11 test runs. The result is reported as ssj2008 operations per watt.
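
The calculation reduces to a ratio of two sums: total throughput over total average power. It is not an average of the individual per-interval ratios. The Python sketch below assumes the per-interval results are already available as two equally long lists, one throughput value and one average power value per interval. The function name `overall_ops_per_watt` is hypothetical, and the numbers in the example are made up for illustration. Because active idle does no work, it adds nothing to the numerator, but its power still counts in the denominator. Idle power therefore directly lowers the score.

```python
def overall_ops_per_watt(ssj_ops: list[float], avg_watts: list[float]) -> float:
    """Sum of throughput over all intervals divided by the sum of average power
    over the same intervals (active idle contributes 0 ops but its power counts)."""
    if len(ssj_ops) != len(avg_watts):
        raise ValueError("need one throughput and one power value per interval")
    total_watts = sum(avg_watts)
    if total_watts <= 0:
        raise ValueError("total power must be positive")
    return sum(ssj_ops) / total_watts


# Hypothetical results for 100%, 90%, ..., 10% and active idle (11 intervals).
ops = [1000 * pct for pct in range(100, -1, -10)]  # active idle does no work: 0 ops
watts = [260, 240, 220, 200, 185, 170, 160, 150, 140, 140, 135]
print(overall_ops_per_watt(ops, watts))  # 275.0
```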

Given this workload and the test system requirements, this benchmark was clearly not designed to be run at home. But it is the first industry-standard benchmark of its kind, and it is built on the core of a widely used Java benchmark. Since a large share of enterprise applications run on Java virtual machines, its Java performance-per-watt results are highly relevant to the industry. Still, although it paints a comprehensive picture, it should not be the only tool you consult before making a significant decision.
