AI Learns Like a Pigeon, Researchers Say

Both pigeons and AI models can be better than humans at solving some complex tasks

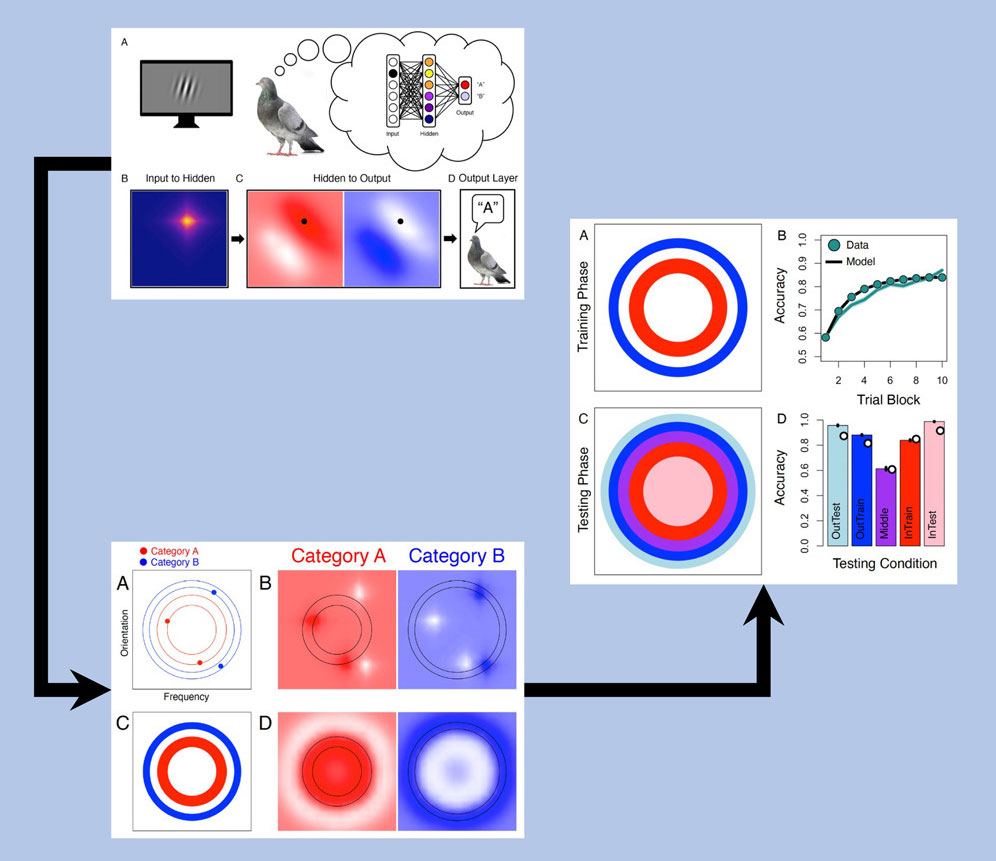

Researchers at Ohio State University have found that pigeons tackle some problems in much the same way as modern AI models. In essence, pigeons use a “brute force” learning method called associative learning. As a result, pigeons and modern AIs can both reach solutions to complex problems that befuddle human thinking patterns.

Brandon Turner, lead author of the study and professor of psychology at Ohio State University, worked with Edward Wasserman, a professor of psychology at the University of Iowa. The study was published in iScience.

Here are the key findings:

- Pigeons can solve an exceptionally broad range of visual categorization tasks

- Some of these tasks seem to require advanced cognitive and attentional processes, yet computational modeling indicates that pigeons don’t deploy such complex processes

- A simple associative mechanism may be sufficient to account for the pigeon’s success

Turner told the Ohio State news blog that the research started with a strong hunch that pigeons learn in a similar way to computer AIs, and the initial results confirmed it. “We found really strong evidence that the mechanisms guiding pigeon learning are remarkably similar to the same principles that guide modern machine learning and AI techniques,” said Turner.

A pigeon’s “associative learning” can find solutions to complex problems that are hard for humans or other primates to reach. Primate thinking is typically steered by selective attention and explicit rule use, which can get in the way of solving some problems.

For the study, pigeons were tested on four tasks. In the easier tasks, the pigeons learned the correct choices over time, and their success rates grew from about 55% to 95%. The most complex tasks saw a smaller improvement over the study period, from 55% to only 68%. Nevertheless, the results showed close parallels between pigeon performance and AI model learning performance. Both pigeon and machine learners appeared to use associative learning and error correction to steer their decisions toward success.
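To make the idea concrete, here is a minimal Python sketch of associative learning with error correction, using a simple delta-rule update. It is an illustration only, not the model used in the study: the two-feature stimuli, the category rule, the learning rate, and the function names `run_learner` and `accuracy` are all assumptions made for this example.

```python
import random


def run_learner(trials=3000, rate=0.01, seed=0):
    """Train a delta-rule associative learner on a two-category task.

    Returns a list of booleans, one per trial, marking correct choices.
    """
    rng = random.Random(seed)
    weights = [0.0, 0.0, 0.0]  # two feature weights plus a bias weight
    outcomes = []
    for _ in range(trials):
        # Hypothetical stimulus: two visual features in [-1, 1], plus a constant bias input
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1), 1.0]
        # Hypothetical category rule that depends on both features at once
        target = 1.0 if x[0] + x[1] > 0 else -1.0
        # Associative strength of this stimulus toward category +1
        prediction = sum(w * xi for w, xi in zip(weights, x))
        choice = 1.0 if prediction > 0 else -1.0
        outcomes.append(choice == target)
        # Error correction: nudge each weight in proportion to the prediction error
        error = target - prediction
        weights = [w + rate * error * xi for w, xi in zip(weights, x)]
    return outcomes


def accuracy(outcomes):
    """Fraction of correct choices in a block of trials."""
    return sum(outcomes) / len(outcomes)


if __name__ == "__main__":
    results = run_learner()
    print("first 50 trials:", accuracy(results[:50]))
    print("last 500 trials:", accuracy(results[-500:]))
```

The learner never forms an explicit rule. It only strengthens or weakens associations after each trial's feedback, so comparing the first and last blocks of trials should show accuracy rising through repetition alone.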

Turner also compared human, pigeon, and AI learning. He noted that some of the tasks would really frustrate humans, because making rules wouldn’t help simplify the problems, which could lead them to abandon the task. Meanwhile, for pigeons (and machine AIs), in some tasks “this brute force way of trial and error and associative learning... helps them perform better than humans.”

Interestingly, the study recalls that in his Letter to the Marquess of Newcastle (1646), French philosopher René Descartes argued that animals were nothing more than beastly mechanisms — bête-machines, simply following impulses from organic reactions.

The conclusion of the Ohio State blog highlighted how humans have traditionally looked down upon pigeons as dim-witted. Now we have to admit something: our latest crowning technological achievement of computer AI relies on relatively simple brute-force pigeon-like learning mechanisms.

Will this new research have any influence on computer science going forward? It seems like those involved in AI / machine learning and those developing neuromorphic computing might find some useful crossover here.

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.

Geef

Let's just hope AI doesn't start flying drones and bombing people's cars with bird AI <Mod Edit>

atomicWAR

thisisaname said: Pigeons and Ai so similar, they both do not care where their <Mod Edit> lands.

ROFL...that is SO true. I love tech, see great potential in AI but fear the industry isn't listening to the people who design/invent this stuff. People like Hinton are telling the tech sector, or anyone who will listen, that we need to pump the brakes on AI so we can better understand how AI is 'thinking'. Yet we/the tech sector blindly push forward without a proper understanding of what is actually going on in some of these AI models. I hope our soon to be AI overlords are kinder than we are....

bit_user

I wonder if humans can be trained to use this associative learning technique. If you could identify problems where it's a more effective way to learn, and then deploy it effectively, perhaps you could master certain tasks better and more quickly than others.

mitch074

bit_user said: I wonder if humans can be trained to use this associative learning technique. If you could identify problems where it's a more effective way to learn, and then deploy it effectively, perhaps you could master certain tasks better and more quickly than others.

Reading the article, it seems to be brute-force learning at its best - pigeons simply don't get bored.

Humans can master it by not getting impatient in front of repetitive, simplistic problems.

...

Like, how to tighten that bolt with a wrench.

...

I guess I'll keep trying to think outside the box, thank you.

stelarius

mitch074 said: Reading the article, it seems to be brute-force learning at its best - pigeons simply don't get bored. Humans can master it by not getting impatient in front of repetitive, simplistic problems. ... Like, how to tighten that bolt with a wrench. ... I guess I'll keep trying to think outside the box, thank you.

Uhm, school biology has taught me that pigeons are intelligent creatures that are capable of complex learning, and that humans can learn repetitive tasks in a more efficient way than pigeons by using our knowledge and understanding of the world.

Overall, the best way for us to learn is to combine our ability to think outside the box with our ability to learn from repetition))

mitch074

stelarius said: Uhm, school biology has taught me that pigeons are intelligent creatures that are capable of complex learning, and that humans can learn repetitive tasks in a more efficient way than pigeons by using our knowledge and understanding of the world. Overall, the best way for us to learn is to combine our ability to think outside the box with our ability to learn from repetition))

Pigeons ARE capable of complex learning: they can repeat a task that they've been shown. Crows (and several other corvidae) can solve puzzles. Current AIs are like pigeons, indeed, but nowhere near problem-solving intelligence.

Or, to cite a founder of AI (who insists on calling it Applied Epistemology, I would tend to agree), current AIs know "How", but we're still ways away from "Why".

bit_user

mitch074 said: Current AIs are like pigeons, indeed, but nowhere near problem-solving intelligence.

GPT-class AI can solve word problems. Seriously, how could it write code if it were incapable of problem-solving?

mitch074 said: Or, to cite a founder of AI (who insists on calling it Applied Epistemology, I would tend to agree), current AIs know "How", but we're still ways away from "Why".

Which founder and when did they say it?

mitch074

bit_user said: GPT-class AI can solve word problems. Seriously, how could it write code if it were incapable of problem-solving? Which founder and when did they say it?

Q1: pattern recognition. Don't kid yourself, 99% of the code out there is always solving the same base problems; current AIs recognize those patterns and regurgitate these solutions - they are VERY good at it. Ask them to solve a problem no one has ever solved before, you'll get garbage - current AI engines are basically probability generators, they'll find a pattern and "guess" (i.e. get the pattern with the highest possibility to match) the solution. No matching pattern for a problem? You'll get garbage.

Q2: Hermann Iline, he told me so a few months ago. I know him personally. You probably won't find anything about him in the US, as he's Russian-born and he mostly writes in French. Started studying AE in the 80's, wrote a few papers on the subject (AI nerds at the beginning of the 2000's knew him), then he veered towards social science epistemology and philosophy - you know, the next step: "why".

bit_user

mitch074 said: Q1: pattern recognition. Don't kid yourself, 99% of the code out there is always solving the same base problems; current AIs recognize those patterns and regurgitate these solutions - they are VERY good at it.

That's too simplistic. They can do arithmetic, basic reasoning, and solve word problems. It's a lot more than just pattern-matching.

mitch074 said: Ask them to solve a problem no one has ever solved before, you'll get garbage

Why is it people are so quick to dismiss AI after one bad answer or hallucination? If you don't get a good answer, there are techniques you can use to work around its limitations, one of which is that it can't take arbitrarily long to think about the request and generate output.