GPU Fingerprinting Can Be Used to Track You Online: Researchers

And we thought cookies were dangerous enough.

An international team of researchers from France, Israel and Australia has developed a new technique that can identify individual users by the unique signature of their graphics card. Named DrawnApart, the technique is a proof of concept that serves as a warning about more invasive identification measures that websites or ill-intentioned actors could use to collect data on individual users' online activities in real time.

The technique is based on the inherent variations between individual pieces of hardware that result from variability in manufacturing processes and components. Just as no two human fingerprints are identical, no two CPUs, GPUs, or other consumer items are exactly identical either. This is part of the reason why overclocking headroom varies even between CPUs and GPUs of the same model, and it gave rise to the notion of "golden" samples. It also means that every graphics card has minute, individual variations in performance, power, and processing capabilities, which is what makes this kind of identification possible.

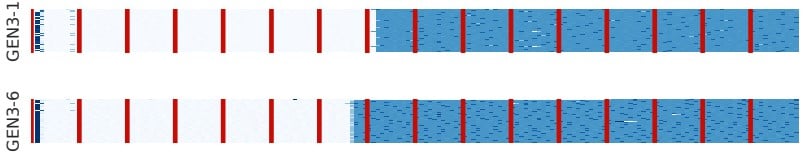

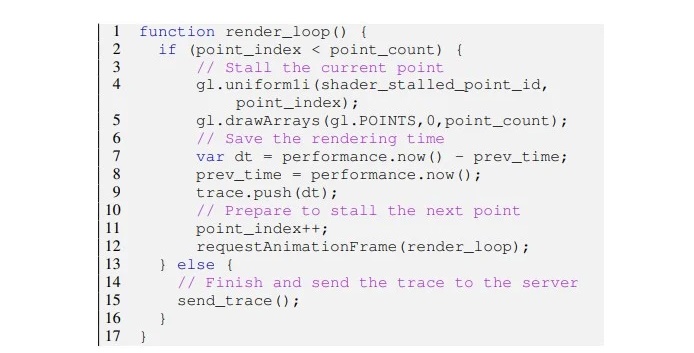

The model created by the researchers uses fixed workloads based on WebGL (Web Graphics Library), the cross-platform API that lets web pages use the graphics card to render graphics in the browser. Through it, DrawnApart takes over 176 measurements across 16 data collection points by running short vertex shader programs written in GLSL (OpenGL Shading Language), in a way that prevents the workloads from being distributed across random work units. This makes the results repeatable and, as such, individual to each GPU. DrawnApart then measures the time needed to complete vertex renders, stall functions, and other graphics-specific workloads.
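
To make the idea concrete, here is a toy Python simulation of timing-based fingerprinting. It is not the researchers' code: real DrawnApart runs GLSL shaders through WebGL and times them in the browser, whereas here `SimulatedGPU`, `fingerprint` and `identify` are hypothetical names, the 2% manufacturing spread and the noise level are made-up parameters, and matching uses plain nearest-neighbor distance, which is not necessarily the classifier the paper uses. Only the 16 collection points come from the article.

```python
import random
import statistics

NUM_POINTS = 16  # the article mentions 16 data collection points
REPEATS = 11     # hypothetical number of repeated runs per point


class SimulatedGPU:
    """Hypothetical stand-in for a real GPU. Each collection point gets a small,
    fixed speed offset (standing in for manufacturing variation), and every
    run adds fresh noise (standing in for background load and jitter)."""

    def __init__(self, seed):
        chip = random.Random(seed)
        self.offsets = [chip.gauss(0.0, 0.02) for _ in range(NUM_POINTS)]
        self.noise = random.Random(seed + 1000)

    def time_workload(self, point):
        nominal = 1.0  # nominal run time of the fixed workload, arbitrary units
        return nominal * (1.0 + self.offsets[point]) + self.noise.gauss(0.0, 0.005)


def fingerprint(gpu):
    """Median time per collection point, divided by the overall mean so that a
    uniformly faster or slower device keeps the same trace shape."""
    medians = [statistics.median(gpu.time_workload(p) for _ in range(REPEATS))
               for p in range(NUM_POINTS)]
    mean = statistics.fmean(medians)
    return [m / mean for m in medians]


def distance(a, b):
    """Euclidean distance between two traces."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def identify(trace, enrolled):
    """Return the label of the enrolled fingerprint closest to the new trace."""
    return min(enrolled, key=lambda label: distance(trace, enrolled[label]))


# Five "identical" GPUs, fingerprinted once, then one is re-measured later.
gpus = {f"gpu{i}": SimulatedGPU(i) for i in range(5)}
enrolled = {name: fingerprint(gpu) for name, gpu in gpus.items()}
print(identify(fingerprint(gpus["gpu3"]), enrolled))
```

Taking the median of repeated runs at each point is one simple way to damp occasional slowdowns from other GPU work, and dividing by the mean keeps a device's trace shape even if its overall clock speed shifts.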

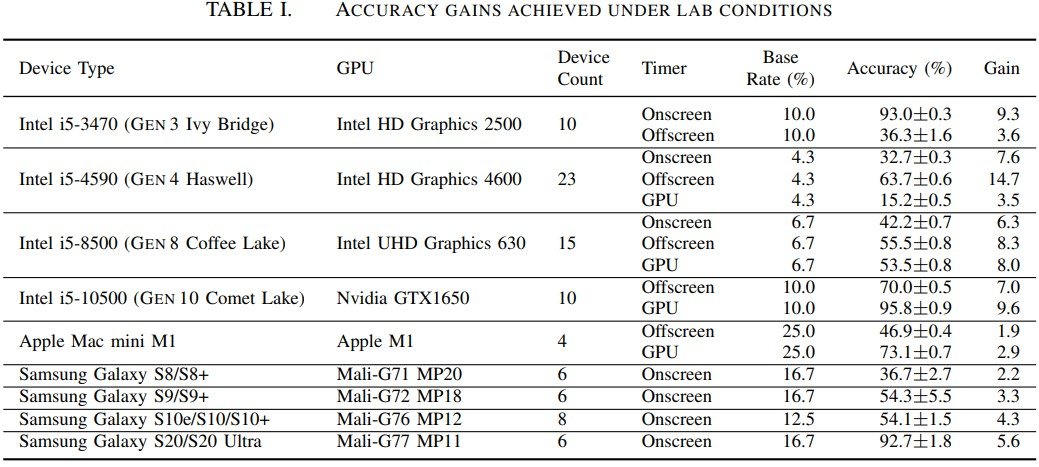

According to the research team, this is the first study to explore semiconductor manufacturing variation in a privacy context. The researchers say that "on the practical front, it demonstrates a robust technique for distinguishing between machines with identical hardware and software configurations", adding that it can boost "the median tracking duration to 67 percent compared to current state-of-the-art [online fingerprinting] methods."

The paper further details that the current implementation can fingerprint a GPU in just eight seconds, but warns that next-generation web APIs now in development could allow even faster and more accurate fingerprinting. WebGPU, for instance, will support running compute shader operations in the browser. The researchers tested a compute shader version of their DrawnApart identification process and found that it not only increased accuracy to 98%, but also cut identification time from 8 seconds with vertex shaders to just 150 milliseconds. Potentially, this could mean that merely misclicking on a website could be enough for a consumer's GPU to be uniquely identified, with all the risks that entails for personal privacy and cybersecurity. Additionally, existing legislation and protections against online tracking are mostly ineffective at protecting users from this particular technique.

Khronos, the non-profit organization responsible for developing the WebGL standard, has already formed a technical group that is exploring ways to mitigate the technique. In their paper, the researchers already outline some potential countermeasures (including preventing parallel execution, changing attribute values, blocking scripts, blocking the API, and preventing time measurement), which the organization will likely explore as it attempts to curb this potential assault on online users' privacy.
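
The time-measurement countermeasure can be illustrated with a hypothetical coarse clock, in the spirit of how browsers already reduce the precision of `performance.now()`. In this Python sketch, the 100-microsecond tick and the names `coarse_clock`, `measure` and `averaged` are assumptions, not anything Khronos or the researchers have specified. The clock adds random jitter and rounds down to a whole tick, so a single reading cannot resolve a 5-microsecond difference between two GPUs. Because the jitter makes the rounding unbiased on average, though, an attacker who repeats the workload thousands of times can still recover that difference.

```python
import random
import statistics

# Hypothetical coarse timer resolution in microseconds (an assumption, not a
# value proposed by Khronos or the researchers).
RESOLUTION_US = 100.0


def coarse_clock(true_time_us, rng):
    """Hypothetical mitigation: add random jitter of up to one tick, then round
    down to a whole tick. Averaged over many calls, the reported time equals
    the true time, because the jitter makes the rounding unbiased."""
    jittered = true_time_us + rng.uniform(0.0, RESOLUTION_US)
    return (jittered // RESOLUTION_US) * RESOLUTION_US


def measure(duration_us, rng):
    """Time a workload of known true duration through the coarse clock."""
    start = rng.uniform(0.0, 10_000.0)  # arbitrary true start time
    return coarse_clock(start + duration_us, rng) - coarse_clock(start, rng)


def averaged(duration_us, runs, rng):
    """What a patient attacker does: repeat the workload and average."""
    return statistics.fmean(measure(duration_us, rng) for _ in range(runs))


rng = random.Random(42)
# Two GPUs whose workload takes 1000 vs 1005 microseconds.
# Single readings only ever come out as whole multiples of the 100 us tick.
print(measure(1000.0, rng), measure(1005.0, rng))
# Averaged over many runs, the readings drift back toward 1000 and 1005.
print(averaged(1000.0, 20_000, rng), averaged(1005.0, 20_000, rng))
```

In this toy model, coarsening forces an attacker to take many more measurements rather than ruling fingerprinting out, which fits with the researchers proposing several complementary countermeasures rather than a single fix.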


Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.


digitalgriffin

WebAPI calls for things like the number of monitors, resolution, OS version, browser version, patches, plugins, supported features, security monikers and installed fonts are a lot more effective, and harder to bypass unless you install a dummy clean browser designed to avert such attacks.

warezme

I would think the analyzer would need various runs of consistent data to build a "model" of your hardware that denotes you as that individual. Randomly accessing a spiked page wouldn't necessarily build a database of your hardware. Also, in theory you could script your hardware to randomize GPU, memory and frequency boost levels so that it would never give exactly the same results for the same image processing, or whatever it's doing. There is also an option to disable hardware acceleration in the browser so the GPU isn't used.

InvalidError

> warezme said: I would think the analyzer would need various runs of consistent data to build a "model" of your hardware that denotes you as that individual. Randomly accessing a spiked page wouldn't necessarily build a database of your hardware.

The technique doesn't need a "database of your hardware", only the performance characteristics of a single shader program running on the GPU for ~150ms.

Given how small the variances being measured might be, I'd be surprised if the accuracy didn't get thrown off by people having a bunch of background processes also using the GPU.

digitalgriffin

> InvalidError said: The technique doesn't need a "database of your hardware", only the performance characteristics of a single shader program running on the GPU for ~150ms.
>
> Given how small the variances being measured might be, I'd be surprised if the accuracy didn't get thrown off by people having a bunch of background processes also using the GPU.

Very astute. I was wondering the same thing, especially if you are running low-end hardware like a Vega 11/8 APU.

samopa

> "Khronos, the non-profit organization responsible for the development of the WebGL library, has already formed a technical group which is currently exploring solutions to mitigate the technique."

All they need to do is introduce an option called "incognito mode" which, when selected, introduces a random delay into every defined function before it finishes its task.

Granted, performance will suffer a little, but I think it's a worthwhile trade-off.

rluker5

Intel iGPUs are set to run well below their limits. For example, the iGPU on my 12700K can be overclocked from 1500 MHz to 2000 MHz on stock volts. If most are like this, almost all will have little trouble running websites pegged at 100% performance for the task with no significant variation.

InvalidError

> rluker5 said: Intel iGPUs are set to run well below their limits. For example, the iGPU on my 12700K can be overclocked from 1500 MHz to 2000 MHz on stock volts. If most are like this, almost all will have little trouble running websites pegged at 100% performance for the task with no significant variation.

It may not look like a "significant variation" in GPU benchmarks, but it can still be a statistically significant signature that is relatively unique.

For example, every reference clock crystal has a slightly different frequency, since it is nearly impossible to create atomically identical crystals, and what few atomic twins may exist are unlikely to operate under identical environments. As long as you have a sufficiently accurate time base to measure the crystals' frequency and jitter with the necessary precision, you could hypothetically uniquely identify every one of them.

samopa

> InvalidError said: It may not look like a "significant variation" in GPU benchmarks, but it can still be a statistically significant signature that is relatively unique.
>
> For example, every reference clock crystal has a slightly different frequency, since it is nearly impossible to create atomically identical crystals, and what few atomic twins may exist are unlikely to operate under identical environments. As long as you have a sufficiently accurate time base to measure the crystals' frequency and jitter with the necessary precision, you could hypothetically uniquely identify every one of them.

That's why introducing a random delay for every function call is the best way to avoid this kind of fingerprinting technique.

InvalidError

> samopa said: That's why introducing a random delay for every function call is the best way to avoid this kind of fingerprinting technique.

In most programming, there is a nearly infinite number of ways to achieve any given goal, especially when performance is non-critical. Noise from attempts to fudge function call timings and performance timers can possibly be averaged out over multiple iterations. I have no doubt there are many other potential timing side channels besides the obvious ones.

rluker5

> InvalidError said: It may not look like a "significant variation" in GPU benchmarks, but it can still be a statistically significant signature that is relatively unique.
>
> For example, every reference clock crystal has a slightly different frequency, since it is nearly impossible to create atomically identical crystals, and what few atomic twins may exist are unlikely to operate under identical environments. As long as you have a sufficiently accurate time base to measure the crystals' frequency and jitter with the necessary precision, you could hypothetically uniquely identify every one of them.

After reading the source research, it seems you are right. I was hoping the browser Meltdown or Spectre mitigations would help, but apparently not. And using GPU passthrough and other trickery (like using a dGPU through an iGPU with CMAA post-processing applied, or using one dGPU as the output for a different dGPU) that can sometimes fool benchmarking software would just make you stand out more to this, since it would produce a very unique identifying response.

Meh, maybe I'll just get an eGPU adapter setup and plug in some old GPU when I want temporary anonymity from this. Let the tracker see another "never before seen" signature for a short while.