YouTuber Upgrades RTX 2070 to 16GB of VRAM

The 16GB RTX 2070/2080 rumors may have been real.

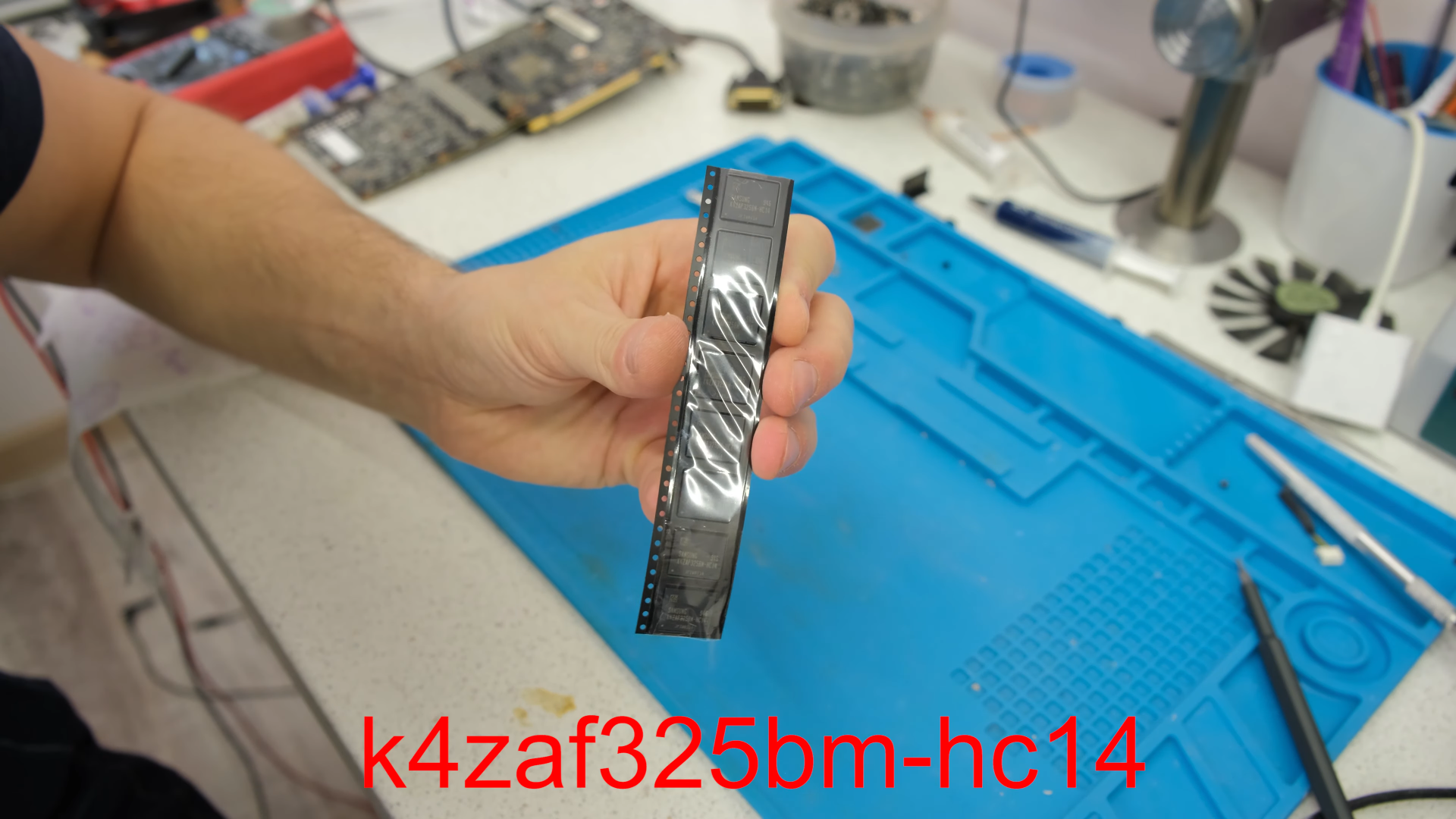

Wouldn't it be cool to upgrade your graphics card with more VRAM? While the average person might not do it, YouTuber VIK-on published a video showcasing his journey to upgrading a Palit-branded GeForce RTX 2070 from 8GB of Micron GDDR6 to 16GB of Samsung GDDR6 memory.

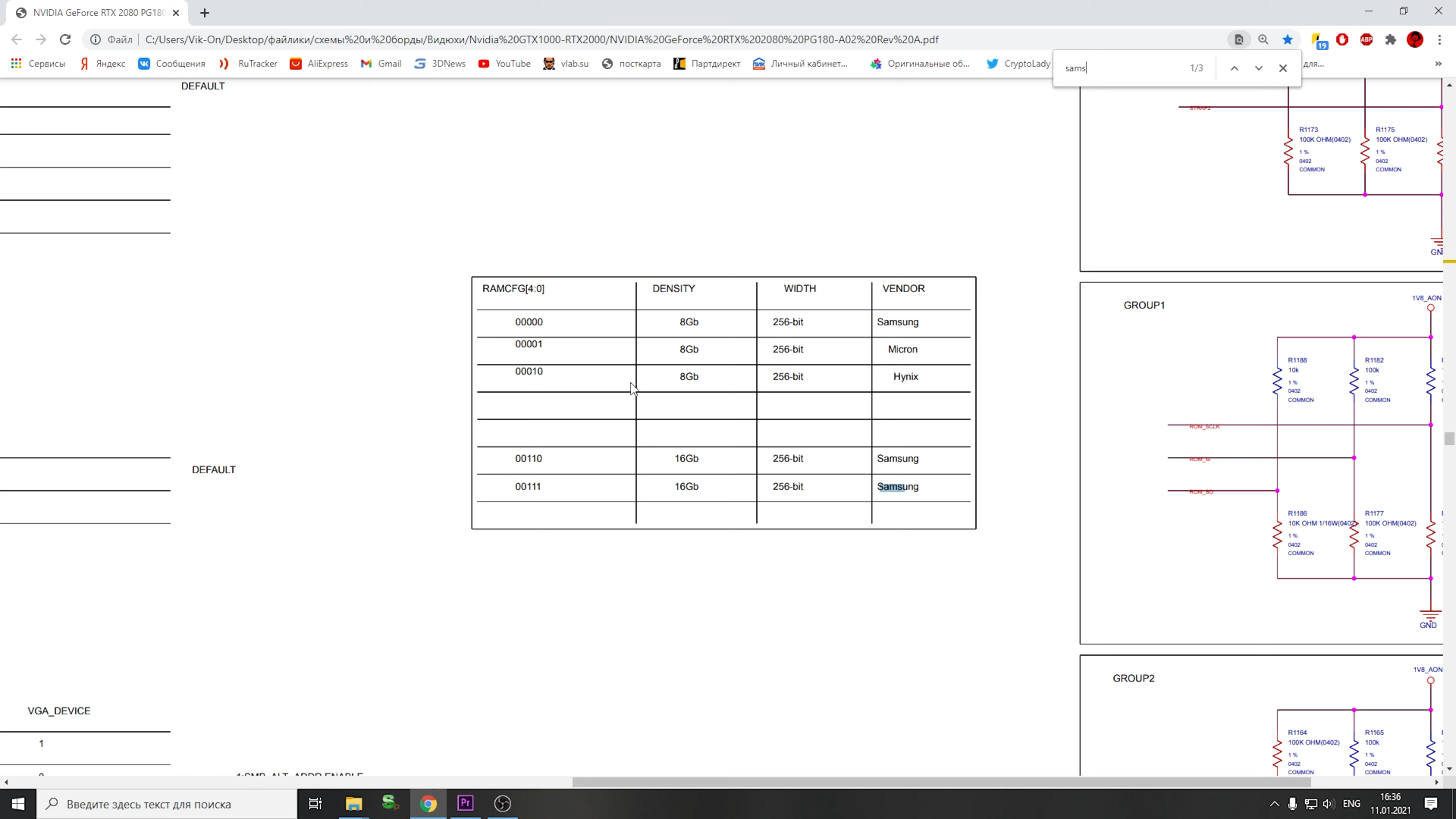

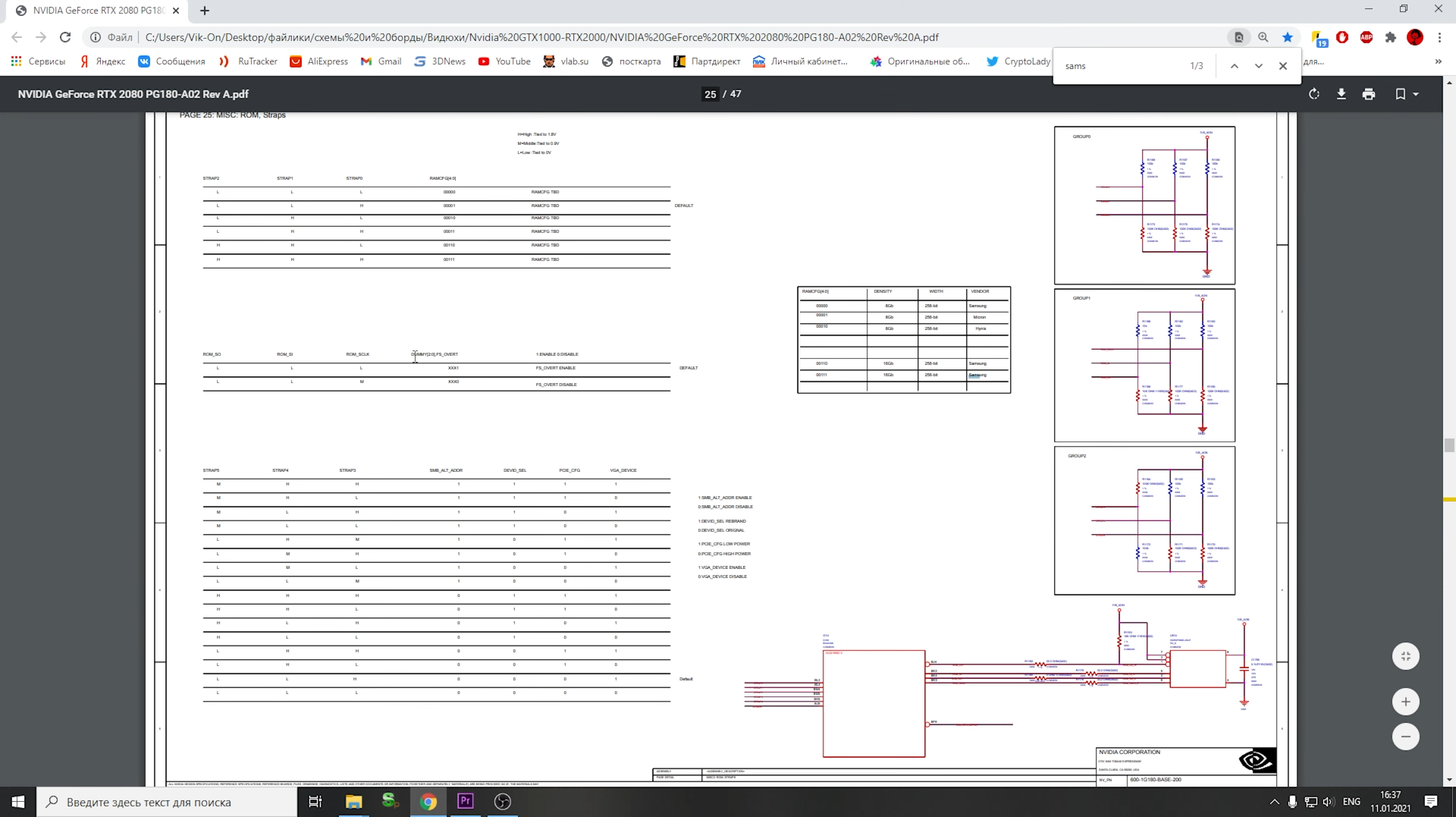

VIK-on first got the idea of upgrading the RTX 2070 to 16GB when he was sent a leaked diagram for the RTX 2070 with a 16GB VRAM option. The leaked diagram shows that the RTX 2070 can support both 1GB and 2GB GDDR6 chips from Samsung (the 2GB chips are what bring total VRAM capacity to 16GB).

Remember those leaks on a potential 16GB RTX 2070 and RTX 2080 a few years ago? Well, this leaked diagram, if real, suggests that Nvidia was at least working on the idea behind closed doors.

To start the upgrade process, VIK-on used a heat gun to detach the Micron GDDR6 chips from the RTX 2070. Next, he moved some resistors on the back of the PCB (behind the GDDR6 chips), which makes the board hardware-compatible with the Samsung chips. Finally, he took eight 2GB Samsung GDDR6 chips and installed them on the board. He purchased the Samsung chips from aliexpress.com for 15,000 rubles, or about $202.
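The capacity arithmetic behind the swap can be sketched in a few lines of Python. This is only an illustration: the function and variable names are made up for the example, and it assumes that the quoted $202 covers all eight chips, which the video summary does not state explicitly.

```python
# Illustrative sketch only; names are hypothetical and not from the video.

def total_vram_gb(chip_count: int, chip_capacity_gb: int) -> int:
    """Total VRAM is the number of memory chips times the capacity of each chip."""
    return chip_count * chip_capacity_gb

CHIP_COUNT = 8  # the article mentions eight Samsung chips on the board

stock_gb = total_vram_gb(CHIP_COUNT, 1)     # eight 1GB chips (stock card)
upgraded_gb = total_vram_gb(CHIP_COUNT, 2)  # eight 2GB chips (modded card)

# Assumption: the $202 figure is the total for all eight chips.
TOTAL_COST_USD = 202
cost_per_chip = TOTAL_COST_USD / CHIP_COUNT
cost_per_gb = TOTAL_COST_USD / upgraded_gb

print(f"Stock: {stock_gb}GB, upgraded: {upgraded_gb}GB")
print(f"Roughly ${cost_per_chip:.2f} per chip, ${cost_per_gb:.2f} per GB")
```

Because the chip count stays the same, doubling the capacity of each chip is what doubles the total from 8GB to 16GB.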

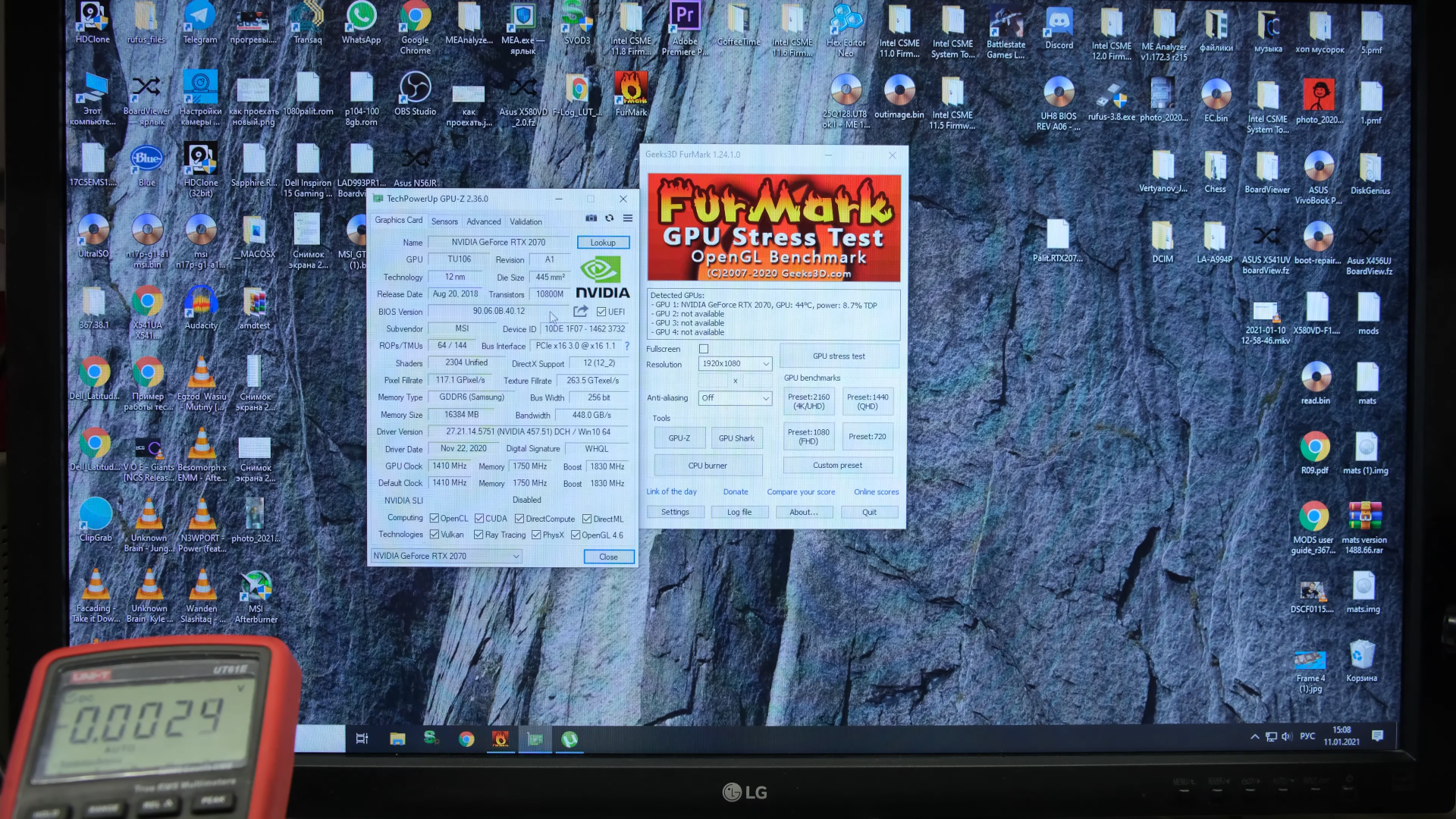

Next, VIK-on was able to boot the system, and the RTX 2070 POSTed perfectly with the Samsung chips installed. In the GPU-Z photo, you can clearly see all 16GB of the Samsung VRAM registered by the RTX 2070. Unfortunately, though, the card was not stable under full load; it exhibited strange clock speed behavior, which ended with the card crashing and displaying a black screen. This could be due to a poor soldering job or incompatibilities between the Samsung chips and the firmware.

Either way, it was cool to see that upgrading an RTX 2070 to 16GB does actually work. Hopefully one day we'll see an RTX 2070 that is fully functional with 16GB of GDDR6, so we can find out whether adding another 8GB of VRAM would have been worth it for Nvidia.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


bigdragon: This is awesome. I wonder why they went with Samsung memory instead of sticking with Micron? Maybe using the same manufacturer would have helped stability. Regardless, seeing efforts to upgrade Nvidia's absolutely anemic VRAM allotments is inspiring.

TechyInAZ (quoting bigdragon): "This is awesome. I wonder why they went with Samsung memory instead of sticking with Micron? Maybe using the same manufacturer would have helped stability. Regardless, seeing efforts to upgrade Nvidia's absolutely anemic VRAM allotments is inspiring."

Because the only 2GB ICs on the compatibility list were Samsung. Nvidia never fully developed support for 2GB ICs, so it makes sense that they only used one model.

gggplaya: I bought a 3060ti, and had to turn down some settings to keep RAM usage under 8GB. This card really needs like 12GB of RAM, but I would have liked 16GB. Nvidia needs to at least offer the option for higher RAM models. The whole "RAM is well suited to the capabilities of the GPU" argument does not work in my situation. If I could have gotten a 6800xt, I would have bought that just for the 16GB of VRAM. But I had to go with whatever I could get this year.

SethNW: Yeah, development of pretty much anything is a bit like an iceberg: there is usually a huge amount of things they wanted to try or were testing that we never see. While the reasons why they were dropped can vary, we only see the top of the iceberg. The rest we only hear about in rumors.

And yeah, there probably were some leftovers from them using 16GB. But neither the BIOS nor, potentially, the silicon quality was tested or binned for it. So yeah, he would have to get very lucky for it to be stable. Still, interesting to see. Kind of makes you wonder what it would be like if VRAM was user upgradable. I get why it is not, but still.

Sleepy_Hollowed (quoting gggplaya): "I bought a 3060ti, and had to turn down some settings to keep RAM usage under 8GB. This card really needs like 12GB of RAM, but I would have liked 16GB. Nvidia needs to at least offer the option for higher RAM models. The whole "RAM is well suited to the capabilities of the GPU" argument does not work in my situation. If I could have gotten a 6800xt, I would have bought that just for the 16GB of VRAM. But I had to go with whatever I could get this year."

This is absolutely true, and although RTX seems to be sticking around, in the past, proprietary stuff from them like PhysX that got phased out has ultimately made it pointless to buy their cards for features like that instead of better basics like RAM.

If I can find an AMD card instead of an NVIDIA for my next card I will be doing so due to the RAM 100%, because at the bare minimum you can hike texture quality to 4K (or 8K if they do release that) on older games and not be afraid to run out of RAM with basic settings, or just repurpose an older card as a compute card.

demonicus: This is unreal, he should be contacting Nvidia... game changer... Making video cards that have upgradeable RAM slots that we can just slip memory into would be ideal. That's genius; then we could upgrade both RAM and video card RAM, which would be epic.

NP (quoting Sleepy_Hollowed): "This is absolutely true, and although RTX seems to be sticking around, in the past, proprietary stuff from them like PhysX that got phased out has ultimately made it pointless to buy their cards for features like that instead of better basics like RAM.

"If I can find an AMD card instead of an NVIDIA for my next card I will be doing so due to the RAM 100%, because at the bare minimum you can hike texture quality to 4K (or 8K if they do release that) on older games and not be afraid to run out of RAM with basic settings, or just repurpose an older card as a compute card."

The 2070, 3060 Ti, or 3070 for that matter are not for 4K gaming.

gggplaya (quoting NP): "The 2070, 3060 Ti, or 3070 for that matter are not for 4K gaming."

I have the 3060ti and am gaming at 1440p. In several games I can push it over the 8GB limit and have to turn down some graphics settings, and it's still over 100fps. 8GB is not enough for this card. In some games, especially single-player games, I'd rather have around 60fps but with better quality graphics, but that will put me over 8GB.

NP (quoting gggplaya): "I have the 3060ti and am gaming at 1440p. In several games I can push it over the 8GB limit and have to turn down some graphics settings, and it's still over 100fps. 8GB is not enough for this card. In some games, especially single-player games, I'd rather have around 60fps but with better quality graphics, but that will put me over 8GB."

I fully agree. 10GB or 12GB would be ideal, just for 1440p. But the arguments about how taxing 4K is VRAM-wise do not belong in this discussion, as these cards are not 4K cards. And the 8K discussion is just totally pointless and inane. For any card in the foreseeable future. If no one mentioned 8K gaming in public for a few years, everyone would be better off.