Full scan of 1 cubic millimeter of brain tissue took 1.4 petabytes of data, equivalent to 14,000 4K movies — Google's AI experts assist researchers

Mind-boggling mind research.

A recent attempt to fully map a mere cubic millimeter of a human brain took up 1.4 petabytes of storage just in pictures of the specimen. A collaborative effort between Harvard researchers and Google AI experts took the deepest dive yet into neural mapping with the recent full imaging and mapping of the brain sample, making puzzling discoveries and utilizing incredible technology. We did the back-of-napkin math on what ramping up this experiment to the entire brain would cost, and the scale is impossibly large — 1.6 zettabytes of storage costing $50 billion and spanning 140 acres, making it the largest data center on the planet.

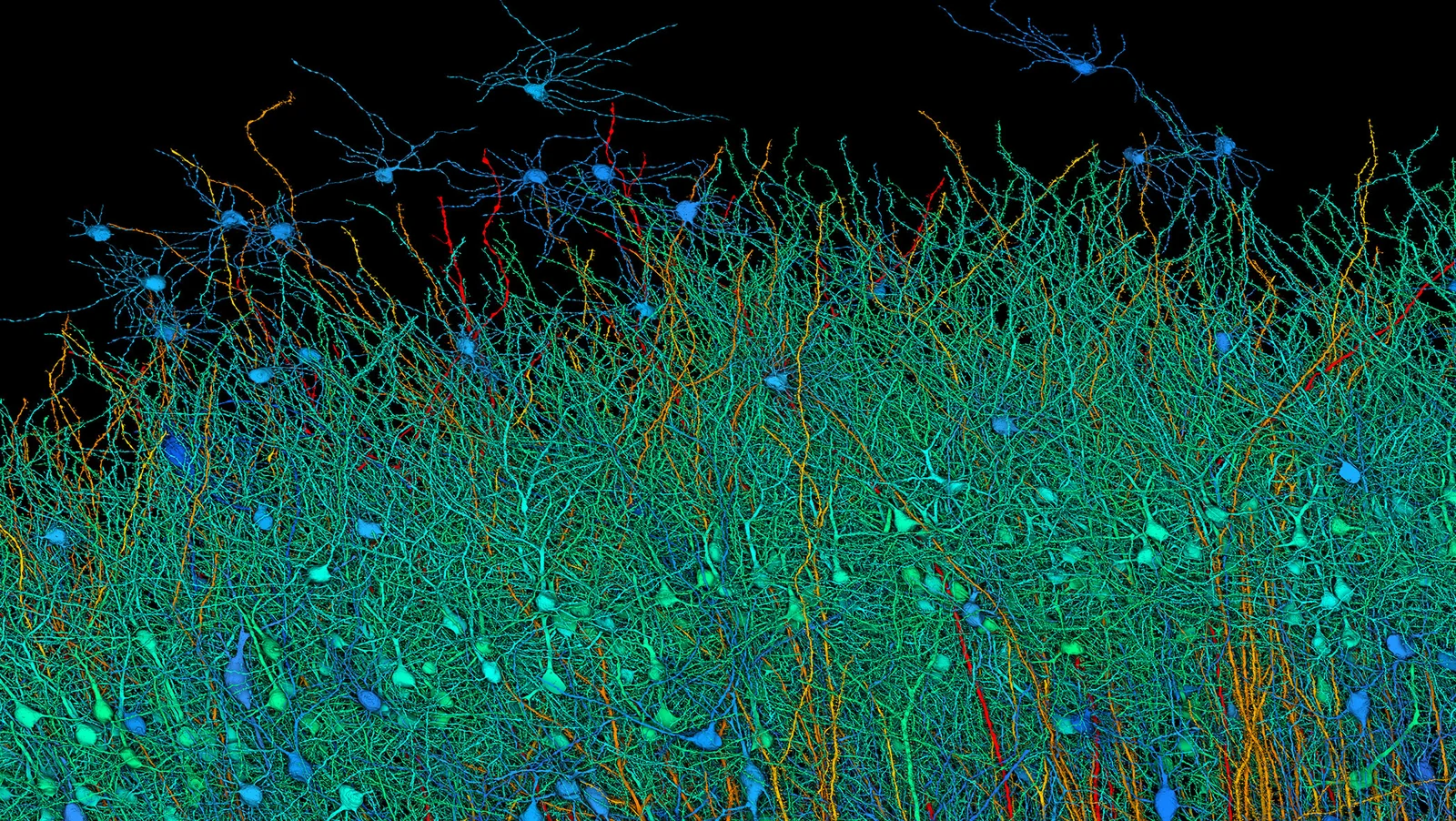

The study is full of mind-numbing stats. To image the sample, the researchers needed Google's machine learning tech, which shaved what is estimated to be years off the project. Scientists first sliced the sample into 5,000 wafers, each orders of magnitude thinner than a human hair. Electron microscope images were taken of each slice and then recombined, yielding a count of around 50,000 cells and 150 million synapses, the connection points where neurons meet and interact. Recombining these images and mapping the fibers and cells accurately required Google's AI imaging tech, which digitally worked out the routes of the gray matter.

The synthesized images revealed many exciting secrets about the brain that were previously unknown: some cell clusters grew in mirror images of one another, one neuron was found with 5,000+ connection points to other neurons, and some axons (the signal-carrying ends of nerve cells) had become tightly coiled into yarn-ball shapes for reasons nobody yet understands. These and other discoveries made in the course of the research excited the scientists involved. Jeff Lichtman, a Harvard professor, told The Guardian about the project, "We found many things in this dataset that are not in the textbooks. We don’t understand those things, but I can tell you they suggest there’s a chasm between what we already know and what we need to know."

For context on the size of the brain sample and the data collected from it, we need to get into mind-numbingly colossal numbers. The cubic millimeter of brain matter is only one-millionth of the size of an adult human brain, and yet the imaging scans and full map of its intricacies comprise 1.4 petabytes, or 1.4 million gigabytes. If someone were to use the Google/Harvard approach to map an entire human brain today, the scans would fill up 1.6 zettabytes of storage.
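The extrapolation above is plain proportional scaling, which can be sketched in a few lines of Python. The constants come from the article; the function and variable names are illustrative only. Note that strict one-millionth scaling lands at about 1.4 ZB, so the 1.6 ZB figure corresponds to a somewhat larger assumed brain volume, which the sketch back-computes rather than asserts.

```python
# Decimal (SI) storage units, in bytes.
PETABYTE = 10**15
ZETTABYTE = 10**21

SAMPLE_BYTES = 1.4 * PETABYTE     # from the article: data for one cubic millimeter
SAMPLE_FRACTION = 1 / 1_000_000   # from the article: sample is one-millionth of a brain


def whole_brain_bytes(sample_bytes: float, fraction: float) -> float:
    """Linearly extrapolate the sample's data volume to a whole brain."""
    return sample_bytes / fraction


# Strict one-millionth scaling of the 1.4 PB sample.
estimate_zb = whole_brain_bytes(SAMPLE_BYTES, SAMPLE_FRACTION) / ZETTABYTE
print(f"Strict one-millionth scaling: {estimate_zb:.2f} ZB")

# Brain volume (in cubic millimeters) implied by the article's 1.6 ZB figure,
# assuming the same 1.4 PB per cubic millimeter throughout.
implied_volume_mm3 = 1.6 * ZETTABYTE / SAMPLE_BYTES
print(f"Volume implied by 1.6 ZB: {implied_volume_mm3:,.0f} mm^3")
```

Both results assume the data volume per cubic millimeter stays constant across the brain, which the article does not claim.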

Taking these logistics further, storing 1.6 zettabytes on the cheapest consumer hard drives (assuming $0.03 per GB) would cost a cool $48 billion, and that's without any redundancy. The $48 billion price tag does not factor in the cost of server hardware to put the drives in, networking, cooling, power, and a roof to put over this prospective data center. The roof in question will also have to be massive; assuming full server racks holding 1.8 PB, the array of racks needed to store the full imaging of a human brain would cover over 140 acres if smushed together as tightly as possible. This footprint alone, without any infrastructure, would make Google the owner of one of the top 10 largest data centers in the world, even approaching (if not reaching) the scale of Microsoft and OpenAI's planned $100 billion AI data center.
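The back-of-napkin figures in this paragraph can be reproduced with a short Python sketch. The storage total, price per gigabyte, and rack capacity are the article's numbers; the rack floor footprint is an assumption introduced here (roughly 0.6 m by 1.07 m, with no aisles), since the article does not state the one it used.

```python
import math

# Decimal (SI) storage units, in bytes.
GIGABYTE = 10**9
PETABYTE = 10**15
ZETTABYTE = 10**21

TOTAL_BYTES = 1.6 * ZETTABYTE    # article's whole-brain estimate
PRICE_PER_GB = 0.03              # article's assumed consumer HDD price, USD
RACK_CAPACITY = 1.8 * PETABYTE   # article's assumed capacity of a full rack

# ASSUMPTION (not from the article): floor area of one rack, packed edge to edge.
RACK_FOOTPRINT_M2 = 0.6 * 1.07
M2_PER_ACRE = 4046.8564224

# Drives only: no redundancy, servers, networking, cooling, or power.
drive_cost_usd = TOTAL_BYTES / GIGABYTE * PRICE_PER_GB

# Whole racks needed, then the floor area they cover.
racks = math.ceil(TOTAL_BYTES / RACK_CAPACITY)
acres = racks * RACK_FOOTPRINT_M2 / M2_PER_ACRE

print(f"Drive cost: ${drive_cost_usd / 1e9:.0f} billion")
print(f"Racks needed: {racks:,}")
print(f"Floor area: {acres:.0f} acres")
```

Under these assumptions the sketch lands on about $48 billion, roughly 889,000 racks, and a little over 140 acres. The area is sensitive to the footprint assumption, so a different rack size or any aisle spacing would shift it.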

All of this is to say the human brain is an impossibly dense and very smart piece of art, and the act of mapping it would be both impossibly expensive (we didn't even begin to guess how long it would take) and likely foolish. Just because mapping is done does not mean that scientists would know what to do with the maps, as just the one-millionth piece of the brain we have mapped will pose questions for researchers for likely years to come. Thankfully, we apparently don't need to know everything about the brain to start meddling with it; in case you missed it, Elon Musk's Neuralink has begun rolling out to very early adopters. And if you want more on Google's efforts in the AI space, OpenAI is not playing very nice with them today.


Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.

-

bit_user

The article said: "A recent attempt to fully map a mere cubic millimeter of a human brain took up 1.4 petabytes of storage just in pictures of the specimen. ... All of this is to say the human brain is an impossibly dense and very smart piece of art, and the act of mapping it would be both impossibly expensive ..."

The key detail you seem to be overlooking is that the pictures are a very inefficient representation of the information content in those neurons and synapses.

You go on to make a flawed assumption that all of the pictures would need to be stored, but this is not so. I'm sure the idea is to form a far more direct representation of the brain's connectivity and other parameters. This could be done on the fly, eliminating the need ever to store all of those pictures.

vanadiel007

So if we keep peering in deeper and deeper, will we eventually find out if we live in a world, or in a simulation of a world?

JTWrenn

bit_user said: "The key detail you seem to be overlooking is that the pictures are a very inefficient representation of the information content in those neurons and synapses. You go on to make a flawed assumption that all of the pictures would need to be stored, but this is not so. I'm sure the idea is to form a far more direct representation of the brain's connectivity and other parameters. This could be done on the fly, eliminating the need ever to store all of those pictures."

There is no way they would not store all of the raw data for future analysis. They absolutely would need to be stored long term for future use and reference. No way they wouldn't do that. They could get away with a much less useful dataset by not doing it, but no way they would go through the entire process and throw out anything useful.

Edit: actually this is wrong to some degree, continued in a post below. Without the original data, it can't be checked.

Alvar "Miles" Udell

Size, of course, comes with an asterisk. What takes 1.4PB today took many times that not long ago, and will take many times less than that in the not very far future.

bit_user

JTWrenn said: "There is no way they would not store all of the raw data for future analysis."

They wouldn't if it's too costly. At the very least, you can bet they'd use some advanced compression techniques to exploit the characteristics of volumetric data and wouldn't merely store them as a bunch of PNG files.

JTWrenn said: "They absolutely would need to be stored long term for future use and reference. No way they wouldn't do that."

Also, I'd point out that we're debating what researchers might want to do, rather than the actual information density of those photographs, which was my original point and seems to lie at the heart of what the article was claiming.

gg83

bit_user said: "They wouldn't if it's too costly. At the very least, you can bet they'd use some advanced compression techniques to exploit the characteristics of volumetric data and wouldn't merely store them as a bunch of PNG files. Also, I'd point out that we're debating what researchers might want to do, rather than the actual information density of those photographs, which was my original point and seems to lie at the heart of what the article was claiming."

Right. There are always shortcuts that work "good enough". Just like compression algorithms removing all that nonsense.

ecophobia

Alvar Miles Udell said: "Size, of course, comes with an asterisk. What takes 1.4PB today took many times that not long ago, and will take many times less than that in the not very far future."

Totally agree that the numbers are off. Even today, commercially available HDDs can reach capacities of 20TB or more. Hyperscale providers like Google are not spending anywhere close to $0.03/GB. That price would make a 20TB HDD ~$600, when one can be found on Amazon for ~$225. They are getting a much better price at volume. At the Amazon price we are at $0.011/GB, which would cost about $18B, but we should expect at least a 40% discount at this scale, so the cost would be ~$10.8B.

Then there is the footprint. Let's assume that the hyper scalers can fit 500 HDDs in a standard 19" 42RU rack (no raid, just pure storage + compute management).

500 HDD * 20TB = 10PB for 19" of linear space, or 253k feet of linear space for a single copy (as the article suggests). Data center linear space is some of the most expensive real estate on the planet, but if nothing could be done to increase compression and/or density of HDDs, that is an enormous amount of space at a very low density compared to typical power consumption. This would most likely require specially designed data centers, built where land is very cheap, to make it cost effective.

But to your point, as storage density increases (the roadmap to 40TB isn't too long), the cost falls over time.
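The alternative estimate in this comment can be checked the same way. This Python sketch uses only the commenter's own inputs (20TB drives at ~$225, a 40% volume discount, 500 drives per 19-inch rack) together with the article's 1.6 ZB total; the variable names are illustrative.

```python
GB_PER_TB = 1_000
TOTAL_GB = 1.6e12            # article's 1.6 ZB estimate, expressed in GB

DRIVE_TB = 20                # commenter's drive capacity
DRIVE_PRICE_USD = 225        # commenter's quoted retail price
VOLUME_DISCOUNT = 0.40       # commenter's assumed discount at hyperscale volume
DRIVES_PER_RACK = 500        # commenter's assumed rack density
RACK_WIDTH_IN = 19           # standard rack width in inches

# Cost: retail price per GB, then the discounted total.
price_per_gb = DRIVE_PRICE_USD / (DRIVE_TB * GB_PER_TB)
retail_cost = TOTAL_GB * price_per_gb
discounted_cost = retail_cost * (1 - VOLUME_DISCOUNT)

# Footprint: racks placed side by side, measured as linear feet of rack width.
rack_capacity_gb = DRIVES_PER_RACK * DRIVE_TB * GB_PER_TB
racks = TOTAL_GB / rack_capacity_gb
linear_feet = racks * RACK_WIDTH_IN / 12

print(f"Price per GB: ${price_per_gb:.5f}")
print(f"Retail cost: ${retail_cost / 1e9:.1f}B")
print(f"Discounted cost: ${discounted_cost / 1e9:.1f}B")
print(f"Racks: {racks:,.0f}, linear feet: {linear_feet:,.0f}")
```

The unrounded price per GB is $0.01125, which is why the total comes to $18B rather than the $17.6B that a rounded $0.011 would give.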


vijosef

- HDD is not the cheapest storage available.

- Mapping the hardware doesn't say much about the software.

vijosef

vanadiel007 said: "So if we keep peering in deeper and deeper, will we eventually find out if we live in a world, or in a simulation of a world?"

Your argument belongs to a religion called Gnosticism.

If we were living in a simulation, there would be a long Inception-style chain of simulated worlds, one inside another, but we would have to be either the first one (where all the others are simulated) or the last one (simulated inside all the others), since we cannot yet simulate sentient worlds.

Hence the probability of being simulated is practically zero.