Audience MQ100 And eS700 Series Sensor Coprocessors

Audience introduces two new additions to its sensor processor family: the MQ100 Motion Processor and the earSmart eS700 series. Using its proprietary CASA technology, which models human hearing, the company aims to improve sensing and environmental awareness.

Audience is a relative newcomer in many ways, and, as the name implies, its focus is audio processing technology. Founded in 2000, the company took around a decade to make significant inroads in the mobile space. By the time it went public in 2012, it had partnered with a variety of first- and second-tier hardware and software vendors, including Acer, Google, Huawei, Intel, Lenovo, LG, Meizu, Microsoft, Motorola, Samsung, Sony, and Xiaomi, among many others. More recently, Audience’s technology was notably used in Samsung’s Galaxy S4 and Note 3 handsets as part of the S-Voice ASR and Samsung Life suite, a key part of Samsung’s “human touch” experience.

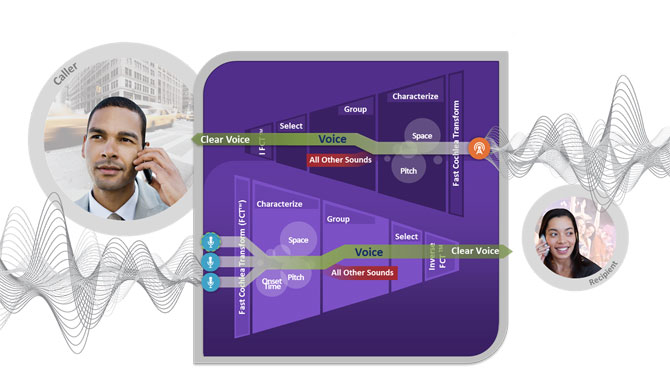

The company’s proprietary earSmart technology models human hearing to improve accuracy and sensor-driven application intelligence. The science behind it rests on ASA, or Auditory Scene Analysis, a term coined by psychologist Albert Bregman to describe the principles by which the human auditory system organizes sound. ASA helps explain the “cocktail party effect”: the way we can single out one conversation from the background noise at a party by grouping the jumble of incoming sound into perceptually meaningful streams. Audience uses a software model that simulates this effect, drawing on “Computational Auditory Scene Analysis” (CASA), the field of study that attempts to recreate sound source separation technologically in the same manner as human hearing.
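To make the idea of sound source separation concrete, here is a minimal Python sketch of the ideal binary mask, a common textbook formulation of CASA’s goal rather than anything Audience has disclosed about earSmart. The sound is divided into time-frequency units; a unit is kept when the target voice is stronger than the background there and discarded otherwise. The function names and toy power values are hypothetical, and the sketch assumes the speech and noise powers are known, whereas a real system has to estimate them.

```python
def ideal_binary_mask(speech_power, noise_power, lc_db=0.0):
    """Return 1 for each time-frequency unit where speech beats noise by more than lc_db."""
    threshold = 10 ** (lc_db / 10)  # local criterion converted from dB to a power ratio
    return [
        [1 if s > n * threshold else 0 for s, n in zip(s_row, n_row)]
        for s_row, n_row in zip(speech_power, noise_power)
    ]


def apply_mask(mixture, mask):
    """Zero out the time-frequency units the mask attributes to background noise."""
    return [[m * x for m, x in zip(m_row, x_row)] for m_row, x_row in zip(mask, mixture)]


# Toy 2x2 "spectrogram": rows are time frames, columns are frequency bands.
speech = [[4.0, 0.1], [1.0, 2.0]]
noise = [[1.0, 1.0], [1.0, 1.0]]
mixture = [[5.0, 1.1], [2.0, 3.0]]
mask = ideal_binary_mask(speech, noise)
print(mask)  # [[1, 0], [0, 1]]
print(apply_mask(mixture, mask))  # [[5.0, 0.0], [0.0, 3.0]]
```

Keeping or discarding whole units, rather than attenuating them gradually, is the simplest version of the idea; practical systems often use soft masks instead.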

One of the key aspects of earSmart is improving microphone quality and sensitivity in noisy environments, much as we can home in on a single voice despite loud distractions. As a result, call quality and speech recognition also improve, two aspects Samsung used to good effect in the aforementioned devices. Indeed, at the S4 launch Samsung went to great lengths to explain the “human touch” as the phone reacted to natural language and gestures. Audience’s CASA modelling was at the heart of that experience.

Always On & User Differentiation Via VoiceQ

The eS700 series extends this functionality with Always On technology via the proprietary VoiceQ system, which offers an experience similar to the Always Listening approach of Motorola’s Moto X. The name VoiceQ is a pun on “IQ” and “queue”: the feature applies intelligent data analysis and also queues, or buffers, audio before waking the Application Processor (CPU core), so as not to drain the battery unnecessarily.
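The buffering half of that pun can be sketched in a few lines of Python. This is a hypothetical illustration, not Audience’s firmware: `is_keyword` and `wake_ap` are placeholder callables standing in for the low-power keyword detector and the hook that wakes the Application Processor. A fixed-size ring buffer keeps the most recent audio, so when a keyword is finally detected the AP receives the speech that led up to it instead of missing the start of the command.

```python
from collections import deque


class PreRollBuffer:
    """Fixed-size ring buffer holding the most recent audio frames."""

    def __init__(self, max_frames):
        # deque with maxlen silently discards the oldest frame once full
        self.frames = deque(maxlen=max_frames)

    def push(self, frame):
        self.frames.append(frame)

    def drain(self):
        """Hand over everything buffered so far and start empty again."""
        out = list(self.frames)
        self.frames.clear()
        return out


def run_always_on(frames, is_keyword, wake_ap, max_frames=50):
    """Buffer every frame; only call wake_ap (hypothetical AP hook) on a keyword hit."""
    buf = PreRollBuffer(max_frames)
    wakes = 0
    for frame in frames:
        buf.push(frame)
        if is_keyword(frame):
            wake_ap(buf.drain())  # the AP receives the audio leading up to the keyword
            wakes += 1
    return wakes


# Usage: integers stand in for audio frames; frame 7 plays the role of the keyword.
received = []
n_wakes = run_always_on(range(10), lambda f: f == 7, received.append, max_frames=3)
print(n_wakes, received)  # 1 [[5, 6, 7]]
```

Everything outside the `if` branch is the kind of cheap work a coprocessor can do on its own; the expensive AP is touched only once, when the keyword arrives.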

In Audience’s example, VoiceQ easily identifies two users independently of one another. Coupled with Android’s multi-user support (introduced in version 4.2), this could let a single device be shared by more than one person without cumbersome logins. The device identifies each user by their voiceprint, all without waking the system until absolutely necessary, thus saving battery.
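Audience has not detailed how VoiceQ compares voiceprints. One common generic approach is to reduce each utterance to a fixed-length feature vector and match it against enrolled templates by cosine similarity, rejecting the match if no template is close enough. The Python sketch below assumes those vectors already exist; the 3-element voiceprints, the 0.8 threshold, and the user names are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify_speaker(embedding, enrolled, threshold=0.8):
    """Return the best-matching enrolled user, or None if nobody is close enough."""
    best_name, best_score = None, -1.0
    for name, template in enrolled.items():
        score = cosine_similarity(embedding, template)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Hypothetical enrolled voiceprints for two users of one shared tablet.
enrolled = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.5]}
print(identify_speaker([0.85, 0.15, 0.25], enrolled))  # alice
print(identify_speaker([0.0, 0.0, 1.0], enrolled))  # None
```

Returning `None` for an unknown voice matters as much as picking the right user: it is what would let a device stay asleep, or stay locked, for a stranger.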

According to Audience, CASA modelling is what makes this work, and it can also help prevent wasted CPU power.

Minimizing Battery Usage Via False Alarm Reduction

One issue that generally plagues always-on sensor recognition systems is increased battery drain. Just ask any Nexus 5 user who has tried the Sweep2Wake or Knock2Wake kernel mods: the touchscreen sensor is always active, so the battery drains continually while waiting for input. By the same token, a voice recognition system that is always listening for keywords could just as easily drain the battery, particularly when non-keywords are misinterpreted and wake the system unnecessarily. Each false wake may last only seconds, but these errors add up to tens of minutes of wasted awake time, and over a full day of use that can cost hours of battery life.
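The claim is easy to sanity-check with back-of-the-envelope arithmetic. The Python sketch below uses made-up but plausible inputs, none of them from Audience: 30 false wakes per hour, 5 seconds awake per wake, 16 hours of use, 300 mW of extra draw while the Application Processor is awake, and 20 mW for an idle phone in standby.

```python
def false_wake_cost(wakes_per_hour, seconds_per_wake, hours, extra_awake_mw, standby_mw):
    """Estimate the daily cost of false wakes (all inputs are assumptions)."""
    awake_seconds = wakes_per_hour * seconds_per_wake * hours
    energy_mwh = extra_awake_mw * awake_seconds / 3600  # mW * s -> mWh
    standby_hours_lost = energy_mwh / standby_mw  # how long that energy would last in standby
    return awake_seconds / 60, energy_mwh, standby_hours_lost


minutes, mwh, hours_lost = false_wake_cost(
    wakes_per_hour=30, seconds_per_wake=5, hours=16, extra_awake_mw=300, standby_mw=20
)
print(minutes, mwh, hours_lost)  # 40.0 200.0 10.0
```

Under these assumptions, 40 minutes of unnecessary awake time burns about 200 mWh, roughly what the phone would otherwise spend in 10 hours of standby. Different inputs will give different answers, but the shape of the result (minutes of awake time turning into hours of standby) holds because an awake processor draws far more than an idle phone.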


Audience claims that VoiceQ avoids this through False Alarm Reduction: incoming words are buffered, and unless a properly vetted word or phrase is recognized, the device is not woken, once again saving battery life.
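Audience does not describe how False Alarm Reduction decides that a phrase is properly vetted. As an assumed, simplified stand-in, the Python sketch below confirms a wake only when a hypothetical per-frame keyword score stays at or above a threshold for several consecutive frames, so a single ambiguous word cannot wake the device. The 0.7 threshold and the three-frame requirement are illustrative values.

```python
def confirmed_wake(scores, threshold=0.7, required_frames=3):
    """Index of the frame that confirms a wake, or None if the keyword is never vetted.

    A wake needs `required_frames` consecutive per-frame keyword scores at or above
    `threshold`, so isolated spikes from non-keywords never wake the processor.
    """
    run = 0
    for i, score in enumerate(scores):
        run = run + 1 if score >= threshold else 0  # any weak frame restarts the count
        if run >= required_frames:
            return i
    return None


print(confirmed_wake([0.9, 0.2, 0.8, 0.9, 0.1]))  # None: spikes never last 3 frames
print(confirmed_wake([0.1, 0.8, 0.75, 0.9, 0.2]))  # 3
```

Raising `required_frames` trades a slightly slower response for fewer false wakes, which, given the arithmetic of wasted awake time, is usually the cheaper side of the trade.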

MQ100 Motion Processor

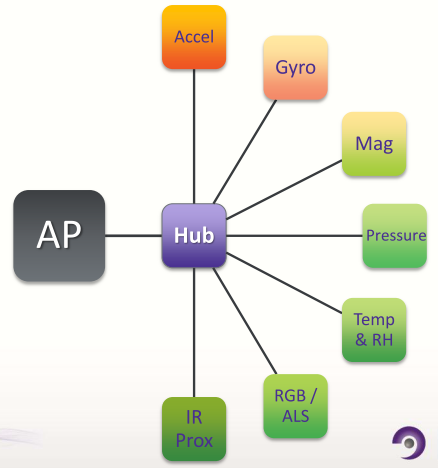

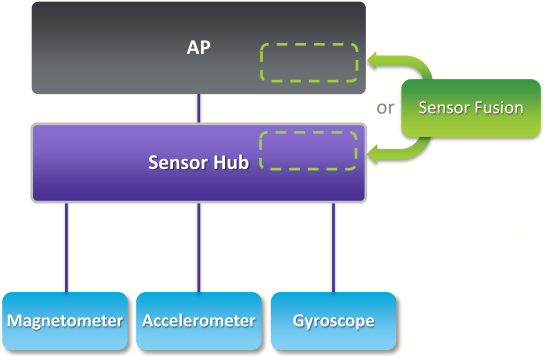

Beyond VoiceQ’s Always On feature, the MQ100 Motion Processor adds a layer of intelligence to the earSmart sensor array (what Audience dubs the Sensor Hub) through Sensor Fusion: a software layer that blends sensor information contextually, so that applications can make intelligent use of sensor data.
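Audience has not said how its Sensor Fusion layer works internally, so, as a generic illustration only, here is a complementary filter, one of the simplest sensor-fusion techniques. It blends a gyroscope (smooth but drifting) with an accelerometer (noisy but drift-free) to estimate a phone’s pitch. The function names, the 0.98 weighting, and the sample data are hypothetical choices for this sketch, not MQ100 parameters.

```python
import math


def accel_pitch_deg(ax, ay, az):
    """Pitch implied by the gravity vector alone: noisy, but it never drifts."""
    return math.degrees(math.atan2(-ax, math.hypot(ay, az)))


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyro rate (deg/s) with accelerometer pitch into one pitch estimate.

    The integrated gyro term is trusted short-term (weight alpha); the
    accelerometer term slowly pulls the estimate back, cancelling gyro drift.
    """
    pitch = None
    for gyro_rate_dps, (ax, ay, az) in samples:
        accel = accel_pitch_deg(ax, ay, az)
        if pitch is None:
            pitch = accel  # initialise from gravity on the first sample
        else:
            pitch = alpha * (pitch + gyro_rate_dps * dt) + (1 - alpha) * accel
    return pitch


# A phone lying flat whose gyro has a 1 deg/s bias, sampled at 100 Hz for 10 s.
flat = [(1.0, (0.0, 0.0, 9.81))] * 1000
gyro_only = sum(rate * 0.01 for rate, _ in flat)
print(round(gyro_only, 2))  # 10.0 degrees of drift from the gyro alone
print(round(complementary_filter(flat, dt=0.01), 2))  # 0.49 degrees once fused
```

Neither sensor alone gives a usable answer here; blending them does, which is the basic promise of sensor fusion.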

Importantly, both chips aim to extend functionality while focusing on extremely low power consumption. The idea is for these coprocessors to do the vast majority of their work without ever waking the Application Processor (CPU core).

Apple released something similar last year: the M7 motion coprocessor used in the iPhone 5s, iPad Air, and iPad mini with Retina display.

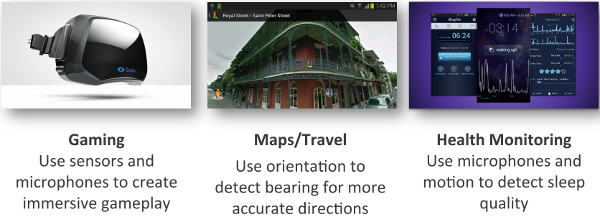

The idea behind the MQ100 is to offer comprehensive biometric and contextual sensing that goes beyond straight voice recognition and audio tagging. Not unlike Google’s Project Tango, it blends data from every sensor in the aforementioned Sensor Hub (Sensor Fusion) and tracks it intelligently over time to generate layers of information that applications can use together.

For example, imagine a shopping center navigation system that uses your phone’s camera, echolocation data from the mic array, and barometric pressure readings to guide you back to your parked car via a virtual “bread crumb” trail, all without turning on the generally battery-hungry GPS (which may be useless inside a shopping center anyway). That is the idea behind Sensor Fusion, and it remains extremely battery efficient: Audience claims that the MQ100 is meant to use less than 5 mW at peak!
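A bread-crumb trail like this is commonly built with pedestrian dead reckoning: count steps, advance by an estimated stride length along the current heading, and use barometric pressure to tell floors apart. The Python sketch below illustrates that generic approach. It is not Audience’s algorithm, it ignores the camera and echolocation inputs mentioned above, and the 0.7 m stride and function names are hypothetical. The pressure conversion is the standard-atmosphere approximation, usable for relative height changes over a short walk because weather-driven pressure changes are slow.

```python
import math


def pressure_to_altitude_m(p_hpa, p0_hpa=1013.25):
    """Standard-atmosphere approximation of altitude from barometric pressure."""
    return 44330.0 * (1.0 - (p_hpa / p0_hpa) ** (1.0 / 5.255))


def breadcrumb_trail(steps, start_pressure_hpa, stride_m=0.7):
    """Dead-reckon a trail of (east_m, north_m, height_m) points from the parking spot.

    Each step is (heading_deg, pressure_hpa): heading is clockwise from north
    (e.g. from a fused compass), and height is relative to the starting pressure.
    """
    ref_alt = pressure_to_altitude_m(start_pressure_hpa)
    east = north = 0.0
    trail = [(0.0, 0.0, 0.0)]
    for heading_deg, pressure_hpa in steps:
        heading = math.radians(heading_deg)
        east += stride_m * math.sin(heading)
        north += stride_m * math.cos(heading)
        trail.append((east, north, pressure_to_altitude_m(pressure_hpa) - ref_alt))
    return trail


def route_back(trail):
    """Follow the bread crumbs in reverse to return to the car."""
    return list(reversed(trail))


# Walk 4 steps east on the parking level, then 2 steps north after going up about one floor.
trail = breadcrumb_trail([(90.0, 1013.25)] * 4 + [(0.0, 1012.83)] * 2, start_pressure_hpa=1013.25)
for east, north, height in route_back(trail):
    print(f"{east:5.2f} E  {north:5.2f} N  {height:4.1f} m")
```

Step counts, headings, and pressure all come from low-power sensors, which is why a trail like this can in principle be maintained without the GPS radio; in practice, heading and stride errors accumulate, which is where extra inputs such as the camera would help.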

If the Sensor Hub is the brain, then Sensor Fusion is the mind. Sensor Fusion is a very forward-looking approach, with applications in both Virtual Reality (think Oculus Rift-alikes for mobile) and Augmented Reality (as in the bread-crumb example). Eventually, Sensor Fusion may become a key component of wearable technology, particularly for fitness and general health monitoring, an area Audience believes will be crucial in the years to come.

The idea of your glasses or watch calling back to your insurer, family, or workplace to warn of an impending health problem may seem like science fiction, but for better or worse, it’s really only a few years away. For now though, the Audience Sensor Hub- and Fusion-equipped eS700 series and MQ100 Motion Processor should be available in phones and tablets later this year.

Follow Dorian and Tom’s Hardware on Google+