Fujitsu's 'DLU' AI Processor Promises 10x The Performance Per Watt Of 'The Competition'

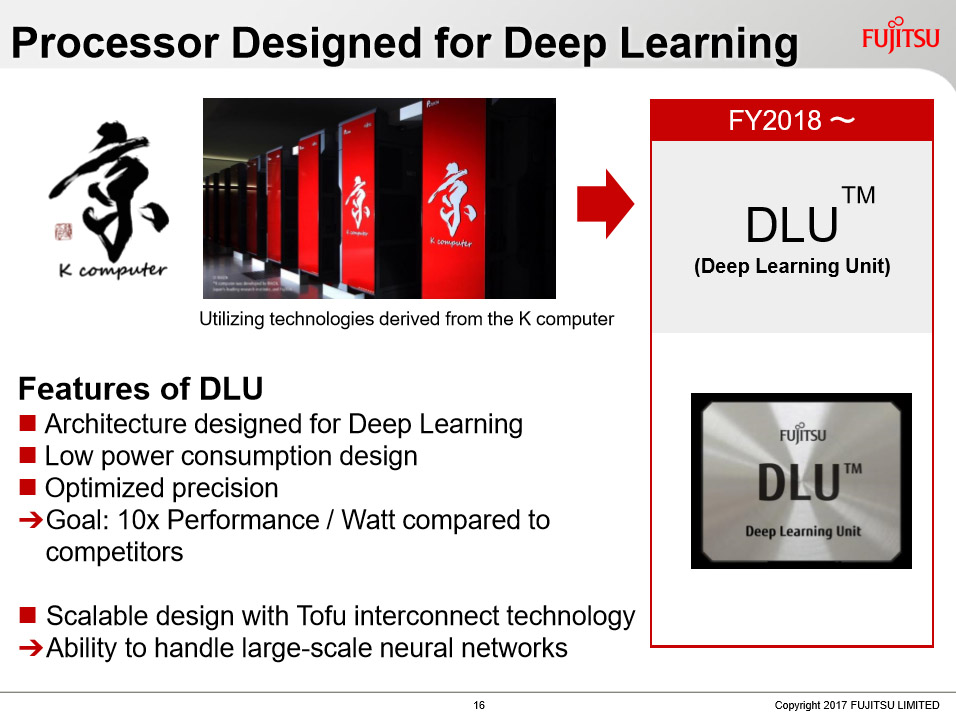

Fujitsu has announced that it is developing an AI processor it calls the Deep Learning Unit (DLU). In addition to its plans to build an AI supercomputer from 24 Nvidia DGX-1 systems, Fujitsu aims to bring out, by 2018, an AI processor that delivers more than ten times the performance per watt of the competition.

The fact that the company is developing a DLU isn’t surprising in and of itself. Fujitsu has more than three decades of experience developing AI and associated technologies, and its K Computer ranks eighth on the TOP500 list of the world's fastest supercomputers. We are, though, rather taken aback by the ambitious 10x performance-per-watt goal. Although Fujitsu isn’t naming names, the “competition” it is referring to clearly includes industry heavyweights such as Nvidia, Google, Intel, and AMD.

All of the aforementioned companies are heavily invested in AI and deep learning. To say the competition is fierce would be a massive understatement.

Takumi Maruyama, senior director of Fujitsu’s AI Platform Business Unit, laid out Fujitsu’s DLU plans at ISC 2017. Maruyama has been involved in SPARC processor development since 1993 and is currently heading up the DLU project. According to the charts provided by Maruyama, Fujitsu's DLU coprocessor is designed for deep learning workloads and low power consumption.

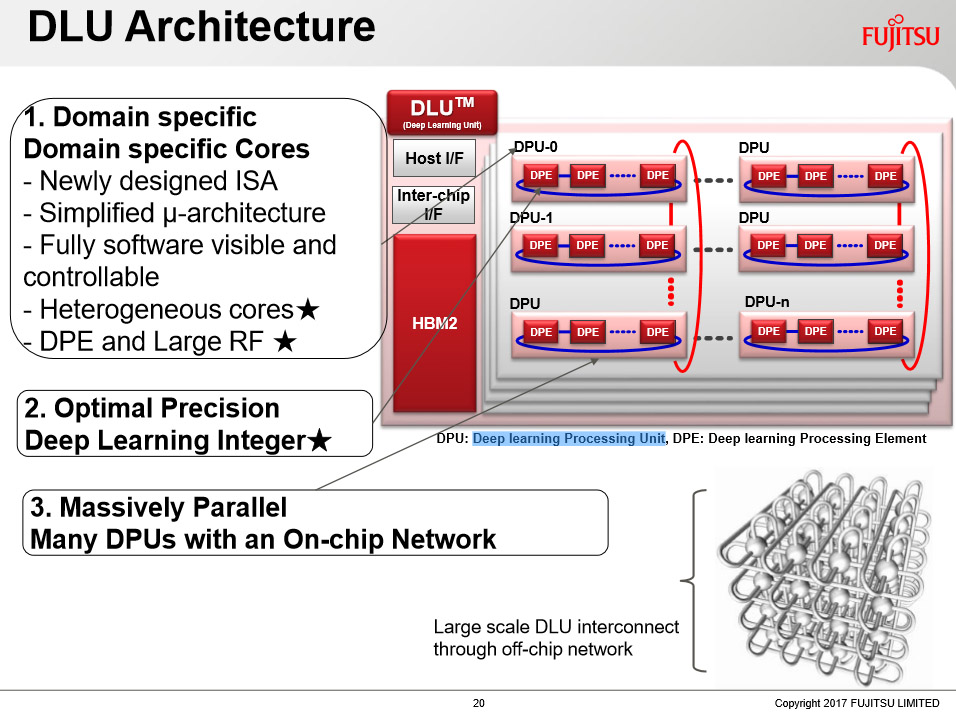

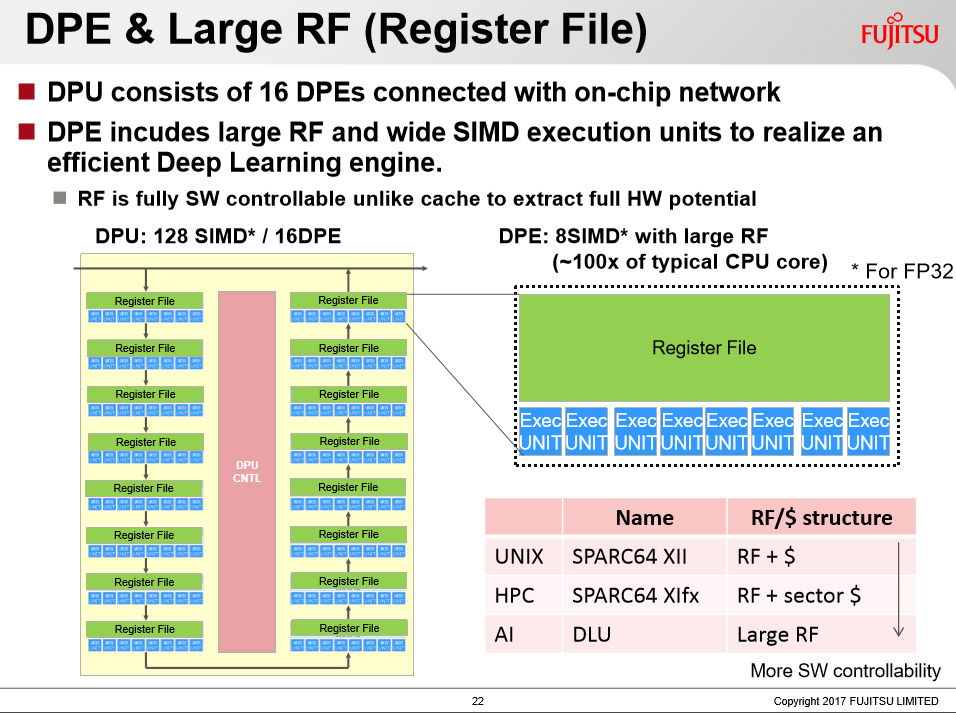

The chip features 16 deep learning processing elements (DPEs), each containing eight single-instruction, multiple-data (SIMD) execution units, with a scalable design utilizing the company's Tofu interconnect technology.
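To make the reported layout concrete, here is a minimal Python sketch that encodes only the two figures given above (16 DPEs, eight SIMD units each) and multiplies them out. The class name `DLUConfig` and the `chips` parameter for a multi-chip system linked over Tofu are hypothetical illustrations, not anything Fujitsu has published; SIMD width and clock speed weren't disclosed, so the sketch counts units only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DLUConfig:
    """Hypothetical model of the per-chip figures reported for Fujitsu's DLU."""

    dpe_count: int = 16          # deep learning processing elements (DPEs) per chip
    simd_units_per_dpe: int = 8  # SIMD execution units inside each DPE

    def simd_units_per_chip(self) -> int:
        # Every DPE has the same number of SIMD units, so the chip total is a product.
        return self.dpe_count * self.simd_units_per_dpe

    def simd_units_in_system(self, chips: int) -> int:
        # HYPOTHETICAL: assumes a system of identical chips linked by the Tofu
        # interconnect; this counts units only and ignores interconnect overhead.
        if chips < 1:
            raise ValueError("chips must be at least 1")
        return chips * self.simd_units_per_chip()


chip = DLUConfig()
print(chip.simd_units_per_chip())  # 128
```

A raw unit count is not a performance figure: without the SIMD width, clock rate, and power draw, it can neither confirm nor refute the 10x performance-per-watt claim.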

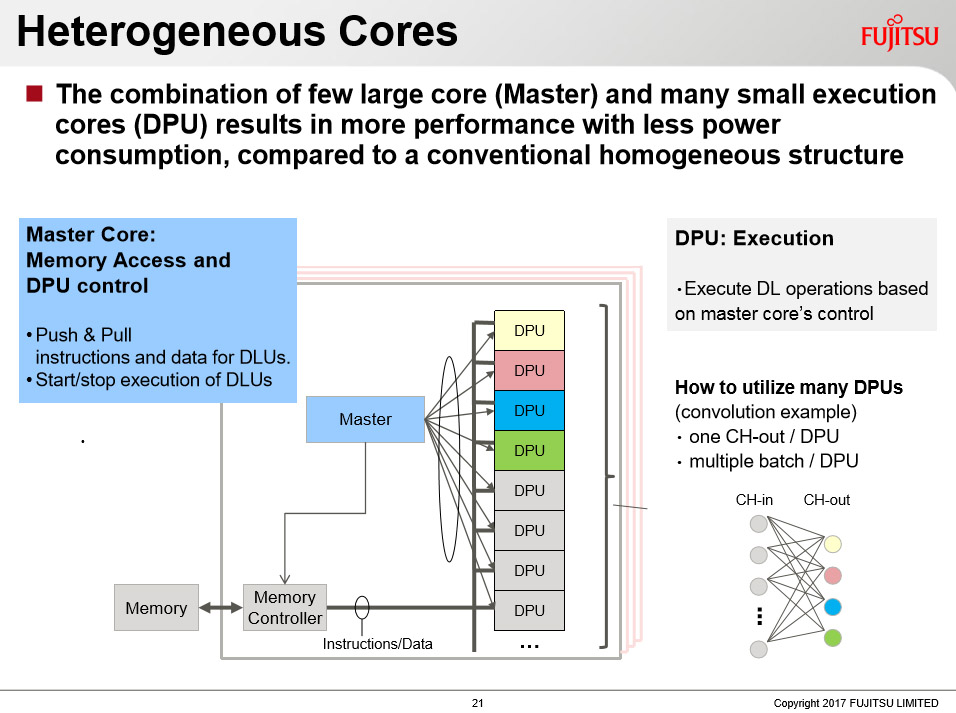

The DLU natively supports FP32, FP16, INT16, and INT8 data types and relies heavily on lower-precision math to improve both performance and energy efficiency when processing neural networks. Fujitsu stated that combining a few large “Master” cores with many small execution cores, such as its Deep Learning Processing Units (DPUs), will deliver higher performance at lower power consumption than anything currently on the market.
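Why lower precision helps is easiest to see in storage terms: an INT8 value takes a quarter of the bytes of an FP32 value, so the same memory bandwidth moves four times as many operands. The Python sketch below lists byte sizes for the four data types named above and applies a simple symmetric per-tensor INT8 quantization. That scheme is a common general-purpose technique chosen for illustration; Fujitsu has not said how the DLU maps FP32 values to INT8, and the function names are hypothetical.

```python
import struct

# Bytes per value for the four data types the DLU is reported to support natively.
# struct format codes: 'f' = FP32, 'e' = FP16 (IEEE 754 half), 'h' = INT16, 'b' = INT8.
BYTES_PER_VALUE = {
    "FP32": struct.calcsize("f"),
    "FP16": struct.calcsize("e"),
    "INT16": struct.calcsize("h"),
    "INT8": struct.calcsize("b"),
}


def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization (illustrative; not necessarily Fujitsu's scheme)."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero on all-zero input
    scale = max_abs / 127.0                       # largest magnitude maps to +/-127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Map INT8 codes back to approximate floating-point values."""
    return [x * scale for x in q]


weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)
print(len(weights) * BYTES_PER_VALUE["FP32"], "bytes as FP32 vs",
      len(q) * BYTES_PER_VALUE["INT8"], "bytes as INT8")
```

Because the largest magnitude maps exactly to 127, nothing is clipped and each value's rounding error is at most half of `scale`. Whether savings like these add up to a 10x efficiency gain depends on the hardware, which is exactly the open question.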

Can Fujitsu pull it off? All we can say is that if the company comes even close to its 10x goal, the AI industry is going to get mighty interesting in 2018.


Steven Lynch is a contributor for Tom’s Hardware, primarily covering case reviews and news.


tslot05qsljgo9ed
Quote: 10x Performance / Watt compared to competitors

Are they comparing that 10x number to past products (Nvidia Pascal) or current products (Nvidia Volta)?

Nvidia is not standing still, and they too will have new products in 2018.

FranticPonE
It's a GPU's general purpose SIMD units... that's more efficient because they say it will be. "Look, our 'master units' coordinate memory access and SIMD units!" ... Exactly like GPU "wavefronts" or whatever do now. Good job, Fujitsu, you reinvented the wheel and then bragged about it. Just like Google did with its own "Deep Learning" chip, and just like Google's chip, it will no doubt be neither faster nor more efficient than what Nvidia and AMD already do.

bit_user
Quote: ... with a scalable design utilizing the company's Tofu interconnect technology

I love it! Finally, something Japanese-sounding in a Japanese chip!

Seriously, did Fujitsu ever set any records with their SPARC CPUs? I don't recall reading anything about it, but I don't follow the supercomputer or mainframe sectors very closely.

I was hoping to see some exotic new technology, like phase-change memory embedded with the processing elements. But, unless their Tofu interconnect somehow lets them scale incredibly well, I just don't see any reason they should beat (or even match) Volta or Google's Gen2 TPU.


bit_user
Quote: It's a GPU's general purpose SIMD units... that's more efficient because they say it will be.

Yes. The whole thing seems incredibly GPU-like to me. I guess it has the advantage that latencies and memory access patterns could be more predictable, allowing for a number of simplifications not possible in GPUs. But you don't get an order of magnitude from that.

Good luck to them.