Intel Arc Alchemist: Release Date, Specs, Everything We Know

(Updated) Intel's Arc Alchemist looks to compete with AMD and Nvidia GPUs


Intel has been hyping up Xe Graphics for about two years, but the Intel Arc Alchemist GPU will finally bring some needed performance and competition from Team Blue to the discrete GPU space. This is the first 'real' dedicated Intel GPU since the i740 back in 1998, or technically, the first proper discrete GPU after the Intel Xe DG1 paved the way last year. The competition among the best graphics cards is fierce, and Intel's current integrated graphics solutions basically don't even rank on our GPU benchmarks hierarchy (UHD Graphics 630 sits at 1.8% of the RTX 3090 based on just 1080p medium performance).

The latest announcement from Intel is that the Arc A770 is coming October 12, starting at $329. That's a lot lower on pricing than what was initially rumored, but then the A770 is also coming out far later than originally intended. With Intel targeting better than RTX 3060 levels of performance, at a potentially lower price and with more VRAM, things are shaping up nicely for Team Blue.

Could Intel, purveyor of low-performance integrated GPUs ("the most popular GPUs in the world"), possibly hope to compete? Yes, it could. Plenty of questions remain, but with the official China-first launch of Intel Arc Alchemist laptops and the desktop Intel Arc A380 now behind us, plus plenty of additional details of the Alchemist GPU architecture, we now have a reasonable idea of what to expect. Intel has been gearing up its driver team for the launch, fixing compatibility and performance issues on existing graphics solutions, hopefully getting ready for the US and "rest of the world" launch. Frankly, there's nowhere to go from here but up.

The difficulty Intel faces in cracking the dedicated GPU market shouldn't be underestimated. AMD's Big Navi / RDNA 2 architecture has competed with Nvidia's Ampere architecture since late 2020. While the first Xe GPUs arrived in 2020, in the form of Tiger Lake mobile processors, and Xe DG1 showed up by the middle of 2021, neither one can hope to compete with even GPUs from several generations back. Overall, Xe DG1 performed about the same as Nvidia's GT 1030 GDDR5, a weak-sauce GPU hailing from May 2017. It also delivered only a bit better than half the performance of 2016's GTX 1050 2GB, despite having twice as much memory.

The Arc A380 did better, but it still only managed to match or slightly exceed the performance of the GTX 1650 (GDDR5 variant) and RX 6400. Video encoding hardware was a high point at least. More importantly, the A380 is potentially about a quarter of the performance of the top-end Arc A770, so there's still hope.

Intel has a steep mountain to ascend if it wants to be taken seriously in the dedicated GPU space. Here's a breakdown of the Arc Alchemist architecture, a look at the announced products, and some Intel-provided benchmarks, all of which give us a glimpse into how Intel hopes to reach the summit. Truthfully, we're just hoping Intel can make it to base camp, leaving the actual summiting for the future Battlemage, Celestial, and Druid architectures. But we'll leave those for a future discussion.

- Specs: Up to 512 Vector Engines / 4096 Shader Cores
- Memory: Up to 16GB GDDR6
- Process: TSMC N6 (refined N7)
- Performance: Up to ~RTX 3060 Ti / ~RX 6700 level
- Release Date: June 2022 for the desktop A380 (China first); October 12, 2022 for the A770 and A750
- Price: $329 for Arc A770 (8GB), $139 for A380

Intel's Xe Graphics aspirations hit center stage in early 2018, starting with the hiring of Raja Koduri from AMD, followed by chip architect Jim Keller and graphics marketer Chris Hook, to name just a few. Raja was the driving force behind AMD's Radeon Technologies Group, created in November 2015, along with the Vega and Navi architectures. Clearly, the hope is that he can help lead Intel's GPU division into new frontiers, and Arc Alchemist represents the results of several years' worth of labor.

Not that Intel hasn't tried this before. Besides the i740 in 1998, Larrabee and the Xeon Phi had similar goals back in 2009, though the GPU aspect never really panned out. Plus, Intel has steadily improved the performance and features in its integrated graphics solutions over the past couple of decades (albeit at a slow and steady snail's pace). So, third time's the charm, right?

There's much more to building a good GPU than just saying you want to make one, and Intel has a lot to prove. Here's everything we know about the upcoming Intel Arc Alchemist, including specifications, performance expectations, release date, and more.

Potential Intel Arc Alchemist Specifications and Price

We'll get into the details of the Arc Alchemist architecture below, but let's start with the high-level overview. Intel has two different Arc Alchemist GPU dies, covering three product families: the 700-series, 500-series, and 300-series. The first letter of the model number denotes the architecture, so the "A" in A770 stands for Alchemist, and future Battlemage parts will likely be named Arc B770 or similar.

Here are the specifications for the various desktop Arc GPUs that Intel has revealed. All of the figures are now more or less confirmed, except for A580 power.

| Graphics Card | Arc A770 | Arc A750 | Arc A580 | Arc A380 |
|---|---|---|---|---|
| Architecture | ACM-G10 | ACM-G10 | ACM-G10 | ACM-G11 |
| Process Technology | TSMC N6 | TSMC N6 | TSMC N6 | TSMC N6 |
| Transistors (Billion) | 21.7 | 21.7 | 21.7 | 7.2 |
| Die Size (mm^2) | 406 | 406 | 406 | 157 |
| Xe-Cores | 32 | 28 | 24 | 8 |
| GPU Cores (Shaders) | 4096 | 3584 | 3072 | 1024 |
| XMX Engines | 512 | 448 | 384 | 128 |
| RTUs | 32 | 28 | 24 | 8 |
| Game Clock (MHz) | 2100 | 2050 | 1700 | 2000 |
| VRAM Speed (Gbps) | 17.5 | 16 | 16 | 15.5 |
| VRAM (GB) | 16/8 | 8 | 8 | 6 |
| VRAM Bus Width (bits) | 256 | 256 | 256 | 96 |
| ROPs | 128 | 112 | 96 | 32 |
| TMUs | 256 | 224 | 192 | 64 |
| TFLOPS FP32 (Game Clock) | 17.2 | 14.7 | 10.4 | 4.1 |
| TFLOPS FP16 (XMX) | 138 | 118 | 84 | 33 |
| Bandwidth (GB/s) | 560 | 512 | 512 | 186 |
| PCIe Link | x16 4.0 | x16 4.0 | x16 4.0 | x8 4.0 |
| TBP (watts) | 225 | 225 | 175 | 75 |
| Launch Date | Oct 12, 2022 | Oct 12, 2022 | Oct 2022? | June 2022 |
| Starting Price | $349 (16GB) / $329 (8GB) | $289 | ? | $139 |

These are Intel's official core specs on the full large and small Arc Alchemist chips. Based on the wafer and die shots, along with other information, we expect Intel to enter the dedicated GPU market with products spanning the entire budget to high-end range.

Intel has five different mobile SKUs, the A350M, A370M, A550M, A730M, and A770M. Those are understandably power constrained, while for desktops there will be (at least) A770, A750, A580, and A380 models. Intel also has Pro A40 and Pro A50 variants for professional markets (still using the smaller chip), and we can expect additional models for that market as well.

The Arc A300-series targets entry-level performance, the A500 series goes after the midrange market, and A700 is for the high-end offerings, though we'll have to see where they actually land in our GPU benchmarks hierarchy when they launch. Arc mobile GPUs along with the A380 were available in China first, but the desktop A580, A750, and A770 should be full worldwide launches. Releasing the first parts in China wasn't a good look, especially since one of Intel's previous "China only" products was Cannon Lake, with the Core i3-8121U that barely saw the light of day before getting buried deep underground.

We now have an official launch date for the A770 and presumably A750 as well, though we're not clear if the A580 will also be launching on that date or what it might cost. Intel did reveal that the A770 will start at $329, presumably for the 8GB variant — unless Intel is feeling very generous and the A770 Limited Edition made by Intel will also start at $329? (Probably not.)

Also note that the maximum theoretical compute performance in teraflops (TFLOPS) uses Intel's "Game Clock," which is supposedly an average of typical gaming clocks. AMD and Nvidia sort of have that as well but call it a boost clock, and in practice we usually see gaming clocks higher than the official values. For Intel, we're not sure what will happen. The Gunnir Arc A380 has a 2450 MHz boost clock for example, and in gaming it was pretty much locked in at that speed, though it also used more power than the 75W Intel gives for the reference design. It sounds like "typical" gaming scenarios will probably run at higher clocks, which would be good.

Real-world performance will also depend on drivers, which have been a sticking point for Intel in the past. Gaming performance will play a big role in determining how much Intel can charge for the various graphics card models.

As shown in our GPU price index, the prices of competing AMD and Nvidia GPUs have plummeted this year. Intel would have been in great shape if it had managed to launch Arc at the start of the year with reasonable prices, which was the original plan (actually, late 2021 was at one point in the cards). Many gamers might have given Intel GPUs a shot if they were priced at half the cost of the competition, even if they were slower.

That takes care of the high-level overview. Now let's dig into the finer points and discuss where these estimates come from.

Arc Alchemist: Performance According to Intel

Intel has provided us with reviewer's guides for both its mobile Arc GPUs and the desktop Arc A380. As with any manufacturer-provided benchmarks, you should expect the games and settings used were selected to show Arc in the best light possible. Intel tested 17 games for both laptops and desktops, but the game selection isn't even identical, which is a bit weird. It then compared performance against two mobile GeForce solutions for laptops, and against the GTX 1650 and RX 6400 for desktops. There's a lot of missing data, since the mobile chips represent the two fastest Arc solutions, but let's get to the actual numbers first.

| Game | Arc A770M | RTX 3060 | Arc A730M | RTX 3050 Ti |
|---|---|---|---|---|
| 17 Game Geometric Mean | 88.3 | 78.8 | 64.6 | 57.2 |
| Assassin's Creed Valhalla (High) | 69 | 74 | 50 | 38 |
| Borderlands 3 (Ultra) | 76 | 60 | 50 | 45 |
| Control (High) | 89 | 70 | 62 | 42 |
| Cyberpunk 2077 (Ultra) | 68 | 54 | 49 | 39 |
| Death Stranding (Ultra) | 102 | 113 | 87 | 89 |
| Dirt 5 (High) | 87 | 83 | 61 | 64 |
| F1 2021 (Ultra) | 123 | 96 | 86 | 68 |
| Far Cry 6 (Ultra) | 82 | 80 | 68 | 63 |
| Gears of War 5 (Ultra) | 73 | 72 | 52 | 58 |
| Horizon Zero Dawn (Ultimate Quality) | 68 | 80 | 50 | 63 |
| Metro Exodus (Ultra) | 69 | 53 | 54 | 39 |
| Red Dead Redemption 2 (High) | 77 | 66 | 60 | 46 |
| Strange Brigade (Ultra) | 172 | 134 | 123 | 98 |
| The Division 2 (Ultra) | 86 | 78 | 51 | 63 |
| The Witcher 3 (Ultra) | 141 | 124 | 101 | 96 |
| Total War Saga: Troy (Ultra) | 86 | 71 | 66 | 48 |
| Watch Dogs Legion (High) | 89 | 77 | 71 | 59 |

We'll start with the mobile benchmarks, since Intel used its two high-end models for these. Based on the numbers, Intel suggests its A770M can outperform the RTX 3060 mobile, and the A730M can outperform the RTX 3050 Ti mobile. The overall scores put the A770M 12% ahead of the RTX 3060, and the A730M was 13% ahead of the RTX 3050 Ti. However, looking at the individual game results, the A770M was anywhere from 15% slower to 30% faster, and the A730M was 21% slower to 48% faster.
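Intel's summary row is a geometric mean, and the percentages above are ratios of those means and of the individual game results. A minimal sketch that reproduces them from the table, assuming the 17 rows listed are exactly the games behind Intel's summary row:

```python
import math

# Per-game fps from Intel's mobile table above, in row order.
A770M = [69, 76, 89, 68, 102, 87, 123, 82, 73, 68, 69, 77, 172, 86, 141, 86, 89]
RTX3060 = [74, 60, 70, 54, 113, 83, 96, 80, 72, 80, 53, 66, 134, 78, 124, 71, 77]


def geomean(values):
    """Geometric mean: exp of the average natural log."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def relative(a, b):
    """Return (ratio of geometric means, worst per-game ratio, best per-game ratio)."""
    ratios = [x / y for x, y in zip(a, b)]
    return geomean(a) / geomean(b), min(ratios), max(ratios)


overall, worst, best = relative(A770M, RTX3060)
print(f"A770M vs RTX 3060: {overall - 1:+.0%} overall, {worst - 1:+.0%} to {best - 1:+.0%} per game")
```

The geometric mean keeps a single very high-fps title (Strange Brigade at 172 fps, for example) from dominating the summary the way it would in a plain average.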

That's a big spread in performance, and tweaks to some settings could have a significant impact on the fps results. Still, overall the list of games and settings used here looks pretty decent. However, Intel used laptops equipped with the older Core i7-11800H CPU on the Nvidia cards, and then used the latest and greatest Core i9-12900HK for the A770M and the Core i7-12700H for the A730M. There's no question that the Alder Lake CPUs are faster than the previous generation Tiger Lake variants, though without doing our own testing we can't say for certain how much CPU bottlenecks come into play.

There's also the question of how much power the various chips used, as the Nvidia GPUs have a wide power range. The RTX 3050 Ti can run at anywhere from 35W to 80W (Intel used a 60W model), and the RTX 3060 mobile has a range from 60W to 115W (Intel used an 85W model). Intel's Arc GPUs also have a power range, from 80W to 120W on the A730M and from 120W to 150W on the A770M. While Intel didn't specifically state the power level of its GPUs, even the minimum for each Arc part (80W and 120W) is higher than the Nvidia configuration it was compared against (60W and 85W).

| Game | Intel Arc A380 | GeForce GTX 1650 | Radeon RX 6400 |
|---|---|---|---|
| 17 Game Geometric Mean | 96.4 | 114.5 | 105.0 |
| Age of Empires 4 | 80 | 102 | 94 |
| Apex Legends | 101 | 124 | 112 |
| Battlefield V | 72 | 85 | 94 |
| Control | 67 | 75 | 72 |
| Destiny 2 | 88 | 109 | 89 |
| DOTA 2 | 230 | 267 | 266 |
| F1 2021 | 104 | 112 | 96 |
| GTA V | 142 | 164 | 180 |
| Hitman 3 | 77 | 89 | 91 |
| Naraka Bladepoint | 70 | 68 | 64 |
| NiZhan | 200 | 200 | 200 |
| PUBG | 78 | 107 | 95 |
| The Riftbreaker | 113 | 141 | 124 |
| The Witcher 3 | 85 | 101 | 81 |
| Total War: Troy | 78 | 98 | 75 |
| Warframe | 77 | 98 | 98 |
| Wolfenstein Youngblood | 95 | 130 | 96 |

Switching over to the desktop side of things, Intel provided the above A380 benchmarks. Note that this time the target is much lower, with the GTX 1650 and RX 6400 budget GPUs going up against the A380. Intel still has higher-end cards coming, but here's how it looks in the budget desktop market.

Even with the usual caveats about manufacturer-provided benchmarks, things aren't looking too good for the A380. The Radeon RX 6400 delivered 9% better performance than the Arc A380, with a range of -9% to +31%. The GTX 1650 did even better, with a 19% overall margin of victory and a range of just -3% up to +37%.

And look at the list of games: Age of Empires 4, Apex Legends, DOTA 2, GTAV, Naraka Bladepoint, NiZhan, PUBG, Warframe, The Witcher 3, and Wolfenstein Youngblood? Some of those are more than five years old, several are known to be pretty light in terms of requirements, and in general that's not a list of demanding titles. We get the idea of going after esports competitors, sort of, but wouldn't a serious esports gamer already have something more potent than a GTX 1650?

Keep in mind that Intel potentially has a part that will have four times as much raw compute, which we expect to see in an Arc A770 with a fully enabled ACM-G10 chip. If drivers and performance don't hold it back, such a card could still theoretically match the RTX 3070 and RX 6700 XT, but drivers are very much a concern right now.

On that note, our own Arc A380 review came to a slightly different result. We tested eight standard games at 1080p medium, 1080p ultra, and 1440p ultra, a month or two after Intel's initial tests and with newer drivers. Here's what our testing looks like.


| Game | Setting | Arc A380 | GTX 1650 | RX 6400 |
|---|---|---|---|---|
| 8 Game Average | 1080p Medium | 58.0 | 54.6 | 56.4 |
| | 1080p Ultra | 30.8 | 29.3 | 26.2 |
| | 1440p Ultra | 21.1 | 25.3 | |
| Borderlands 3 | 1080p Medium | 70.8 | 56.7 | 61.6 |
| | 1080p Ultra | 33.2 | 28.6 | 31.3 |
| | 1440p Ultra | 20.5 | 26.2 | |
| Far Cry 6 | 1080p Medium | 62.0 | 61.8 | 62.1 |
| | 1080p Ultra | 44.1 | 43.0 | 28.6 |
| | 1440p Ultra | 30.2 | 16.6 | |
| Flight Simulator | 1080p Medium | 42.3 | 44.8 | 41.6 |
| | 1080p Ultra | 24.8 | 27.6 | 24.7 |
| | 1440p Ultra | 17.4 | 25.2 | |
| Forza Horizon 5 | 1080p Medium | 63.0 | 64.0 | 65.9 |
| | 1080p Ultra | 22.8 | 27.0 | 21.7 |
| | 1440p Ultra | 19.2 | 25.1 | |
| Horizon Zero Dawn | 1080p Medium | 63.8 | 56.4 | 62.2 |
| | 1080p Ultra | 45.1 | 40.4 | 43.1 |
| | 1440p Ultra | 32.7 | 40.5 | |
| Red Dead Redemption 2 | 1080p Medium | 64.5 | 60.9 | 70.3 |
| | 1080p Ultra | 31.2 | 29.7 | 25.5 |
| | 1440p Ultra | 18.2 | 29.7 | |
| Total War Warhammer 3 | 1080p Medium | 37.1 | 33.4 | 25.6 |
| | 1080p Ultra | 18.9 | 18.1 | 12.4 |
| | 1440p Ultra | 12.4 | | |
| Watch Dogs Legion | 1080p Medium | 60.7 | 59.2 | 61.8 |
| | 1080p Ultra | 26.3 | 20.4 | 22.0 |
| | 1440p Ultra | 18.7 | 14.2 | |

Where Intel's earlier testing showed the A380 falling behind the 1650 and 6400 overall, our own testing gives it a slight lead. Game selection will of course play a role, and the A380 trails the faster GTX 1650 Super and RX 6500 XT by a decent amount despite having more memory and theoretically higher compute performance. Perhaps there's still room for further driver optimizations to close the gap.
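One caveat when lining these results up against Intel's: Intel's guide summarizes with a geometric mean, while the "8 Game Average" rows in our table appear to match a plain arithmetic mean of the per-game numbers (an inference from the figures, not a stated methodology). The two methods weight fast and slow games differently, so the summaries aren't strictly like-for-like. A minimal check on the 1080p Medium rows:

```python
import math

# 1080p Medium fps from our table, in row order (Borderlands 3 through Watch Dogs Legion).
RESULTS = {
    "Arc A380": [70.8, 62.0, 42.3, 63.0, 63.8, 64.5, 37.1, 60.7],
    "GTX 1650": [56.7, 61.8, 44.8, 64.0, 56.4, 60.9, 33.4, 59.2],
}


def arithmetic_mean(values):
    return sum(values) / len(values)


def geometric_mean(values):
    # exp of the mean log; each game counts by its ratio, so outliers pull less
    return math.exp(sum(math.log(v) for v in values) / len(values))


for card, fps in RESULTS.items():
    print(f"{card}: arithmetic {arithmetic_mean(fps):.1f} fps, geometric {geometric_mean(fps):.1f} fps")
```

The arithmetic means line up with the table's averages to within rounding, while the geometric means come out lower (about 56.8 fps for the A380).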

Arc Alchemist: Beyond the Integrated Graphics Barrier

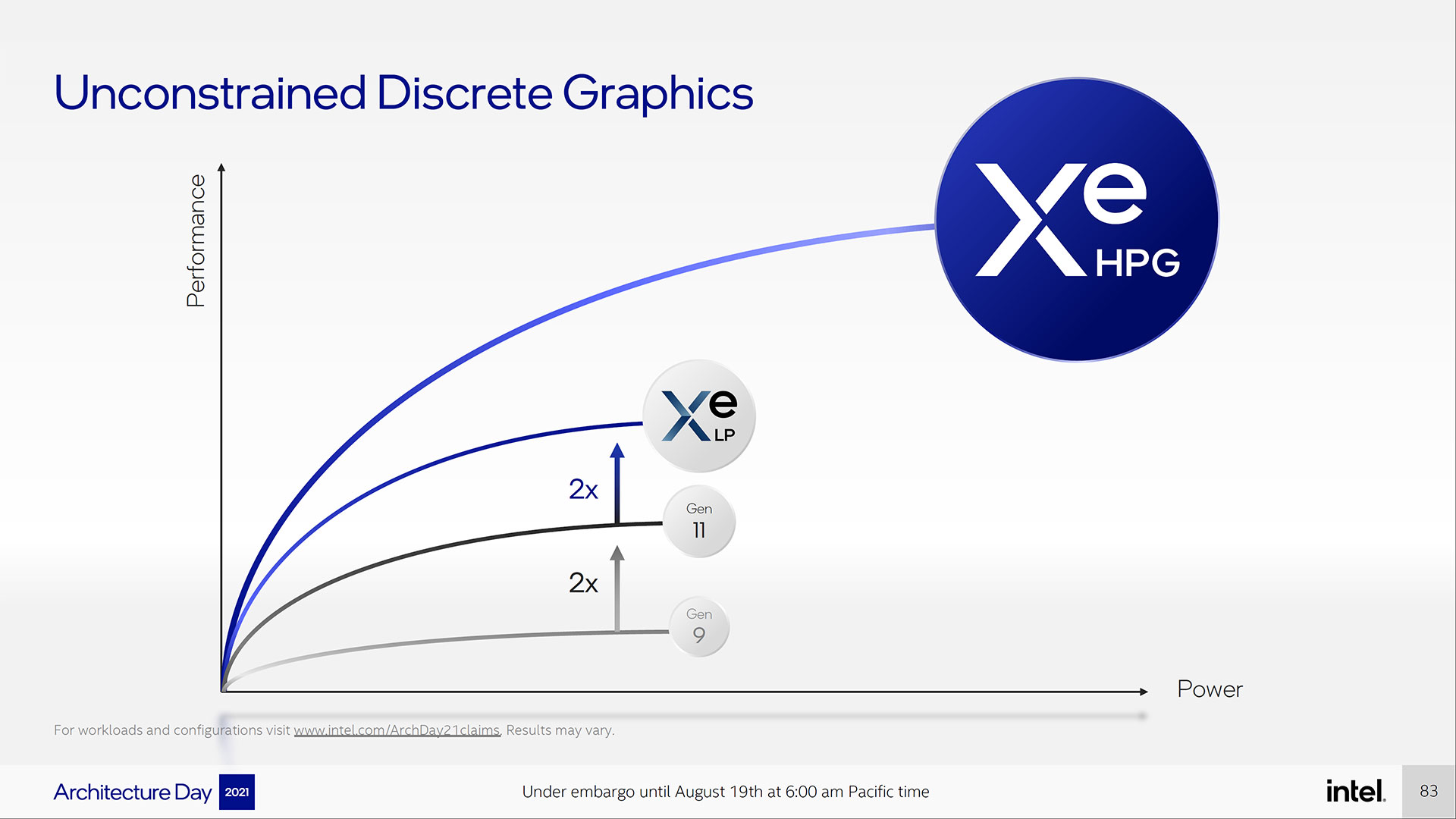

Over the past decade, we've seen several instances where Intel's integrated GPUs have basically doubled in theoretical performance. Despite the improvements, Intel frankly admits that integrated graphics solutions are constrained by many factors: Memory bandwidth and capacity, chip size, and total power requirements all play a role.

While CPUs that consume up to 250W of power exist (Intel's Core i9-12900K and Core i9-11900K both fall into this category), CPUs that top out at around 145W are far more common (e.g., AMD's Ryzen 9 5900X). Plus, integrated graphics have to share all of those resources with the CPU cores, which means the GPU is typically limited to about half of the total power budget. In contrast, dedicated graphics solutions have far fewer constraints.

Consider the first-generation Xe-LP Graphics found in Tiger Lake (TGL). Most of the chips have a 15W TDP, and even the later-gen 8-core TGL-H chips only use up to 45W (65W configurable TDP). However, TGL-H also cut the GPU budget down to 32 EUs (Execution Units), whereas the lower-power TGL chips had 96 EUs. The new Alder Lake desktop chips also use 32 EUs, though the mobile H-series parts get 96 EUs and a higher power limit.

The top AMD and Nvidia dedicated graphics cards like the Radeon RX 6900 XT and GeForce RTX 3080 Ti have a power budget of 300W to 350W for the reference design, with custom cards pulling as much as 400W. Intel doesn't plan to go that high for its reference Arc A770/A750 designs, which target just 225W, but we'll have to see what happens with the third-party AIB cards. Gunnir's A380 increased the power limit by 23% compared to the reference specs, so a similar increase on the A700 cards could mean a power limit of roughly 275W.

Intel Arc Alchemist Architecture

Intel may be a newcomer to the dedicated graphics card market, but it's by no means new to making GPUs. Current Alder Lake (as well as the previous generation Rocket Lake and Tiger Lake) CPUs use the Xe Graphics architecture, the 12th generation of graphics updates from Intel.

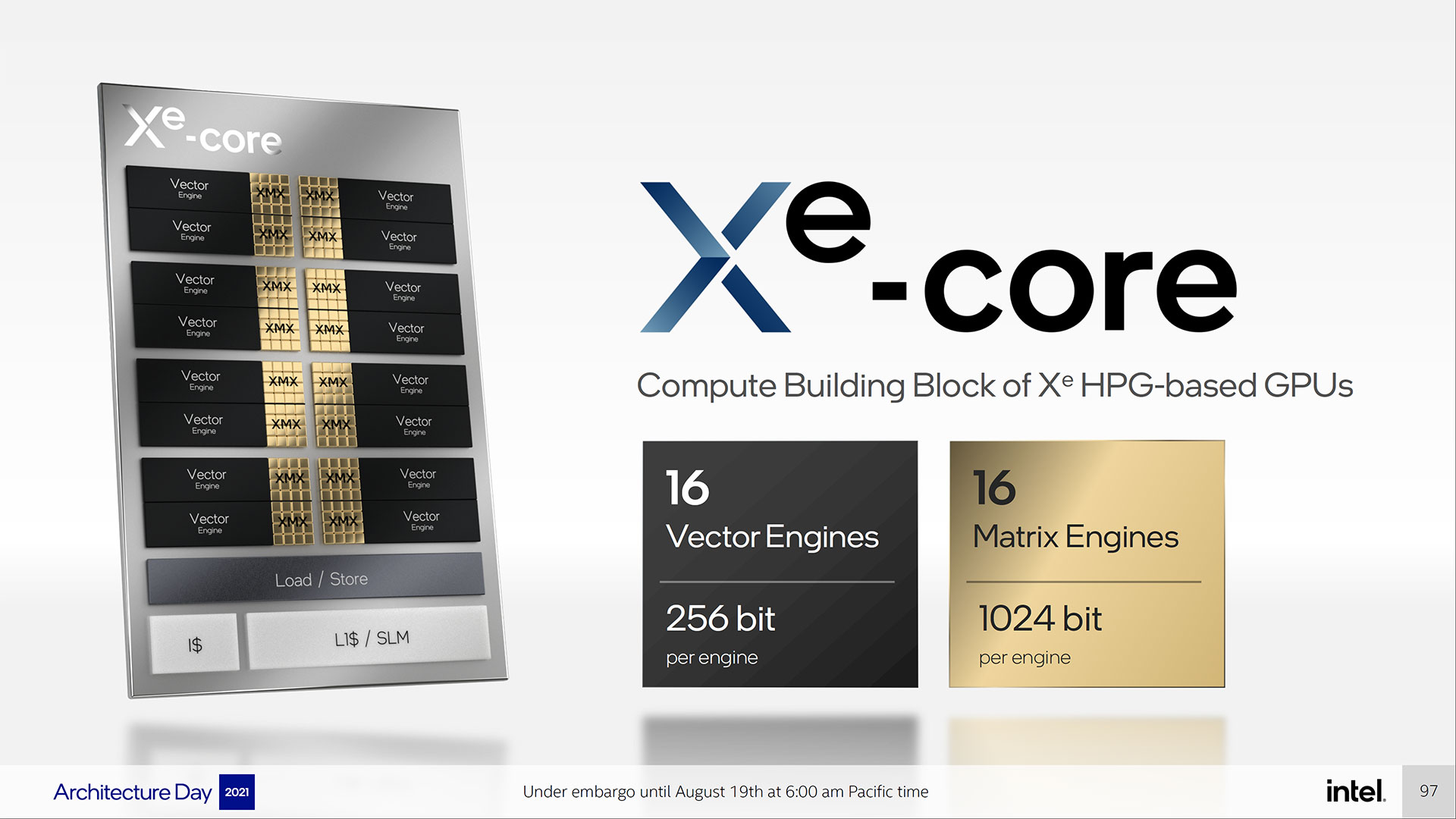

The first generation of Intel graphics was found in the i740 and 810/815 chipsets for socket 370, back in 1998-2000. Arc Alchemist, in a sense, is second-gen Xe Graphics (i.e., Gen13 overall), and it's common for each generation of GPUs to build on the previous architecture, adding various improvements and enhancements. The Arc Alchemist architecture changes are apparently large enough that Intel has ditched the Execution Unit naming of previous architectures and the main building block is now called the Xe-core.

To start, Arc Alchemist will support the full DirectX 12 Ultimate feature set. That means the addition of several key technologies. The headline item is ray tracing support, though that might not be the most important in practice. Variable rate shading, mesh shaders, and sampler feedback are also required — all of which are also supported by Nvidia's RTX 20-series Turing architecture from 2018, if you're wondering. Sampler feedback helps to optimize the way shaders work on data and can improve performance without reducing image quality.

The Xe-core contains 16 Vector Engines (formerly or sometimes still called Execution Units), each of which operates on a 256-bit SIMD chunk (single instruction, multiple data). The Vector Engine can process eight FP32 operations simultaneously, one per 32-bit lane, and each of those lanes is traditionally called a "GPU core" in AMD and Nvidia architectures, though that's a misnomer. Other data types are supported by the Vector Engine, including FP16 and DP4a, but it's joined by a second new pipeline, the XMX Engine (Xe Matrix eXtensions).

Each XMX pipeline operates on a 1024-bit chunk of data, which can contain 64 individual pieces of FP16 data or 128 pieces of INT8 data. The Matrix Engines are effectively Intel's equivalent of Nvidia's Tensor cores, and they're being put to similar use. They offer a huge amount of potential FP16 and INT8 computational performance, and should prove very capable in AI and machine learning workloads. More on this below.
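The element counts above are simply operand width divided by element width. A trivial sketch of that bookkeeping, using the 256-bit and 1024-bit widths Intel describes (it says nothing about how the hardware actually schedules work):

```python
def lanes(operand_bits: int, element_bits: int) -> int:
    """How many elements of a given width fit in one SIMD operand."""
    if operand_bits % element_bits:
        raise ValueError("element width must divide the operand width")
    return operand_bits // element_bits


VECTOR_ENGINE_BITS = 256  # per Vector Engine
XMX_ENGINE_BITS = 1024    # per XMX Engine

print("Vector Engine FP32 lanes:", lanes(VECTOR_ENGINE_BITS, 32))  # 8
print("XMX FP16 elements:", lanes(XMX_ENGINE_BITS, 16))            # 64
print("XMX INT8 elements:", lanes(XMX_ENGINE_BITS, 8))             # 128
```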

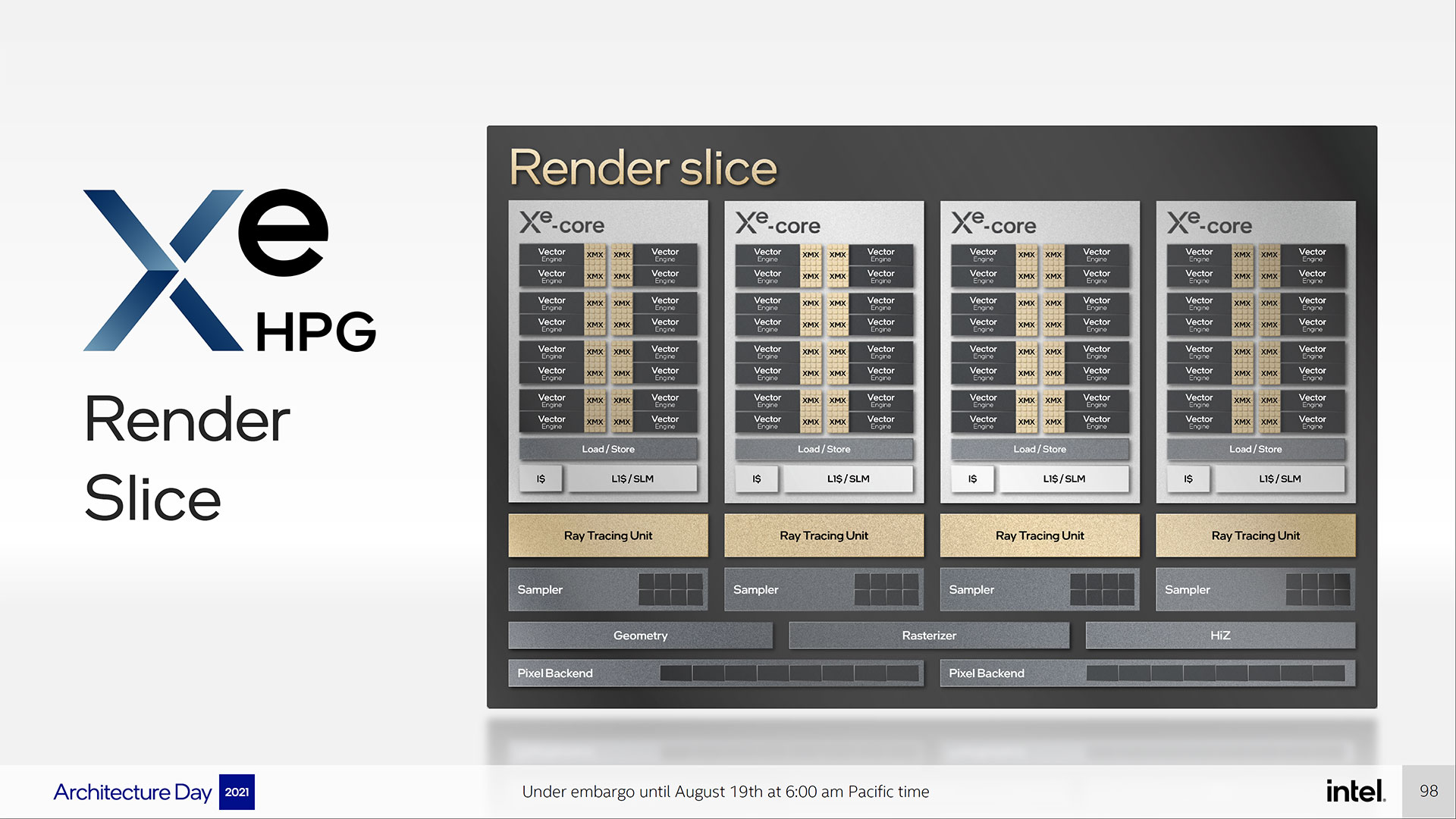

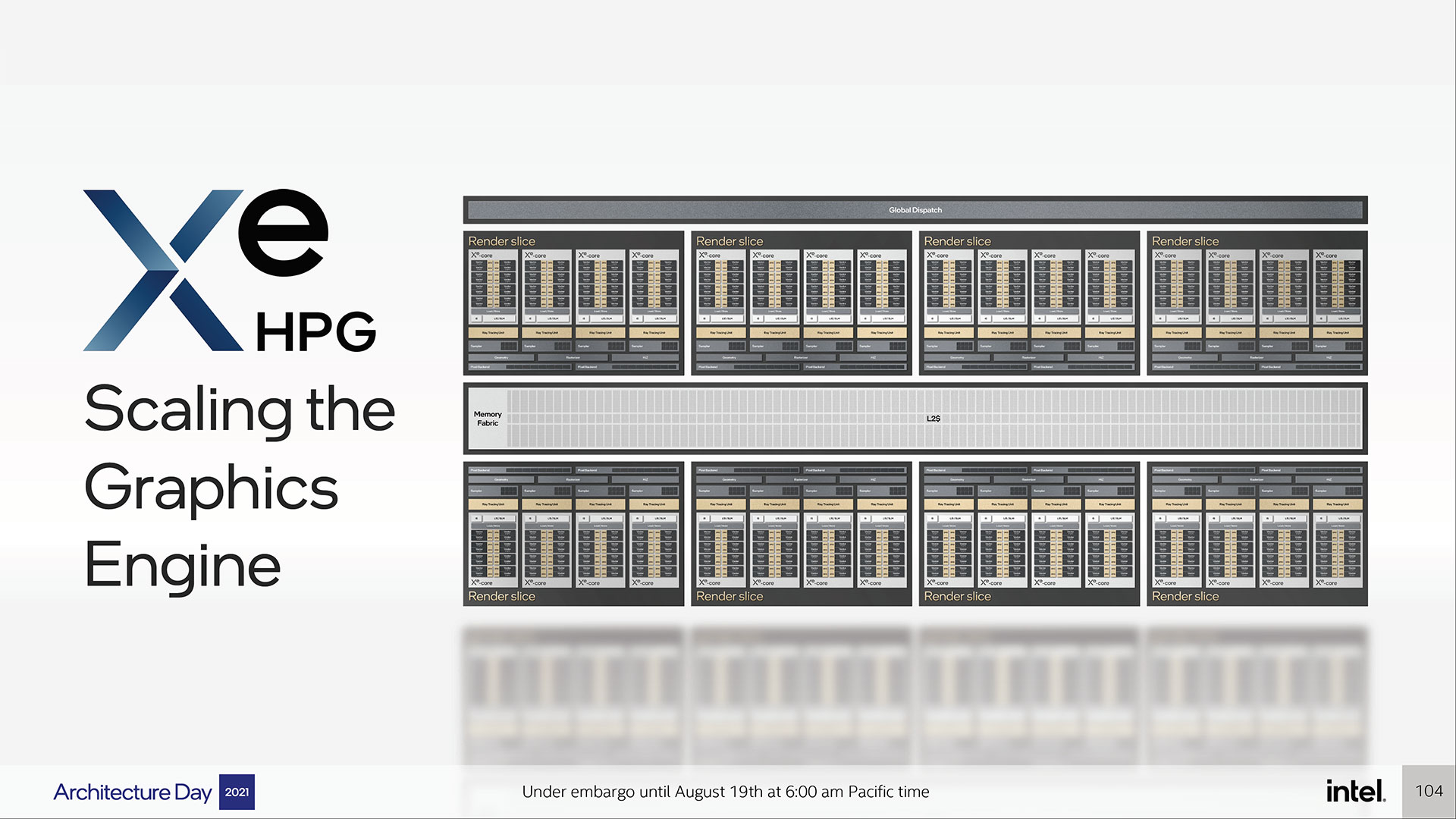

Xe-core represents just one of the building blocks used for Intel's Arc GPUs. Like previous designs, the next level up from the Xe-core is called a render slice (analogous to an Nvidia GPC, sort of) that contains four Xe-core blocks. In total, a render slice contains 64 Vector Engines and 64 XMX Engines, plus additional hardware. That additional hardware includes four ray tracing units (one per Xe-core), geometry and rasterization pipelines, samplers (TMUs, aka Texture Mapping Units), and the pixel backend (ROPs).

The above block diagrams may or may not be fully accurate down to the individual block level. For example, looking at the diagrams, it would appear each render slice contains 32 TMUs and 16 ROPs. That would make sense, but Intel has not yet confirmed those numbers (even though that's what we used in the above specs table).

The ray tracing units (RTUs) are another interesting item. Intel detailed their capabilities and says each RTU can do up to 12 ray/box BVH intersections per cycle, along with a single ray/triangle intersection. There's dedicated BVH hardware as well (unlike on AMD's RDNA 2 GPUs), so a single Intel RTU should pack substantially more ray tracing power than a single RDNA 2 ray accelerator or maybe even an Nvidia RT core. However, the maximum number of RTUs is only 32, whereas AMD has up to 80 ray accelerators and Nvidia has up to 84 RT cores. But Intel isn't really looking to compete with the top cards this round.

In our testing of the Arc A380, we found ray tracing performance was relatively weak, which is understandable considering its eight RTUs. However, thanks to the architecture and likely the 6GB of VRAM, ray tracing performance did tend to match or even exceed AMD's RX 6500 XT. Again, the high-end cards could end up being quite decent, and Intel claims the A750 can more than match the RTX 3060 in DXR performance while the A770 should be closer to the RTX 3060 Ti or even RTX 3070.

Finally, Intel uses multiple render slices to create the entire GPU, with the L2 cache and the memory fabric tying everything together. Also not shown are the video processing blocks and output hardware, and those take up additional space on the GPU. The maximum Xe HPG configuration for the initial Arc Alchemist launch will have up to eight render slices. Ignoring the change in naming from EU to Vector Engine, that still gives the same maximum configuration of 512 EU/Vector Engines that's been rumored for the past 18 months.

Intel includes 2MB of L2 cache per render slice, so 4MB on the smaller ACM-G11 and 16MB total on the ACM-G10. There will be multiple Arc configurations, though. So far, Intel has shown one with two render slices and a larger chip used in the above block diagram that comes with eight render slices. Given how much benefit AMD saw from its Infinity Cache, we have to wonder how much the 16MB cache will help with Arc performance. Even the smaller chip's 4MB L2 cache is larger than what Nvidia uses on its budget GPUs: the GTX 1650 has only 1MB of L2, and the RTX 3050 has 2MB.
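Because everything hangs off the render slice, the headline unit counts for the two full dies follow from a handful of per-block numbers. A minimal sketch, assuming the per-slice TMU and ROP counts read from Intel's diagrams (which Intel hasn't confirmed), and applying only to fully enabled dies, since it's not clear how cut-down cards trim cache:

```python
from dataclasses import dataclass

# Per-block counts as described in this article; TMUs/ROPs per slice are unconfirmed.
XE_CORES_PER_SLICE = 4
VECTOR_ENGINES_PER_XE_CORE = 16
RTUS_PER_XE_CORE = 1
TMUS_PER_SLICE = 32
ROPS_PER_SLICE = 16
L2_MB_PER_SLICE = 2


@dataclass(frozen=True)
class DieConfig:
    xe_cores: int
    vector_engines: int
    rtus: int
    tmus: int
    rops: int
    l2_mb: int


def full_die(render_slices: int) -> DieConfig:
    """Derive unit counts for a fully enabled die with the given number of render slices."""
    xe_cores = render_slices * XE_CORES_PER_SLICE
    return DieConfig(
        xe_cores=xe_cores,
        vector_engines=xe_cores * VECTOR_ENGINES_PER_XE_CORE,
        rtus=xe_cores * RTUS_PER_XE_CORE,
        tmus=render_slices * TMUS_PER_SLICE,
        rops=render_slices * ROPS_PER_SLICE,
        l2_mb=render_slices * L2_MB_PER_SLICE,
    )


print("ACM-G10:", full_die(8))
print("ACM-G11:", full_die(2))
```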

While it doesn't sound like Intel has specifically improved throughput on the Vector Engines compared to the EUs in Gen11/Gen12 solutions, that doesn't mean performance hasn't improved. DX12 Ultimate includes some new features that can also help performance, but the biggest change comes via boosted clock speeds. We've seen Intel's Arc A380 clock at up to 2.45 GHz (boost clock), even though the official Game Clock is only 2.0 GHz. A770 has a Game Clock of 2.1 GHz, which yields a significant amount of raw compute.

The maximum configuration of Arc Alchemist will have up to eight render slices, each with four Xe-cores, 16 Vector Engines per Xe-core, and each Vector Engine can do eight FP32 operations per clock. Double that because a fused multiply-add (FMA, a staple of graphics and matrix math) counts as two floating-point operations, then multiply by a 2.1 GHz clock speed, and we get the theoretical performance in GFLOPS:

8 (render slices) × 4 (Xe-cores) × 16 (Vector Engines) × 8 (FP32 lanes) × 2 (FMA) × 2.1 (GHz) = 17,203 GFLOPS
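The same arithmetic applies to every card in the spec table, since the cut-down models simply have fewer Xe-cores. A minimal sketch that reproduces the table's FP32 figures from the Xe-core counts and Game Clocks; it computes a theoretical peak only, assuming every Vector Engine issues an FP32 FMA on all eight lanes every clock:

```python
def fp32_gflops(xe_cores: int, clock_ghz: float) -> float:
    """Peak FP32 GFLOPS: Xe-cores x 16 Vector Engines x 8 FP32 lanes x 2 (FMA) x clock."""
    vector_engines = xe_cores * 16
    flops_per_clock = vector_engines * 8 * 2  # an FMA counts as two FLOPs
    return flops_per_clock * clock_ghz


# Xe-core counts and Game Clocks (GHz) from the spec table.
CARDS = {
    "Arc A770": (32, 2.1),
    "Arc A750": (28, 2.05),
    "Arc A580": (24, 1.7),
    "Arc A380": (8, 2.0),
}

for name, (xe_cores, clock) in CARDS.items():
    print(f"{name}: {fp32_gflops(xe_cores, clock) / 1000:.1f} TFLOPS")
```

Swapping in the 2.45 GHz boost clock of Gunnir's A380 gives about 5.0 TFLOPS rather than 4.1, and the A380's 4.1 TFLOPS is roughly 24% of the A770's 17.2, which is where the "about a quarter" comparison comes from.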

Obviously, gigaflops (or teraflops) on their own don't tell us everything, but roughly 17.2 TFLOPS for the top configuration is nothing to scoff at. Nvidia's Ampere GPUs still theoretically have a lot more compute. The RTX 3080, as an example, has a maximum of 29.8 TFLOPS, but some of that gets shared with INT32 calculations. AMD's RX 6800 XT by comparison 'only' has 20.7 TFLOPS, but in many games, it delivers similar performance to the RTX 3080. In other words, raw theoretical compute absolutely doesn't tell the whole story. Arc Alchemist could punch above (or below!) its theoretical weight class.

Still, let's give Intel the benefit of the doubt for a moment. Arc Alchemist comes in below the theoretical level of the current top AMD and Nvidia GPUs, but if we skip the most expensive 'halo' cards, it looks competitive with the RX 6700 XT and RTX 3060 Ti. On paper, Intel Arc A770 could even land in the vicinity of the RTX 3070 and RX 6800 — assuming drivers and other factors don't hold it back.

XMX: Matrix Engines and Deep Learning for XeSS

We briefly mentioned the XMX blocks above. They're potentially just as useful as Nvidia's Tensor cores, which are used not just for DLSS, but also for other AI applications, including Nvidia Broadcast.

Theoretical compute from the XMX blocks is eight times higher than the GPU's Vector Engines, except that we'd be looking at FP16 compute rather than FP32. That's similar to what we've seen from Nvidia, although Nvidia also has a "sparsity" feature where multiplications by zero (which can happen a lot) get skipped, since the answer's always zero.
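The eight-to-one ratio falls out of the operand widths, assuming each XMX Engine retires one FMA per FP16 element per clock (that assumption is what makes the spec table's 138 TFLOPS figure line up). A small sketch of the arithmetic:

```python
# Per-engine widths as described above.
VECTOR_ENGINE_BITS = 256
XMX_ENGINE_BITS = 1024
FMA_FLOPS = 2  # one fused multiply-add = one multiply + one add

vector_fp32_flops_per_clock = (VECTOR_ENGINE_BITS // 32) * FMA_FLOPS  # 8 lanes -> 16
xmx_fp16_flops_per_clock = (XMX_ENGINE_BITS // 16) * FMA_FLOPS        # 64 elements -> 128

ratio = xmx_fp16_flops_per_clock / vector_fp32_flops_per_clock
print(ratio)  # 8.0

# Cross-check with the A770 row of the spec table: 512 XMX Engines at a 2.1 GHz Game Clock.
a770_fp16_xmx_tflops = 512 * xmx_fp16_flops_per_clock * 2.1e9 / 1e12
print(round(a770_fp16_xmx_tflops))  # 138
```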

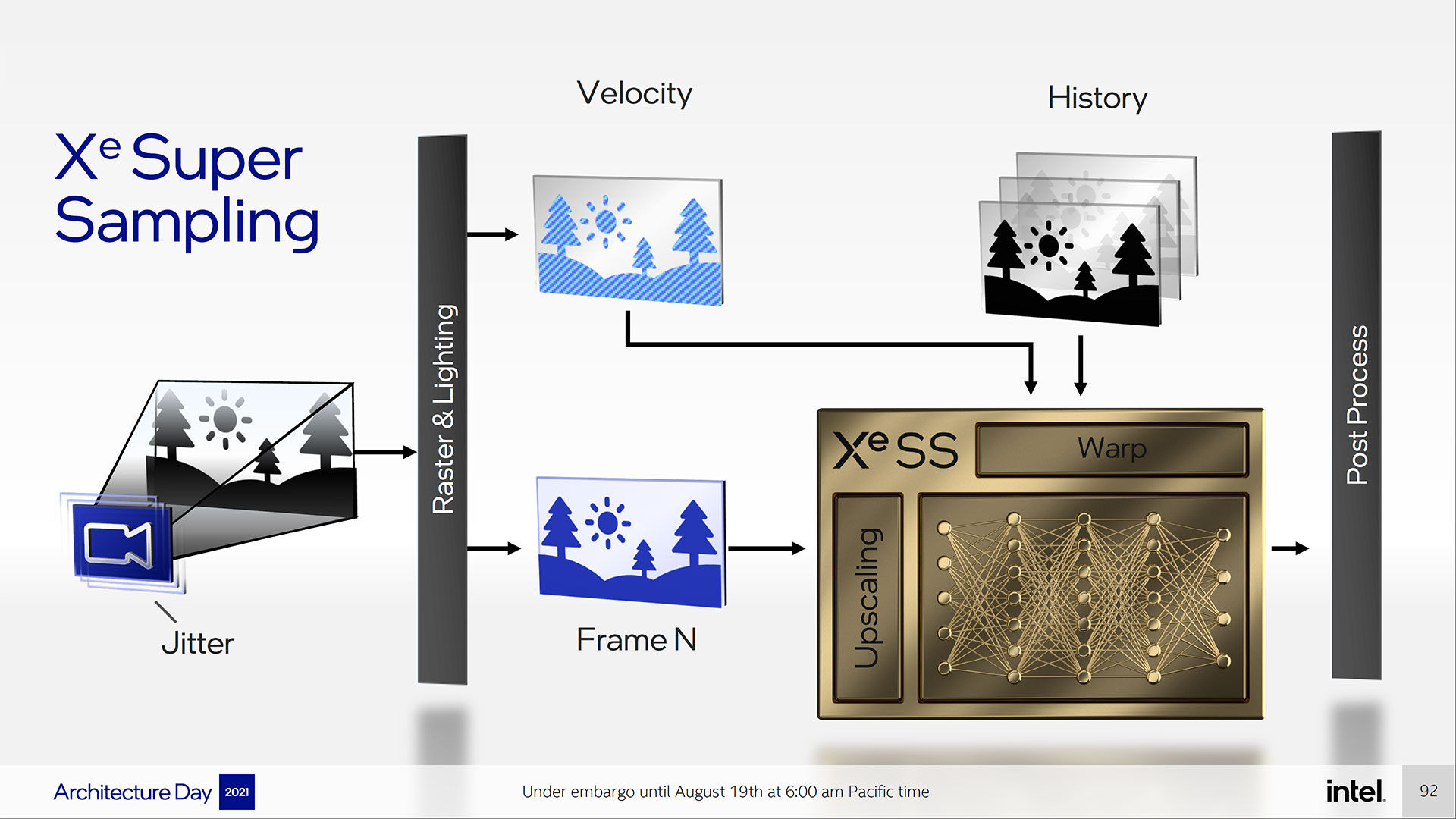

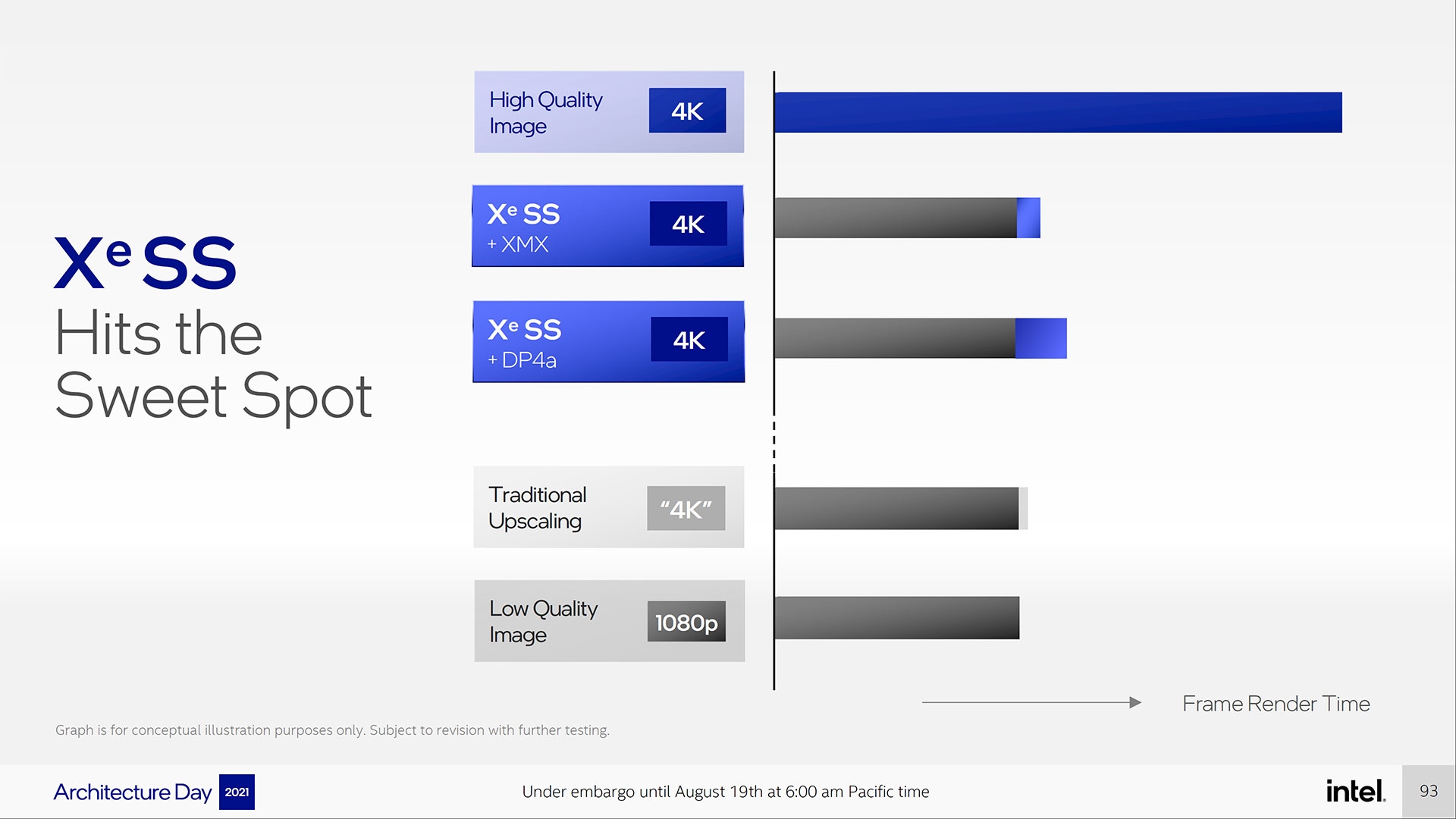

Intel also announced a new upscaling and image enhancement algorithm that it's calling XeSS: Xe Super Sampling. Intel didn't go deep into the details, but it's worth mentioning that Intel hired Anton Kaplanyan. He worked at Nvidia and played an important role in creating DLSS before heading over to Facebook to work on VR. It doesn't take much reading between the lines to conclude that he's likely doing a lot of the groundwork for XeSS now, and there are many similarities between DLSS and XeSS.

XeSS uses the current rendered frame, motion vectors, and data from previous frames and feeds all of that into a trained neural network that handles the upscaling and enhancement to produce a final image. That sounds basically the same as DLSS 2.0, though the details matter here, and we assume the neural network will end up with different results.
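Intel hasn't published XeSS's network, so the following is not Intel's code. To illustrate only what "motion vectors plus data from previous frames" means, here's a hand-tuned temporal accumulation sketch on a single row of pixels: history is fetched from where each pixel was last frame and blended with the new sample. Conceptually, a learned upscaler swaps fixed rules like this blend for a trained network. The integer motion-vector convention and the blend weight `alpha` are assumptions for illustration, and this sketch doesn't upscale at all.

```python
from typing import List, Optional


def reproject(history: List[float], motion: List[int]) -> List[Optional[float]]:
    """For each current pixel, fetch its value from the previous frame.

    motion[i] is a hypothetical integer offset: the content now at pixel i
    was at pixel i - motion[i] last frame. Off-screen sources yield None.
    """
    out: List[Optional[float]] = []
    for i, dx in enumerate(motion):
        src = i - dx
        out.append(history[src] if 0 <= src < len(history) else None)
    return out


def accumulate(current: List[float], history: List[float], motion: List[int],
               alpha: float = 0.1) -> List[float]:
    """Blend the new frame with reprojected history; pixels without history use the new sample."""
    blended = []
    for c, h in zip(current, reproject(history, motion)):
        blended.append(c if h is None else alpha * c + (1 - alpha) * h)
    return blended


print(accumulate([5.0, 5.0, 5.0, 5.0], [0.0, 10.0, 20.0, 30.0], [1, 1, 1, 1], alpha=0.5))
# [5.0, 2.5, 7.5, 12.5]
```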

Intel did provide a demo using Unreal Engine showing XeSS in action, and it looked good when comparing 1080p upscaled to 4K via XeSS against native 4K rendering. Still, that was one demo, and we'll have to see XeSS in actual shipping games before rendering any verdict.

XeSS also has to compete against AMD's new and "universal" upscaling solution, FSR 2.0. While we'd still give DLSS the edge in terms of pure image quality, FSR 2.0 comes very close and can work on RX 6000-series GPUs, as well as older RX 500-series, RX Vega, GTX going all the way back to at least the 700-series, and even Intel integrated graphics. It will also work on Arc GPUs.

The good news with DLSS, FSR 2.0, and now XeSS is that they should all take the same basic inputs: the current rendered frame, motion vectors, the depth buffer, and data from previous frames. Any game that supports any of these three algorithms should be able to support the other two with relatively minimal effort on the part of the game's developers, though politics and GPU vendor support will likely factor in as well.

More important than how it works will be how many game developers choose to use XeSS. They already have access to both DLSS and AMD FSR, which target the same problem of boosting performance and image quality. Adding a third option, from the newcomer to the dedicated GPU market no less, seems like a stretch for developers. However, Intel does offer a potential advantage over DLSS.

XeSS is designed to work in two modes. The highest performance mode utilizes the XMX hardware to do the upscaling and enhancement, but of course, that would only work on Intel's Arc GPUs. That's the same problem as DLSS, except with zero existing installed base, which would be a showstopper in terms of developer support. But Intel has a solution: XeSS will also work, in a lower-performance mode, using DP4a instructions, which compute a dot product of four 8-bit integers packed into a 32-bit register and add the result to a 32-bit accumulator.
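The DP4a operation itself is simple: treat each 32-bit register as four signed 8-bit integers, multiply them pairwise, and add the four products to an accumulator. A minimal Python emulation of the signed variant; the byte order (element 0 in the lowest byte) is an assumption for illustration, and it ignores the wraparound a real 32-bit accumulator would have on overflow:

```python
def pack_int8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word, element 0 in the lowest byte."""
    if len(values) != 4:
        raise ValueError("need exactly four values")
    word = 0
    for i, v in enumerate(values):
        if not -128 <= v <= 127:
            raise ValueError(f"{v} does not fit in int8")
        word |= (v & 0xFF) << (8 * i)
    return word


def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit integers."""
    out = []
    for i in range(4):
        byte = (word >> (8 * i)) & 0xFF
        out.append(byte - 256 if byte >= 128 else byte)
    return out


def dp4a(a_word, b_word, acc):
    """acc + dot(a, b) over the four packed int8 lanes (no int32 overflow modeling)."""
    return acc + sum(x * y for x, y in zip(unpack_int8x4(a_word), unpack_int8x4(b_word)))


a = pack_int8x4([1, 2, 3, 4])
b = pack_int8x4([5, 6, 7, 8])
print(hex(a), dp4a(a, b, 10))  # 0x4030201 80
```

Packing four INT8 values per register is what lets a GPU without matrix units still chew through the small-integer math of a quantized neural network at several times its FP32 rate.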

DP4a is widely supported by other GPUs, including Intel's previous generation Xe LP and multiple generations of AMD and Nvidia GPUs (Nvidia Pascal and later, or AMD Vega 20 and later), which means XeSS in DP4a mode will run on virtually any modern GPU. Support might not be as universal as AMD's FSR, which runs in shaders and basically works on any DirectX 11 or later capable GPU as far as we're aware, but quality should be better than FSR 1.0 and might even take on FSR 2.0 as well. It would also be very interesting if Intel supported Nvidia's Tensor cores, through DirectML or a similar library, but that wasn't discussed.

The big question will still be developer uptake. We'd love to see similar quality to DLSS 2.x, with support covering a broad range of graphics cards from all competitors. That's definitely something Nvidia is still missing with DLSS, as it requires an RTX card. But RTX cards already make up a huge chunk of the high-end gaming PC market, probably around 90% or more (depending on how you quantify high-end). So Intel basically has to start from scratch with XeSS, and that makes for a long uphill climb.

Arc Alchemist and GDDR6

Intel has confirmed Arc Alchemist GPUs will use GDDR6 memory. Most of the mobile variants are using 14Gbps speeds, while the A770M runs at 16Gbps and the A380 desktop part uses 15.5Gbps GDDR6. The future desktop models will use 16Gbps memory on the A750 and A580, while the A770 will use 17.5Gbps GDDR6.

There will be multiple Xe HPG / Arc Alchemist solutions, with varying capabilities. The larger chip, which we've focused on so far, has eight 32-bit GDDR6 channels, giving it a 256-bit interface. Intel has confirmed that the A770 can be configured with either 8GB or 16GB of memory. Interestingly, the mobile A730M trims that down to a 192-bit interface and the A550M uses a 128-bit interface. However, the desktop models will apparently all stick with the full 256-bit interface, likely for performance reasons.

The smaller Arc GPU only has a 96-bit maximum interface width, though the A370M and A350M cut that to a 64-bit width, while the A380 uses the full 96-bit option and comes with 6GB of GDDR6.
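Peak memory bandwidth follows directly from the per-pin data rate and the bus width. A minimal sketch that reproduces the bandwidth row of the spec table from the speeds and widths given in this article:

```python
def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin data rate (Gbps) x bus width (bits) / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# Memory speed (Gbps) and bus width (bits) for the desktop cards.
DESKTOP = {
    "Arc A770": (17.5, 256),
    "Arc A750": (16.0, 256),
    "Arc A580": (16.0, 256),
    "Arc A380": (15.5, 96),
}

for name, (rate, width) in DESKTOP.items():
    channels = width // 32  # GDDR6 channels here are 32 bits wide
    print(f"{name}: {peak_bandwidth_gb_s(rate, width):.0f} GB/s over {channels} channels")
```

The same formula shows what the narrower mobile buses cost: at the 14Gbps most mobile parts use, a 192-bit bus works out to 336 GB/s and a 64-bit bus to 112 GB/s.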

The A380 didn't look particularly impressive in our testing, but the larger chips combined with substantially more memory bandwidth look far more competitive — assuming Intel also competes on price and availability. If the A770 can indeed match or exceed the performance of Nvidia's RTX 3060, and AMD's RX 6650 XT as well, the starting price of $329 will make it worth considering.

Arc Alchemist Die Shots and Analysis

Intel will partner with TSMC and use the N6 process (an optimized variant of N7) for Arc Alchemist. That means it's not technically competing for the same wafers that AMD uses for its Zen 2 and Zen 3 CPUs and its RDNA and RDNA 2 GPUs. At the same time, AMD and Nvidia could also use N6, since its design rules are compatible with N7, so Intel's use of TSMC certainly doesn't help AMD or Nvidia production capacities.

TSMC likely has a lot of tools that overlap between N6 and N7 as well, meaning it could run batches of N6, then batches of N7, switching back and forth. That means there's potential for this to cut into TSMC's ability to provide wafers to other partners. And speaking of wafers...

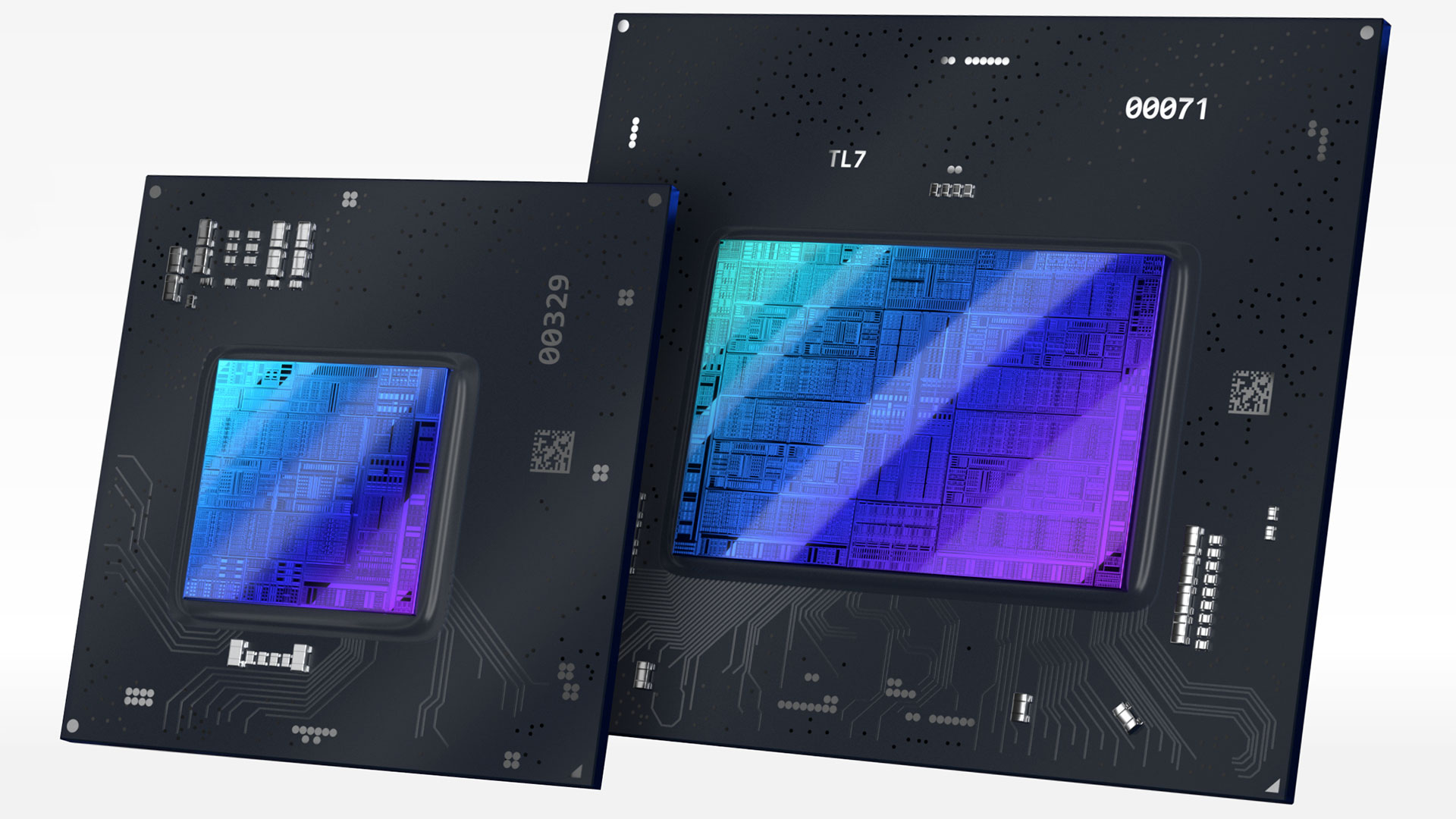

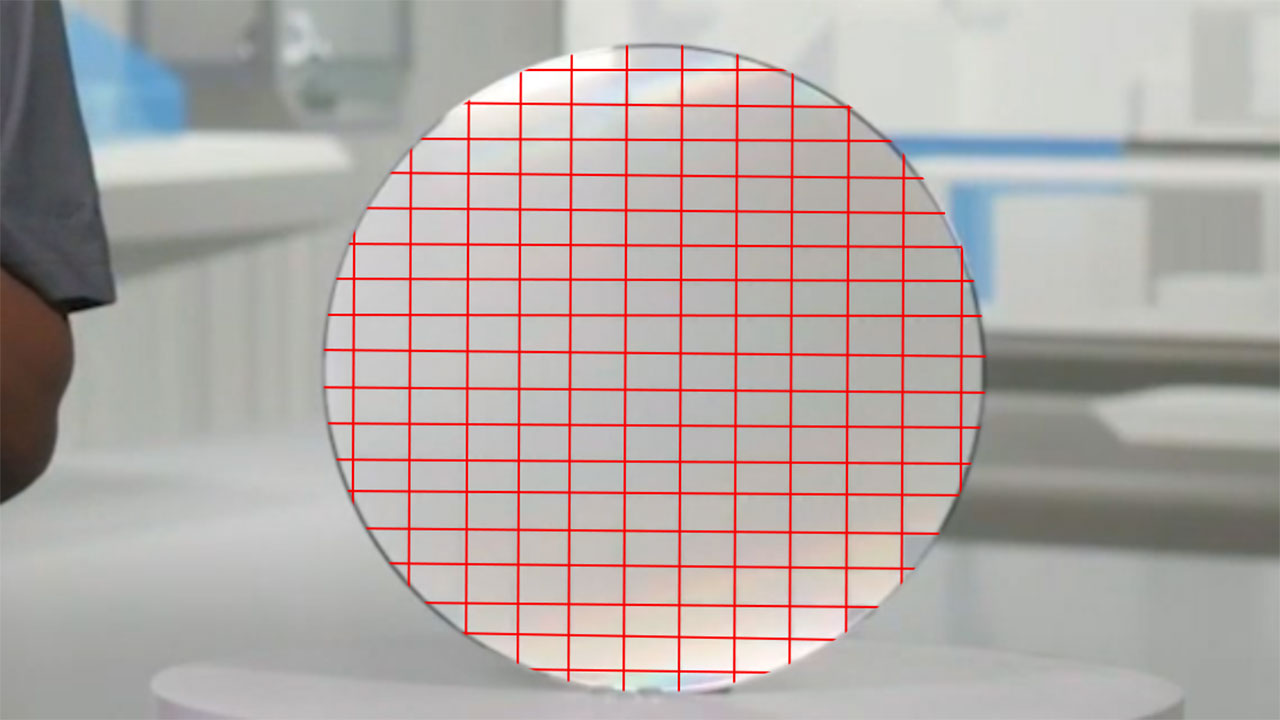

Raja showed a wafer of Arc Alchemist chips at Intel Architecture Day. Grabbing a snapshot of the video and zooming in on the wafer makes the individual chips reasonably clear. We've drawn lines to show how large the chips are, and based on our calculations, it looks like the larger Arc die will be around 24x16.5mm (~396mm^2), give or take 5–10% in each dimension. Other reports state that the die size is actually 406mm^2, so we were pretty close.

That's not a massive GPU. Nvidia's GA102, for example, measures 628mm^2, and AMD's Navi 21 measures 520mm^2. But it's also not small at all. AMD's Navi 22 measures 335mm^2, and Nvidia's GA104 is 393mm^2, so ACM-G10 is larger than Navi 22 and similar in size to GA104, though it's made on a smaller manufacturing process. Still, putting it bluntly: Size matters.
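The wafer-shot estimate and the size comparison are simple arithmetic. The sketch below checks both. Edge lengths, the 5–10% per-edge tolerance, and all die areas come from the text. Treating the tolerance as applying to both edges in the same direction is our simplification, and it gives the widest possible range.

```python
# Check the wafer-shot estimate and compare the die sizes quoted in the text.
# The 406mm^2 ACM-G10 figure is the one from other reports.


def area_bounds(width_mm: float, height_mm: float, tol: float) -> tuple[float, float]:
    """Smallest and largest area if each edge could be off by up to +/- tol."""
    low = width_mm * (1 - tol) * height_mm * (1 - tol)
    high = width_mm * (1 + tol) * height_mm * (1 + tol)
    return low, high


DIE_AREAS_MM2 = {
    "Nvidia GA102": 628,
    "AMD Navi 21": 520,
    "Intel ACM-G10": 406,
    "Nvidia GA104": 393,
    "AMD Navi 22": 335,
}


def acm_g10_ratio(other: str) -> float:
    """ACM-G10 area divided by another die's area (>1 means ACM-G10 is larger)."""
    return DIE_AREAS_MM2["Intel ACM-G10"] / DIE_AREAS_MM2[other]


if __name__ == "__main__":
    estimate = 24.0 * 16.5
    print(f"Wafer-shot estimate: {estimate:.0f} mm^2")
    for tol in (0.05, 0.10):
        low, high = area_bounds(24.0, 16.5, tol)
        print(f"  +/-{tol:.0%} per edge: {low:.0f} to {high:.0f} mm^2")
    for name in DIE_AREAS_MM2:
        print(f"ACM-G10 vs {name}: {acm_g10_ratio(name):.2f}x")
```

Even the tighter 5% band (about 357 to 437mm^2) contains the reported 406mm^2. The ratios put ACM-G10 about 3% larger than GA104 and about 21% larger than Navi 22. Area is only a rough proxy, since these dies use different process nodes.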

This may be Intel's first real dedicated GPU since the i740 back in the late 90s, but it has made many integrated solutions over the years, and it has spent the past several years building a bigger dedicated GPU team. Die size alone doesn't determine performance, but it gives a good indication of how much stuff can be crammed into a design. A chip that's 406mm^2 in size suggests Intel intends to be competitive with at least the RTX 3070 and RX 6700 XT, which is perhaps higher than some were expecting.

Besides the wafer shot, Intel also provided these two die shots for Xe HPG. The larger die has eight clusters in the center area that would correlate to the eight render slices. The memory interfaces are along the bottom edge and the bottom half of the left and right edges, and there are four 64-bit interfaces, for 256-bit total. Then there's a bunch of other stuff that's a bit more nebulous, for video encoding and decoding, display outputs, etc.

The smaller die has two render slices, giving it just 128 Vector Engines. It also only has a 96-bit memory interface (the blocks in the lower-right edges of the chip), which could put it at a disadvantage relative to other cards. Then there's the other 'miscellaneous' bits and pieces, for things like the QuickSync Video Engine. Obviously, performance will be substantially lower than the bigger chip.

While the smaller chip appears to be slower than all the current RTX 30-series GPUs, it does put Intel in an interesting position. The A380 checks in at a theoretical 4.1 TFLOPS, which means it ought to be able to compete with a GTX 1650 Super. It also adds features like hardware AV1 encoding, which no other GPU currently offers, along with AV1 decoding. 6GB of VRAM also gives Intel a potential advantage, and on paper the A380 ought to land closer to the RX 6500 XT than the RX 6400.

That's not currently the case, according to Intel's own benchmarks as well as our own testing (see above), but perhaps further tuning of the drivers could give a solid boost to performance. We certainly hope so, but let's not count those chickens before they hatch.
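The A380's 4.1 TFLOPS figure can be reproduced from its Vector Engine count. In this minimal sketch, the 64 Vector Engines per render slice come from the text (two slices, 128 Vector Engines). Three inputs are our assumptions, not article figures: 8 FP32 lanes per Vector Engine, one fused multiply-add (2 FLOPs) per lane per clock, and a 2.0 GHz A380 clock.

```python
# Reproduce a theoretical FP32 figure from Vector Engine count.
# From the text: two render slices hold 128 Vector Engines (64 per slice).
# Assumptions: 8 FP32 lanes per Vector Engine, one FMA (2 FLOPs) per lane
# per clock, and a 2.0 GHz clock for the A380.

VE_PER_RENDER_SLICE = 128 // 2


def vector_engines(render_slices: int) -> int:
    """Vector Engines for a die with the given number of render slices."""
    return render_slices * VE_PER_RENDER_SLICE


def fp32_tflops(num_ves: int, clock_ghz: float, lanes_per_ve: int = 8) -> float:
    """Peak FP32 TFLOPS, counting each FMA as two floating-point operations."""
    flops_per_clock = num_ves * lanes_per_ve * 2
    # FLOPs per clock x clock in GHz gives GFLOPS; divide by 1000 for TFLOPS.
    return flops_per_clock * clock_ghz / 1000


if __name__ == "__main__":
    small = vector_engines(2)  # smaller die, as used by the A380
    large = vector_engines(8)  # larger die with eight render slices
    print(f"Smaller die: {small} VEs, {fp32_tflops(small, 2.0):.2f} TFLOPS at 2.0 GHz")
    print(f"Larger die: {large} VEs")
```

The same helper scales to the eight-slice die (512 Vector Engines). It says nothing about real game performance, though, which depends heavily on drivers, as our A380 testing showed.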

Will Intel Arc Be Good at Mining Cryptocurrency?

This is hopefully a non-issue at this stage, as the potential profits from cryptocurrency mining have dropped off substantially in recent months. Still, some people might want to know if Intel's Arc GPUs can be used for mining. Publicly, Intel has said precisely nothing about mining potential and Xe Graphics. However, given the data center roots for Xe HP/HPC (machine learning, High-Performance Compute, etc.), Intel has certainly at least looked into the possibilities mining presents, and its Bonanza Mining chips are further proof Intel isn't afraid of engaging with crypto miners. There's also the image above from the Intel Architecture Day presentation, which shows a physical Bitcoin and the text "Crypto Currencies."

Generally speaking, Xe might work fine for mining, but the most popular GPU mining algorithms (mostly Ethash, but also Octopus and KawPow) have performance that's predicated almost entirely on memory bandwidth. Intel's fastest Arc GPUs will use a 256-bit interface. That would yield bandwidth similar to AMD's RX 6800/6800 XT/6900 XT and Nvidia's RTX 3060 Ti/3070, which would, in turn, lead to roughly 60 MH/s for Ethereum mining.

There's also at least one piece of mining software that now supports the Arc A380. While in theory its memory bandwidth would suggest an Ethereum hashrate of around 20-23 MH/s, initial tests showed only around 10 MH/s. Further software tuning could help, but by the time the larger and faster Arc models arrive, Ethereum should have undergone 'The Merge' and transitioned fully to proof of stake.
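The bandwidth-bound hashrates above follow from how Ethash works. Each hash makes 64 reads of 128 bytes from the DAG, or 8 KiB of memory traffic, so bandwidth divided by 8,192 bytes caps the hashrate. This back-of-envelope model is ours rather than the source of the article's figures. It ignores caching, memory timings, and software overhead, which is why real cards, and especially the A380's early result, land below the ceiling.

```python
# Upper bound on Ethash hashrate from memory bandwidth alone.
# Each hash makes 64 DAG reads of 128 bytes (8192 bytes of traffic);
# bus widths and data rates are taken from the text.

ETHASH_BYTES_PER_HASH = 64 * 128  # 64 accesses x 128-byte mix


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
    return bus_width_bits / 8 * data_rate_gbps


def ethash_ceiling_mhs(bandwidth_gbs: float) -> float:
    """Bandwidth-bound Ethash hashrate ceiling in MH/s."""
    return bandwidth_gbs * 1e9 / ETHASH_BYTES_PER_HASH / 1e6


if __name__ == "__main__":
    cards = [("A380", 96, 15.5), ("256-bit @ 16Gbps", 256, 16.0), ("A770", 256, 17.5)]
    for name, bits, rate in cards:
        bw = peak_bandwidth_gbs(bits, rate)
        print(f"{name}: {bw:.0f} GB/s -> at most {ethash_ceiling_mhs(bw):.1f} MH/s")
```

By this estimate, the A380 tops out near 22.7 MH/s and a 256-bit card at 16Gbps near 62.5 MH/s, in line with the figures above.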

If Intel had launched Arc in late 2021 or even early 2022, mining performance might have been a factor. Now, the current crypto-climate suggests that, whatever the mining performance, it won't really matter.

Arc Alchemist Launch Date and Future GPU Plans

The core specs for Arc Alchemist are shaping up nicely, and the use of TSMC N6 and a 406mm^2 die with a 256-bit memory interface all point to a card that should be competitive with the current mainstream/high-end GPUs from AMD and Nvidia, but well behind the top performance models.

As the newcomer, Intel needs the first Arc Alchemist GPUs to come out swinging. As we discussed in our Arc A380 review, however, there's much more to building a good graphics card than hardware. That's probably why the Arc A380 launched in China first: to get the drivers and software ready for the faster offerings and for the rest of the world.

Alchemist represents the first stage of Intel's dedicated GPU plans, and there's more to come. Along with the Alchemist codename, Intel revealed codenames for the next three generations of dedicated GPUs: Battlemage, Celestial, and Druid. Now we know our ABCs, next time won't you build a GPU with me? Those might not be the most awe-inspiring codenames, but we appreciate the logic of going in alphabetical order.

Tentatively, with Alchemist using TSMC N6, we might see a relatively fast turnaround for Battlemage. It could use TSMC's N5 process and ship in 2023, which would perhaps be wise, considering we expect to see Nvidia's Lovelace RTX 40-series GPUs and AMD's RX 7000-series RDNA 3 GPUs in the next few months. Shrink the process, add more cores, tweak a few things to improve throughput, and Battlemage could put Intel on even footing with AMD and Nvidia. Or it could arrive woefully late (again) and deliver less performance.

Intel needs to iterate on future architectures and get them out quickly if it hopes to put some pressure on AMD and Nvidia. Arc Alchemist already slipped from 2021 to a supposed hard launch in Q1 2022, which then changed to Q2 for China and Q3 for the US and other markets. Intel really needs to stop the slippage and get cards out, with fully working drivers, sooner rather than later if it doesn't want a repeat of its old i740 story.

Final Thoughts on Intel Arc Alchemist

The bottom line is that Intel has its work cut out for it. It may be the 800-pound gorilla of the CPU world, but it has stumbled and faltered even there over the past several years. AMD's Ryzen gained ground, closed the gap, and took the lead up until Intel finally delivered Alder Lake and desktop 10nm ("Intel 7" now) CPUs. Intel's manufacturing woes are apparently bad enough that it turned to TSMC to make its dedicated GPU dreams come true.

As the graphics underdog, Intel needs to come out with aggressive performance and pricing, and then iterate and improve at a rapid pace. And please don't talk about how Intel sells more GPUs than AMD and Nvidia. Technically, that's true, but only if you count incredibly slow integrated graphics solutions that are at best sufficient for light gaming and office work. Then again, a huge chunk of PCs and laptops are only used for office work, which is why Intel has repeatedly stuck with weak GPU performance.

We now have hard details on all the Arc GPUs, and we've tested the desktop A380. We even have Intel's own performance data, which was less than inspiring. Had Arc launched in Q1 as planned, it could have carved out a niche. The further it slips into 2022, the worse things look.

Again, the critical elements are going to be performance, price, and availability. The latter is already a major problem, because the ideal launch window was last year. Intel's Xe DG1 was also pretty much a complete bust, even as a vehicle to pave the way for Arc, because driver problems appear to persist. Arc Alchemist sets its sights far higher than the DG1, but with every month that passes, those targets become less compelling.

We should find out how the rest of Intel's discrete graphics cards stack up to the competition in October. Can Intel capture some of the mainstream market from AMD and Nvidia? Time will tell, but we're still hopeful Intel can turn the current GPU duopoly into a triopoly in the coming years — if not with Alchemist, then perhaps with Battlemage.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


InvalidError: It would be nice if the entry-level part really did launch at $200 retail and perform like a $200 GPU should perform, scaled based on process density improvement since the GTX1650S.

VforV: The amount of speculation and (?) in the Specification table is funny... :D

If Arc's top GPU is gonna be 3070 Ti level (best case scenario) and it will cost $600 like the 3070 Ti, it's gonna be a big, BIG fail.

I don't care their name is intel, they need to prove themselves in GPUs and to do that they either need to 1) beat the best GPUs of today 3090/6900XT (which they won't) or to 2) have better, a much better price/perf ratio compared to nvidia and AMD, and also great software.

So option 2 is all that they have. 3070 TI perf level should not be more than $450, better yet $400. And anything lower in performance should also be lower in price, accordingly.

Let's also not forget Ampere and RDNA2 refresh supposedly coming almost at the same time with intel ARC, Q1 2022 (or sooner, even Q4 2021, maybe). Yikes for intel.

JayNor: Intel graphics is advertising a discussion today at 5:30ET on youtube, "Intel Arc Graphics Q&A with Martin Stroeve and Scott Wasson!"

nitts999:
VforV said: If Arc's top GPU is gonna be 3070 Ti level (best case scenario) and it will cost $600 like the 3070 Ti, it's gonna be a big, BIG fail.

Why do people quote MSRPs? There is nowhere you can buy a 3070-TI for $600.

If I could buy an Intel 3070ti equivalent for $600 I would do it in a heartbeat. Availability and "street price" are the only two things that matter. MSRPs for non-existent products are a waste of breath.

-Fran-: As I said before, what will make or break this card is not how good the silicon is in theory, but the driver support.

I do not believe the Intel team in charge of polishing the drivers has had enough time; hell, not even 2 years after release is enough time to get them ready!

I do wish for Intel to do well, since it'll be more competition in the segment, but I have to say I am also scared because it's Intel. Their strong-arming game is worse than nVidia. I'll love to see how nVidia feels on the receiving end of it in their own turf.

Regards.

waltc3: ZZZZz-z-z-z-z-zzzzz....wake me when you have an actual product to review. Until then, we really won't "know" anything, will we?....;) Right now it's just vaporware. It's not only Intel doing that either--there is quite a bit of vaporware-ish writing about currently non-existent nVidia and AMD products as well. Sure is a slow product year....If this sounds uncharitable, sorry--I just don't get off on probables and maybes and could-be's...;)

InvalidError:
Yuka said: I do not believe the Intel team in charge of polishing the drivers has had enough time; hell, not even 2 years after release is enough time to get them ready!

I enabled my i5's IGP to offload trivial stuff and stretch my GTX1050 until something decent appears for $200. Intel's Control Center appears to get fixated on the first GPU it finds, so I can't actually configure UHD graphics with it. Not sure how such a bug/shortcoming in drivers can still exist after two years of Xe IGPs in laptops that often also have discrete graphics.

Intel's drivers and related tools definitely need more work.

-Fran-:
InvalidError said: I enabled my i5's IGP to offload trivial stuff and stretch my GTX1050 until something decent appears for $200. Intel's Control Center appears to get fixated on the first GPU it finds, so I can't actually configure UHD graphics with it. Not sure how such a bug/shortcoming in drivers can still exist after two years of Xe IGPs in laptops that often also have discrete graphics.

Intel's drivers and related tools definitely need more work.

It baffles me how people who have an Intel iGPU have never actually had the experience of suffering while trying to use it for daily stuff and slightly more advanced things than just powering a single monitor (which, at times, it can't even do properly).

I can't even call their iGPU software "barebones", because even basic functionality is sketchy at times. And for everything they've been promising, I wonder how their priorities will turn out to be. I hope Intel realized they won't be able to have the full cake and will have to make a call on either consumer side (games support and basic functionality) or their "pro"/advanced side of things they've been promising (encoding, AI, XeSS, etc).

Yes, I'm being a negative Nancy, but that's fully justified. I'd love to be proven wrong though, but I don't see that happening :P

Regards.

btmedic04:
VforV said: The amount of speculation and (?) in the Specification table is funny... :D

If Arc's top GPU is gonna be 3070 Ti level (best case scenario) and it will cost $600 like the 3070 Ti, it's gonna be a big, BIG fail.

I don't care their name is intel, they need to prove themselves in GPUs and to do that they either need to 1) beat the best GPUs of today 3090/6900XT (which they won't) or to 2) have better, a much better price/perf ratio compared to nvidia and AMD, and also great software.

So option 2 is all that they have. 3070 TI perf level should not be more than $450, better yet $400. And anything lower in performance should also be lower in price, accordingly.

Let's also not forget Ampere and RDNA2 refresh supposedly coming almost at the same time with intel ARC, Q1 2022 (or sooner, even Q4 2021, maybe). Yikes for intel.

I disagree with this. In this market, if anyone can supply a GPU with 3070 Ti performance at $600 and keep production ahead of demand, they are going to sell like hotcakes regardless of who has the fastest GPU this generation. Seeing how far off Nvidia and AMD are from meeting demand currently gives Intel a massive opportunity provided that they can meet or exceed demand.

Yuka said: As I said before, what will make or break this card is not how good the silicon is in theory, but the driver support.

I do not believe the Intel team in charge of polishing the drivers has had enough time; hell, not even 2 years after release is enough time to get them ready!

I do wish for Intel to do well, since it'll be more competition in the segment, but I have to say I am also scared because it's Intel. Their strong-arming game is worse than nVidia. I'll love to see how nVidia feels on the receiving end of it in their own turf.

Regards.

This right here is my biggest concern. How good will the drivers be, and how quickly will updates come? Unlike AMD, Intel has the funding to throw at its driver team and developers, but money can't buy experience creating high-performance drivers. Only time can do that.

I remember the days of ATi, Nvidia, 3dFX, S3, and Matrox to name a few. Those were exciting times and I hope for all of our sake that intel succeeds with Arc. We as consumers need a third competitor at the high end. This duopoly has gone on long enough.

ezst036:
btmedic04 said: In this market, if anyone can supply a GPU with 3070 Ti performance at $600 and keep production ahead of demand, they are going to sell like hotcakes...

In the beginning this may not be possible. AFAIK Intel will source out of TSMC for this, which simply means more in-fighting for the same floor space in the same video card producing fabs.

When Intel gets its own fabs ready to go and can add fab capacity for this specific use case that isn't available today, that's when production can (aim toward?) stay ahead of demand, and the rest of what you said is, I think, probably right.

If TSMC is just reducing Nvidia's and AMD's allocations to make room for Intel's on the fab floor, the videocard production equation isn't changing, unless TSMC shoves someone else to the side in the CPU or any other production area. I suppose that's a thing.