Radeon HD 4850 Vs. GeForce GTS 250: Non-Reference Battle

The Gigabyte GV-N250ZL-1GI: Software And Cooling

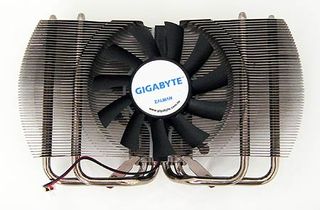

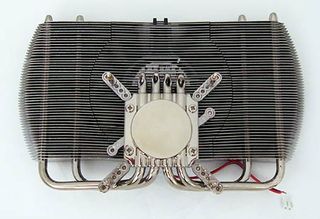

The most striking feature of the card is, of course, its large Zalman cooler, whose four-heatpipe design is elegant, simple, and typical of a Zalman product. The cooler is a Zalman VF1050, a newer version of the tried-and-true VF1000. Buying a Zalman VF1000 alone would set you back $45 on Newegg, so there’s undeniable value in having this cooler as part of the GV-N250ZL-1GI package. A low-cost reference GeForce GTS 250 and a Zalman VF1000 cooler would add up to more than what the Gigabyte card costs.

It’s notable that there is absolutely no cooling applied to the card’s memory, although memory cooling is often a gimmick. While the card takes up two slots, it doesn’t have a vent at the rear of the case, so the hot air from the card's components is deposited back in the case, similar to Asus' EAH4850 MT air-flow design. Unlike the Asus card, however, the Gigabyte cooler is not a "hybrid" unit, so the cooler is always on, although the Zalman fan is so quiet that it’s not really an issue.

Once again, our pre-release sample didn’t include a retail box or software bundle. From promotional shots of the card, we see it will ship with a Molex-to-PCIe converter cable, a DVI-to-HDMI dongle, and an audio cable to channel sound from a sound card to the HDMI output, if desired. Remember that sound over HDMI isn’t natively supported on GeForce GTS 250 cards, so this is a workaround. Notably missing are an SLI connector and a component video dongle, which are things we would expect in a top-tier version of a GeForce GTS 250. Also included are a manual and a driver/utility CD. Gigabyte’s Gamer HUD Lite utility will be on this CD as well, although it can be downloaded from the Gigabyte site if need be.

This is a simple overclocking utility that, when paired with a Gigabyte card that offers voltage control, can be a force to be reckoned with. Unfortunately, unlike Gigabyte’s GeForce 9800 GT, the GV-N250ZL-1GI has no voltage control ability, so voltage controls are missing from the Gamer HUD control panel when it is used with this card. Hence, the "Lite" designation.

Presumably, Gigabyte feels that the Ultra Durable VGA feature will be enough to let the card stretch its legs and be a serious overclocking contender. We’re a little disappointed because we wanted to pit one voltage-tweakable card against another, but we’ll see if Gigabyte’s card has what it takes to stand against Asus’ EAH4850 Matrix later when it comes time for overclocking.

For now, we’ll consider that even the restricted version of Gigabyte’s Gamer HUD Lite application offers everything an overclocker really needs with no interface confusion.

The Gamer HUD Lite utility really has only three settings to change: GPU, shader, and memory clock speeds. You can also enable "2D/3D Auto-Optimized," but from what we saw, it didn’t really do any overclocking at that setting. There is also a handy temperature and GPU usage graph. That’s it! Clean and simple.


Armed with these two unique cards and their proprietary overclocking tools, let’s see how far we can take them.


tuannguyen (quoting rags_20): In the second picture of the 4850, the card can be seen bent due to the weight.

Hi rags_20 -

Actually, the appearance of the card in that picture is caused by barrel or pincushion distortion of the lens used to take the photo. The card itself isn't bent.

/ Tuan

jebusv20: demonhorde665... try not to triple post.

It looks bad... and erratic, and makes the forums/comments system more cluttered than need be.

ps. you're not running the same benchmarks as Tom's, so your results aren't really comparable.

Yes, same game and engine, but for example in Crysis, the frame rates are completely different from the start through to the snowy bit at the end.

pps. are you comparing your card to their card at the same resolution?

alexcuria: Hi,

I've been looking for a comparison like this for several weeks. Thank you, although it didn't help me too much in my decision. I also missed some comments regarding the PhysX, CUDA, DirectX 10 or 10.1, and Havok discussion.

I would be very happy to read a review of the Gainward HD4850 Golden Sample "Goes Like Hell" with the faster GDDR5 memory. If it then CLEARLY takes the lead over the GTS 250 and gets even closer to the HD4870, then my decision will be easy. Less heat, less consumption, and almost the same performance as a stock 4870. Enough for me.

btw. Resolutions I'm most interested in: 1440x900 and 1680x1050 for a 20" monitor.

Thank you

spanner_razor: Under the test setup section the CPU is listed as a Core 2 Duo Q6600; should it not be listed as a quad? Feel free to delete this comment if it is wrong or when you fix the erratum.

KyleSTL: Why a Q6600/750i setup? That is certainly less than ideal. A Q9550/P45 or 920/X58 would have been a better choice in my opinion (and may have exhibited a greater difference between the cards).

B-Unit (quoting zipzoomflyhigh): and no the Q6600 is classified as a C2D. Its two E6600's crammed on one die.

No, it's classified as a C2Q. The E6600 is classified as a C2D.

KyleSTL: ZZFhigh,

Directly from the article on page 11:

Game Benchmarks: Left 4 Dead

Clearly this is not an ideal setup to eliminate the processor from affecting benchmark results of the two cards. Most games are not multithreaded, so the 2.4 GHz clock of the Q6600 will undoubtedly hold back a lot of games since they will not be able to utilize all four cores.

Let’s move on to a game where we can crank up the eye candy, even at 1920x1200. At maximum detail, can we see any advantage to either card?

Nothing to see here, though given the results in our original GeForce GTS 250 review, this is likely a result of our Core 2 Quad processor holding back performance.

To all,

Stop triple posting!


weakerthans4 (quoting the article): The default clock speeds for the Gigabyte GV-N250ZL-1GI are 738 MHz on the GPU, 1,836 MHz on the shaders, and 2,200 MHz on the memory. Once again, these are exactly the same as the reference GeForce GTS 250 speeds.

Later in the article you write: For the sake of argument, let’s say most cards can make it to 800 MHz, which is a 62 MHz overclock. So, for Gigabyte’s claim of a 10% overclocking increase, we’ll say that most GV-N250ZL-1GI cards should be able to get to at least 806.2 MHz on the GPU. Hey, let’s round it up to 807 MHz to keep things clean. Did the GV-N250ZL-1GI beat the spread? It sure did. With absolutely no modifications except to raw clock speeds, our sample GV-N250ZL-1GI made it to 815 MHz rock-solid stable. That’s a 20% increase over an "expected" overclock according to our unscientific calculation.

Your math is wrong. A claim of a 20% overclock on the GV-N250ZL-1GI would equal 885.6 MHz. 10% of 738 MHz = 73.8 MHz, so a 10% overclock would equal 811.8 MHz. 815 MHz is nowhere near 20%. In fact, according to your numbers, the GV-N250ZL-1GI barely lives up to its 10% minimal capability.
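For anyone who wants to check the numbers in this exchange, here is a minimal Python sketch of the arithmetic. The clock values (738, 800, and 815 MHz) come from the quoted article text; the function names are made up for illustration. The second half is only one possible reading of the article's 806.2 MHz figure: 10% applied to the typical 62 MHz overclock rather than to the base clock.

```python
# Clock figures quoted in the article (MHz).
BASE_MHZ = 738.0      # reference GeForce GTS 250 GPU clock
TYPICAL_MHZ = 800.0   # "most cards can make it to 800 MHz"
ACHIEVED_MHZ = 815.0  # what the review sample reached


def overclock_target(base_mhz: float, percent: float) -> float:
    """Clock reached by raising base_mhz by the given percentage."""
    return base_mhz * (1 + percent / 100)


def overclock_percent(base_mhz: float, achieved_mhz: float) -> float:
    """Percentage by which achieved_mhz exceeds base_mhz."""
    return (achieved_mhz - base_mhz) / base_mhz * 100


# Reading 1 (the commenter's): percentages apply to the base clock.
print(round(overclock_target(BASE_MHZ, 10), 1))             # 811.8
print(round(overclock_target(BASE_MHZ, 20), 1))             # 885.6
print(round(overclock_percent(BASE_MHZ, ACHIEVED_MHZ), 2))  # 10.43

# Reading 2 (assumed): 10% is applied to the typical 62 MHz overclock.
typical_gain = TYPICAL_MHZ - BASE_MHZ    # 62 MHz
achieved_gain = ACHIEVED_MHZ - BASE_MHZ  # 77 MHz
print(round(BASE_MHZ + typical_gain * 1.10, 1))               # 806.2
print(round(overclock_percent(typical_gain, achieved_gain), 2))  # 24.19
```

Measured against the base clock, 815 MHz is roughly a 10.4% overclock. Measured against the typical 62 MHz gain, the 77 MHz gain is about 24% larger, which is likely where a figure in the neighborhood of 20% comes from.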
