Intel’s 24-Core, 14-Drive Modular Server Reviewed

Storage Controller Module

The compute modules communicate with the storage controller modules through the chassis's shared SAS connection, which lets the MFS5000SIs use the disks assigned to them in the SAN. The storage controller module also manages the SAN's RAID groups and the disk virtualization underlying the storage pools. Both are configured through the Modular Server Control application on the management module. An interposer card connects the storage controller module to the storage backplane behind the hard disks. A hot-swappable storage controller module is required to run the 14 SAS drives in the MFSYS25's SAN. For redundancy, you should install a second storage controller module that can take over if the first one fails during operation.

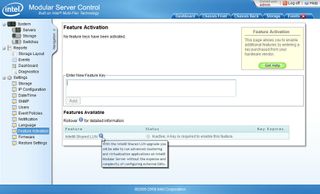

One function not included by default is the shared logical unit number (LUN) feature, which lets two compute modules share the same LUN. To enable it, you must purchase an activation code and enter it through the Feature Activation UI in Modular Server Control.

The front panel of the storage controller module has two additional interfaces. The first is a serial port for accessing the storage module's command-line interface; Intel uses it for low-level debugging and testing.

The second interface is an external SAS connector that, once activated, can be used to add an external SAS device (e.g., tape or disk) to the MFSYS25. I had some difficulty finding information about the external SAS port, but with a little help from our Intel rep, I got a document explaining how to connect the storage controller module to the Promise VTrak e610 and e310 storage devices. It's a decent tutorial, but one limitation of the external SAS port is that you can't manage the newly added direct-attached storage (DAS) from the Modular Server Control UI. Instead, you need to use whatever tool the DAS manufacturer provides.


kevikom This is not a new concept. HP and IBM already have blade servers. HP has one that is 6U and modular, and you can put up to 64 cores in it. Maybe Tom's could compare all of the blade chassis.

sepuko Do the blades in IBM's and HP's solutions have to carry hard drives to operate? Or are you talking about a certain model? I'm lost in your general comparison: "They are not new because those guys have had something similar/the concept is old."

Why isn't the poor network performance addressed as a con? No GigE interface should be producing results at FastE levels, ever.


nukemaster So, when are you gonna start folding on it? :p

Did you contact Intel about that network thing? Their network cards are normally top end. That has to be a bug.

You should have tried rendering 3D images on it. It should be able to flex some muscle there.

MonsterCookie Now frankly, this is NOT a computational server, and I would bet 30% of the price of this thing that the product will be way overpriced and that one could build the same thing from normal 1U servers, like the Supermicro 1U Twin.

The nodes themselves are fine, because the CPUs are fast. The problem is the built-in Gigabit LAN, which is just too slow (neither the throughput nor the latency of Gigabit LAN was meant for these purposes).

In a real computational server, the CPUs should be directly interconnected with something like HyperTransport, or the separate nodes should communicate through built-in InfiniBand cards. The MINIMUM nowadays for a computational cluster would be built-in 10G LAN, plus some software tool that can reduce the TCP/IP overhead and decrease the latency.

Unless it's a typo, they benchmarked older AMD Opterons. The AMD Opteron 200s are based on the 939 socket (I think), which is DDR1 ECC, so there's no way it would stack up to the Intel.


The server could be used as an Oracle RAC cluster, but as noted, you really want better interconnects than 1Gb Ethernet. And I suspect from the setup that it makes a fair VM engine.
