Intel’s 24-Core, 14-Drive Modular Server Reviewed

Main And I/O Cooling Modules

Cooling is a key factor when running the MFSYS25. About 80% of the MFSYS25 front panel is perforated, so if you put your hand in front of the running chassis, you can feel air being drawn in through different parts of the panel. Three cooling modules generate this airflow.

In the rear of the main chassis are two hot-swappable main cooling modules that are used to pull air through the heavily perforated front panels in front of the compute modules. These large modules sit in a vertical arrangement so that each main cooling module is positioned to cover either the top three compute modules or the bottom three.

As the fans spin, air rushes over each compute module’s voltage regulator, CPU, and memory banks before exiting through the main cooling module. Each of these two larger cooling modules contains two 4.5" fans mounted in tandem in a 1+1 redundancy configuration. Each fan plugs into a small circuit board inside the module housing, which in turn plugs into the MFSYS25 midplane.

As mentioned before, each main cooling module cools three of the six compute modules, so a faulty fan should be replaced immediately. The user manual states that hot-swappable cooling devices should be replaced within a minute, especially if the MFSYS25 is fully populated with six compute modules.

The smaller I/O cooling module sits at the front of the main chassis, just below the storage enclosure. Inside this smaller module are six 1.5" fans that run in a 3+3 redundancy configuration. The I/O module pulls in air from the front of the chassis and directs the flow to the back, which cools the I/O devices in the rear of the system. Like the other two cooling modules, the I/O cooling module is hot-swappable.
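To make the redundancy figures concrete, here is a minimal Python sketch of the three cooling modules described above. It assumes the usual reading of an N+N configuration (N fans required, N spares); the class and field names are hypothetical and do not come from Intel's documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoolingModule:
    name: str
    active_fans: int     # fans needed to carry the load (first N in "N+N"; assumed reading)
    redundant_fans: int  # spare fans (second N in "N+N")

    @property
    def total_fans(self) -> int:
        return self.active_fans + self.redundant_fans

    def survives(self, failed_fans: int) -> bool:
        """True if the fans still working cover the active requirement."""
        return self.total_fans - failed_fans >= self.active_fans


# Fan counts as given in the review: 1+1 per main module, 3+3 for the I/O module.
MODULES = [
    CoolingModule("main cooling module (top three compute modules)", 1, 1),
    CoolingModule("main cooling module (bottom three compute modules)", 1, 1),
    CoolingModule("I/O cooling module", 3, 3),
]

for module in MODULES:
    print(f"{module.name}: {module.total_fans} fans, "
          f"tolerates {module.redundant_fans} fan failure(s)")
```

Under this reading, each main module can lose one of its two fans and the I/O module can lose three of its six before the module itself falls short.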

One interesting observation I made during this evaluation was how the entire cooling system behaved when one of the three modules was pulled out. When the chassis loses a cooling component, the remaining two modules go into high gear. Judging from the increased airflow, the chassis appears to be compensating for the missing device. Neither the MFSYS25/MFSYS35 user guide nor the technical product specification manual mentions this behavior, but an Intel representative confirmed by email that it is normal.

I found that if a main cooling module fails, the remaining main cooling module will run at 100% activity and can continue in this state for a while. If the sole I/O cooling module fails, on the other hand, an immediate replacement is necessary.
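The behavior observed here can be written down as a toy model. The sketch below is only an illustration of what I saw, not Intel's firmware logic: the module names, the `cooling_state` function, and the 50% normal duty value are all assumptions made for the example.

```python
ALL_MODULES = frozenset({"main_top", "main_bottom", "io"})


def cooling_state(installed, normal_duty=50):
    """Toy model of the observed behavior (not Intel's control logic).

    installed   -- names of the cooling modules currently in the chassis
    normal_duty -- hypothetical fan duty (%) when nothing is missing
    """
    installed = frozenset(installed)
    unknown = installed - ALL_MODULES
    if unknown:
        raise ValueError(f"unknown cooling module(s): {sorted(unknown)}")

    missing = ALL_MODULES - installed
    # Observed: when any module is missing, the remaining ones ramp up to full speed.
    duty = 100 if missing else normal_duty
    return {
        "duty": {name: duty for name in sorted(installed)},
        # Per the review: there is only one I/O module, so its loss needs an immediate swap.
        "replace_immediately": "io" in missing,
    }


print(cooling_state({"main_top", "main_bottom", "io"}))
print(cooling_state({"main_top", "io"}))       # one main module pulled
print(cooling_state({"main_top", "main_bottom"}))  # I/O module pulled
```

The only distinction the model draws is the one described above: a missing main module leaves its twin running flat out, while a missing I/O module has no counterpart to fall back on.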


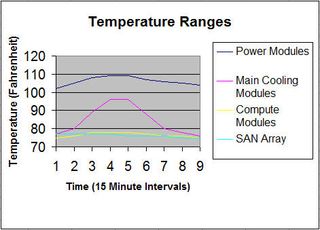

I couldn’t wait to test the overall heat coming out of the MFSYS25 at different compute module configurations. After bringing up all three compute modules (one at a time), I sequentially shut them down until I was just running the chassis by itself. The startup and shutdown of the compute modules were completed in 15-minute intervals.

I started with just the chassis and the disk drives powered on. Note that the compute modules were in their bays the whole time, but no command to start them up was issued until later.

I then powered on the compute modules at 15-minute intervals. By interval 4, you can see that the amount of heat coming out of the main cooling modules has increased dramatically.

As might be expected, as I powered up more compute modules and power consumption increased, there was a significant increase in heat, not only from the newly powered-up components, but from the power supply modules as well. The air coming out of the main cooling modules warmed by at least 19 degrees Fahrenheit as they pulled hot air away from the three compute modules. If I had loaded the chassis with three more compute modules, I’m sure the line representing the main cooling modules would be close to the power modules’ temperatures.

At interval 5, I started shutting down the compute modules at 15-minute intervals. This “shutdown” test produced an almost mirror image of the first half, when everything was booting up. Note that the temperature at the compute modules’ front panel only went up and down by three degrees. This suggests that the CPUs near the front of the compute modules were being well ventilated by the flow of fresh air coming in from the front of the chassis.
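For readers who think in Celsius, note that these figures are temperature differences, so only the 5/9 scale factor applies and the 32-degree offset drops out. A minimal sketch of the conversion (the function name is my own):

```python
def fahrenheit_delta_to_celsius(delta_f: float) -> float:
    """Convert a temperature *difference* from Fahrenheit to Celsius degrees.

    Differences scale by 5/9; the 32-degree offset only applies to absolute readings.
    """
    return delta_f * 5.0 / 9.0


print(round(fahrenheit_delta_to_celsius(19), 1))  # main cooling module rise -> 10.6
print(round(fahrenheit_delta_to_celsius(3), 1))   # front-panel swing -> 1.7
```

So the 19-degree rise at the main cooling modules is roughly 10.6 degrees Celsius, and the three-degree swing at the front panel is under two.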


kevikom: This is not a new concept. HP & IBM already have Blade servers. HP has one that is 6U and is modular. You can put up to 64 cores in it. Maybe Tom's could compare all of the blade chassis.

sepuko: Are the blades in IBM's and HP's solutions having to carry hard drives to operate? Or are you talking of certain model or what are you talking about anyway I'm lost in your general comparison. "They are not new cause those guys have had something similar/the concept is old."

Why isn't the poor network performance addressed as a con? No GigE interface should be producing results at FastE levels, ever.

nukemaster: So, when you gonna start folding on it :p

Did you contact Intel about that network thing? Their network cards are normally top end. That has to be a bug.

You should have tried to render 3D images on it. It should be able to flex some muscles there.

MonsterCookie: Now frankly, this is NOT a computational server, and I would bet 30% of the price of this thing that the product will be way overpriced and one could build the same thing from normal 1U servers, like Supermicro 1U Twin.

The nodes themselves are fine, because the CPUs are fast. The problem is the built-in Gigabit LAN, which is just too slow (neither the throughput nor the latency of the GLan was meant for these purposes).

In a real computational server the CPUs should be directly interconnected with something like Hyper-Transport, or the separate nodes should communicate through built-in Infiniband cards. The MINIMUM nowadays for a computational cluster would be 10G LAN built in, and some software tool which can reduce the TCP/IP overhead and decrease the latency.

less its a typo the bench marked older AMD opterons. the AMD opteron 200s are based off the 939 socket(i think) which is ddr1 ecc. so no way would it stack up to the intel.

The server could be used as an Oracle RAC cluster. But as noted you really want better interconnects than 1gb Ethernet. And I suspect from the setup it makes a fair VM engine.
