Gaming At 3840x2160: Is Your PC Ready For A 4K Display?

We got our hands on Asus' PQ321Q Ultra HD display with a resolution of 3840x2160. Anxious to game on it, we pulled out our GeForce GTX Titan, 780, and 770 cards for a high-quality romp through seven of our favorite titles. What do you need to game at 4K?

Results: Crysis 3

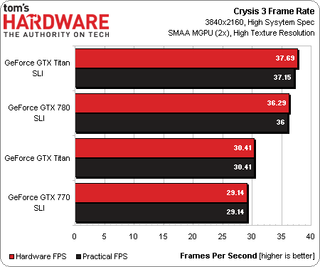

Our Crysis 3 benchmark is a manual run-through that’s typically pretty consistent. The first time we ran FCAT scripts on the output, though, it was clear that v-sync had switched on in a couple of cases, even though it was disabled in the game. As a result, we re-ran several configurations with v-sync forced off in Nvidia’s driver. The performance figures changed in response, but the average frame rates suggest something is still amiss in Crysis 3. Two GeForce GTX 770s should be faster than one Titan, and we were really hoping for more scaling from a pair of $1000 cards.
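Here, "scaling" means how much faster two cards run than one. A minimal Python sketch of that arithmetic, assuming scaling is simply the multi-GPU average frame rate divided by the single-card average. The helper `sli_scaling` and the sample numbers are hypothetical illustrations, not our measured Crysis 3 results:

```python
def sli_scaling(single_fps: float, multi_fps: float, gpus: int = 2) -> tuple[float, float]:
    """Return (speedup, efficiency) of a multi-GPU setup versus one card.

    Assumed definitions (hypothetical helper, not an FCAT metric):
      speedup    = multi_fps / single_fps
      efficiency = speedup / gpus, where 1.0 means perfect linear scaling
    """
    if single_fps <= 0 or gpus < 1:
        raise ValueError("single_fps must be positive and gpus must be >= 1")
    speedup = multi_fps / single_fps
    return speedup, speedup / gpus


# Hypothetical averages, NOT measured results from this article
speedup, efficiency = sli_scaling(single_fps=20.0, multi_fps=30.0)
print(f"speedup {speedup:.2f}x, efficiency {efficiency:.0%}")  # speedup 1.50x, efficiency 75%
```

By this measure, two cards that deliver only 1.5x the frame rate of one are scaling at 75% efficiency; 100% would mean a full doubling.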

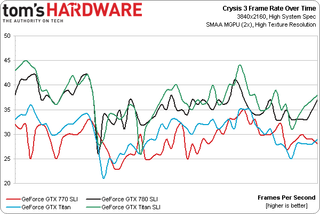

Charting frame rate over time shows the GeForce GTX 770s in SLI and the single Titan trading blows, with the 780s in SLI and the Titans in SLI behaving similarly to each other.
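A frame-rate-over-time chart can be built from per-frame render times like those a capture tool produces. The sketch below assumes a plain, ordered list of frame times in milliseconds (a hypothetical input format, not FCAT's actual output) and counts how many frames finish within each second of the run. The function name is hypothetical:

```python
import math


def fps_per_second(frame_times_ms: list[float]) -> list[int]:
    """Count how many frames finish within each whole second of a run.

    Assumes frame_times_ms holds positive per-frame render times in
    milliseconds, in order (hypothetical input format).
    """
    counts: list[int] = []
    elapsed_ms = 0.0
    for frame_time in frame_times_ms:
        elapsed_ms += frame_time
        # A frame finishing exactly at 1000 ms still belongs to second 0.
        second = max(math.ceil(elapsed_ms / 1000.0) - 1, 0)
        while len(counts) <= second:
            counts.append(0)  # seconds with no completed frames count as 0 FPS
        counts[second] += 1
    return counts


# Hypothetical run: one second at 40 FPS, then one second at 20 FPS
print(fps_per_second([25.0] * 40 + [50.0] * 20))  # [40, 20]
```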

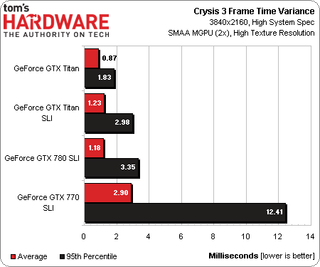

Big spikes again affect the 770s, though average frame time variance continues to show Nvidia’s multi-GPU solutions yielding a fairly consistent experience.
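Frame time variance captures how evenly frames are delivered, which an average frame rate hides. The exact formula isn't spelled out here, so the sketch below assumes a simple stand-in: the mean absolute change between consecutive frame times. The function name and sample data are hypothetical:

```python
from statistics import mean


def avg_frame_time_variance(frame_times_ms: list[float]) -> float:
    """Mean absolute difference between consecutive frame times, in ms.

    Assumption: a simplified stand-in for 'frame time variance', not
    necessarily the exact formula behind the charts in this article.
    """
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frame times")
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    return mean(deltas)


smooth = [25.0, 26.0, 25.0, 26.0]   # hypothetical, even pacing
jittery = [20.0, 31.0, 20.0, 31.0]  # hypothetical, alternating long and short frames
print(mean(smooth), mean(jittery))  # 25.5 25.5
print(avg_frame_time_variance(smooth), avg_frame_time_variance(jittery))  # 1.0 11.0
```

Both runs average 25.5 ms per frame (about 39 FPS), yet the second would feel noticeably less smooth. Because this metric is itself an average, a handful of large spikes can still hide inside a low overall number, which is why the 770s' spikes are worth calling out separately.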

What I will say is that, in a game like Crysis 3, once performance dips below 30 FPS, your ability to react quickly falls off very fast. Because this game requires a manual run-through, I was painfully aware on both the single-Titan and 770 SLI systems that low frame rates were getting me shot more often, forcing me to restart my run. Pairs of 780s and Titans helped with this immensely.
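The 30 FPS line corresponds to a frame-time budget of 1000 / 30 ≈ 33.3 ms. One way to quantify how much of a run falls below it, sketched in Python under the assumption that you have per-frame times in milliseconds (the function name and data are hypothetical):

```python
FPS_THRESHOLD = 30.0
FRAME_BUDGET_MS = 1000.0 / FPS_THRESHOLD  # about 33.3 ms per frame


def time_below_threshold(frame_times_ms: list[float], budget_ms: float = FRAME_BUDGET_MS) -> float:
    """Fraction of total run time spent on frames slower than budget_ms."""
    total_ms = sum(frame_times_ms)
    if total_ms <= 0:
        raise ValueError("frame times must sum to a positive value")
    slow_ms = sum(t for t in frame_times_ms if t > budget_ms)
    return slow_ms / total_ms


run = [30.0, 30.0, 40.0, 50.0, 30.0, 20.0]  # hypothetical capture, in ms
print(f"{time_below_threshold(run):.0%} of the run below 30 FPS")  # 45% of the run below 30 FPS
```

The fraction is weighted by time rather than by frame count: only two of the six frames are slow, but they occupy 90 of the 200 ms, which better reflects how long a player is actually stuck with sluggish response.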

RascallyWeasel Is it really necessary to use anti-aliasing at this resolution? If anything, it would only hurt average FPS without really giving much of a visual improvement.

ubercake Great review! It's good to see this information available.

I know you want to leave AMD out of it, since they still haven't finished fixing the runt/dropped-frame microstutter issue through their promised driver updates (actually, I thought it was all supposed to be done with the July 31 update?), but people constantly argue that AMD cards would be superior at 4K because of this or that. Maybe after they release the new flagship?

At any rate, I won't buy a 4K 60Hz screen until the price drops under the $1K mark. I really wish they could make higher-res monitors with a faster refresh rate like 120Hz or 144Hz, but that doesn't seem to be the goal. There must be more money in higher resolution than in higher refresh. It makes sense, but when they drop the refresh rate down to 30Hz, it seems like too much of a compromise.

CaedenV Hey Chris!

So 2GB of RAM on the 770 was not enough for quite a few games... but just how much vRAM is enough? By chance, did you peek at the usage on the other cards?

With next-gen consoles having access to absolutely enormous amounts of memory on dedicated hardware for 1080p screens, I am very curious to see how much memory gaming PCs will need to run these same games at 4K. I still think that 8GB of system memory will be adequate, but we are going to start needing 4+GB of vRAM just at the 1080p level soon enough, which is kinda ridiculous.

Anywho, great article! Can't wait for 4K gaming to go mainstream over the next 5 years!

shikamaru31789 So it's going to be a few years and a few graphics card generations before we see 4K gaming become the standard, something that can be done on a single mid-to-high-end video card. By that time, the price of 4K TVs/monitors should have dropped to an affordable point as well.

Cataclysm_ZA So no one figures that benchmarking a 4K monitor at lower settings with weaker GPUs would be a good feature and reference for anyone who wants to invest in one soon but doesn't have anything stronger than a GTX 770? Geez, finding that kind of information is proving difficult.

cypeq Cool. Yet I can't stop thinking that I could put $5,000 toward something better than a gaming rig that can smoothly run this $3,500 screen.

CaedenV Quoting RascallyWeasel: "Is it really necessary to use anti-aliasing at this resolution? If anything it would only hurt average FPS without really giving much of a visual increase."

This is something I am curious about as well. AnandTech did a neat review a few months ago in which they compared the different AA settings and found that while there was a noticeable improvement at 2x, higher settings quickly became unnecessary... but that is on a 31" screen. I don't know about others, but I am hoping to (eventually) replace my monitor with a 4K TV in the 42-50" range, and I wonder whether, with the larger pixels, higher AA would be needed on a screen that size compared to smaller screens (though I sit quite a bit further from my screen than most people do, so maybe it would be a wash?).

With all of the crap math out on the internet, it would be very nice for someone at Tom's to do a real 4K review to establish some real, testable facts on the matter. What can the human eye technically see? What UI scaling options are needed, and so on? 4K is very important, as it holds real promise of being a sort of end to resolution improvements for entertainment in the home. There is a chance for 6K to make an appearance down the road, but once you get up to 8K, you start having physical dimension issues with getting a TV through the doors of a normal house, and on a computer monitor you are talking about a true IMAX experience, which could be had much cheaper with a future headset. Anywho, maybe once a few 4K TVs and monitors get out on the market, we can have a sort of round-up or buyer's guide to set things straight?

daglesj So those of us who are married, living with a partner, or no longer living with our parents need not apply, then?

I think there is a gap in the market for an enthusiast PC website that caters to those who live in the real world with real-life budgets.
