Silicon Motion SM2256 Preview

Introduction And Specifications

Advances in controller error correction technology allow SSD makers to utilize low-cost flash in value-oriented solid-state drives. As manufacturing lithography shrinks, the number of rated program-erase (P/E) cycles decreases. Available P/E cycles take another hit moving from two- to three-bit-per-cell flash.

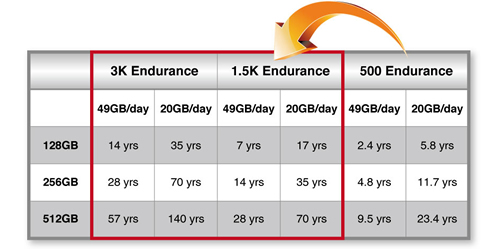

For several years, we had NAND rated for 5000 cycles. Then it was 3000 cycles. Now, that stuff is starting to trail off, displaced by 1000-cycle flash that is already appearing in today's low-cost consumer SSDs. This will soon give way to 500-cycle flash, which is what you'd find in thumb drives.

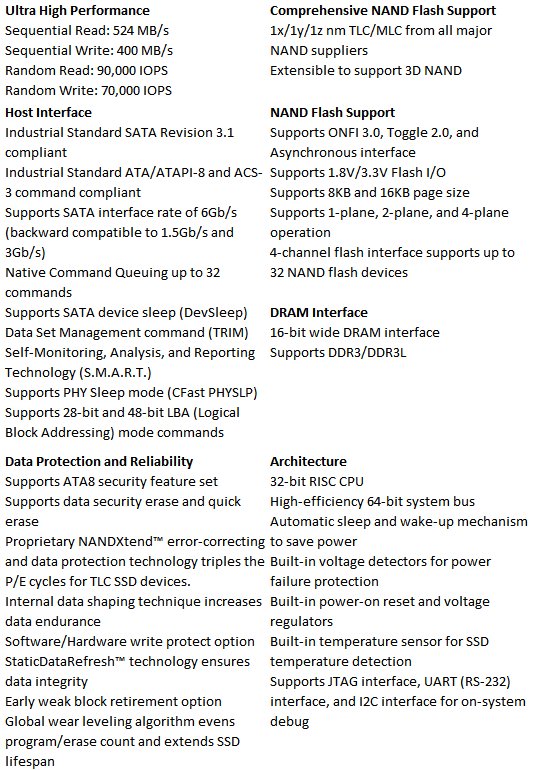

As far as we can tell, every SSD controller maker is working towards extending the life of low-endurance flash. They all have a "special sauce" and a trademarked marketing name. The proprietary software accompanies Low-Density Parity-Check code (LDPC), which uses a very small amount of space to build error correction data, leaving more capacity for users. Silicon Motion's next-generation ECC code for solid-state storage products just so happens to be called NANDXtend.

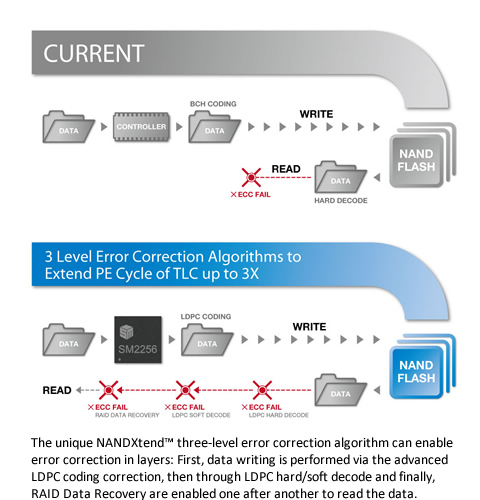

Most of today's SSDs employ BCH code to correct bit errors. The controller vendors add their own spin to enhance the technology as well. When a bit fails, the controller does what is called a read retry. This adds latency and consumes more power compared to a successful read.

Next-gen error correction technology is progressive. Not every bit needs to be dug out with a big shovel. If the deepest method were used for all correction, latency would be higher across the board and power consumption wouldn't look so pretty. Silicon Motion's NANDXtend is a three-level algorithm that progresses through the ECC levels as needed until your data is error-free.
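The progressive approach can be sketched in a few lines of Python. This is a toy model, not Silicon Motion's firmware: the stage names, correction strengths and relative costs below are assumptions made up for illustration, and each "decoder" simply succeeds when the page's error count falls within its assumed strength.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Page:
    payload: bytes   # the data we hope to get back
    bit_errors: int  # toy stand-in for how corrupted the raw read is


@dataclass(frozen=True)
class Stage:
    name: str             # hypothetical label, not a vendor term
    max_correctable: int  # hypothetical correction strength (bit errors)
    relative_cost: int    # hypothetical latency/power units

    def decode(self, page: Page) -> Optional[bytes]:
        # A real decoder would run ECC math; here we only compare counts.
        if page.bit_errors <= self.max_correctable:
            return page.payload
        return None


# Three levels, cheapest first. All numbers are illustrative assumptions.
STAGES = (
    Stage("fast decode", 40, 1),
    Stage("deeper decode", 80, 5),
    Stage("parity rebuild", 200, 20),
)


def progressive_read(page: Page,
                     stages: Sequence[Stage] = STAGES) -> Tuple[bytes, str, int]:
    """Try each stage in order and stop at the first one that succeeds."""
    cost = 0
    for stage in stages:
        cost += stage.relative_cost
        data = stage.decode(page)
        if data is not None:
            return data, stage.name, cost
    raise IOError("page is uncorrectable at every level")


if __name__ == "__main__":
    for errors in (10, 60, 150):
        _, name, cost = progressive_read(Page(b"user data", errors))
        print(f"{errors} bit errors: recovered by {name}, cost {cost}")
```

With these assumed numbers, a lightly damaged page is recovered at the first level for a cost of 1, while a badly damaged page walks through all three levels for a cost of 26. Only the pages that need the big shovel pay for it.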

Silicon Motion also incorporates another layer of data protection, RAID Data Recovery (RDR), which doesn't require excessive over-provisioning to implement. The custom version reportedly takes just 0.1% of the flash capacity.

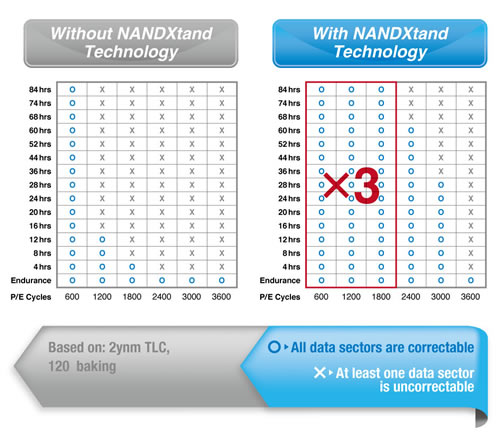

Silicon Motion's internal testing with a 120-degree bake to accelerate the degradation process shows a 3x endurance increase.


The end result is that SSD vendors can use lower-cost, lower-endurance flash and still guarantee the same number of writes per day that we have now.
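To see how rated P/E cycles relate to a writes-per-day figure, here is a back-of-the-envelope sketch. The three-year warranty and the write amplification factor of 2 are assumptions chosen for illustration, not figures from Silicon Motion, and the model ignores over-provisioning and data-retention effects.

```python
def drive_writes_per_day(pe_cycles: float,
                         warranty_years: float = 3.0,
                         write_amplification: float = 2.0) -> float:
    """Full-drive host writes per day the flash can absorb over the warranty.

    On average, one full-drive host write consumes `write_amplification`
    P/E cycles per cell, so drive capacity cancels out of the ratio.
    """
    warranty_days = warranty_years * 365
    return pe_cycles / (write_amplification * warranty_days)


def total_host_writes_tb(capacity_gb: float,
                         pe_cycles: float,
                         write_amplification: float = 2.0) -> float:
    """Total host data (in TB) that can be written before the flash wears out."""
    return capacity_gb * pe_cycles / write_amplification / 1000


if __name__ == "__main__":
    # Rated cycle counts mentioned earlier in the article.
    for rated in (5000, 3000, 1000, 500):
        plain = drive_writes_per_day(rated)
        extended = drive_writes_per_day(rated * 3)  # the claimed 3x increase
        print(f"{rated}-cycle flash: {plain:.2f} DWPD, "
              f"{extended:.2f} DWPD with 3x endurance")
```

In this simplified model, 1000-cycle flash with a 3x endurance gain sustains the same daily writes as unassisted 3000-cycle flash, which is the trade the controller vendors are counting on.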

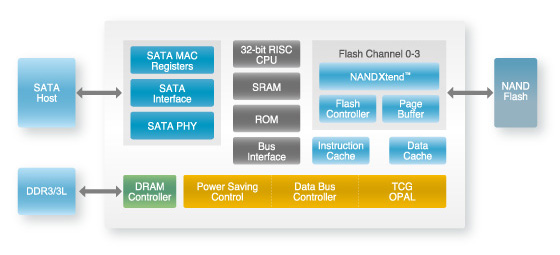

The Silicon Motion SM2256 Four-Channel SSD Controller

It's important to understand that Silicon Motion doesn't make SSDs you can buy. The company builds controllers, firmware and reference drives so companies like Intel, Crucial and Corsair can create the final product to sell. Silicon Motion's SM2246EN was the company's breakout controller, and it has several design wins. Crucial recently released its BX100 series with the SM2246EN, and SanDisk has a model of its own. That's a big change from the Marvell controllers both companies featured in the past.

The specifications gleaned from Silicon Motion's marketing material cite performance with Toshiba A19 TLC flash. But today we have a special treat on our test bench: the SM2256 controller with Samsung's 19nm TLC flash. This is the same NAND that shipped in Samsung's 840 EVO drives.


Chris Ramseyer was a senior contributing editor for Tom's Hardware. He tested and reviewed consumer storage.

Maxx_Power

I hope this doesn't encourage manufacturers to use even lower endurance NAND in their SSDs.

InvalidError

It most likely will. Simply going from 20-22nm TLC to 14-16nm will likely ensure that, with smaller trapped charge, increased leakage, and more exotic dielectric materials to keep the first two in check.

unityole

This is the way we are heading. The only way to get a good-grade SSD is probably to spend big, good grade meaning HET MLC flash and PCIe 3.0 performance.

alextheblue

As long as they don't corrupt data, I'm not really freaking out over endurance. When a HDD died, that was a major concern. If an SSD that's a few years old starts to really reach the end of its useful life, as long as I can recover everything and dump it onto a new drive, I'm happy.

If you have a really heavy workload though, by all means get a high-end unit.

JoeMomma

I am using a small SSD for Windows that is backed up weekly. Saved my butt last week. Speed is key. I can provide my own reliability.

jasonkaler

Here's an idea:

While they're making 3D NAND, why don't they add an extra layer for parity and then use the RAID-5 algorithm?

e.g. 8 layers for data, adding 1 extra for parity. Not that much extra overhead, but data will be much more reliable.

InvalidError

SSD/MMC controllers already use FAR more complex and rugged algorithms than plain parity. Parity only lets you detect single-bit errors. It only allows you to "correct" errors if you know where the error was, such as a drive failure in a RAID3/5 array. If you want to correct arbitrary errors without knowing their location beforehand, you need block codes and those require about twice as many extra bits as the number of correctable bit-errors you want to implement. (I say "about twice as many" because twice is the general requirement for uncorrelated, non-deterministic errors. If typical failures on a given media tend to be correlated or deterministic, then it becomes possible to use less than two coding bits per correctable error.)

jasonkaler

No you don't. Each sector has CRC right?

So if the sector read fails CRC, simply calculate CRC replacing each layer in turn with the RAID parity bit.

All of them will be off except for one with the faulty bit.

And these CRCs can all be calculated in parallel so there would be 0 overhead with regard to time.

Easy huh?

InvalidError

If you know which drive/sector is bad thanks to a read error, your scheme is needlessly complicated: you can simply ignore ("erase") the known-bad data and calculate it by simply XORing all remaining volumes. But you needed the extra bits from the HDD's "CRC" to know that the sector was bad in the first place.

In the case of a silent error though, which is what you get if you have an even-count bit error when using parity alone, you have no idea where the error is or even that there ever was an error in the first place. That's why more complex error detection and correction block codes exist and are used wherever read/receive errors carry a high cost, such as performance, reliability, monetary cost or loss of data.
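The two cases in the comment above can be illustrated with a short Python sketch. It is a generic XOR-parity demonstration under made-up data, not how any particular SSD controller or RAID implementation works; the block contents and helper names are hypothetical.

```python
from functools import reduce
from typing import List, Sequence


def xor_blocks(blocks: Sequence[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(blocks: Sequence[bytes], parity: bytes,
                    missing_index: int) -> bytes:
    """Erasure case: the bad block's position is known, so XOR the rest."""
    survivors = [blk for i, blk in enumerate(blocks) if i != missing_index]
    return xor_blocks(survivors + [parity])


def parity_check_passes(blocks: Sequence[bytes], parity: bytes) -> bool:
    """Parity-only check: does the stored parity still match the data?"""
    return xor_blocks(blocks) == parity


# Hypothetical three-volume stripe plus its parity block.
DATA: List[bytes] = [b"\x0f\xa0", b"\x33\x55", b"\xc3\x3c"]
PARITY = xor_blocks(DATA)


def flip_lowest_bit(block: bytes) -> bytes:
    """Corrupt a block by flipping bit 0 of its first byte."""
    return bytes([block[0] ^ 0x01]) + block[1:]


# One flipped bit changes the parity, so the error is detected
# (though parity alone cannot say which volume is wrong).
ONE_ERROR = [flip_lowest_bit(DATA[0]), DATA[1], DATA[2]]

# The same bit flipped in two volumes cancels out: a silent error.
TWO_ERRORS = [flip_lowest_bit(DATA[0]), DATA[1], flip_lowest_bit(DATA[2])]

if __name__ == "__main__":
    print("rebuilt volume 1 correctly:",
          rebuild_missing(DATA, PARITY, 1) == DATA[1])
    print("single-bit error detected:",
          not parity_check_passes(ONE_ERROR, PARITY))
    print("double-bit error detected:",
          not parity_check_passes(TWO_ERRORS, PARITY))
```

The rebuild works only because the caller supplies `missing_index`; with parity alone there is nothing to supply it, and the double-flip case passes the check even though the data is wrong.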

Eggz

I know this article was about the SM2256, but the graphs really made the SanDisk PRO shine bright! In the latency tests, which content creators care about, nothing seemed to faze it, doing better than even the 850 Pro - consistently!

BUT, one significant critique I have was the density limitation. Everything here was based on the ~250 GB drives. Comparing a drive with the exact name, but in a different density, is akin to comparing two entirely different drives.

I realize producing the data can be time consuming, but having the same information at three density points would be extremely helpful for purchasing decisions - lowest, highest, and middle densities.