Nvidia Betting its CUDA GPU Future With 'Fermi'

This chip is going to be huge for the supercomputing market -- if Nvidia has its way.

The video card has evolved into what we now call the GPU, thanks to the growing capability of the hardware. Now the GPU is about to take its next big leap toward becoming a specialized GPGPU (of course, we realize that the terms "specialized" and "general purpose" are somewhat contradictory).

Nvidia is betting heavily on GPGPUs becoming a major need in the computing market. While we'll still need our GPUs to push pixels for our 3D games, Nvidia has just revealed its next-generation CUDA architecture, codenamed "Fermi."

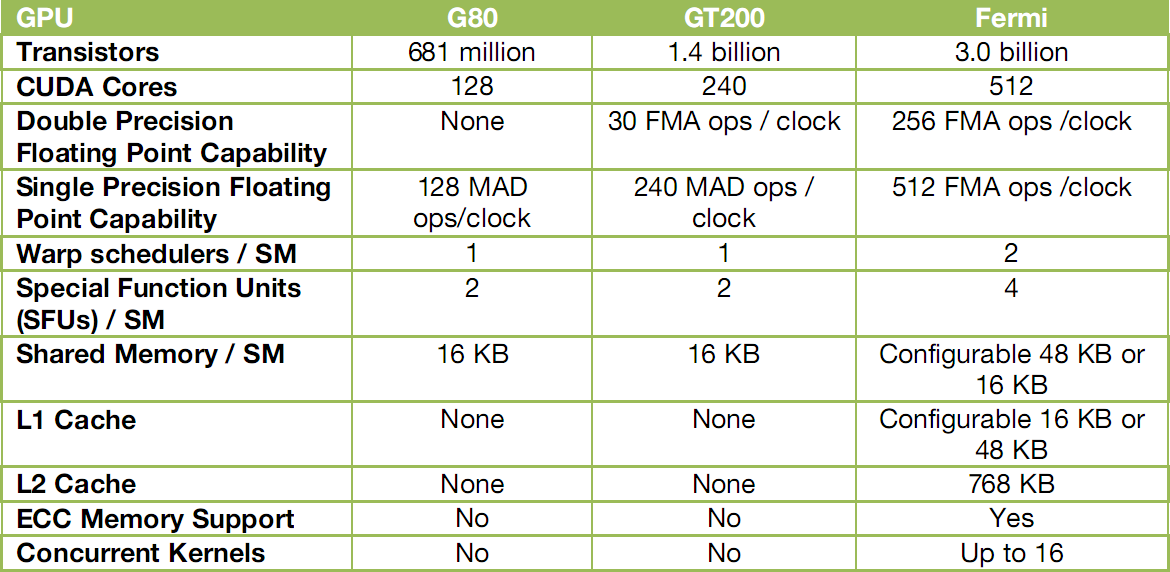

Nvidia bills Fermi as an entirely new ground-up design that will finally realize the potential of GPU computing. Although Nvidia made big steps with its G80 and later the GT200, the graphics maker has made Fermi a much more pleasant and useful tool for programmers.

“The first two generations of the CUDA GPU architecture enabled Nvidia to make real in-roads into the scientific computing space, delivering dramatic performance increases across a broad spectrum of applications,” said Bill Dally, chief scientist at Nvidia.

“It is completely clear that GPUs are now general purpose parallel computing processors with amazing graphics, and not just graphics chips anymore,” said Jen-Hsun Huang, co-founder and CEO of Nvidia. “The Fermi architecture, the integrated tools, libraries and engines are the direct results of the insights we have gained from working with thousands of CUDA developers around the world. We will look back in the coming years and see that Fermi started the new GPU industry.”

At the unveiling, Nvidia did not give anything away in terms of clock speeds or any of the other specifications that hardcore 3D gamers focus on. Instead, it talked about technical features that lend themselves specifically to GPU computing. Such technologies include:

- C++, complementing existing support for C, Fortran, Java, Python, OpenCL and DirectCompute.

- ECC, a critical requirement for datacenters and supercomputing centers deploying GPUs on a large scale

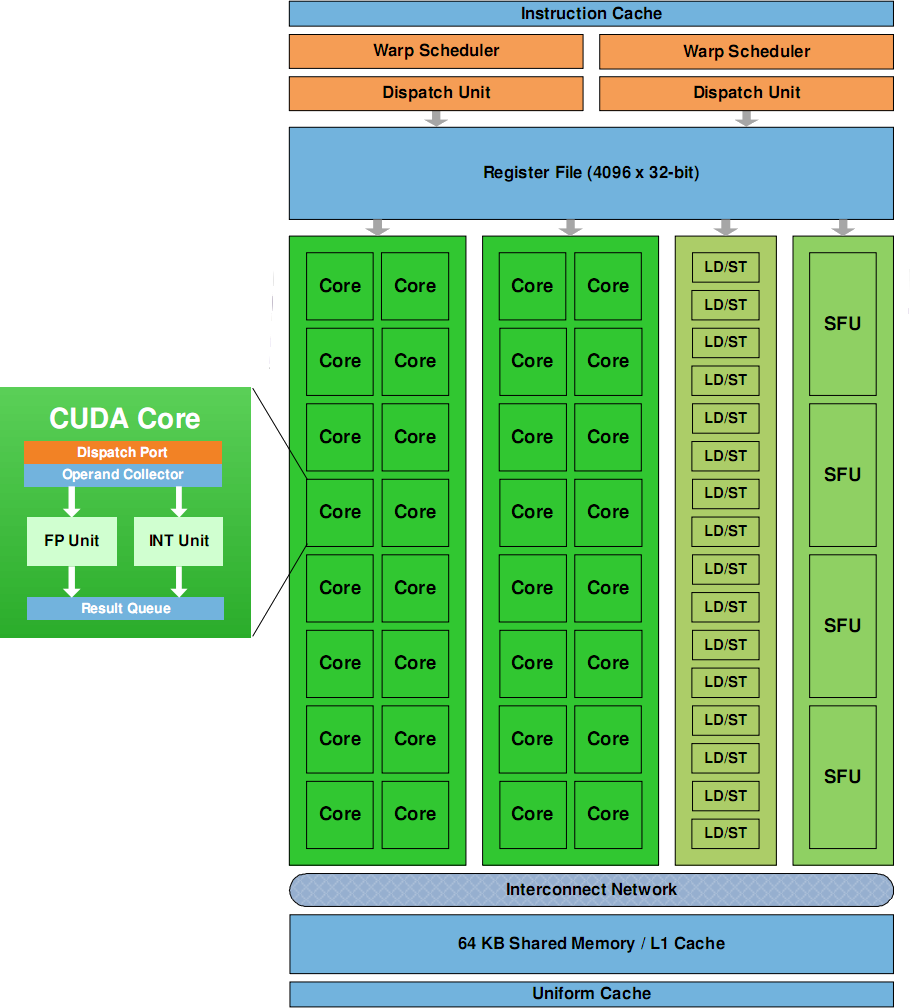

- 512 CUDA Cores featuring the new IEEE 754-2008 floating-point standard, surpassing even the most advanced CPUs

- 8x the peak double precision arithmetic performance over Nvidia’s last generation GPU. Double precision is critical for high-performance computing (HPC) applications such as linear algebra, numerical simulation, and quantum chemistry

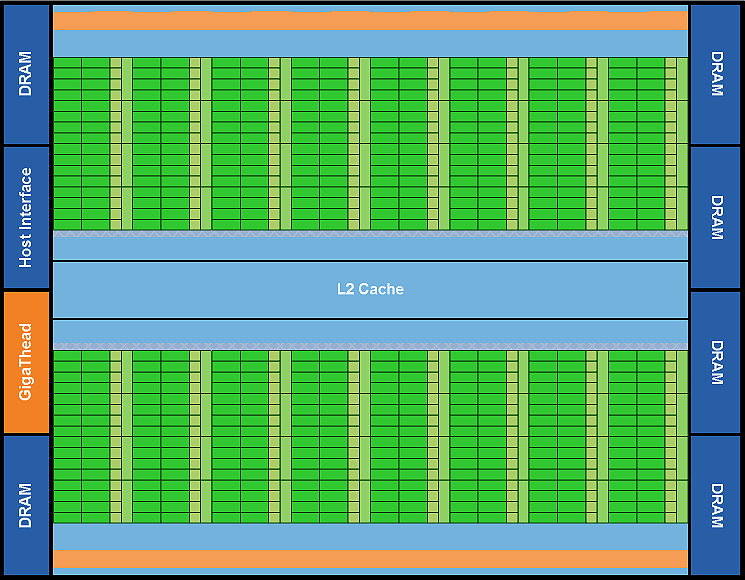

- Nvidia Parallel DataCache - the world’s first true cache hierarchy in a GPU that speeds up algorithms such as physics solvers, raytracing, and sparse matrix multiplication where data addresses are not known beforehand

- Nvidia GigaThread Engine with support for concurrent kernel execution, where different kernels of the same application context can execute on the GPU at the same time (e.g., PhysX fluid and rigid body solvers)

- Nexus – the world’s first fully integrated heterogeneous computing application development environment within Microsoft Visual Studio
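To see why the double-precision figure in the list above matters for HPC workloads, here is a minimal sketch in plain Python. It runs on the CPU and is not GPU or CUDA code: it only emulates single-precision rounding with the standard `struct` module so the two accumulations can be compared. The helper names `to_float32` and `accumulate` are hypothetical and chosen for illustration.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (an IEEE 754 double) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(step: float, count: int, single_precision: bool) -> float:
    """Add `step` to a running total `count` times.

    When `single_precision` is True, the step and every intermediate
    total are rounded to float32, mimicking a single-precision sum.
    """
    if single_precision:
        step = to_float32(step)
    total = 0.0
    for _ in range(count):
        total += step
        if single_precision:
            total = to_float32(total)
    return total


if __name__ == "__main__":
    n = 1_000_000
    exact = 100_000.0  # mathematically, 0.1 added one million times

    err_single = abs(accumulate(0.1, n, single_precision=True) - exact)
    err_double = abs(accumulate(0.1, n, single_precision=False) - exact)

    print(f"single-precision error: {err_single}")
    print(f"double-precision error: {err_double}")
```

A long running sum is the kind of operation that sits inside linear algebra and simulation codes. In this sketch the rounding error grows far faster when every intermediate result is squeezed into 32 bits, which is one reason HPC users care about double-precision throughput.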

Oak Ridge National Laboratory (ORNL) has already announced plans for a new supercomputer that will use Fermi for research in areas such as energy and climate change. ORNL’s supercomputer is expected to be 10 times more powerful than today’s fastest supercomputer.

“This would be the first co-processing architecture that Oak Ridge has deployed for open science, and we are extremely excited about the opportunities it creates to solve huge scientific challenges,” Jeff Nichols, ORNL associate lab director for Computing and Computational Sciences, said. “With the help of Nvidia technology, Oak Ridge proposes to create a computing platform that will deliver exascale computing within ten years.”

Nvidia did reveal that its upcoming Fermi GPU will pack 3 billion transistors, making it one mammoth chip – bigger than anything from ATI. Of course, Nvidia's aspirations in the GPU space are far more ambitious than AMD's. It'll be interesting to see if and how the two head-to-head rivals diverge from the focus on 3D gaming technologies toward broader GPGPU applications.

- magicandy: If you're going to put a logo on your chart, common sense states you shouldn't cover up what's on the chart...

- crisisavatar: The computing capability is great and all but I am personally more interested in affordable GPUs. Let's see if NVIDIA can deliver here.

- mlopinto2k1: Hi, I would like a programmable CPU/GPU/GPGPU unit that allowed Virtual Instruments and Effects to be processed on it. Otherwise, this is just more of the same CRAP!

- megamanx00: That's nice and all, but when are they gonna start selling the darn thing? Besides, even though an evolution of Cuda is nice and everything, proprietary APIs like that are kind of a hard sell. I think it's cool that it will get some C++ support, we'll see how that one goes, but as OpenCL and DirectCompute are more open it will be more important how this chip compares to AMDs in the performance of those rather than CUDA.

- jonpaul37: if the performance/price fit the same shoes as ATI's latest release(s), i will be sold and Nvidia will again be an option in my future. Not to mean it isn't now, i'm just saying, ATI has some nice stuff for a low-ish price.

- nforce4max: Cool how much will it cost? Most likely have to work for a month just to get one at $10 US an hour.

- The logo'd out part of the chart reads:

L1 Cache: Configurable 16K or 48K

L2 Cache: 768K

ECC: Yes

Concurrent Kernels: Up to 16

...from another source. Gotta love automated processes like logo stamping :)