OpenCL In Action: Post-Processing Apps, Accelerated

We've been bugging AMD for years now, literally: show us what GPU-accelerated software can do. Finally, the company is ready to put us in touch with ISVs in nine different segments to demonstrate how its hardware can benefit optimized applications.

Mobile Platform Results And Wrap-Up

In looking at these notebooks, we need to answer two questions. First, are the integrated graphics engines on low-power mobile processors fast enough to accelerate a compute-oriented workload? Second, how do AMD's mobile APUs compare to its desktop implementations?

First, let's compare the Gateway machine running an A8-3500M to the desktop A8-3850. The two are almost in a dead heat, which is pretty phenomenal when you consider that the A8-3500M is a 35 W part standing up to the 100 W A8-3850.

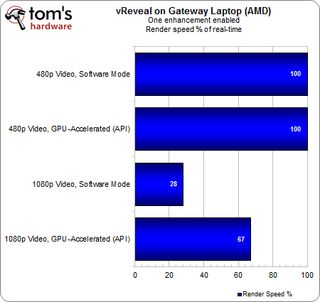

While 480p video gets handled in real-time on both platforms, slower core and graphics clocks catch up to the notebook. It takes a hit on the 1080p test, losing one-third of its frame rate.

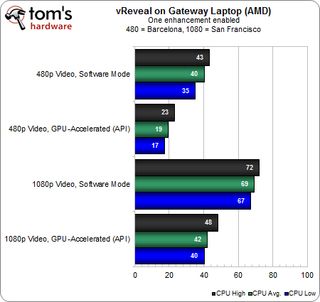

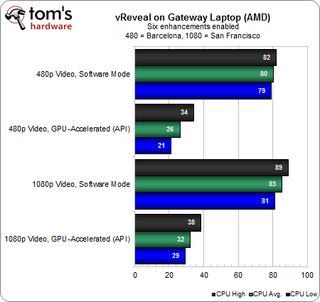

When we crank up the vReveal load to six effects, the system's CPU utilization tops out in the 80-85% range with software-based rendering. With GPU acceleration turned on, CPU utilization on the A8 APU vacillates between 26 and 32%, suggesting that this platform is again limited by the way it distributes CPU- and GPU-specific work.

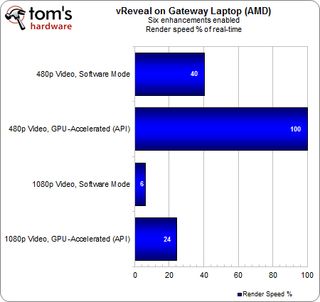

In this demanding application, the Gateway notebook still manages to cope with 480p video in real-time (100%). Also impressive is that, in the 1080p benchmark, the mobile APU takes only a five-percentage-point hit compared to the desktop A8-3850. Although 24% of real-time seems pretty grim next to the Radeon HD 5870's ability to achieve 67% of real-time performance in this test, the fact that we're comparing a 35 W part to a 100 W APU and 188 W graphics card working cooperatively speaks volumes, too.
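To put that comparison in rough numbers, here is a minimal sketch that divides each 1080p result by the rated power quoted above. It assumes TDP is a fair stand-in for actual power draw, which it is not (we did not measure consumption here), and the function name is purely illustrative.

```python
# Rough efficiency comparison using the 1080p figures quoted in the text.
# Assumption: rated TDP stands in for real power draw; treat the result
# as a ballpark, not a measurement.

def percent_realtime_per_watt(percent_realtime: float, tdp_watts: float) -> float:
    """Return percent-of-real-time delivered per watt of rated TDP."""
    if tdp_watts <= 0:
        raise ValueError("tdp_watts must be positive")
    return percent_realtime / tdp_watts

# Mobile A8-3500M: 24% of real-time at 35 W.
mobile = percent_realtime_per_watt(24, 35)

# Desktop A8-3850 (100 W) plus Radeon HD 5870 (188 W): 67% of real-time.
desktop = percent_realtime_per_watt(67, 100 + 188)

print(f"A8-3500M:          {mobile:.2f} %/W")
print(f"A8-3850 + HD 5870: {desktop:.2f} %/W")
print(f"Mobile advantage:  {mobile / desktop:.1f}x")
```

By this crude yardstick, the notebook delivers close to three times as much real-time performance per rated watt, even though its absolute frame rate is far lower.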

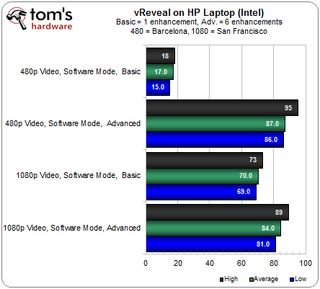

Finally, let's check to see how the APU fares against Intel's Sandy Bridge-based architecture with HD Graphics 3000. Of course, all of the tests are run in software mode, so we're only gauging performance without OpenCL-based acceleration.

With 1080p unaccelerated video processing, the APU-powered machine was pushed to 69% utilization with one effect and 85% with six. On the Intel-based notebook, we see 70% and 84%, respectively.

Nevertheless, Intel's mobile Core i5 is able to post decent numbers. With one effect applied to the 480p sample, it manages real-time playback. With six, it falls to 75% of real-time. Unassisted, AMD's APU was only able to hit 40% of real-time in the same test. The thing is, collaborating with its 400 Radeon cores, the A8 jumps back up to real-time rendering.
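For readers who want the ratios behind those 480p, six-effect figures, the following sketch works them out. The variable names are ours, and it assumes the benchmark caps its result at 100% of real-time, so the GPU-assisted gain is best read as a lower bound.

```python
# Relative performance from the 480p, six-effect figures quoted above.
# Assumption: results are capped at 100% of real-time, so any gain that
# ends at 100% is a lower bound on the true speed-up.

def speedup(after_pct: float, before_pct: float) -> float:
    """Return how many times faster 'after' is than 'before'."""
    if before_pct <= 0:
        raise ValueError("before_pct must be positive")
    return after_pct / before_pct

AMD_CPU_ONLY = 40     # A8-3500M, software rendering
AMD_WITH_GPU = 100    # A8-3500M, OpenCL acceleration enabled
INTEL_CPU_ONLY = 75   # mobile Core i5, software rendering

intel_vs_amd_cpu = speedup(INTEL_CPU_ONLY, AMD_CPU_ONLY)
amd_gpu_gain = speedup(AMD_WITH_GPU, AMD_CPU_ONLY)
amd_gpu_vs_intel = speedup(AMD_WITH_GPU, INTEL_CPU_ONLY)

print(f"Intel CPU vs. AMD CPU:       {intel_vs_amd_cpu:.2f}x")
print(f"AMD with GPU vs. AMD CPU:    at least {amd_gpu_gain:.2f}x")
print(f"AMD with GPU vs. Intel CPU:  at least {amd_gpu_vs_intel:.2f}x")
```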

Those gaps widen at 1080p. In a CPU-to-CPU comparison, Intel doubles AMD's performance. But once GPU acceleration gets involved, the APU assumes a definite lead.

Learning Lessons

After all of that, what do we come away with?

- When time is money, there is no substitute for high-end hardware, and discrete components remain important upgrades for enthusiasts. None of the configurations we tested could touch our FX-8150 and Radeon HD 7970 working together. Had this comparison involved even pricier processors, we probably could have predicted the outcome of the software-only tests. In any case, balance remains a critical component in picking the hardware for your next upgrade.

- When budget is a concern, getting general-purpose and graphics resources on the same piece of silicon can facilitate notable performance boosts in applications optimized to capitalize on them. Yes, a mid-range discrete graphics adapter will outperform an APU as a result of its more extensive architecture. However, the APU design gets you in on the ground floor of GPU acceleration without requiring an add-on graphics card (with its corresponding expense). APUs most certainly won't satiate enthusiasts accustomed to powerful discrete adapters, but they do put a much-needed emphasis on capable integrated functionality where previous chipset- and processor-based engines came up short.

- In the right application, the benefits of a mobile APU can come close to those of a desktop APU. There's a reason the desktop A8-3850 has a 100 W TDP and the mobile A8-3500M is constrained to 35 W. However, there's also something to be said for an intelligent balance between processing power and graphics muscle to help notebooks yield a better overall experience.

In all honesty, this is the most exciting development we’ve seen from AMD in quite a while, and it deserves more attention. Stay tuned for our next installment on gaming acceleration in a few weeks, and we’ll see if similar (or better!) results can be enjoyed there, as well.

bit_user, replying to amuffin ("Will there be an OpenCL vs. CUDA article coming out anytime soon?"):

At the core, they are very similar. I'm sure that Nvidia's toolchains for CUDA and OpenCL share a common backend, at least. Any differences between versions of an app coded for CUDA vs. OpenCL will have a lot more to do with the amount of effort its developers spent optimizing it.

bit_user: Fun fact: the president of Khronos (the industry consortium behind OpenCL, OpenGL, etc.) and chair of its OpenCL working group is an Nvidia VP.

Here's a document paralleling the similarities between CUDA and OpenCL (it's an OpenCL Jump Start Guide for existing CUDA developers):

NVIDIA OpenCL JumpStart Guide

I think they tried to make sure that OpenCL would fit their existing technologies, in order to give them an edge on delivering better support, sooner.

deanjo, replying to bit_user ("I think they tried to make sure that OpenCL would fit their existing technologies, in order to give them an edge on delivering better support, sooner."):

Well, Nvidia did work very closely with Apple during the development of OpenCL.

nevertell: At last, an article to point to for people who love shoving a GTX 580 in the same box with a Celeron.

JPForums: In regards to testing the APU w/o discrete GPU, you wrote:

However, the performance chart tells the second half of the story. Pushing CPU usage down is great at 480p, where host processing and graphics working together manage real-time rendering of six effects. But at 1080p, the two subsystems are collaboratively stuck at 29% of real-time. That's less than half of what the Radeon HD 5870 was able to do matched up to AMD's APU. For serious compute workloads, the sheer complexity of a discrete GPU is undeniably superior.

While the discrete GPU is superior, the architecture isn't all that different. I suspect, the larger issue in regards to performance was stated in the interview earlier:

TH: Specifically, what aspects of your software wouldn’t be possible without GPU-based acceleration?

NB: ...you are also solving a bandwidth bottleneck problem. ... It’s a very memory- or bandwidth-intensive problem to even a larger degree than it is a compute-bound problem. ... It’s almost an order of magnitude difference between the memory bandwidth on these two devices.

APUs may be bottlenecked simply because they have to share CPU level memory bandwidth.

While the APU's memory bandwidth will never approach a discrete card's, I am curious to see whether overclocking an APU's memory will make a noticeable difference in performance. Intuition says that it will never approach a discrete card and, given the low-end compute performance, it may not make a difference at all. However, it would help to characterize the APU's performance balance a little better, i.e., does it make sense to push more GPU muscle on an APU, or is the GPU portion constrained by the memory bandwidth?

In any case, this is a great article. I look forward to the rest of the series.

What about power consumption? It's fine if we can lower CPU load, but that doesn't help much if total power consumption increases.
