AI System Scores Better Than 75% Of Americans In Visual Intelligence Test

Northwestern University professors created a new computational model that performs as well as or better than 75% of American adults on a standard intelligence test. The team believes this is an important step toward artificial intelligence (AI) that sees and understands the world as humans do.

“The model performs in the 75th percentile for American adults, making it better than average,” said Northwestern Engineering’s Ken Forbus. “The problems that are hard for people are also hard for the model, providing additional evidence that its operation is capturing some important properties of human cognition,” he added.

CogSketch AI Platform

The computational model was built on CogSketch, a sketch-understanding system developed in Forbus’ laboratory at Northwestern University. Sketching is a natural activity that people do while thinking or trying to communicate an idea, especially when spatial content is involved. Sketching is also heavily used in engineering and geoscience. CogSketch is used to model spatial understanding and reasoning, which makes it suitable both for sketch-based research and for testing against a standardized visual intelligence test such as the Raven’s Progressive Matrices test.

The computational model developed by Forbus builds on the idea that analogical reasoning is at the heart of visual problem solving. In other words, if we want our AI systems to solve complex visual problems, they need to be able to make analogies and compare one object to another. Images are compared via structure mapping, which aligns common structures found in two images, in order to identify commonalities and differences. The structure-mapping theory was developed by psychology professor Dedre Gentner, who also works at Northwestern.
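To make the idea of aligning common structure more concrete, here is a toy Python sketch. It is not CogSketch's code or Gentner's structure-mapping algorithm; it is a brute-force illustration under simple assumptions. Each image is described by a hypothetical set of relational facts, and the function `best_mapping` (a name invented for this example) tries every one-to-one pairing of objects to find the one that lines up the most facts.

```python
from itertools import permutations

def best_mapping(base, target):
    """Brute-force the object pairing that aligns the most relational facts.

    Each fact is a tuple: (relation_name, object, ...). This is an
    illustrative toy, not the structure-mapping engine used in the research.
    """
    base_objs = sorted({obj for _, *args in base for obj in args})
    target_objs = sorted({obj for _, *args in target for obj in args})
    best, best_shared = {}, set()
    # Try every one-to-one assignment of base objects to target objects.
    # (If the base has more objects than the target, nothing is tried.)
    for perm in permutations(target_objs, len(base_objs)):
        mapping = dict(zip(base_objs, perm))
        # Rewrite the base facts in terms of the target's object names.
        translated = {(rel, *(mapping[a] for a in args)) for rel, *args in base}
        shared = translated & target
        if len(shared) > len(best_shared):
            best, best_shared = mapping, shared
    # Facts in the target that the alignment does not explain.
    differences = target - best_shared
    return best, best_shared, differences

# Hypothetical descriptions of two images: a circle inside a square,
# where the second image's square is also shaded.
image_a = {("circle", "a1"), ("square", "a2"), ("inside", "a1", "a2")}
image_b = {("circle", "b1"), ("square", "b2"), ("inside", "b1", "b2"),
           ("shaded", "b2")}

mapping, commonalities, differences = best_mapping(image_a, image_b)
print(mapping)        # which object in image_a corresponds to which in image_b
print(commonalities)  # facts the two images share under that pairing
print(differences)    # facts only the second image has
```

In this example the shared facts are the commonalities and the leftover "shaded" fact is the difference. Trying every pairing only works for a handful of objects; it is used here purely to keep the sketch short.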

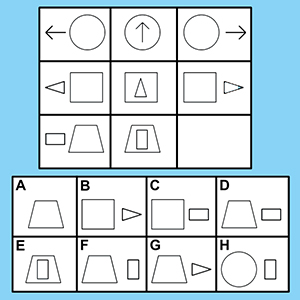

The Raven’s Progressive Matrices Test

The 60-item Raven’s Progressive Matrices test measures a person's reasoning ability by showing them a matrix of images with one missing image. The test-taker is then supposed to select which image is missing from a set of six to eight options.

Forbus and former colleague Andrew Lovett, who was a postdoctoral researcher in psychology at Northwestern, said their AI system did better on the test than the average American.

"The Raven’s test is the best existing predictor of what psychologists call ‘fluid intelligence, or the general ability to think abstractly, reason, identify patterns, solve problems, and discern relationships,’" said Lovett, now a researcher at the US Naval Research Laboratory. "Our results suggest that the ability to flexibly use relational representations, comparing and reinterpreting them, is important for fluid intelligence," he said.


The professors said that understanding sophisticated relational representations is key to higher-order cognition. It can help connect entities and ideas such as “the clock is above the door” or “pressure differences cause water to flow.”

Today’s AI systems are mainly good at recognizing objects, a task that not too long ago was also quite difficult for computers. However, the professors said that object identification without subsequent reasoning isn’t all that useful, which is why they subjected their AI system to the Raven's Progressive Matrices Test. They also believe their research is an important step towards gaining a better understanding of computer vision.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


blackbit75: It is strange. I tried to find intelligent chatbots, and they were disappointing. I saw the successor to one by Microsoft, but in the end it isn't ready. Others were not very intelligent.

derekullo: Knowing/figuring out that a 4"x4" square won't fit into a 3" diameter circle is not the same as having a conversation.

Commandodan: This is awesome though... I'd much rather have AI that's smarter than average humans and still dumber than the most intelligent humans...

bit_user:

Commandodan said: This is awesome though... I'd much rather have AI that's smarter than average humans and still dumber than the most intelligent humans...

This is pretty clearly a first step. It probably won't be long before the state of the art surpasses the level at which most humans can perform this task.

When AIs can do most cognitive tasks better than most humans, it might be the case that no single human can surpass the AIs at the majority of those tasks. That will be the beginning of the commoditization of intelligence.

It'd be ironic if droves of knowledge workers then start scrambling for the very service sector jobs they'd previously derided.


OriginFree: "AI System Scores Better Than 75% Of Americans In Visual Intelligence Test"

That's not setting the bar very high, especially given today's events.

Chester Rico: I always sucked at tests like these. According to my scores in one I did two years ago (long story) I shouldn't even be able to use a computer/tie my own shoes.

bit_user:

Chester Rico said: According to my scores in one I did two years ago (long story) I shouldn't even be able to use a computer/tie my own shoes.

That's why IQ tests are controversial. Intelligence is comprised of a variety of cognitive skills. So, you can't really have just one test, or one scale, for measuring it.

I'd guess most people have areas of strength and weakness. I once heard that chess grandmasters devote so much of their brain to chess that they actually tend to develop mild cognitive deficits in other areas.


kenjitamura: The 75% of "Americans" in the title takes out a lot of the impact in the headline.

Virtual_Singularity: Not a bad story regarding AI. One with slightly more irony, and humor, I can think of involves draft legislation in the E.U. for Europe's worker robots to become 'electronic persons', which would give certain robots "rights", among other things. A Reuters story explains, "Europe's growing army of robot workers could be classed as "electronic persons" and their owners liable to paying social security for them if the European Union adopts a draft plan to address the realities of a new industrial revolution."

Martell1977: Saying 75% of Americans is not a high bar; just looking at the streets in DC shows that there has been a large decline in overall intelligence in the USA for a long time.

But, glad to see some progression in the field, just try not to create Skynet...LOL.