Intel Releases Xe Max Graphics, Details Power Sharing and Deep Link

They're releasing today in China

Intel is launching its Iris Xe Max graphics in thin-and-light laptops, starting today in China. The GPU will first appear in the Acer Swift 3x, Dell Inspiron 15 7000 2-in-1 and Asus VivoBook Flip TP470, and these laptops will come to the United States in the coming weeks.

| Specification | Iris Xe Max |
| --- | --- |
| Execution Units | 96 |
| Frequency | 1.65GHz |
| Lithography | 10nm SuperFin |
| Graphics Memory Type | LPDDR4x |
| Graphics Capacity | 4GB |
| Graphics Memory Bandwidth | 68 GB/s |
| PCI Express | Gen4 |
| Media | 2 Multi-Format Codec (MFX) Engines |
| Number of Displays Supported | 4 |
| Graphics Features | Variable rate shading, adaptive sync, async compute (beta) |
| DirectX Support | 12.1 |
| OpenGL Support | 4.6 |
| OpenCL Support | 2 |

Formerly codenamed DG1, the chip has 96 EUs, a frequency of up to 1.65GHz and, yes, LPDDR4x memory for power savings. Rumors that the DG1 test vehicle used 3GB of GDDR6 were, apparently, incorrect. Instead, Xe Max pairs the lower-power memory with a 128-bit interface, which ultimately gives it more bandwidth than competing solutions like Nvidia's GeForce MX350.
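The bandwidth advantage comes down to simple arithmetic: peak bandwidth is the bus width in bytes multiplied by the transfer rate. The sketch below assumes LPDDR4x-4266 (4,266 MT/s) for Xe Max, which is consistent with the 68 GB/s in the spec table but isn't stated by Intel here, and assumes a 64-bit GDDR5 interface at 7,008 MT/s for the MX350. Treat both configurations as assumptions rather than vendor specs.

```python
def peak_bandwidth_gbps(bus_width_bits: int, transfer_rate_mtps: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (decimal gigabytes).

    bytes per transfer = bus width in bits / 8
    bandwidth          = bytes per transfer * transfers per second
    """
    bytes_per_transfer = bus_width_bits / 8
    # bytes * MT/s gives MB/s; divide by 1000 for GB/s
    return bytes_per_transfer * transfer_rate_mtps / 1000


# Assumed: Iris Xe Max with LPDDR4x-4266 on a 128-bit bus.
xe_max = peak_bandwidth_gbps(128, 4266)
# Assumed: GeForce MX350 with 64-bit GDDR5 at 7,008 MT/s.
mx350 = peak_bandwidth_gbps(64, 7008)

print(f"Iris Xe Max: {xe_max:.1f} GB/s")  # 68.3
print(f"MX350:       {mx350:.1f} GB/s")   # 56.1
```

The wider bus is what lets the slower-per-pin LPDDR4x come out ahead: doubling the width doubles the bytes moved per transfer.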

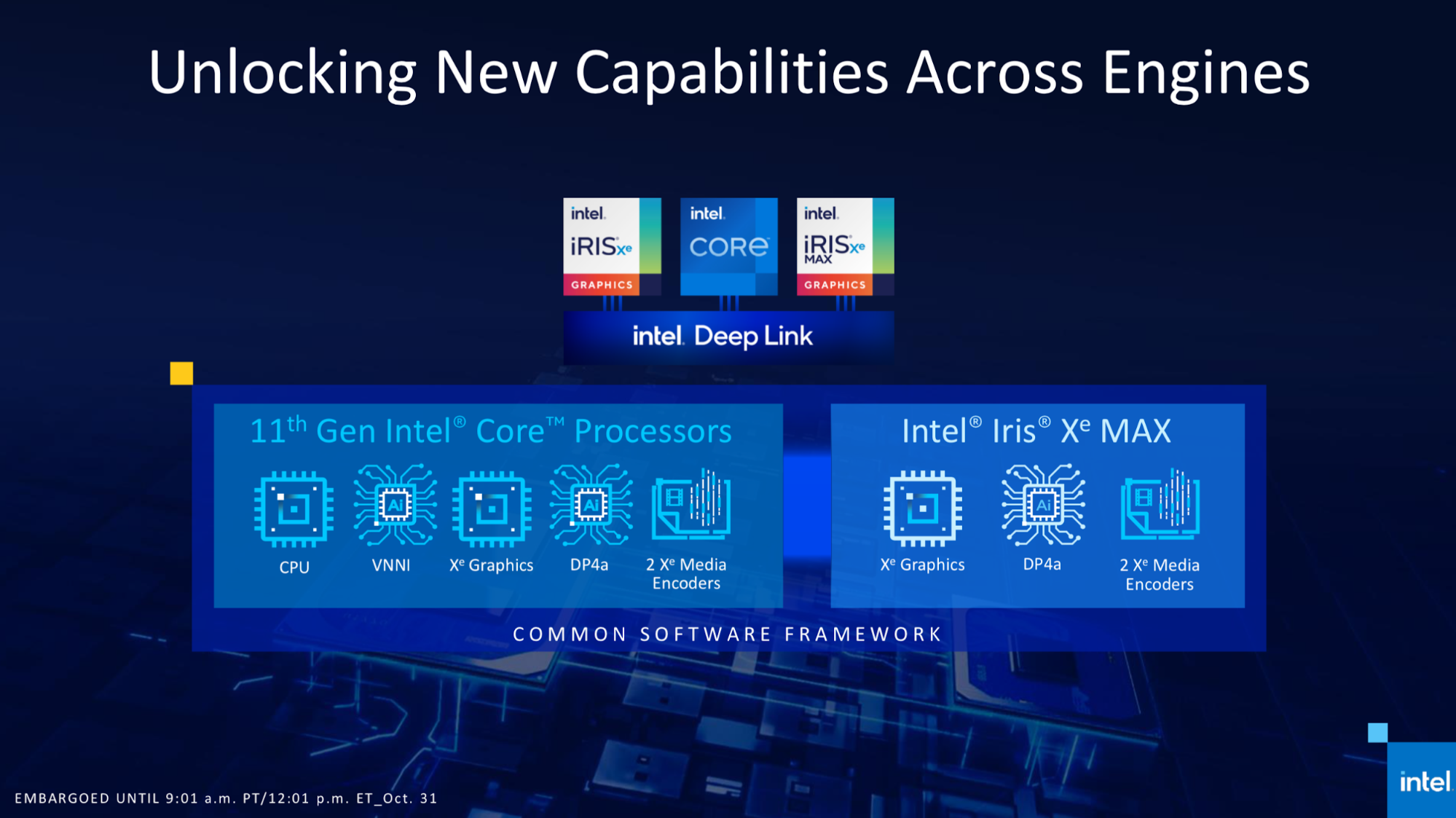

The company is aiming the Xe Max laptops at content creators. Because Intel makes both the Tiger Lake CPU and the discrete GPU, it can tie them together with a feature called Deep Link, which pairs the hardware with a software framework to boost performance.

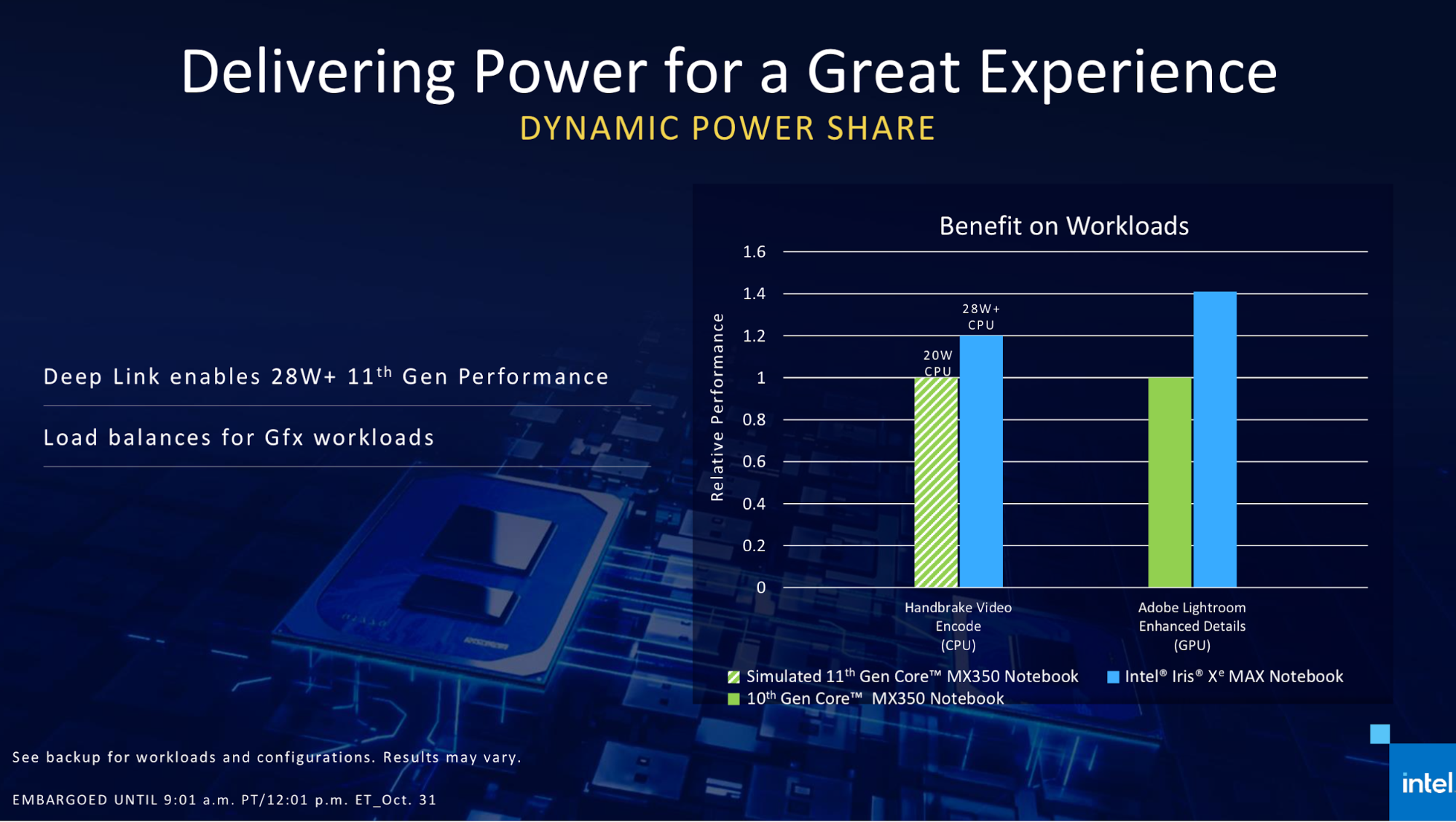

This includes dynamic power sharing between the CPU (with its integrated Iris Xe graphics) and the discrete Iris Xe Max GPU. In a HandBrake encode, Intel claimed the 28W CPU in the Acer Swift 3x easily bested an Intel reference system combining a Core i7-1185G7 with an Nvidia GeForce MX350. It also came out ahead when enhancing details in Adobe Lightroom, against a Lenovo Slim 7 with a 10th Gen Core i7 and an MX350. The power sharing sounds somewhat similar to AMD's SmartShift tech.
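Intel hasn't described how Deep Link decides the split, so the following is only a toy model of the general idea behind dynamic power sharing: two chips draw from one shared budget, and whichever is busier gets more of it. The function name, the proportional-scaling policy and the wattages are all hypothetical.

```python
from typing import Tuple


def share_power(budget_w: float, cpu_demand_w: float, gpu_demand_w: float) -> Tuple[float, float]:
    """Split a shared power budget between the CPU and the discrete GPU.

    Hypothetical toy policy, not Intel's algorithm: grant every request when
    the total fits, otherwise scale both requests down proportionally.
    """
    total_demand = cpu_demand_w + gpu_demand_w
    if total_demand <= budget_w:
        # Headroom available: each side gets exactly what it asks for.
        return cpu_demand_w, gpu_demand_w
    # Over budget: shrink both requests by the same factor so they sum to the budget.
    scale = budget_w / total_demand
    return cpu_demand_w * scale, gpu_demand_w * scale


# Hypothetical numbers, not platform specs.
print(share_power(30.0, 2.0, 28.0))   # GPU-heavy phase, light CPU load: (2.0, 28.0)
print(share_power(30.0, 10.0, 30.0))  # both busy, over budget: (7.5, 22.5)
```

A real controller would also have to respect per-chip minimums, thermal limits and how quickly power can be shifted between the two; the sketch ignores all of that.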

Additionally, Deep Link lets software tap the Xe graphics, DP4a instructions and Xe media encoders on both chips. In a demo of Topaz Gigapixel AI, Intel showed Xe Max and Tiger Lake upscaling images with OpenVINO and DL Boost (DP4a) far faster than an Ice Lake laptop with an MX350 running TensorFlow. In the demo, the combined Iris Xe and Iris Xe Max finished their workload before the competing laptop completed a single image.
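DP4a multiplies four pairs of 8-bit integers and adds the four products to a 32-bit accumulator in a single operation, which suits quantized (INT8) inference like the upscaling demo. The Python below is a plain-software sketch of the signed-by-signed variant's semantics, not Intel's implementation; the lane order (lane 0 in the low byte) and wrap-around on int32 overflow are assumptions.

```python
def pack_int8x4(values):
    """Pack four signed 8-bit values into one 32-bit word (lane 0 in the low byte)."""
    if len(values) != 4 or not all(-128 <= v <= 127 for v in values):
        raise ValueError("expected four values in [-128, 127]")
    word = 0
    for lane, v in enumerate(values):
        word |= (v & 0xFF) << (8 * lane)
    return word


def unpack_int8x4(word):
    """Unpack a 32-bit word into four signed 8-bit lanes."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dp4a(a_word, b_word, acc):
    """Dot product of four int8 pairs, added to a 32-bit accumulator.

    Assumes the result wraps around to a signed 32-bit value on overflow.
    """
    a = unpack_int8x4(a_word)
    b = unpack_int8x4(b_word)
    total = acc + sum(x * y for x, y in zip(a, b))
    total &= 0xFFFFFFFF  # keep the low 32 bits
    return total - (1 << 32) if total >= (1 << 31) else total  # reinterpret as signed


print(dp4a(pack_int8x4([1, 2, 3, 4]), pack_int8x4([5, 6, 7, 8]), 10))  # 80
```

Hardware does all four multiply-adds at once; the loop here only spells out what gets computed.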

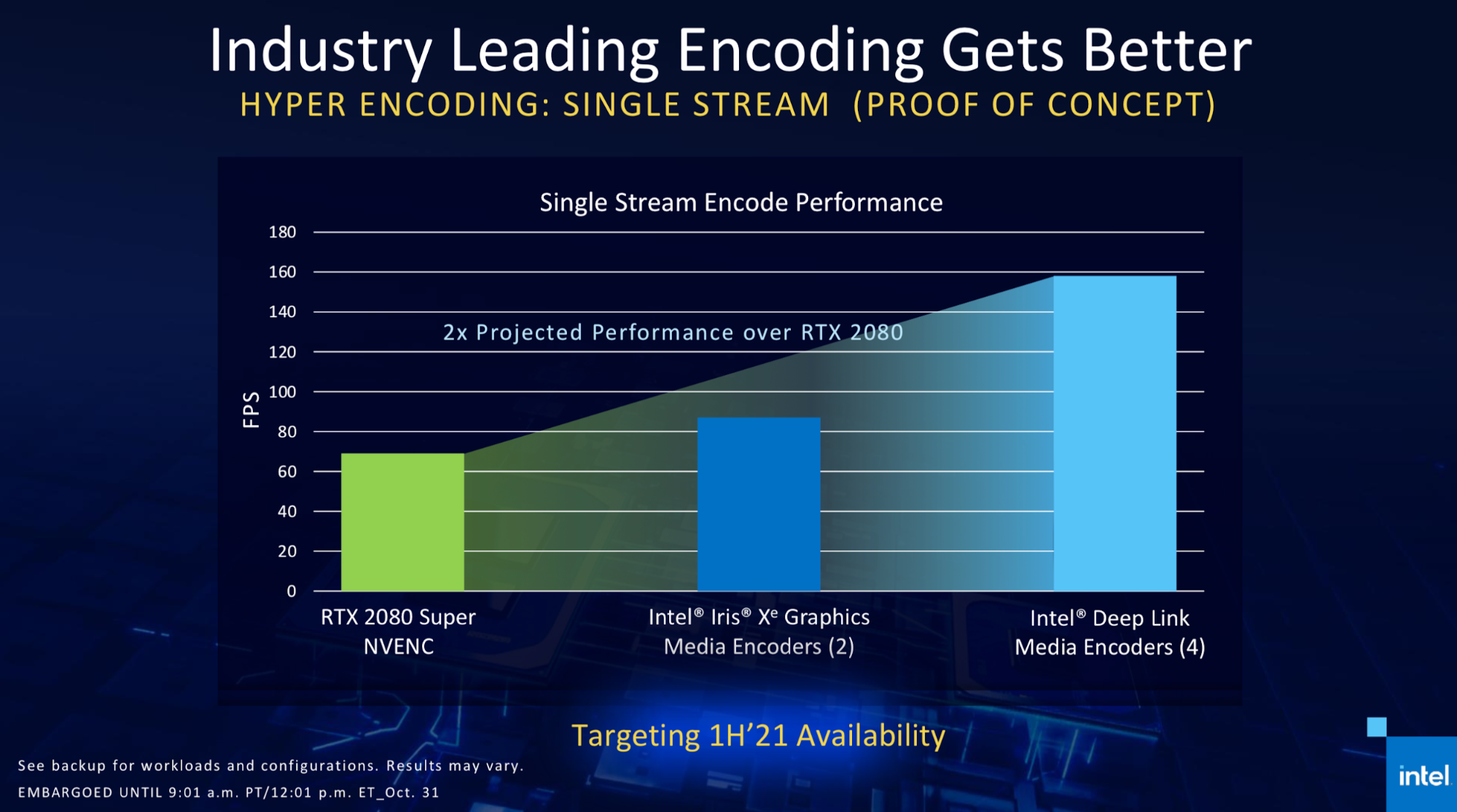

The combined media encoding should allow for up to 1.78x faster encoding than an RTX 2080. Going up against a Gigabyte Aero 15 with a Core i9-10980HK and an RTX 2080 Super Max-Q in HandBrake, the Acer Swift 3x transcoded ten 1-minute clips from 4K/60 fps AVC to 1080p/60 fps HEVC faster. Intel attributes this to Quick Sync running on the integrated and discrete graphics simultaneously, compared with Nvidia's NVENC.
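With a batch of ten separate clips, the simplest way to use encoders on both chips is to treat them as a pool and hand each clip to whichever engine frees up first. Intel hasn't detailed its scheduling; this is a generic greedy sketch that assumes every engine encodes at the same speed, and the engine names and per-clip time are made up.

```python
import heapq
from typing import Dict, List, Tuple


def schedule_jobs(durations: List[float], engines: List[str]) -> Tuple[Dict[str, List[int]], float]:
    """Greedy list scheduling: each job goes to the engine that frees up first.

    Returns the job indices assigned to each engine and the makespan
    (the time at which the last engine finishes).
    """
    # Min-heap of (time this engine becomes free, engine name).
    free_at = [(0.0, name) for name in engines]
    heapq.heapify(free_at)
    assignment: Dict[str, List[int]] = {name: [] for name in engines}
    for job, duration in enumerate(durations):
        t, name = heapq.heappop(free_at)
        assignment[name].append(job)
        heapq.heappush(free_at, (t + duration, name))
    makespan = max(t for t, _ in free_at)
    return assignment, makespan


# Ten clips, each assumed to take 60 s to encode on any single engine (hypothetical).
clips = [60.0] * 10
print(schedule_jobs(clips, ["igpu"])[1])          # one engine: 600.0
print(schedule_jobs(clips, ["igpu", "dgpu"])[1])  # two engines: 300.0
```

Because the clips are independent, two equally fast engines halve the wall-clock time in this model; real integrated and discrete encoders may not run at the same speed, which the sketch ignores.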

Intel is also working on ways to use the four media encoders in an Iris Xe Max laptop (two in the CPU's integrated graphics, two in the dedicated GPU) to accelerate single-stream encoding. Intel hopes to have that available in the first half of 2021 and says performance could potentially be double that of an RTX 2080 using NVENC.
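Intel hasn't said how it will split one stream across four engines. A common approach in distributed transcoding is to cut the video at keyframe (GOP) boundaries, since each closed GOP can be encoded independently, then encode the segments in parallel and stitch the output back together in order. The sketch below only plans that split; it assumes fixed-size closed GOPs and round-robin assignment, and the function names are hypothetical.

```python
from typing import List, Tuple

Segment = Tuple[int, int]  # (first frame, one past the last frame)


def split_at_gops(num_frames: int, gop_size: int) -> List[Segment]:
    """Cut a stream into GOP-aligned segments, assuming a keyframe every gop_size frames."""
    if gop_size <= 0:
        raise ValueError("gop_size must be positive")
    return [(start, min(start + gop_size, num_frames)) for start in range(0, num_frames, gop_size)]


def assign_round_robin(segments: List[Segment], num_engines: int) -> List[List[Segment]]:
    """Deal segments out to encoders in turn: engine i gets segments i, i+n, i+2n, ..."""
    lanes: List[List[Segment]] = [[] for _ in range(num_engines)]
    for i, segment in enumerate(segments):
        lanes[i % num_engines].append(segment)
    return lanes


# Hypothetical: a 10-second, 60 fps stream with a keyframe every 60 frames,
# spread across four encoders.
segments = split_at_gops(600, 60)
for engine, work in enumerate(assign_round_robin(segments, 4)):
    print(engine, work)
```

Splitting at fixed boundaries trades some compression efficiency for parallelism, and rate control has to be coordinated across segments; the sketch handles neither.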

Deep Link supports HandBrake, OBS, Topaz Gigapixel AI, XSplit and more today, with Blender, CyberLink and others getting support in the near future.


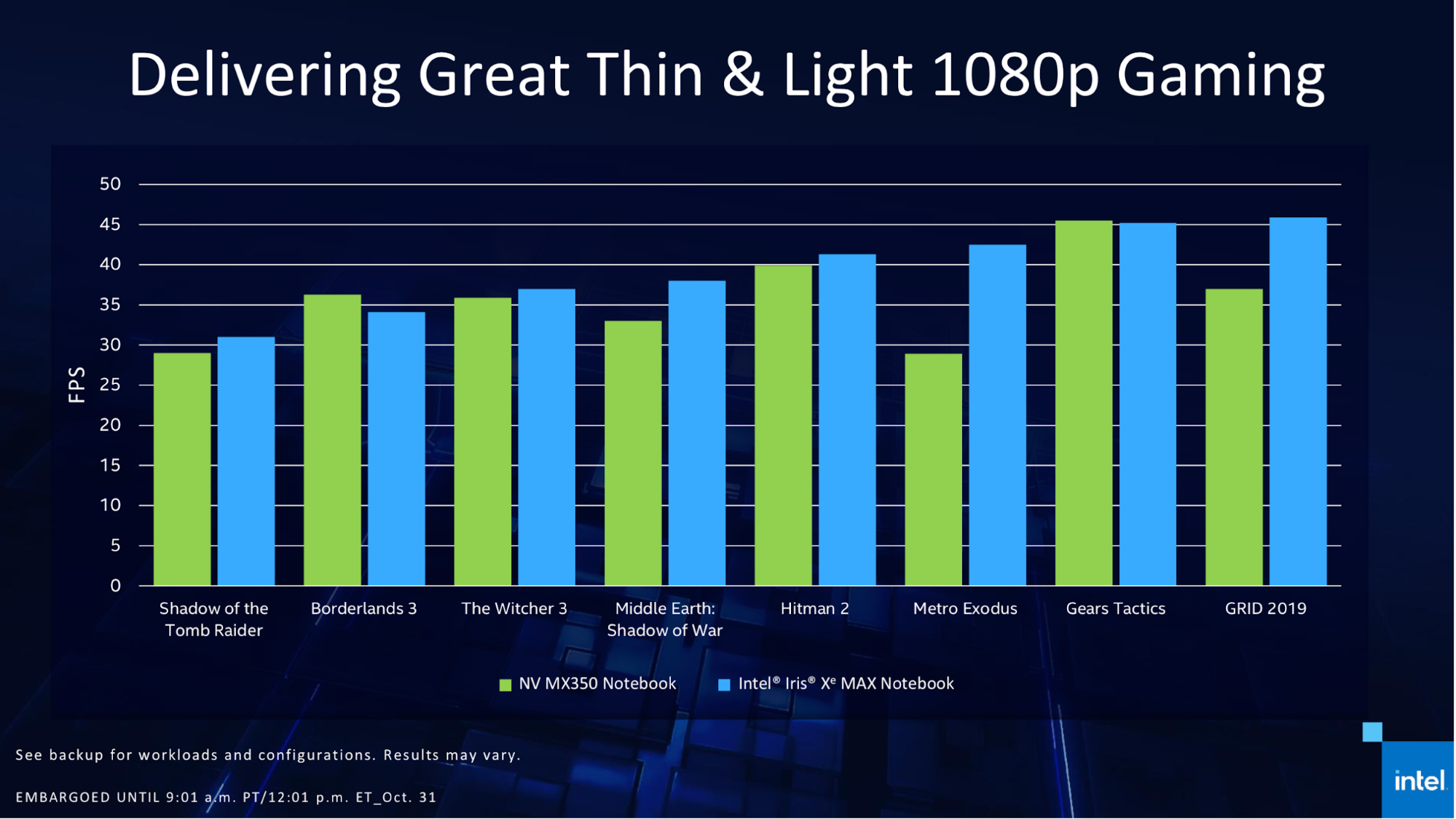

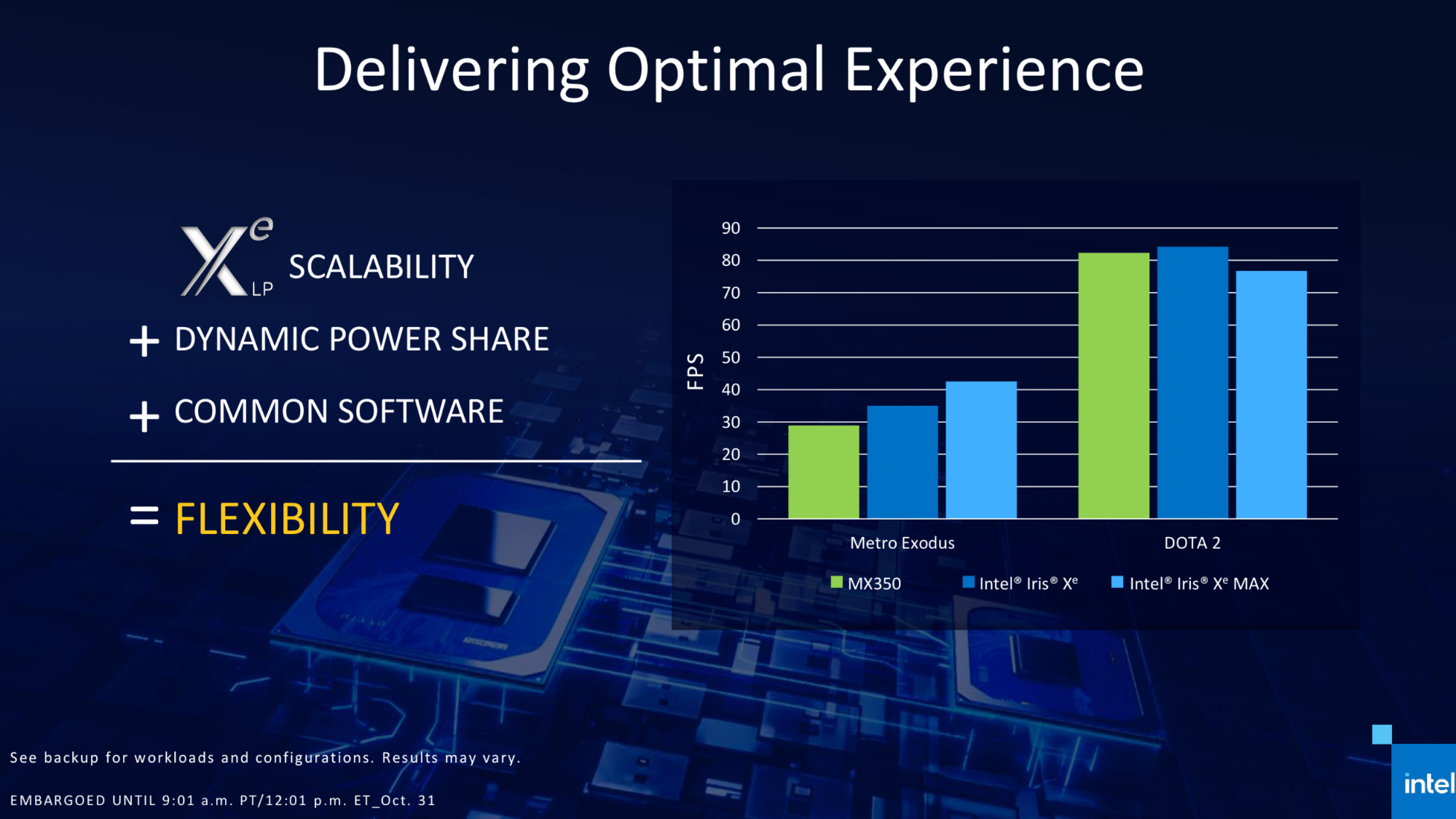

Intel isn't positioning Iris Xe Max as a gaming chip, but it claims you can get solid 1080p performance compared with an MX350. In a chart, Intel showed that it offers more performance in games like Shadow of the Tomb Raider, The Witcher 3, Middle-earth: Shadow of War, Hitman 2, Metro Exodus and Grid 2019, though the MX350 won out in Borderlands 3 and Gears Tactics.

Interestingly, while the Iris Xe Max beat the Iris Xe in Metro Exodus (in fact, the integrated graphics also surpassed the MX350), both the integrated graphics and the MX350 were faster in Dota 2. This looks like an anomaly, since the higher-clocked GPU with its own dedicated memory should generally deliver higher frame rates. Intel said it will test games and keep a list in the Intel Graphics Command Center to ensure the best-performing GPU is used.

This is not a high-end part, and unlike in media encoding and content creation workloads, Intel isn't combining the two GPUs for gaming in any form right now. Instead, the laptops will switch between the integrated and dedicated Xe graphics, depending on which is better suited to the task.
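Intel hasn't described the format of its game list, but the switching it describes amounts to a per-application lookup with a fallback. A minimal sketch, where the executable names, the dictionary and the choice of the discrete GPU as the default are all assumptions:

```python
from typing import Dict

# Hypothetical per-game list, standing in for the one Intel says it will keep in
# the Intel Graphics Command Center. Executable names and entries are illustrative,
# loosely based on the Dota 2 and Metro Exodus results described in the article.
GPU_PREFERENCES: Dict[str, str] = {
    "dota2.exe": "integrated",
    "metroexodus.exe": "discrete",
}


def pick_gpu(executable: str, default: str = "discrete") -> str:
    """Return which GPU an app should run on; unlisted apps get the default."""
    return GPU_PREFERENCES.get(executable.lower(), default)


print(pick_gpu("Dota2.exe"))        # integrated
print(pick_gpu("MetroExodus.exe"))  # discrete
print(pick_gpu("SomeNewGame.exe"))  # discrete (fallback)
```

Lowercasing the name keeps the lookup from missing an entry over capitalization alone.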

Of course, we'll have to get our hands on these laptops ourselves to see just how well Intel Xe Max performs in our testing.

Intel told Tom's Hardware that DG1 will also come to desktops as a discrete card in some entry-level and mid-range prebuilts from OEMs. That will happen in 2021, but these definitely aren't enthusiast-class chips; for that, we'll need Intel's Xe HPG, which will arrive sometime later in 2021.

Andrew E. Freedman is a senior editor at Tom's Hardware focusing on laptops, desktops and gaming. He also keeps up with the latest news. A lover of all things gaming and tech, his previous work has shown up in Tom's Guide, Laptop Mag, Kotaku, PCMag and Complex, among others. Follow him on Threads @FreedmanAE and BlueSky @andrewfreedman.net. You can send him tips on Signal: andrewfreedman.01

---

PapaCrazy: You know you're not in a good market position if you're constantly reacting to what other companies are doing first. Xe is beginning to look like a response to AMD APUs. Maybe Intel is starting to realize the combination of AMD's core counts, efficiency, and graphics architectures presents an extreme danger in each and every market they compete in.

jkflipflop98: AMD is about to get hit by a runaway freight train. Savor the flavor, it's not going to come back around for a long time.

bufbarnaby36:

> jkflipflop98 said: AMD is about to get hit by a runaway freight train. Savor the flavor, it's not going to come back around for a long time.

Agree, Intel has the brains and money to do well here. Poaching Raj was just the start.

cryoburner:

> bufbarnaby36 said: Agree, Intel has the brains and money to do well here. Poaching Raj was just the start.

AMD's graphics card division wasn't particularly great when he was heading it, and seems to be doing a lot better now that he's been gone a few years.

Since he left, the energy efficiency of AMD's graphics cards has more than doubled, and their new cards will be competing at the enthusiast level against Nvidia's best offerings again, rather than only having more mid-range models drawing over 50% more power than the competition, as was the case when he was heading Radeon. He might be better than whoever Intel had in charge of their graphics hardware before, but I'm not convinced AMD is at much of a loss without him.

spongiemaster:

> cryoburner said: AMD's graphics card division wasn't particularly great when he was heading it, and seems to be doing a lot better now that he's been gone a few years.

A pretty significant portion of the credit for the efficiency boost can probably be attributed to switching from Global Foundries to TSMC.

thepersonwithaface45: As long as technological engineering and the science behind it is being researched, all these companies are gonna keep producing products. Typically they want these products to be bought. So, I don't see the point in arguing who is gonna out do who. We always compare benchmarks anyway. I look at it as any company can blindside the public as well as other companies at any time.

All the speculation can be saved for when a company actually starts laying people off and losing money, imo.

cryoburner:

> spongiemaster said: A pretty significant portion of the credit for the efficiency boost can probably be attributed to switching from Global Foundries to TSMC.

Most of the efficiency gains seem to be architectural though. Just compare the 7nm Radeon VII based on Vega with the 7nm 6900 XT based on RDNA2, for example. Both cards are listed as having a 300W TBP, but the 6900 XT should be delivering around double the gaming performance on the same process node.