Microsoft Says It Will Build a Quantum Supercomputer Within Ten Years

The research into topological qubits seems to have paid off handsomely.

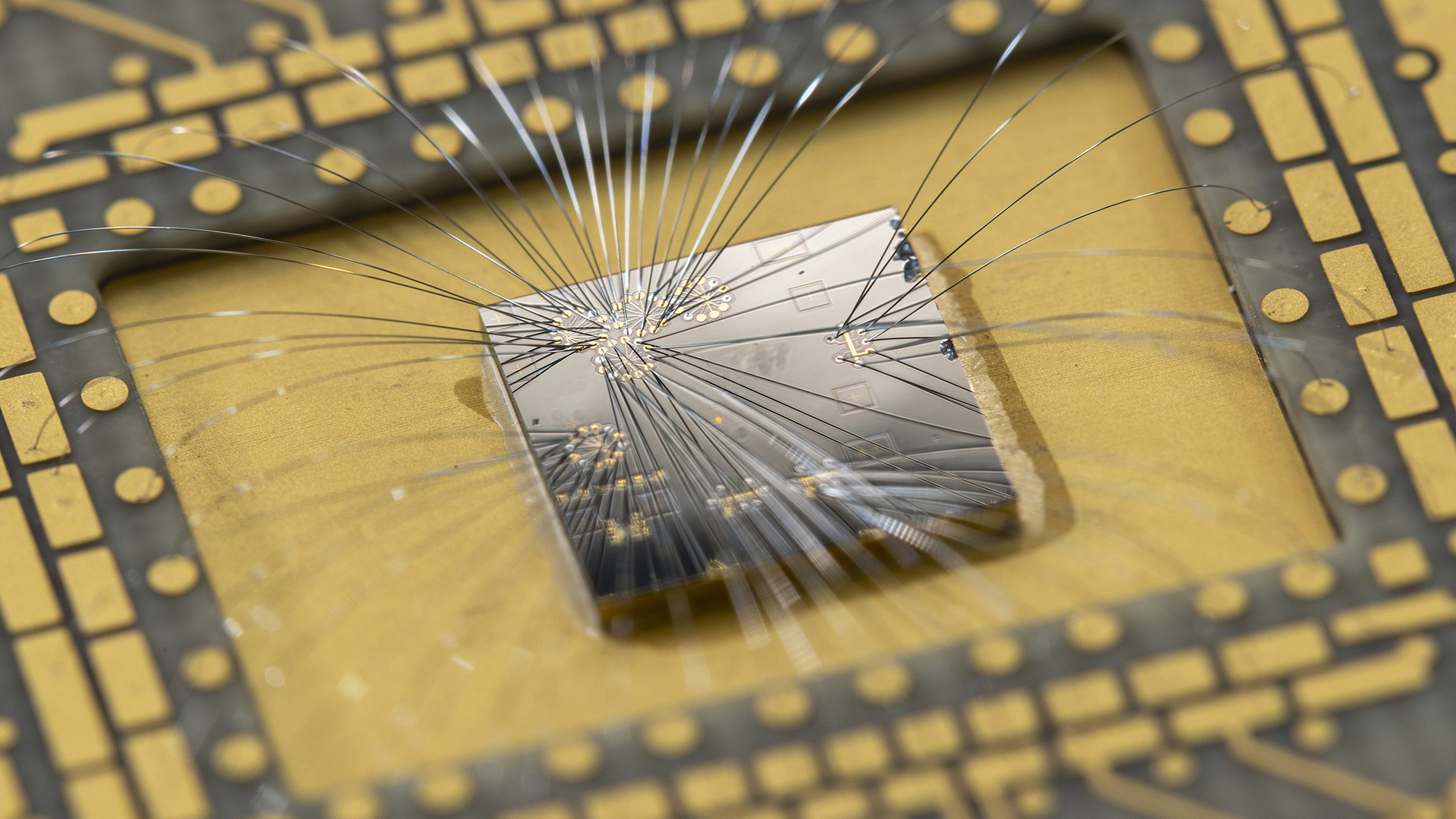

Microsoft yesterday announced its very own roadmap towards building a quantum supercomputer, crystallizing its path along the company's years-long research into topological qubits. Just last year, Microsoft had the breakthrough it "bet" would pay off from its research into topological qubits, an (even more) exotic qubit type than usual. Now, the company is saying it can get from the research breakthrough to a functional quantum supercomputer in less than a decade.

That's according to Microsoft's VP of advanced quantum development, Krysta Svore, who in an interview with TechCrunch said that at Microsoft, "We think about our roadmap and the time to the quantum supercomputer in terms of years rather than decades."

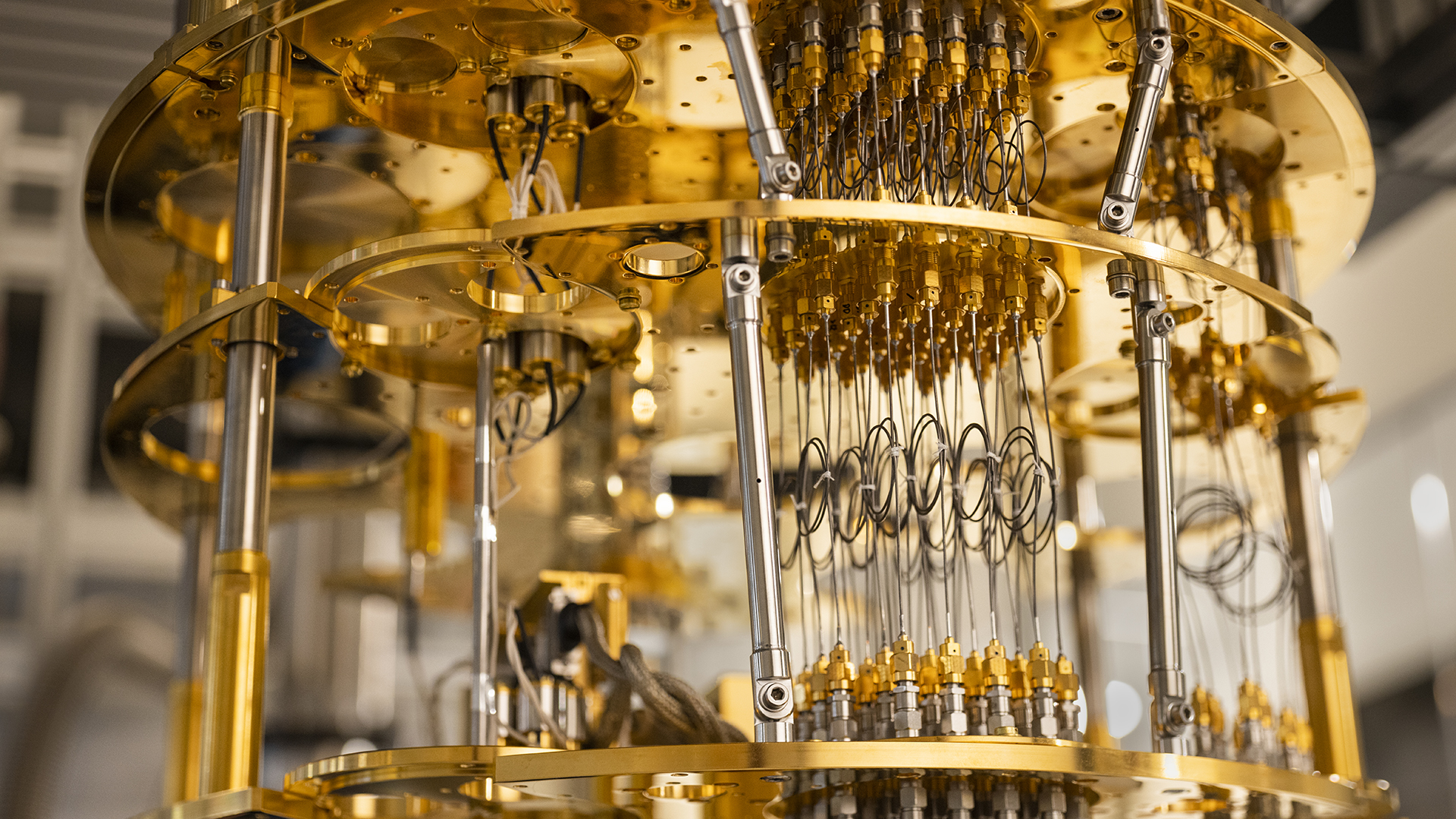

Now, that's quite an aggressive "roadmap". Of course, Microsoft has been on the road it has publicly committed to for a while now - the company has advanced its research into quantum computing in numerous other areas, even with the lack of a single, coherent, topological qubit being shown until last year. There are many areas of quantum computing that could be worked on while Microsoft waited for its topological qubits to come to pass - such as control mechanisms, noise reduction, deployment, and others. These are areas where the company's research was already aligned with the certainty that it would actually be able to produce topological qubits, entangle them, and keep them coherent.

"Today, we’re really at this foundational implementation level,” Svore told TechCrunch. "We have noisy intermediate-scale quantum machines. They’re built around physical qubits and they’re not yet reliable enough to do something practical and advantageous in terms of something useful. For science or for the commercial industry. The next level we need to get to as an industry is the resilient level. We need to be able to operate not just with physical qubits but we need to take those physical qubits and put them into an error-correcting code and use them as a unit to serve as a logical qubit.”

Essentially, Microsoft has to do the same work that other companies have been doing on their own qubits: Microsoft has to scale the number of qubits it can deploy; it has to make sure those qubits are resilient (stable) so they can be used for complex calculations; and it has to find ways to reduce the error rate. Microsoft expects it will achieve its quantum supercomputer once it can reach a rate of one million quantum operations per second, with a failure rate of one per trillion operations.
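For a sense of scale, the two thresholds quoted above can be combined with some quick arithmetic. The sketch below is ours, not Microsoft's: the function names are hypothetical, and it assumes failures are spread evenly across operations, which real hardware will not guarantee.

```python
# Back-of-the-envelope arithmetic for the two thresholds quoted above.
# Assumption (ours): failures are spread evenly across operations.
OPS_PER_SECOND = 1_000_000            # one million quantum operations per second
OPS_PER_FAILURE = 1_000_000_000_000   # one failure per trillion operations
SECONDS_PER_DAY = 86_400


def mean_seconds_between_failures() -> float:
    """Average run time, in seconds, before one operation is expected to fail."""
    return OPS_PER_FAILURE / OPS_PER_SECOND


def expected_failures(seconds: float) -> float:
    """Average number of failed operations over a run of the given length."""
    return OPS_PER_SECOND * seconds / OPS_PER_FAILURE


if __name__ == "__main__":
    days = mean_seconds_between_failures() / SECONDS_PER_DAY
    print(f"Roughly one failure every {days:.1f} days of continuous operation")
    print(f"Expected failures in a one-hour run: {expected_failures(3600):.4f}")
```

Under those assumptions, a machine sitting exactly at the threshold would average one failed operation per million seconds of continuous running, or a little under twelve days.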

It's currently unclear how many qubits will be required for that in Microsoft's topological qubit architecture, but nowadays, a ratio of around two error-correcting qubits is required for each working qubit (the value changes with the tech, as do the qubits' reliability, ease of manufacture, and many other factors).

Microsoft is essentially saying that they're as much of a contender in building the world's first quantum supercomputer as other quantum powerhouses. And it's not been an easy road: one of the sub-headings on Microsoft's blog post relating to the announcement reads "A high-risk, high-reward approach". And the company is undoubtedly home to some of the most talented quantum researchers the world has seen - that Microsoft reached its breakthrough on topological qubits is testament enough to that. But to be fair, so is IBM; so is Quantinuum, which has (interestingly) also dabbled in topological qubits to supercharge error correction on its trapped-ion qubits; so is Intel, which is also making great strides on designing, manufacturing, and delivering its QPUs (Quantum Processing Units); and so are other quantum-computing-focused companies.


Unless your product is vaporware or some modern rendition of snake oil, there's no way you are working in the quantum computing field without having some of the most impressive brains of our generations (the same is true for other fields of science, of course).

Yet some of Microsoft's competitors have already established their relative footholds in the industry. They've delivered Quantum Processing Units (QPUs), software-based solutions, or pure cloud-based access to quantum computing hardware. And IBM's own roadmap also leaves space for it to reach its own quantum supercomputer around the same time as Microsoft, even if the company hasn't been as razor-sharp in stating it would come within the decade as Microsoft was just yesterday.

All of these companies have been working with their qubits of choice for longer than Microsoft has - they're all bound to have found hurdles and unforeseen difficulties in bringing their quantum computing vision to the life it already has. Microsoft is sure to hit comparable roadblocks, even if its technology is different from others'. As any engineer will tell you, and as years of being hardware enthusiasts have taught us extensively, on-paper (or on-research) specifications don't always translate into the real world.

But then, that's where Microsoft being a two-trillion-dollar company comes into play. There's nothing like huge amounts of funding to iron out the wrinkles, is there?

Svore finished by saying that Microsoft is well on its way to building its reliable qubits. The company expects these to be around 10 nm in size each, which, while small, isn't as small as the silicon qubits Intel has already got spinning and working for research within its Tunnel Falls QPU. But to be fair, the world of quantum doesn't work in quite the same way as transistors would; some qubits being smaller doesn't automatically mean it's easier to simply mash more of them together on the road to a million qubits.

Interestingly, Microsoft also offered a new metric for measuring a quantum computer's performance - it seems that IBM's proposed CLOPS standard didn't align with Microsoft's view. Microsoft thinks its proposed rQOPS acronym (short for reliable Quantum Operations Per Second), which measures how many reliable operations can be executed in one second, is a better fit.
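To make the metric a little more concrete: as we understand Microsoft's definition (it is not spelled out in this article, so treat it as an assumption), rQOPS is the number of reliable logical qubits multiplied by the machine's logical clock speed, quoted alongside a logical error rate. The sketch below uses a hypothetical helper and purely illustrative numbers.

```python
def rqops(logical_qubits: int, logical_clock_hz: float) -> float:
    """Reliable quantum operations per second.

    Assumes rQOPS = Q * f, where Q is the number of logical qubits and
    f is the logical clock speed in Hz. This formula is our reading of
    Microsoft's definition, not something stated in the article.
    """
    if logical_qubits < 0 or logical_clock_hz < 0:
        raise ValueError("qubit count and clock speed must be non-negative")
    return logical_qubits * logical_clock_hz


if __name__ == "__main__":
    # Hypothetical machine: both figures are invented for illustration.
    print(rqops(logical_qubits=100, logical_clock_hz=10_000.0))
    # A machine with physical qubits only has no logical qubits at all.
    print(rqops(logical_qubits=0, logical_clock_hz=1_000_000.0))
```

Read this way, a machine with no logical qubits scores zero however fast its physical gates run, which is why a raw-speed metric and a reliability-weighted one can rank the same hardware very differently.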

No-one ever believed that Microsoft was sleeping in the shade of hopeful, topological qubits while other companies raced ahead with other, more well-understood qubit types. The company was simply coiling itself around its chosen technology. It now promises it'll deliver a quantum supercomputer - the ones that will wreak havoc on any standard, non-quantum cryptography - in under a decade. The company now hopes to race ahead of its competitors despite its slower start. But Microsoft now has a much clearer view of the road ahead; and the starting shot on quantum computing still hasn't stopped ringing in the industry's ears.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

xspkbstr

Two questions.

1st. Will it play Crysis?? (I know that question is old and over rated/hated but it still funny to me.)

2nd. When we do a software update or add a new device, will we be experiencing the same blue screen of dead?? -

So now can we say goodbye to Noisy Intermediate-Scale Quantum (NISQ) computing? Of course not, since today's quantum computers are at the first level, rQOPS Zero, so a long way to go. The new roadmap contains a total of six steps though.

Anyway, quantum systems that run on noisy physical qubits have already been realized, with quantum machines available in the cloud via Azure Quantum.

I think MS needs to focus on these two points first: reliable logical qubits, and engineering with scale, IMHO. So the next "logical" step for MS will be to engineer hardware-protected or topological qubits, to improve and finesse their quality, and to create a multi-qubit system.

Microsoft estimates that the first quantum supercomputer will need to deliver at least one million rQOPS with an error rate of 10⁻¹², or one in every trillion operations, to be able to provide valuable inputs. However, quantum computers of today only deliver an rQOPS value of zero, meaning that the industry as a whole has a LONG way to go before we see the first QS.

The ability to create and control "Majorana" quasiparticles is in no way an easy task. I think Majorana qubits have the benefit of being highly stable, particularly when compared to conventional methods, but they are also quite challenging to produce.

So, in my opinion, the whole industry needs to not only operate with physical qubits but also take those physical qubits and put them into an error-correcting code while using them as a unit to serve as a logical qubit.

Since logical qubits, formed from many physical qubits, are required for a true quantum supercomputer, the more stable the qubit, the easier it is to scale up towards supercomputer levels as you need fewer physical qubits per logical qubit, since other forms of qubits including spin, transmon, gatemon don't scale effectively.

FWIW, Microsoft actually put this topological qubit theory into practice last year (by creating and manipulating matter in a topological state). And it appears that in this state, qubits are more easily manipulated, more stable as well, and have a smaller footprint allowing for greater scale, or that's what they claim.

There are two significant hurdles to overcome IMO. First, to achieve resiliency in the logical qubits, and then to achieve scale. But with stability of its "Majorana" qubits it might be much easier to reach the resiliency level, and that stability will also help to achieve scale.

There are also important basic problems to solve, such as interference factors that influence the controllability and reliability of qubits, such as temperature, electromagnetism and material defects.

So we can now have three categories of Quantum Computing implementation levels.

Level 1 — Foundational (Noisy Intermediate Scale Quantum);

Level 2 — Resilient (reliable logical qubits);

Level 3 — Scale (Quantum supercomputers).

Btw, this paper is also worth giving a read:

https://journals.aps.org/prb/abstract/10.1103/PhysRevB.107.245423 -

xspkbstr said: Two questions.

1st. Will it play Crysis?? (I know that question is old and over rated/hated but it still funny to me.)

2nd. When we do a software update or add a new device, will we be experiencing the same blue screen of dead??

The "Can it run Crysis" meme will live on forever till eternity! :grinning: Maybe one day Quantum Chips will be used in gaming PCs too, and the whole PC Master Race (PCMR) will become a "Quantum Computer Master Race"/QCMR, lol.

Second question, I couldn't understand though. :unsure: -

USAFRet

Metal Messiah. said: The "Can it run Crysis" meme will live on forever till eternity !

Before Crysis was Quake, for testing the network performance. -

bit_user

Metal Messiah. said: Maybe one day Quantum Chips will used on a gaming PCs too, and the whole PC Master Race PCMR will become a "Quantum Computer Master Race"/QCMR, lol.

I don't indulge in such predictions. We'd first need to see good evidence of scalable, room-temperature qubits. As long as the only approaches proven to scale are ones which require near-zero temperatures and nearly complete isolation from EMI, it's not even worth joking about.

Metal Messiah. said: Second question, I couldn't understand though. :unsure:

It was a joke about automatic updates and Blue Screen of Death (BSoD), because this is Microsoft we're talking about. -

bit_user

Metal Messiah. said: That was also a joke, not prediction.

Yeah, and to do so is your right. But, the reason I don't is the pervasive misunderstanding of QC by people on this forum. Based on what I've seen in comment threads on QC articles, I think probably more than half of the active members on here probably expect to have a quantum computer on their desk or in their pocket within the next 2 decades.

Too many people seem to just blindly assume QC will follow the same general trajectory as digital computing. In terms of size, cost, and practicality. They even seem to think quantum computers are effectively just faster equivalents of classical digital computers. -


Interestingly, Microsoft also offered a new metric for measuring a quantum computer's performance - it seems that IBM's proposed CLOPS standard didn't align with Microsoft's view.

Yes, actually IBM's CLOPS mainly focuses on a quantum system's speed: it carries out timing measurements and identifies specific speed bottlenecks in the runtime, such as idle times between consecutive circuits, using Qiskit Runtime. But MS appears to take a different approach, since apart from scale and quality, its focus is on the reliable operations that can be executed.

So that they can measure how many reliable operations can be executed in a second. -

BTW, after hardware-protected qubits, I also assume the MS team is going to work on entangling these qubits and operating them through braiding.

After all, IBM, IonQ and others are aiming for similar feats, although by using more established methods for building their qubits. So we're in a bit of an arms race right now to lead beyond the NISQ era. -

NinoPino

Will be the most expensive paperweight ever built. It will be able to generate real random numbers at the speed of light.

A revolution.