Cerebras Slays GPUs, Breaks Record for Largest AI Models Trained on a Single Device

Democratizing large AI models without HPC scaling requirements.

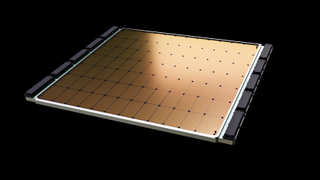

Cerebras, the company behind the world's largest accelerator chip, the Wafer Scale Engine 2 that powers its CS-2 system, has just announced a milestone: training the world's largest NLP (Natural Language Processing) AI model on a single device. While that in itself could mean many things (it wouldn't be much of a record to break if the previous largest model had been trained on a smartwatch, for instance), the AI model trained by Cerebras reached a staggering, and unprecedented, 20 billion parameters, all without the workload having to be scaled across multiple accelerators. That's enough to fit the internet's latest sensation, OpenAI's 12-billion-parameter text-to-image generator, DALL-E.

The most important bit in Cerebras' achievement is the reduction in infrastructure and software complexity requirements. Granted, a single CS-2 system is akin to a supercomputer all on its own. The Wafer Scale Engine 2, which, as the name implies, is etched onto a single 7 nm wafer (usually enough for hundreds of mainstream chips), features a staggering 2.6 trillion 7 nm transistors, 850,000 cores, and 40 GB of integrated cache in a package consuming around 15 kW.
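For a sense of scale, some back-of-the-envelope arithmetic helps. Assuming 2 bytes per parameter (FP16/BF16 weights), a 20-billion-parameter model's weights alone take up 40 GB, the same as the integrated memory figure above, before counting gradients or optimizer state. The sketch below also uses the commonly cited rule of thumb of roughly 16 bytes per parameter for mixed-precision training with the Adam optimizer; these byte counts are illustrative assumptions, not Cerebras figures, and the parameter counts are the nominal ones in the model names Cerebras lists.

```python
# Back-of-the-envelope memory arithmetic (illustrative assumptions, not Cerebras figures):
# - weights stored in FP16/BF16 at 2 bytes per parameter
# - mixed-precision Adam training at roughly 16 bytes per parameter
#   (FP16 weights + FP16 gradients + FP32 master weights + two FP32 Adam moments)
# - decimal gigabytes (1 GB = 1e9 bytes)

WEIGHT_BYTES_PER_PARAM = 2
TRAINING_BYTES_PER_PARAM = 16
WSE2_MEMORY_GB = 40  # integrated memory figure quoted in the article


def weights_gb(num_params: float) -> float:
    """Size of the FP16 weights alone, in GB."""
    return num_params * WEIGHT_BYTES_PER_PARAM / 1e9


def training_state_gb(num_params: float) -> float:
    """Rough size of weights + gradients + Adam optimizer state, in GB."""
    return num_params * TRAINING_BYTES_PER_PARAM / 1e9


# Nominal parameter counts taken from the model names Cerebras lists.
MODELS = {
    "GPT-3XL 1.3B": 1.3e9,
    "GPT-J 6B": 6e9,
    "GPT-3 13B": 13e9,
    "GPT-NeoX 20B": 20e9,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        print(
            f"{name:>13}: weights {weights_gb(params):5.1f} GB, "
            f"training state ~{training_state_gb(params):6.1f} GB "
            f"(on-chip: {WSE2_MEMORY_GB} GB)"
        )
```

Under these assumptions, the largest of those models already needs its entire 40 GB budget just for weights, and on the order of 320 GB once training state is included, which is why how memory is provisioned matters as much as raw compute.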

Fitting NLP models of up to 20 billion parameters on a single chip significantly reduces the overhead of training across thousands of GPUs (and the associated hardware and scaling requirements), while doing away with the technical difficulties of partitioning models across them. Cerebras says this is "one of the most painful aspects of NLP workloads," sometimes "taking months to complete."

It's a bespoke problem, unique to each neural network being processed, to the specs of each GPU, and to the network that ties it all together, and all of these elements must be worked out in advance, before the first training run is ever started. And the resulting setup can't be ported across systems.
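To give a flavor of that partitioning work, here is a deliberately simplified sketch of just one sub-problem: splitting a model's layers, in order, across a fixed number of GPUs so that the most heavily loaded GPU holds as few parameters as possible. It is the classic linear-partition problem, solved here by binary search over the per-device parameter budget. Real pipeline-parallel setups must also balance activation memory, compute time, and interconnect traffic, none of which is modeled; the function names and layer sizes are hypothetical.

```python
from typing import List


def fits(layer_params: List[int], num_devices: int, cap: int) -> bool:
    """True if the layers can be split, in order, into at most
    num_devices contiguous groups whose parameter counts are each <= cap."""
    devices_used, load = 1, 0
    for p in layer_params:
        if p > cap:
            return False  # a single layer is too big for any device
        if load + p > cap:
            devices_used += 1  # start filling the next device
            load = p
        else:
            load += p
    return devices_used <= num_devices


def min_max_load(layer_params: List[int], num_devices: int) -> int:
    """Binary-search the smallest per-device parameter budget that works."""
    lo, hi = max(layer_params), sum(layer_params)
    while lo < hi:
        mid = (lo + hi) // 2
        if fits(layer_params, num_devices, mid):
            hi = mid
        else:
            lo = mid + 1
    return lo


def partition(layer_params: List[int], num_devices: int) -> List[List[int]]:
    """Assign contiguous layer indices to devices, minimizing the largest
    per-device parameter count. May use fewer than num_devices groups."""
    cap = min_max_load(layer_params, num_devices)
    groups: List[List[int]] = []
    current: List[int] = []
    load = 0
    for i, p in enumerate(layer_params):
        if current and load + p > cap:
            groups.append(current)  # current device is full; move on
            current, load = [], 0
        current.append(i)
        load += p
    groups.append(current)
    return groups


if __name__ == "__main__":
    # Hypothetical per-layer parameter counts (in millions): large embedding
    # and output layers around a stack of identical transformer blocks.
    layers = [50, 12, 12, 12, 12, 12, 12, 50]
    for device, group in enumerate(partition(layers, num_devices=4)):
        print(f"GPU {device}: layers {group}, {sum(layers[i] for i in group)}M params")
```

Even this toy version has to be rerun whenever the model, the GPU count, or the per-GPU memory changes, which hints at why such hand-tuned partitioning doesn't carry over from one system to another.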

Pure numbers may make Cerebras' achievement look underwhelming - OpenAI's GPT-3, an NLP model that can write entire articles that may sometimes fool human readers, features a staggering 175 billion parameters. DeepMind's Gopher, launched late last year, raises that number to 280 billion. The brains at Google Brain have even announced the training of a trillion-parameter-plus model, the Switch Transformer.

“In NLP, bigger models are shown to be more accurate. But traditionally, only a very select few companies had the resources and expertise necessary to do the painstaking work of breaking up these large models and spreading them across hundreds or thousands of graphics processing units,” said Andrew Feldman, CEO and Co-Founder of Cerebras Systems. “As a result, only very few companies could train large NLP models – it was too expensive, time-consuming and inaccessible for the rest of the industry. Today we are proud to democratize access to GPT-3XL 1.3B, GPT-J 6B, GPT-3 13B and GPT-NeoX 20B, enabling the entire AI ecosystem to set up large models in minutes and train them on a single CS-2.”

Yet just like clock speeds in the world's best CPUs, the number of parameters is but one possible indicator of performance. Recent work has focused on achieving better results with fewer parameters: DeepMind's Chinchilla, for instance, routinely outperforms both GPT-3 and Gopher with just 70 billion of them. The aim is to work smarter, not harder. As such, Cerebras' achievement is more important than it might first appear: researchers are bound to fit increasingly capable models into a single system, and the company also says its system has the potential to support models with "hundreds of billions even trillions of parameters".


This explosion in the number of workable parameters relies on Cerebras' Weight Streaming technology, which decouples compute from memory footprint, allowing memory to be scaled to whatever amount is needed to store the rapidly increasing number of parameters in AI workloads. According to Cerebras, this cuts set-up times from months to minutes and makes it easy to switch between models such as GPT-J and GPT-Neo "with a few keystrokes".
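Cerebras doesn't detail Weight Streaming's internals in this announcement, so the following is only a toy illustration of the general idea of decoupling memory from compute, not Cerebras' implementation. All layer weights live in a hypothetical external store, and the compute side fetches and holds one layer at a time, so the model's total size is bounded by the store's capacity rather than by on-device memory. `ExternalWeightStore` and `streamed_forward` are hypothetical names, and the sketch covers a forward pass only; real training would also have to handle gradients and weight updates, which are omitted here.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]
Vector = List[float]


class ExternalWeightStore:
    """Hypothetical off-device memory holding every layer's weights.
    Its capacity can grow independently of the compute device."""

    def __init__(self, layers: Dict[int, Matrix]):
        self._layers = layers  # keys are layer indices 0..n-1

    def num_layers(self) -> int:
        return len(self._layers)

    def total_params(self) -> int:
        return sum(len(row) for w in self._layers.values() for row in w)

    def fetch(self, index: int) -> Matrix:
        """Stream one layer's weights to the compute side."""
        return self._layers[index]


def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def streamed_forward(store: ExternalWeightStore, x: Vector) -> Tuple[Vector, int]:
    """Run a forward pass holding only one layer's weights on the compute
    side at a time. Returns the output and the peak number of weights
    resident on the compute side."""
    peak_resident = 0
    for i in range(store.num_layers()):
        w = store.fetch(i)  # replaces the previous layer's weights
        peak_resident = max(peak_resident, sum(len(row) for row in w))
        x = relu(matvec(w, x))
    return x, peak_resident


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    store = ExternalWeightStore({i: identity for i in range(3)})
    out, peak = streamed_forward(store, [1.0, -2.0])
    print(out, peak, store.total_params())
```

In the usage example, the store holds 12 weights across three layers, but the compute side never holds more than one layer's 4; adding layers grows the store, not the peak resident footprint.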

“Cerebras’ ability to bring large language models to the masses with cost-efficient, easy access opens up an exciting new era in AI. It gives organizations that can’t spend tens of millions an easy and inexpensive on-ramp to major league NLP,” said Dan Olds, Chief Research Officer, Intersect360 Research. “It will be interesting to see the new applications and discoveries CS-2 customers make as they train GPT-3 and GPT-J class models on massive datasets.”

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.
