GeForce GT 240: Low Power, High Performance, Sub-$100

GeForce GT 240 Specifications And Hardware

Let's start with a look at the specifications of Nvidia's new GeForce GT 240-based graphics cards:

| Header Cell - Column 0 | GeForce GT 240 |

|---|---|

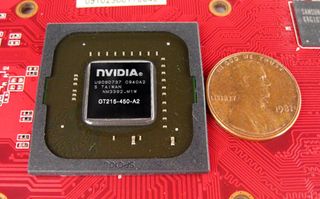

| GPU Designation | GT215 |

| Fabrication Process | 40nm |

| Graphics Clock (Texture and ROP units) | 550 MHz |

| Processor Clock (Shader Units) | 1,360 MHz |

| Memory Clock (Clock Rate/Data Rate) | 850 MHz (3,400 MHz effective) GDDR51,000 MHz (2,000 MHz effective) DDR3 |

| Total Video Memory | 1GB, 512MB |

| Memory Interface | 128-bit |

| Total Memory Bandwidth | 54.4 GB/s (GDDR5)32.0 GB/s (DDR3) |

| Stream Processors | 96 |

| ROP units | 8 |

| Texture Filtering Units | 32 |

| Microsoft DirectX/Shader model | 10.1/4.1 |

| OpenGL | 3.2 |

| PhysX Ready | Yes |

| Video Format Support for GPU Decode Acceleration | MPEG-2, MPEG-4 Part 2 Advanced Simple Profile, H.264, VC1, WMV, DivX version 3.11 and later |

| HD Digital Audio over PCI Express | Yes |

| Connectors | DVI, VGA, HDMI |

| Form Factor | Single-Slot |

| Power Connectors | None |

| HDMI version | 1.3a |

| DisplayPort | 1.1 |

| Dual Link DVI | Yes |

| Bus Support | PCIe 2.0 |

| Max Board power | 70 watts |

| GPU Thermal Threshold | 105 degrees C |
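The unit counts and graphics clock listed above also imply theoretical peak fill rates. Here is a minimal Python sketch, assuming the usual back-of-the-envelope rule that each texture unit handles one texel and each ROP unit writes one pixel per graphics clock. The function name `fill_rate_giga` is ours, for illustration only, and real-world throughput will be lower than these ceilings.

```python
def fill_rate_giga(units: int, clock_mhz: float) -> float:
    """Theoretical peak rate in billions of operations per second.

    Assumes one operation per unit per clock (a simplification).
    """
    return units * clock_mhz / 1000.0


# Values taken from the specification table.
GRAPHICS_CLOCK_MHZ = 550
TEXTURE_UNITS = 32
ROP_UNITS = 8

texel_rate = fill_rate_giga(TEXTURE_UNITS, GRAPHICS_CLOCK_MHZ)
pixel_rate = fill_rate_giga(ROP_UNITS, GRAPHICS_CLOCK_MHZ)

print(f"Texture fill rate: {texel_rate:.1f} GTexels/s")
print(f"Pixel fill rate:   {pixel_rate:.1f} GPixels/s")
```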

Just like the G 210 and GT 220 before it, the new GeForce GT 240 is based on the same GT200 architecture that spawned the GeForce GTX 200-series. We're not going to delve too deep into the GT200 architecture, since we've done that already in our GeForce GTX 280 launch article, which you can check out here.

We will go over the major specifications, however. The GeForce GTX 285 has 10 texture-processing clusters (TPCs) with 24 individual streaming processors (SPs) (or cores) in each one. Each TPC also has eight texture-management units (TMUs). There are eight 64-bit raster-operator partitions (ROPs) capable of handling eight raster operations per clock cycle each. As a result, the GTX 285 sports a total of 240 processor cores, 80 texture units, and eight ROPs capable of handling 64 pixels per clock, with all of the ROPs contributing to a 512-bit memory bus.

For comparison, the new GeForce GT 240 has four TPCs, each containing 24 SPs, for a total of 96 processor cores. As on the GeForce GTX 285, each TPC sports eight TMUs, for a total of 32 texture units. Two 64-bit ROPs, each capable of handling four pixels per clock, work together to give the GPU a 128-bit memory interface and the capacity to handle eight raster operations per clock. Therefore, we expect the GT 240 to serve up less than half of the processing power of a GeForce GTX 285.
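The unit totals above follow directly from the per-cluster counts. The sketch below reproduces that arithmetic in Python. The `Gpu` class and its field names are hypothetical, chosen only for illustration, and the comparison counts units without accounting for clock speeds.

```python
from dataclasses import dataclass

# Per-cluster figures quoted in the article for this architecture.
SPS_PER_TPC = 24
TMUS_PER_TPC = 8
BITS_PER_ROP_PARTITION = 64


@dataclass(frozen=True)
class Gpu:
    name: str
    tpcs: int
    rop_partitions: int

    @property
    def stream_processors(self) -> int:
        return self.tpcs * SPS_PER_TPC

    @property
    def texture_units(self) -> int:
        return self.tpcs * TMUS_PER_TPC

    @property
    def memory_bus_bits(self) -> int:
        return self.rop_partitions * BITS_PER_ROP_PARTITION


gtx_285 = Gpu("GeForce GTX 285", tpcs=10, rop_partitions=8)
gt_240 = Gpu("GeForce GT 240", tpcs=4, rop_partitions=2)

for gpu in (gtx_285, gt_240):
    print(f"{gpu.name}: {gpu.stream_processors} SPs, "
          f"{gpu.texture_units} TMUs, {gpu.memory_bus_bits}-bit bus")

# Ratio of shader units only; clock speeds are ignored here.
sp_ratio = gt_240.stream_processors / gtx_285.stream_processors
print(f"GT 240 has {sp_ratio:.0%} of the GTX 285's stream processors")
```

By unit count alone, the GT 240 carries 40% of the GTX 285's stream processors, which is consistent with the "less than half" expectation.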

The new GeForce GT 240 is also fabricated using TSMC's reportedly problematic 40nm process, which should allow Nvidia to pull greater profit margins from this sub-$100 GPU compared to its 55nm and 65nm cousins. Of course, it shares some other features with the G 210 and GT 220, such as DirectX 10.1 and Shader Model 4.1 support, eight-channel LPCM output support, and enhanced playback of DivX, VC-1, and MPEG-2 video codecs. The GeForce GT 240 is not only certified for CUDA and PhysX use, but is also GeForce 3D Vision-ready.

The biggest differentiator favoring Nvidia's GeForce GT 240 is its memory support. While the GeForce GT 220 is limited to DDR2 and GDDR3, the new GeForce GT 240 can be coupled with either DDR3 or GDDR5. This is very important, as GDDR5 offers two times the theoretical memory bandwidth per clock compared to DDR2 or GDDR3. The beauty of this is that it allows the GeForce GT 240 to offer similar memory bandwidth to GDDR3-equipped cards sporting a 256-bit memory interface (like the GeForce 9600 GT), but it keeps memory costs down. GDDR5 was one of AMD's aces when it launched the Radeon HD 4800-series cards, and this is Nvidia's first use of the technology. It's also the first time we've seen GDDR5 used on a card destined for the sub-$100 market. Nvidia's implementation of GDDR5 helps bridge the performance gap between 128-bit cards like the Radeon HD 4670 and 256-bit cards like the GeForce 9600 GT.
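To see why GDDR5 on a 128-bit bus lands close to GDDR3 on a 256-bit bus, peak memory bandwidth can be estimated as bus width in bytes multiplied by the effective data rate. The Python sketch below uses the GT 240 figures from the specification table. The 1,800 MHz effective GDDR3 rate for the GeForce 9600 GT is our assumption based on its reference specification and is not stated in this article. The function name is illustrative.

```python
def bandwidth_gb_s(bus_width_bits: int, effective_rate_mhz: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bytes per transfer (bus width / 8) x millions of transfers per second.
    """
    return bus_width_bits / 8 * effective_rate_mhz / 1000.0


cards = {
    "GeForce GT 240 (GDDR5)": (128, 3400),
    "GeForce GT 240 (DDR3)": (128, 2000),
    # Assumption: reference 9600 GT memory at 1,800 MHz effective.
    "GeForce 9600 GT (GDDR3)": (256, 1800),
}

results = {name: bandwidth_gb_s(bits, rate) for name, (bits, rate) in cards.items()}

for name, gb_s in results.items():
    print(f"{name}: {gb_s:.1f} GB/s")
```

The first two results match the bandwidth figures in the specification table, and under the stated assumption the 256-bit GDDR3 card comes out only slightly ahead of the 128-bit GDDR5 configuration.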

The GeForce GT 240 distinguishes itself as the only reference card in this performance range without a dedicated power connector. This really brings the fight to the Radeon HD 4670, previously the undisputed performance king of reference cards without a PCIe power cable requirement.


Update: Wait a Minute. No SLI?

It's not all good news, however. The GeForce GT 240 lacks a feature that both the GeForce 9600 GSO and GT have offered since they were introduced: an SLI bridge connector.

Hoping that SLI might be supported over the PCI Express bus, we tried running our two test samples together. However, the driver panel wouldn't show us the option to enable SLI. Confused, we asked Nvidia for a bit of clarification. The company let us know that the GeForce GT 240 does not support SLI, and that Nvidia "typically hasn’t supported SLI for sub-$99 products, as users typically upgrade instead of buying a second card."

While Nvidia's position is certainly understandable, we find the news disappointing, as even low-end cards like the GeForce 9500 GT are often equipped with SLI support.

As for users upgrading instead of buying a second card, Nvidia is absolutely correct. Knowing that the GeForce GT 240 will be replacing the GeForce 9600 GSO and GT, we had a look at Steam's Hardware Survey. According to the survey, only about 2% of gamers are running multi-GPU systems, and the GeForce 9600 GSO and GT represent about 6% of graphics cards out there. By our math, and assuming multi-GPU use is spread evenly across card models, that means roughly 12 in every 10,000 gamers are running more than one GeForce 9600 in SLI.
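The estimate is simply the product of the two survey shares. The short Python sketch below reproduces it. It rests on an assumption the survey itself does not confirm: that multi-GPU adoption is independent of which card a gamer owns. The variable names are ours.

```python
# Approximate shares quoted from the Steam Hardware Survey.
MULTI_GPU_SHARE = 0.02   # gamers running multi-GPU systems
GF9600_SHARE = 0.06      # GeForce 9600 GSO and GT share of graphics cards

# Assumption: the two properties are statistically independent,
# so the joint share is the product of the individual shares.
joint_share = MULTI_GPU_SHARE * GF9600_SHARE
per_10k = round(joint_share * 10_000)

print(f"Estimated share: {joint_share:.2%}")
print(f"Roughly {per_10k} in every 10,000 gamers")
```

If budget-card owners are less likely than average to run two cards, the real figure would be lower still.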

Having said that, the GeForce GT 240 is certainly powerful enough to warrant SLI, and the lack of support for this new model is a disappointment since it is featured on the GeForce 9600-series cards being replaced.


rodney_ws: Well, it appears I might be the first poster... and that's pretty indicative of how exciting this card truly is. At any price point it's just hard to get excited when a company is just re-badging/re-naming older cards. DDR5? Oh yay! Now about that 128 bit bus...

Ramar: I really can't justify this card when a Sparkle 9800GT is on newegg for the same price or less than these cards. Perhaps if energy costs are really important to you?

Uncle Meat: "Before we get into the game results, something we want to stress is that all of the GeForce cards we used for benchmarking ended up being factory overclocked models, but that our Diamond Radeon HD 4670 sample is clocked at reference speeds."

The memory on the Diamond Radeon HD 4670 is clocked 200Mhz below reference speeds.

rodney_ws: Also, the 9600 GSO was on the Egg for $35 after MIR a few weeks/months back. No, that's not a top-tier card, but at $35 that's practically an impulse buy.

http://store.steampowered.com/hwsurvey/videocard/

Looking at what cards people actually have (8800gt mostly), I think there are very few that would want to upgrade to this. Give us something better, Nvidia! The only reason why Ati doesn't have a 90% market share right now is that they can't make 5800s and 5700s fast enough.

jonpaul37: the card is pointless, it's Nvidia's attempt to get some $$$ before an EP!C FA!L launch of Fermi


JofaMang: No SLI means they want to force higher profit purchases from those looking for cheap multi-card setups. That's dirty. I wonder how two 4670s compare to one of these for the damn near the same price?

KT_WASP: I too noticed the discrepancy in your stated numbers for the Diamond 4670. In the article it states 750MHz / 800MHz (1600 effective). But then in your chart it states 750MHz / 1000MHz (2000 effective).

So, which one was used? Reference is 750/1000 (2000 eff.) Diamond had two versions, I believe, one at the reference speed and one at 750/900 (1800 eff.)

Just trying to understand you pick so we could better understand the results.
