Part 4: Avivo HD vs. PureVideo HD

Resolution Benchmarks: 1080p vs. 720p

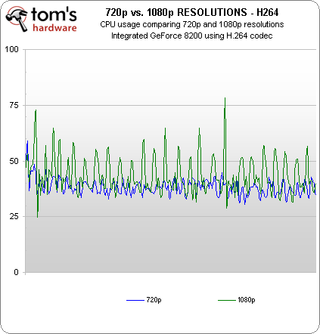

First off, since we noticed smoother playback on the GeForce 8200 at lower resolutions, let’s examine the CPU utilization difference between 720p (1280x720) and 1080p (1920x1080):

Now we’re getting somewhere. While overall CPU utilization didn’t drop by much, it is far more consistent at the lower 720p resolution. As a result, we no longer see the dropped frames and skipping that marred playback at 1080p.
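To make the distinction between average load and consistency concrete, here is a minimal Python sketch that summarizes a CPU-utilization trace by its mean, its standard deviation, and a count of samples at or above a spike threshold. The function name, the 90% threshold, and the toy traces are assumptions for illustration only; they are not the measurements behind our charts.

```python
from statistics import mean, pstdev

def utilization_summary(samples, spike_threshold=90.0):
    """Summarize a CPU-utilization trace given as percentages.

    Returns (mean, population standard deviation, number of samples at or
    above spike_threshold). The 90% default is an arbitrary, hypothetical cutoff.
    """
    if not samples:
        raise ValueError("need at least one sample")
    spikes = sum(1 for s in samples if s >= spike_threshold)
    return mean(samples), pstdev(samples), spikes

# Toy traces, not measured data: both average 60%, but only one is steady.
steady = [60, 60, 60, 60]
spiky = [25, 95, 25, 95]

print(utilization_summary(steady))  # mean 60, std dev 0.0, 0 spikes
print(utilization_summary(spiky))   # mean 60, std dev 35.0, 2 spikes
```

Two traces with the same average can behave very differently: the spiky one can still stall playback whenever a spike saturates the CPU, which is why the mean alone can hide the problem.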

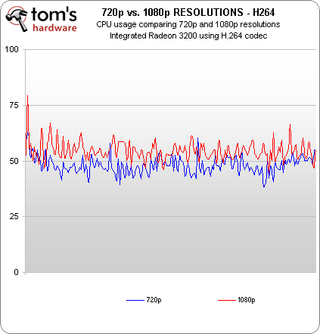

Let’s compare this to what happens to the Radeon 3200 when we lower the resolution:

There doesn’t appear to be any CPU spiking with the Radeon 3200, even at the higher resolution. Lowering the resolution reduced CPU utilization a bit, but playback appeared skip-free at either resolution.

Based on CPU utilization, it looks like the GeForce 8200 can decode the demanding H.264 content just fine, but once the resolution is raised past 720p, a bottleneck somewhere else in the pipeline causes playback to suffer.
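For scale, the arithmetic below shows how much more work each 1080p frame represents compared with 720p. It is a minimal sketch that only counts pixels; it does not identify where the GeForce 8200's bottleneck actually lies, and the helper name is hypothetical.

```python
# Frame sizes for the two resolutions tested in this section.
RESOLUTIONS = {"720p": (1280, 720), "1080p": (1920, 1080)}

def pixels_per_frame(width: int, height: int) -> int:
    """Number of pixels in one frame at the given resolution."""
    return width * height

p720 = pixels_per_frame(*RESOLUTIONS["720p"])    # 921,600 pixels
p1080 = pixels_per_frame(*RESOLUTIONS["1080p"])  # 2,073,600 pixels
ratio = p1080 / p720                              # 2.25

print(f"720p: {p720:,} px, 1080p: {p1080:,} px, ratio: {ratio}")
```

Any work done per pixel grows by that 2.25x factor, so a stage that copes comfortably at 720p can run out of headroom at 1080p.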

Frankly, this is a serious blow to the integrated GeForce 8200’s potential as a home theater PC platform. While it did play back two of the three titles we tested at 1080p, having to drop to 720p for demanding titles is a significant limitation, especially since the Radeon 3200 has no such problem.

With this in mind, we are curious how these platforms will perform with CPUs slower than the Athlon 4800+. Is either of these systems a viable HTPC platform with a cheaper, single-core processor?


abzillah Don't the 780G chips have hybrid technology? It would have been great to see what kind of performance difference adding a discrete card to a 780G chip would make. Motherboards with integrated graphics cost about the same as those without, so I would choose integrated graphics plus a discrete graphics card for hybrid performance.

liemfukliang Wow, you should update this article with a Part 5 on Tuesday, when the NDA on the 9300 lifts: 9300 vs. 790GX. Is this Nvidia graphics chip also defective?

TheGreatGrapeApe Nice job, Don!

Interesting seeing the theoretical HQV difference being a realistic nil due to playability (does image enhancement of a skipping image matter?)

I'll be linking to this one again.

Next round HD4K vs GTX vs GF9 integrated, complete with dual view decoding. >B~)


kingraven Great article. I especially liked the decrypted video benchmarks, as I was expecting a much bigger difference.

I also expected the single core to handle it better, as I use an old laptop with a 1500 MHz Pentium M and an ATI 9600 as an HTPC, and it plays nearly all the HD media I throw at it smoothly (including 1080p) through ffdshow. Note that the files are usually Matroska or AVI, and the codecs vary but are usually H.264.

I admit that since it's an old PC without Blu-ray or HD DVD, I have no idea how the "real deal" would perform; probably as badly as, or worse than, the article says :P

azrael I have a Gigabyte GA-MA78GM-S2H motherboard (780G).

I just bought a Samsung LE46A656 TV and I have the following problem:

When I connect the TV with a standard VGA (D-SUB) cable, I can use Full HD (1920 X 1080) correctly.

If I use HDMI or DVI (with a DVI-> HDMI adaptor), I cannot use 1920 X 1080 correctly.

The screen has black borders on all sides (about 3 cm) and the picture looks wrong, as if the TV were not being driven at its native resolution and the 1920 X 1080 signal had been shrunk to fit the area visible on the screen.

I also tried my old laptop (also ATI, x700) and had the same problem.

I thought that my TV was defective, but then I tried an old NVIDIA card I had and everything worked perfectly!!!

Full 1920 X 1080 with my HDMI input (with DVI-> HDMI adaptor).

I don't know if this is an ATI driver problem or a general ATI hardware limitation, but I WILL NEVER BUY ATI AGAIN.

They claim HDMI with full HD support. Well they are lying!


That's funny, bit-tech had some rather different HQV test numbers for the 780G board.

http://www.bit-tech.net/hardware/2008/03/04/amd_780g_integrated_graphics_chipset/10

What's going on here? I assume bit-tech tweaked player settings to improve results, and you guys left everything at default?


puet What about the image enhancements in the HQV test that are possible with a 780G and a Phenom processor? Would this combination stand up against the discrete solution chosen?

This could be an interesting Part V in the article series.

genored (quoting azrael's HDMI complaint above):

LEARN TO DOWNLOAD DRIVERS

Guys, I own this Gigabyte board. HDCP works over DVI, because that's what I use at home, although I go from DVI on the motherboard to HDMI on the TV (don't ask why, it's just the cable I had). I don't have AnyDVD, so I know that it works.

As for the guy having issues with HDMI on the onboard ATI 3200: dude, there were some problems with the initial BIOS. Update the BIOS, update your drivers, and you won't have a problem. My brother has the same board too, and he uses HDMI and it works just fine. Noob...
