CEVA Unveils Next-Generation Deep Learning And Vision Platform

Ceva announced its fifth-generation vision platform, which includes the new Ceva-XM6 DSP core, hardware accelerators, a neural network software framework, and a broad set of algorithms. According to Ceva, the new platform will let next-generation smartphones, autonomous cars, drones, and surveillance cameras perform vision processing and machine learning operations two to eight times faster than the previous XM4-based platform.

As developers rely more on machine learning software, there is a growing need for chips that can perform vision processing and machine learning tasks on the device in real time. An internet connection may not make sense for every type of device, and even when it does, the round-trip latency of sending data to a company's servers for processing and waiting for the result may be too long for practical applications. For some tasks or types of devices, it is preferable to do all of the computation locally and in real time. We should see this type of processor in more devices as vision processors improve.

Smartphone makers may be the most interested in adopting vision processors, because they already tend to use coprocessors alongside a CPU and a GPU. Mobile device makers are also looking to differentiate themselves through their computational capabilities, and a high-performance vision coprocessor could help them stand apart from competitors that rely solely on Qualcomm or MediaTek SoCs for computation.

Ceva-XM6

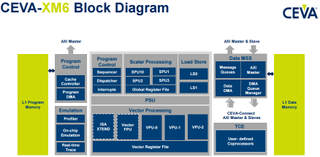

The Ceva-XM6 DSP promises up to an 8x performance increase for some hardware-accelerated tasks, a 3x increase for vector-based computer vision kernels, and a 2x average gain across all kernels.

Ceva added the following technical improvements to provide the increased performance:

- A new vector processing unit architecture that ensures above 95% MAC utilization, which the company claimed is unmatched in the industry
- An enhanced parallel scatter-gather memory load mechanism, which further improves the performance of vision algorithms
- Sliding Window 2.0, a mechanism that takes advantage of pixel overlap in image processing and helps achieve higher utilization for a wider variety of neural networks
- An optional 32-way SIMD vector floating-point unit that includes FP16 support and enhancements for major non-linear operations
- An enhanced 3D data processing scheme for accelerated CNN (convolutional neural network) performance
- A 50% improvement in control code performance versus the Ceva-XM4
- A new scalar unit that further reduces code size
- Multi-core and system integration support
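Ceva has not published how Sliding Window 2.0 works internally. As a generic illustration of the idea it names (reusing pixels that overlap between neighbouring filter windows), the Python sketch below computes one output row of a 3x3 convolution two ways. The function names `conv3x3_row_naive` and `conv3x3_row_sliding` are hypothetical, and counting scalar pixel "loads" is only a stand-in for the memory traffic a hardware line buffer or register file would save; this is not Ceva's actual mechanism.

```python
from collections import deque
from typing import List, Tuple

Image = List[List[float]]
Kernel = List[List[float]]


def conv3x3_row_naive(img: Image, k: Kernel, row: int) -> Tuple[List[float], int]:
    """One output row of a 3x3 'valid' cross-correlation (the CNN convention),
    reading all nine input pixels afresh for every output pixel."""
    width = len(img[0])
    out: List[float] = []
    loads = 0
    for x in range(1, width - 1):  # x is the centre column of the window
        acc = 0.0
        for r in range(3):
            for c in range(3):
                acc += k[r][c] * img[row - 1 + r][x - 1 + c]
                loads += 1
        out.append(acc)
    return out, loads


def conv3x3_row_sliding(img: Image, k: Kernel, row: int) -> Tuple[List[float], int]:
    """Same result, but neighbouring windows share two of their three columns,
    so only one new column (three pixels) is loaded per output pixel."""
    width = len(img[0])
    loads = 0

    def load_column(x: int) -> List[float]:
        nonlocal loads
        loads += 3
        return [img[row - 1][x], img[row][x], img[row + 1][x]]

    # Holds the three columns currently under the kernel; appending a new
    # column automatically evicts the oldest one (maxlen=3).
    window = deque([load_column(0), load_column(1)], maxlen=3)
    out: List[float] = []
    for x in range(2, width):  # x is the rightmost column of the window
        window.append(load_column(x))
        acc = 0.0
        for c in range(3):
            for r in range(3):
                acc += k[r][c] * window[c][r]
        out.append(acc)
    return out, loads


if __name__ == "__main__":
    img = [[8 * r + x for x in range(8)] for r in range(3)]  # 3 rows x 8 columns
    ones = [[1, 1, 1] for _ in range(3)]
    print(conv3x3_row_naive(img, ones, 1))
    print(conv3x3_row_sliding(img, ones, 1))
```

For an 8-pixel-wide row, both versions produce the same outputs, but the naive one reads 54 pixels while the sliding one reads 24. On a DSP, cutting that memory traffic is one way to keep the MAC units from stalling on loads.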

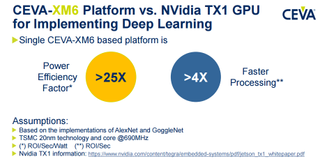

Ceva also said that its new vision platform holds a significant power-efficiency lead over GPUs when running neural networks. We've recently seen evidence from other vision processors and from Google's TPU that GPUs are not leading the way in neural network processing efficiency.


However, at least for now, GPUs are still the leading mainstream products that can fulfill the taxing performance requirements of fast neural network training. GPUs may not be the most efficient, but they tend to offer much higher performance in a single chip, and they are more readily available, which is why companies experimenting with machine learning still prefer them to the emerging alternatives.

Vision processors may still be better suited for low-power embedded devices, where efficiency, peak power consumption and price matter most.

Ilan Yona, vice president and general manager of the Vision Business Unit at CEVA, commented, “As computer vision and deep learning technologies become mainstream, there is a need to bridge the gap between the multi-layered and powerful deep neural networks that are being generated by power-consuming GPU engines and the ability to deploy these in power- and performance-constrained embedded applications. Our new vision platform excels in this regard, providing developers with the most comprehensive set of technologies to rapidly address these embedded use-cases.”

Ceva’s Vision Platform

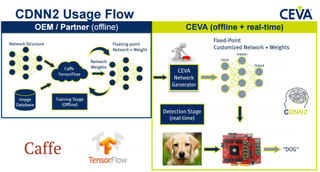

Alongside the Ceva-XM6 DSP, Ceva's vision platform includes function-specific hardware accelerators for convolutional neural networks and for image de-warping, which handles all types of image transformations. On the software side, the platform supports Ceva's recently unveiled CDNN2 neural network software framework, the Ceva-CV computer vision library, and the OpenCV, OpenCL, and OpenVX APIs. It also supports a set of widely used algorithms.

Ceva optimized the CDNN2 software framework for both the Ceva-XM6 and CDNN accelerator. It supports 16-bit fixed-point precision, thus ensuring less than 1% degradation when running a network trained in a 32-bit floating-point environment. According to Ceva, this is critical for companies that want to transition neural networks from R&D (likely run on GPUs) to more cost- and power-efficient solutions, such as its own vision processor.
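The less-than-1% figure is Ceva's claim about end-to-end network accuracy, which depends on the network, the quantization scheme, and the accumulator width. The sketch below only illustrates the basic step behind 16-bit fixed-point inference: converting FP32 weights to signed 16-bit codes with a per-tensor power-of-two scale and measuring the rounding error. The helper names, the sine-wave stand-in weights, and the per-tensor power-of-two scaling are all assumptions for illustration, not CDNN2's actual method.

```python
import math
from typing import List


def choose_frac_bits(values: List[float], total_bits: int = 16) -> int:
    """Largest number of fractional bits that keeps every value representable
    in a signed two's-complement integer of `total_bits` bits (assumed scheme)."""
    qmax = 2 ** (total_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    frac_bits = total_bits - 1
    while frac_bits > 0 and round(max_abs * 2 ** frac_bits) > qmax:
        frac_bits -= 1
    return frac_bits


def quantize(values: List[float], frac_bits: int, total_bits: int = 16) -> List[int]:
    """Round each value to the nearest fixed-point code, saturating to the signed range."""
    qmax = 2 ** (total_bits - 1) - 1
    qmin = -qmax - 1
    scale = 2 ** frac_bits
    return [min(qmax, max(qmin, round(v * scale))) for v in values]


def dequantize(codes: List[int], frac_bits: int) -> List[float]:
    """Map fixed-point codes back to real values."""
    return [c / 2 ** frac_bits for c in codes]


def relative_l2_error(ref: List[float], approx: List[float]) -> float:
    """||ref - approx|| / ||ref||, a simple per-tensor error measure."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(ref, approx)))
    den = math.sqrt(sum(a * a for a in ref))
    if den == 0:
        return 0.0 if num == 0 else math.inf
    return num / den


if __name__ == "__main__":
    # Hypothetical stand-in for one layer's trained FP32 weights.
    weights = [math.sin(i) for i in range(256)]
    fb = choose_frac_bits(weights)
    w_q = dequantize(quantize(weights, fb), fb)
    print("fractional bits:", fb)
    print("relative L2 error:", relative_l2_error(weights, w_q))
```

Per-weight rounding error here is bounded by half a quantization step, so a tensor-level error well under 1% is easy to reach; whether a whole network's accuracy stays within 1% also depends on activations, accumulation, and how errors compound across layers.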

The Ceva-XM6 and its vision platform components will be available to lead customers in the fourth quarter of this year, and general licensing will come in the first quarter of 2017.

-

ZolaIII: As you stated many times in the article:

"However, at least for now, GPUs are still the leading mainstream products that can fulfill the taxing performance requirements for fast neural network training. GPUs may not be the most efficient, but they tend to offer much higher performance in a single chip, and they are more readily available, which is why companies playing with machine learning still prefer them to the emerging alternatives".

I have to inform you that there are DSP cluster designs that can scale much bigger (multi-cluster designs), for instance Tensilica's top offering; I don't know about CEVA's.

To keep it fair, let's assume they used the old TX1 development kit for the comparison, and that it could only run in 32-bit (single precision). So let's cut the comparison results in half: that still gives 2x the performance at 12.5x lower power consumption. This is still fascinating, and it leads to the conclusion that the DSP is also much cheaper to manufacture, as there is no magic wand for bringing power consumption down 12.5x. More significantly, DSPs are available as licensable IP, while most GPUs remain proprietary, non-licensable IP. I see the current Tensilica offering as the better choice. I am certain it can scale up to four full blocks (clusters), maybe even more; it is faster than the Ceva offering and also has 8-bit support, which practically puts it on par with the biggest GPU chips available today (performance-wise), while it would probably cost eight times less to make and could be commercially available to whoever needs it at up to 40x lower price (as Nvidia and Intel tend to charge that much for their "special purpose accelerators"). When you take all this into consideration, you can see how DSPs are really winning the race by large margins.

bit_user: I don't see anything here that's so different from a GPU. I think they simply tuned the engines and on-chip memories for the data access patterns common in neural networks.

Therefore, I'm predicting future generations of mobile GPUs from Nvidia, AMD, and ARM will narrow or eliminate the performance & power efficiency gaps vs. dedicated deep learning processors.

"It supports 16-bit fixed-point precision, thus ensuring less than 1% degradation when running a network trained in a 32-bit floating-point environment."

Also, I'm curious how they arrived at this figure. Does it really apply to layers of all types, and to networks of all depths and architectures?
