Part 1: DirectX 11 Gaming And Multi-Core CPU Scaling

We test five theoretical Intel CPUs in 10 different DirectX 11-based games to determine what impact core count has on performance.

The Witcher 3 & Conclusion

The Witcher 3

CD Projekt RED’s REDengine 3 is optimized for multi-core CPUs, and the developers maintain that it isn’t limited to just three or four threads. Sure enough, the jump from two cores to four takes us from the bottom of our Witcher 3 average FPS chart to the top.

Indeed, the game’s minimum requirement is a Core i5-2500K, so it’s not surprising to see a dual-core configuration suffer. The fact that the Core i7-6700K lands in first place suggests that The Witcher 3 benefits more from Intel’s Skylake architecture than from Broadwell-E’s extra cores, even at the same 3.9 GHz.

It’s unfortunately impossible to derive any meaning from the other four charts because the dual-core system locks up for several seconds at a time (and in a repeatable way), which skews their scale. Those plunges in the frame rate over time graph are very real; gameplay simply drops to zero FPS in each instance.

At 2560x1440, the dual-core config’s most glaring issues subside…but that doesn’t mean it’s out of the woods. Big frame time spikes reflect the oversubscribed processor’s attempts to keep up with The Witcher 3’s threaded engine, negatively affecting smoothness.
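Average frame rate can hide exactly the kind of spikes described above. Here is a minimal sketch, assuming a synthetic frame-time trace (not our captured data) and a nearest-rank 99th-percentile frame time as the smoothness metric. That is a common choice, but not necessarily what our charts use.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

def frame_stats(frame_times_ms):
    """Return (average FPS, 99th-percentile frame time, worst frame time)."""
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    return avg_fps, percentile(frame_times_ms, 99), max(frame_times_ms)

# Synthetic traces of 200 frames each, both totaling 3.2 seconds
steady = [16.0] * 200
spiky = [14.0] * 196 + [114.0] * 4  # mostly faster frames, plus four big spikes

print(frame_stats(steady))  # (62.5, 16.0, 16.0)
print(frame_stats(spiky))   # (62.5, 114.0, 114.0)
```

Both traces average 62.5 FPS, yet the spiky one contains four 114 ms frames. A player feels those as hitches even though the average says nothing is wrong.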

The other four configurations settle in around the same performance level. Their average and minimum frame rates are similar (though the quad-core -6700K actually leads by a bit), and from what we can see in the frame time graph, there’s quite a bit of overlap.

QHD may have forgiven our Core i3 with HT disabled, but UHD does not. Although 3840x2160 presents an even more GPU-bound scenario, two cores buckle under the test sequence. Multiple ~5-second pauses bring the average frame rate down and impose a minimum of 0 FPS.
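To see why a few multi-second pauses both drag down the average and force a 0 FPS minimum, consider the minimal sketch below. It assumes minimum FPS is taken from frames counted in one-second windows (one common approach; we are not claiming it matches our tools exactly), and it uses a synthetic trace rather than measured data.

```python
import math

def per_second_fps(frame_times_ms):
    """Count frames completed in each one-second window of the run."""
    total_ms = sum(frame_times_ms)
    windows = [0] * math.ceil(total_ms / 1000.0)
    t = 0.0
    for ft in frame_times_ms:
        t += ft  # timestamp at which this frame finishes
        # A frame finishing exactly at the end of the run counts toward the last window
        idx = min(int(t // 1000), len(windows) - 1)
        windows[idx] += 1
    return windows

def summarize(frame_times_ms):
    """Return (average FPS over the whole run, minimum per-second FPS)."""
    avg_fps = len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)
    return avg_fps, min(per_second_fps(frame_times_ms))

# Synthetic 50 FPS trace, then the same frames with one 3-second stall inserted
smooth = [20.0] * 250
stalled = [20.0] * 150 + [3000.0] + [20.0] * 100

print(summarize(smooth))   # (50.0, 49)
print(summarize(stalled))  # (31.375, 0)
```

With the same 250 normal frames, a single 3-second hitch cuts the average from 50 to about 31 FPS and leaves whole windows with zero completed frames.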

For the most part, anything with four or more cores fares comparably using The Witcher 3’s most taxing detail settings at 4K.


Conclusion

There’s a ton of data spread across the preceding pages, but it distills down to a handful of sweeping conclusions.

First, it’s increasingly clear that dual-threaded CPUs are no longer the way to go. Yes, our experiment exaggerates their limitations with an ultra-high-end graphics card. However, a great many developers specify quad-core processors in their minimum requirements. The Xbox One and PlayStation 4 both expose eight threads, and cross-platform games utilize engines written to exploit the parallelism afforded by those consoles.

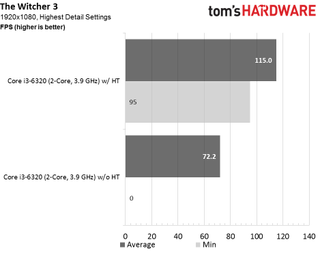

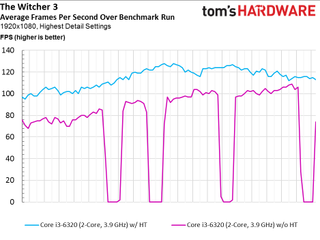

So start your shopping list with at least a Core i3 or quad-threaded AMD CPU. Simply adding Hyper-Threading to Intel’s architecture makes a huge difference. The following charts illustrate what happens in The Witcher 3 when the feature is enabled.

Remember those huge pauses that plagued the i3? They’re ironed out when The Witcher 3 has four threads to work with. Beyond that, performance across the benchmark run jumps dramatically. Average frame rate increases almost 60 percent thanks to those two logical cores. Ashes, CARS, Grand Theft Auto, Hitman, Metro, Tomb Raider, and The Witcher 3 all support our quad-threaded recommendation.
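The "almost 60 percent" figure is a simple relative change in average frame rate. As a quick sketch (the frame rates below are hypothetical placeholders, not our measured results), here is that arithmetic, plus the equivalent change in average frame time, the metric our frame time charts plot:

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0

def frame_time_ms(fps):
    """Average time spent per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

# Hypothetical averages for illustration only (not measured data)
fps_ht_off = 50.0
fps_ht_on = 79.0

uplift = percent_change(fps_ht_off, fps_ht_on)
ft_change = percent_change(frame_time_ms(fps_ht_off), frame_time_ms(fps_ht_on))

print(f"Average FPS: {uplift:+.1f}%")            # Average FPS: +58.0%
print(f"Average frame time: {ft_change:+.1f}%")  # Average frame time: -36.7%
```

Because frame time is the reciprocal of frame rate, a given percentage gain in FPS always shows up as a smaller percentage drop in frame time.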

Of course, the largest difference between processors is seen at 1920x1080 with an overkill GPU. As you turn the dial to 2560x1440 and 3840x2160, the GeForce GTX 1080 is pushed harder, resulting in average frame rates that look more similar from one CPU to another. Despite the shifting workload, though, drilling down into frame times reveals that our dual-threaded Core i3 still cannot keep up. In games like Grand Theft Auto, Rise of the Tomb Raider, and The Witcher 3, massive frame time spikes translate to jarring interruptions. Ashes of the Singularity, Project CARS, Metro, and Hitman are all just less smooth in general on the Core i3. We’re perhaps most surprised that loading down Nvidia’s GP104 doesn’t let two Skylake cores off the hook.

All of these games are DirectX 11-based, and that’s not ideal for studying CPU scaling at a given clock rate, since DirectX 11 largely funnels commands to the GPU through a single serialized stream. Right out of the gate, the older API limits potential performance. DirectX 12 is supposed to improve threaded scaling and utilization through command lists that multiple threads can record in parallel. But that also depends on the game developer’s ability to manage available resources. And so far, we’ve seen very few DirectX 12-based titles deliver the API’s purported benefits.
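One way to reason about why a serialized submission path caps core scaling is Amdahl's law, a standard textbook model rather than something we measured. In the sketch below, the parallel fractions are hypothetical stand-ins: a lower one for a mostly serialized DirectX 11-style frame and a higher one for a well-threaded DirectX 12-style frame.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup over one core when only parallel_fraction of the work scales."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)

# Hypothetical parallel fractions, chosen for illustration only
scenarios = {"mostly serialized": 0.5, "well threaded": 0.9}

for label, p in scenarios.items():
    speedups = [f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 4, 6, 8, 10)]
    print(f"{label:>17}: " + ", ".join(speedups))
```

With half the work stuck on one thread, going from four cores to ten buys only about 14 percent (1.60x to 1.82x) in this model. Real games add GPU limits, memory behavior, and clock-speed effects that the formula ignores.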

Stay tuned for part two of this series, where we roll in data from the latest DirectX 12 games to compare how the same CPUs fare across three popular resolutions.


ledhead11 Awesome article! Looking forward to the rest.

Any chance you can do a run-through with GTX 1080 SLI or even Titan SLI? There was another article recently on Titan SLI that mentioned a 100% CPU bottleneck on the 6700K with 50% load on the Titans at 4K/60Hz.

Wouldn't it have been a more representative benchmark if you just used the same CPU and limited how many cores the games can use?

Traciatim Looks like even years later the prevailing wisdom of "Buy an overclockable i5 with the best video card you can afford" still holds true for pretty much any gaming scenario. I wonder how long it will be until that changes.

nopking Your GTA V is currently listing at $3,982.00, which is slightly more than I paid for it when it first came out (about 66x).

TechyInAZ (replying to Traciatim):

Once DX12 goes mainstream, we'll probably see a balance of "OCed Core i5 with most expensive GPU" for FPS shooters. But for the more CPU-demanding games, it will probably be "Core i7 with most expensive GPU you can afford" (or Zen CPU).

avatar_raq Great article, Chris. Looking forward to part 2, and I second ledhead11's wish to see a part 3 and 4 examining SLI configurations.

problematiq I would like to see an article comparing 1st-, 2nd-, and 3rd-gen Core i-series CPUs to the current generation on the question of "Should you upgrade?" Still cruising on my 3770K, though.

Brian_R170 Isn't it possible to use the i7-6950X for all of the 2-, 4-, 6-, 8-, and 10-core tests by just disabling cores in the OS? That eliminates the other differences between the various CPUs and shows only the benefit of more cores.

TechyInAZ (replying to Brian_R170):

Possibly. But it would be a bit unrealistic because of all the extra cache the CPU would have on hand. No quad core has the amount of L2 and L3 cache that the 6950X has.

filippi I would like to see both i3 w/ HT off and i3 w/ HT on. That article would be the perfect spot to show that.