
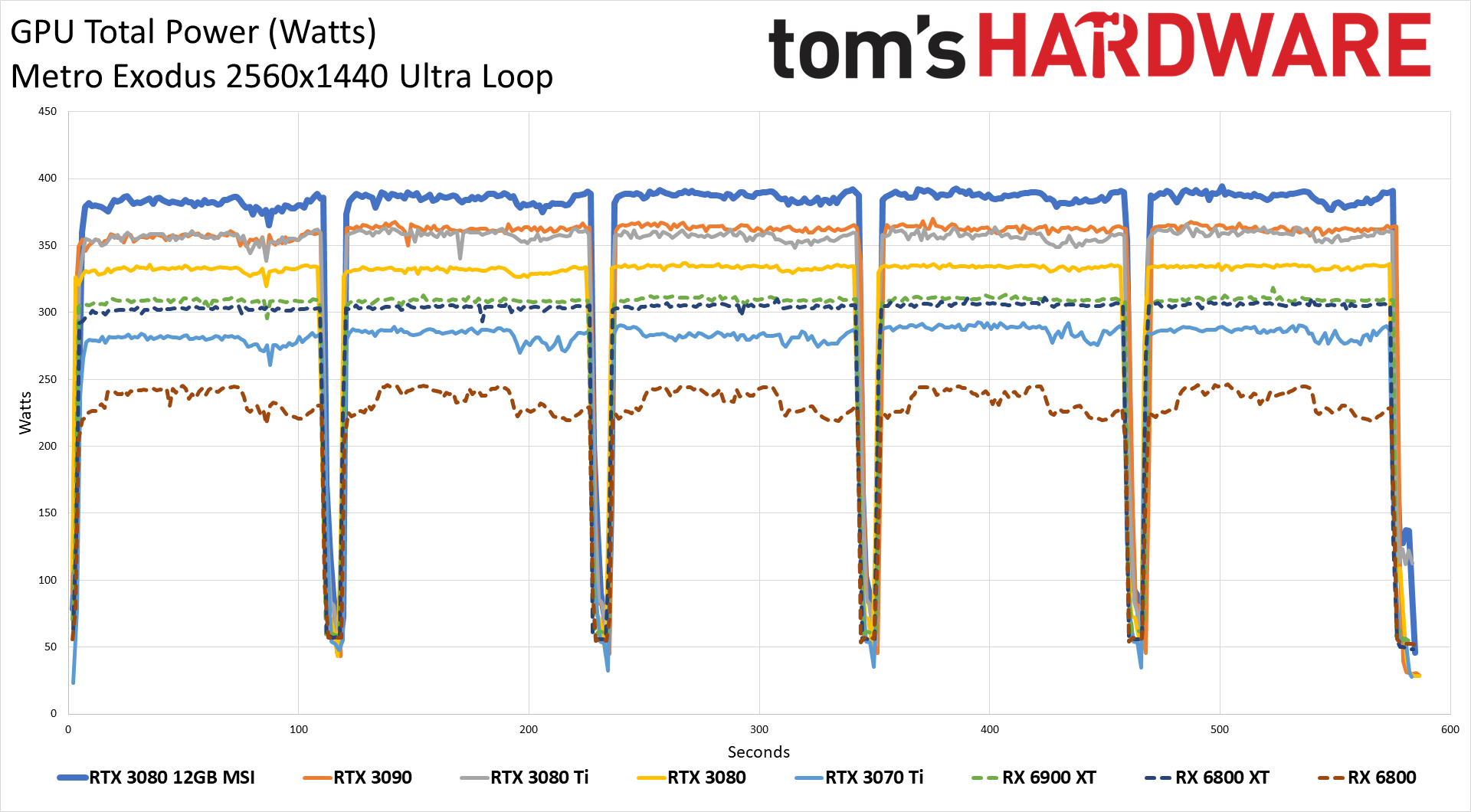

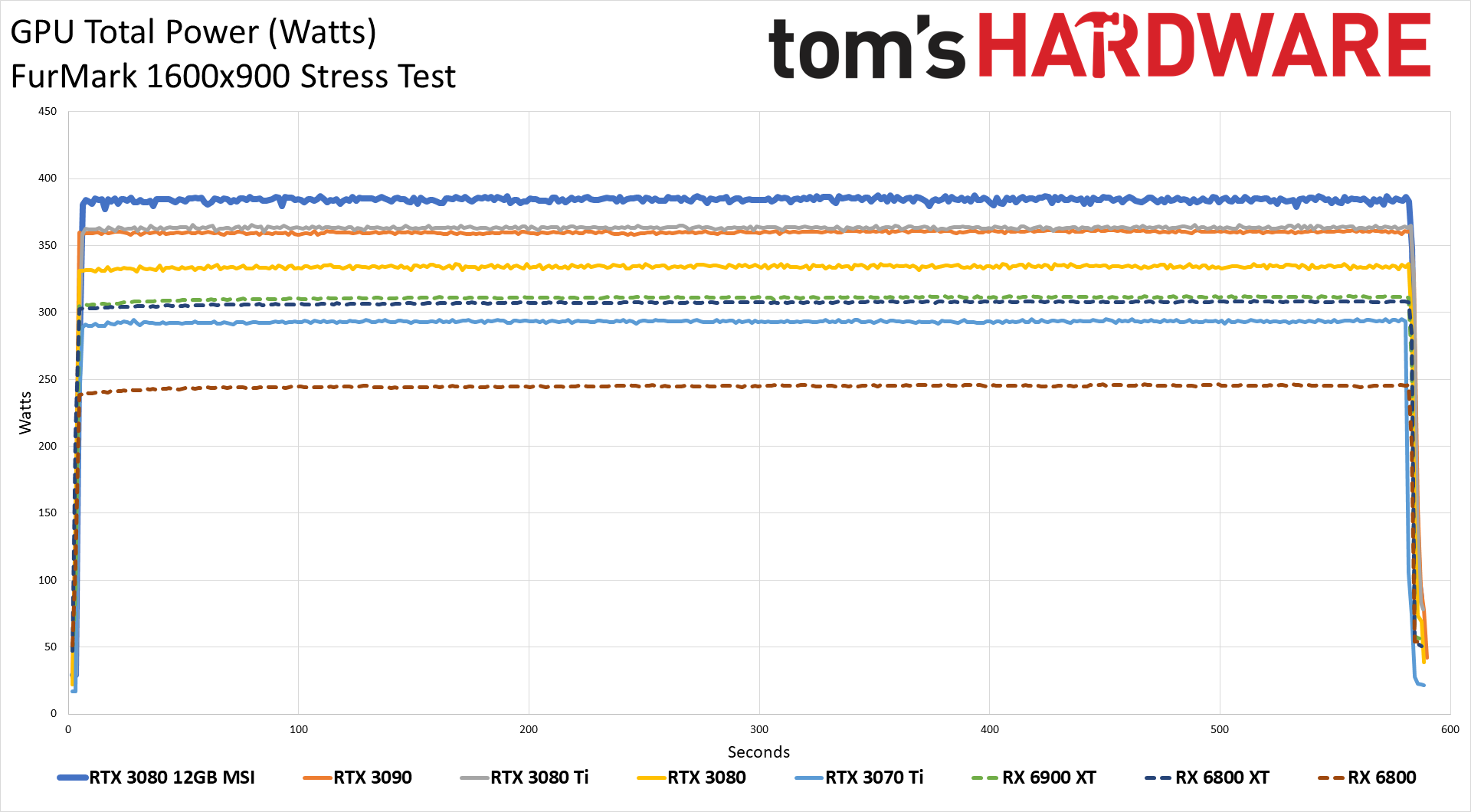

Overall, performance from the MSI RTX 3080 12GB was basically equal to the reference RTX 3080 Ti Founders Edition, but MSI lists a higher power rating of 400W and includes three 8-pin power connectors. Our Powenetics testing measures in-line GPU power consumption, so we can see just how much juice the card requires, and we'll also look at other aspects of the card. We collect data while running Metro Exodus at 1440p ultra and the FurMark stress test at 1600x900. To keep results consistent, our test PC remains the same Core i9-9900K system we've used previously.

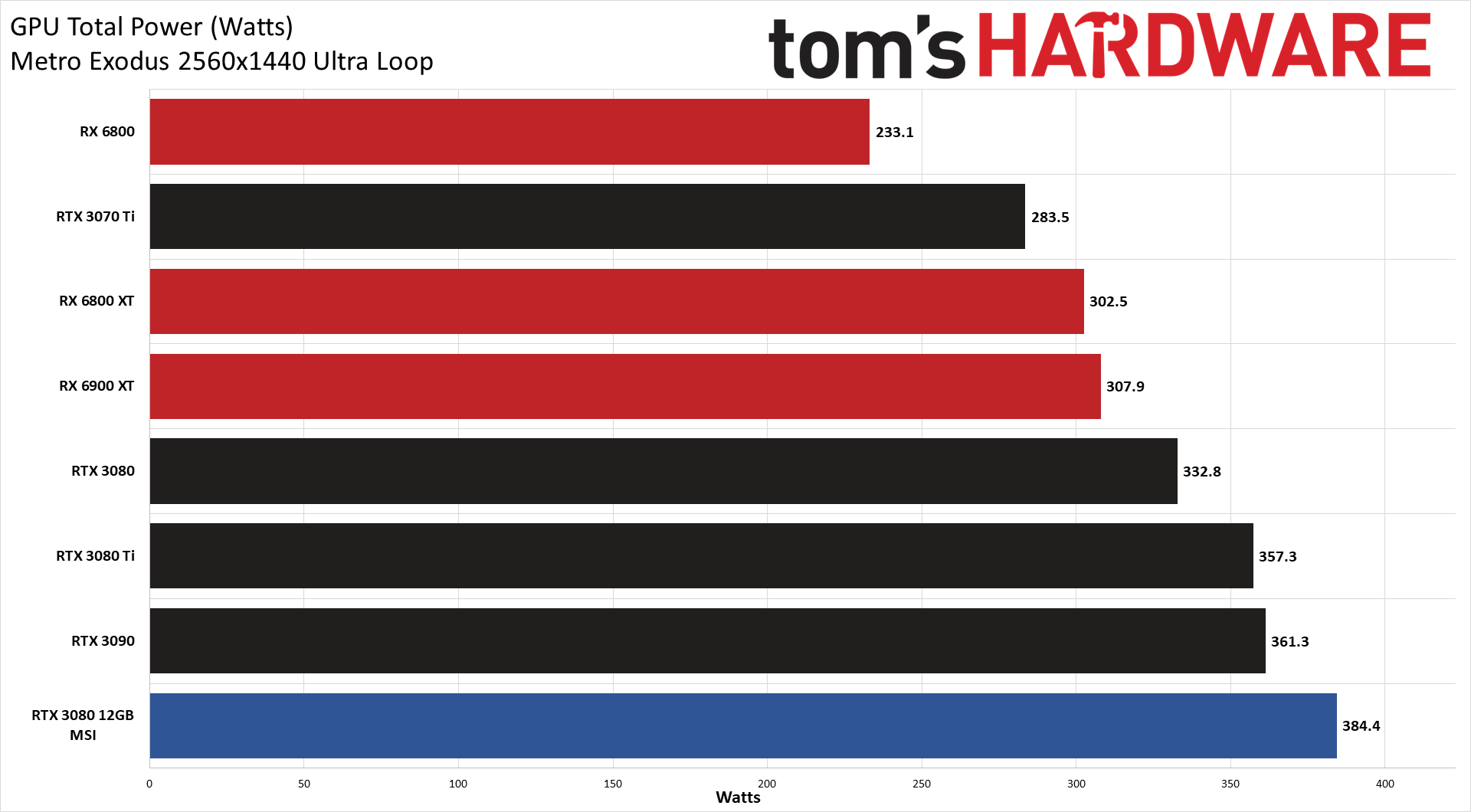

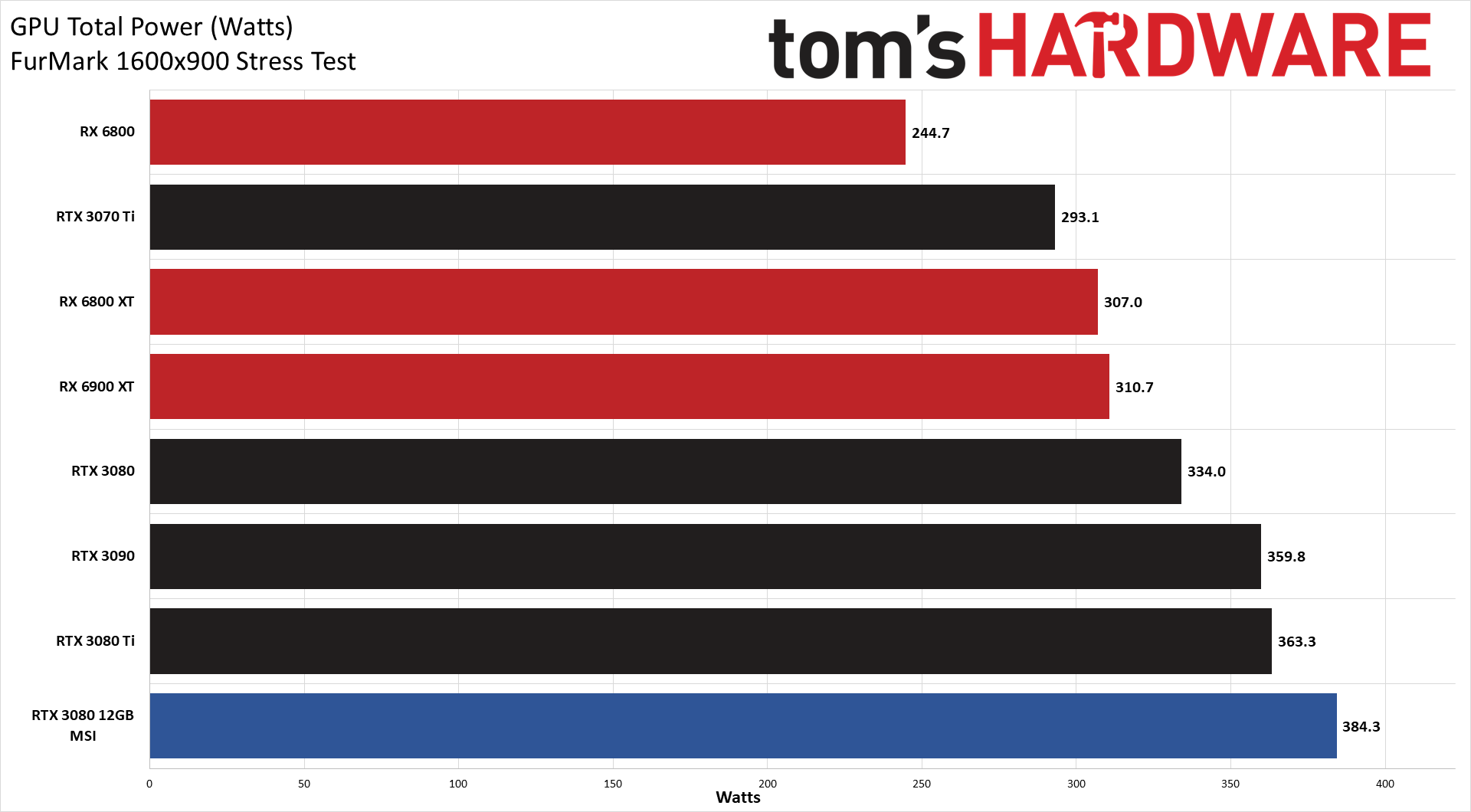

It's not the most power-hungry graphics card we've ever tested, but the MSI 3080 12GB Suprim X can draw quite a bit of power. It averaged 384W in both FurMark and Metro Exodus, about 25W more than the 3080 Ti and 3090 Founders Edition cards, and over 50W more than the 3080 10GB. Let's look at the other aspects of the card to see where the extra power goes.
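The three 8-pin connectors make more sense when you compare the card's draw against the rated delivery limits. This is a back-of-the-envelope check, not part of our test methodology: it assumes the standard PCIe ratings of 150W per 8-pin connector and 75W from the x16 slot, and the helper names are hypothetical.

```python
# Back-of-the-envelope power budget for the MSI RTX 3080 12GB Suprim X.
# Assumption: standard PCIe ratings of 150 W per 8-pin connector
# and 75 W drawn through the x16 slot.
EIGHT_PIN_W = 150
SLOT_W = 75


def board_power_budget(num_8pin: int) -> int:
    """Spec-compliant maximum delivery for a card with num_8pin connectors."""
    return num_8pin * EIGHT_PIN_W + SLOT_W


def utilization(measured_w: float, limit_w: float) -> float:
    """Fraction of a given limit used by the measured average draw."""
    return measured_w / limit_w


measured = 384  # average draw in both Metro Exodus and FurMark
rated = 400     # MSI's listed power consumption

budget = board_power_budget(3)  # three 8-pin connectors, as on the MSI card
print(f"Three 8-pin budget: {budget} W")
print(f"Share of connector budget: {utilization(measured, budget):.0%}")
print(f"Share of MSI's 400 W rating: {utilization(measured, rated):.0%}")

# A two-connector design would fall short of the 400 W rating.
print(f"Two 8-pin budget: {board_power_budget(2)} W")
```

Under those assumptions, a two-connector design would top out at 375W, below MSI's 400W rating, while the 384W we measured uses about 73% of the 525W that three connectors plus the slot can supply.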

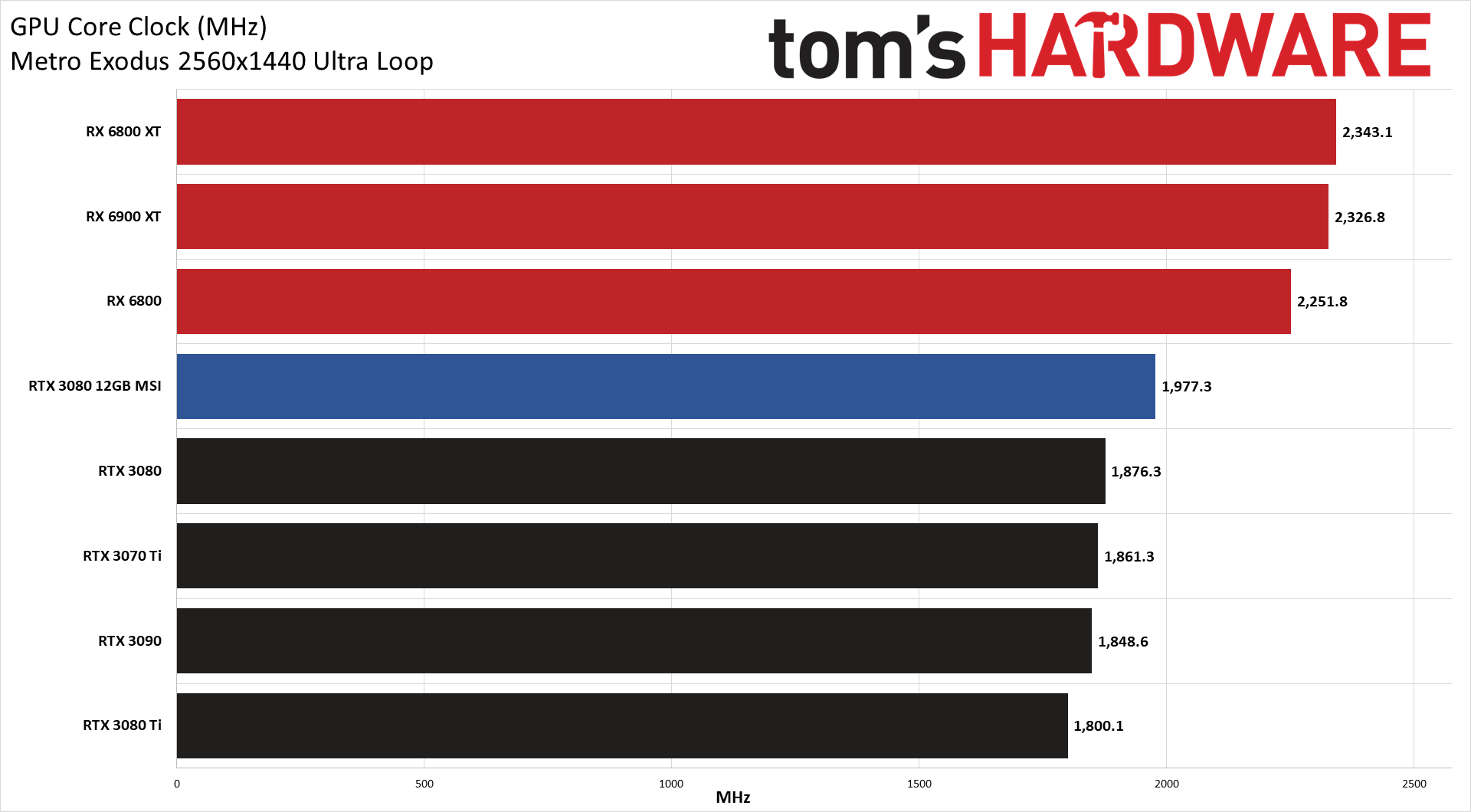

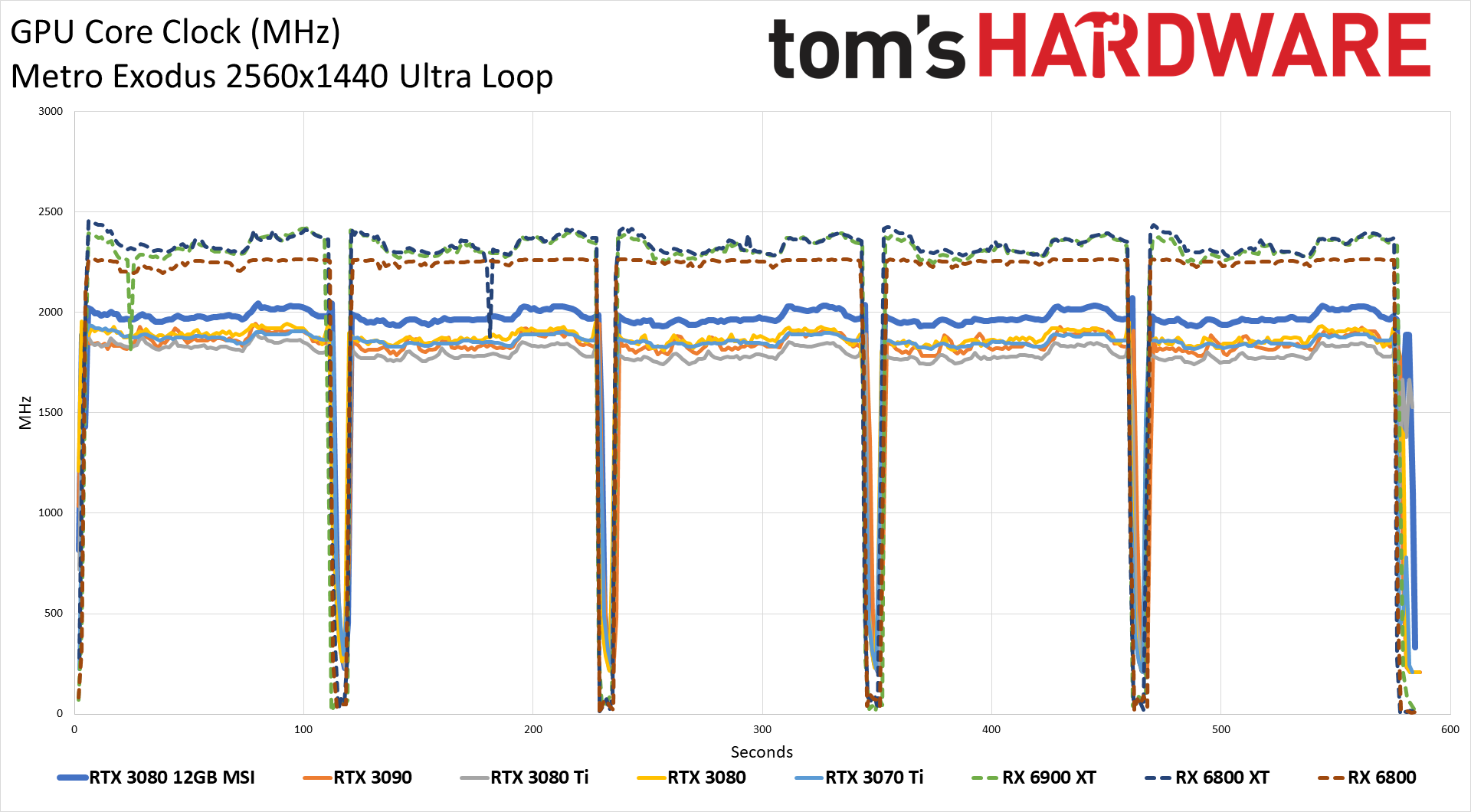

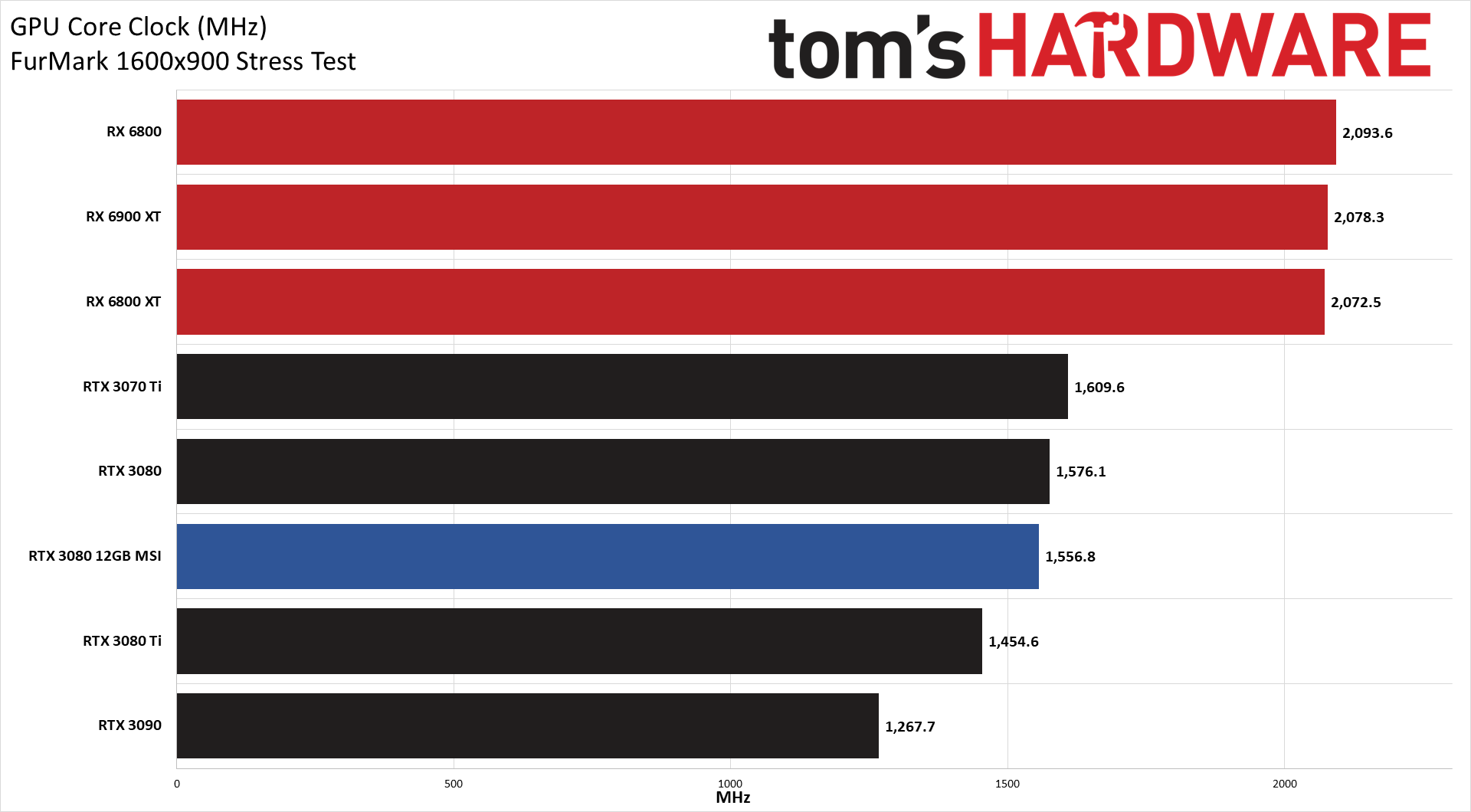

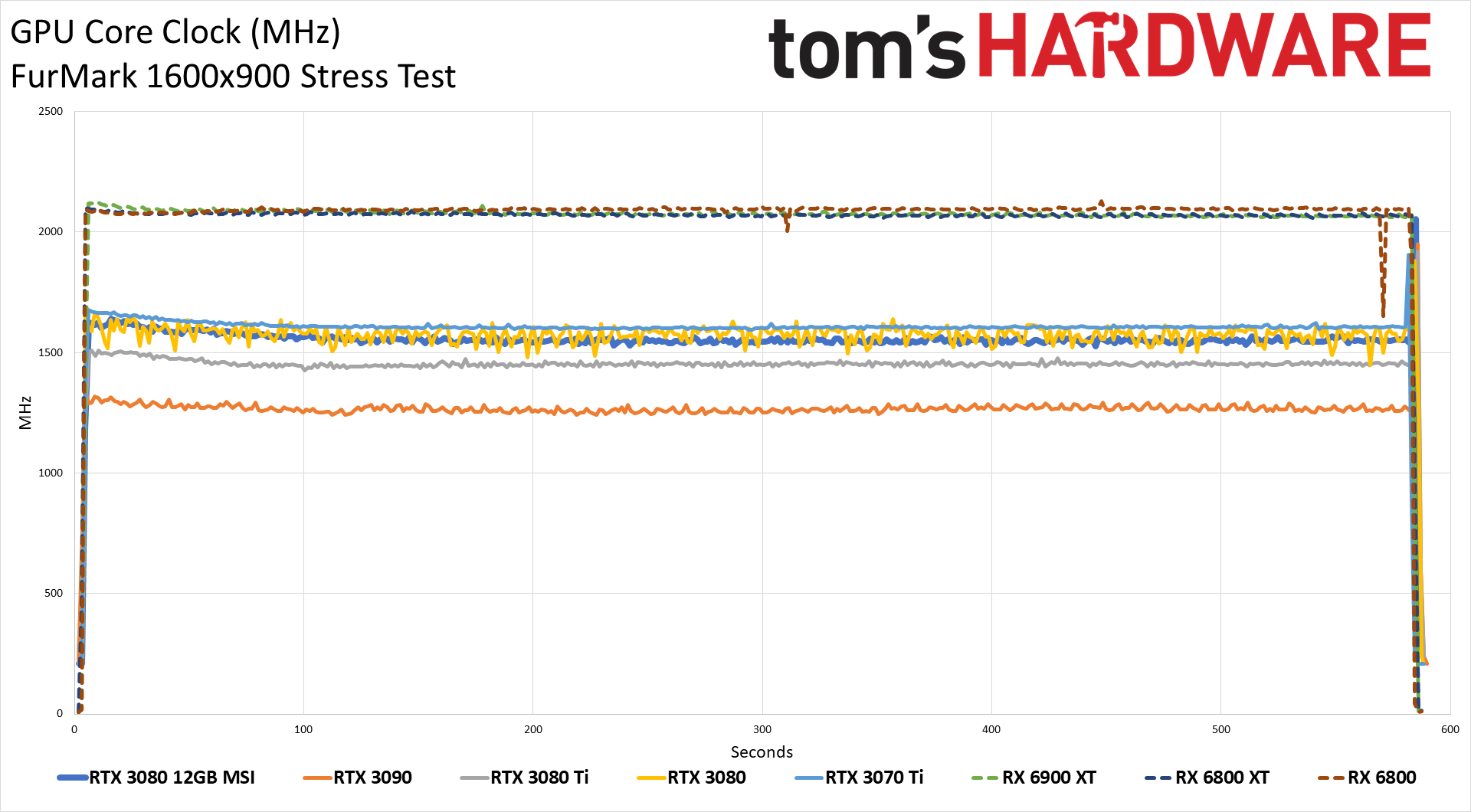

Running more GPU cores at higher clock speeds is a great way to consume power, which is why the 3090 and 3080 Ti both have lower average clocks than the other Nvidia GPUs. The MSI card, meanwhile, goes the other direction: it averaged about 100MHz higher clocks than the reference 3080 10GB in Metro and nearly hit 2GHz. Clock speeds in FurMark were much lower, sitting at around 1.55GHz, just under the 3080 FE.

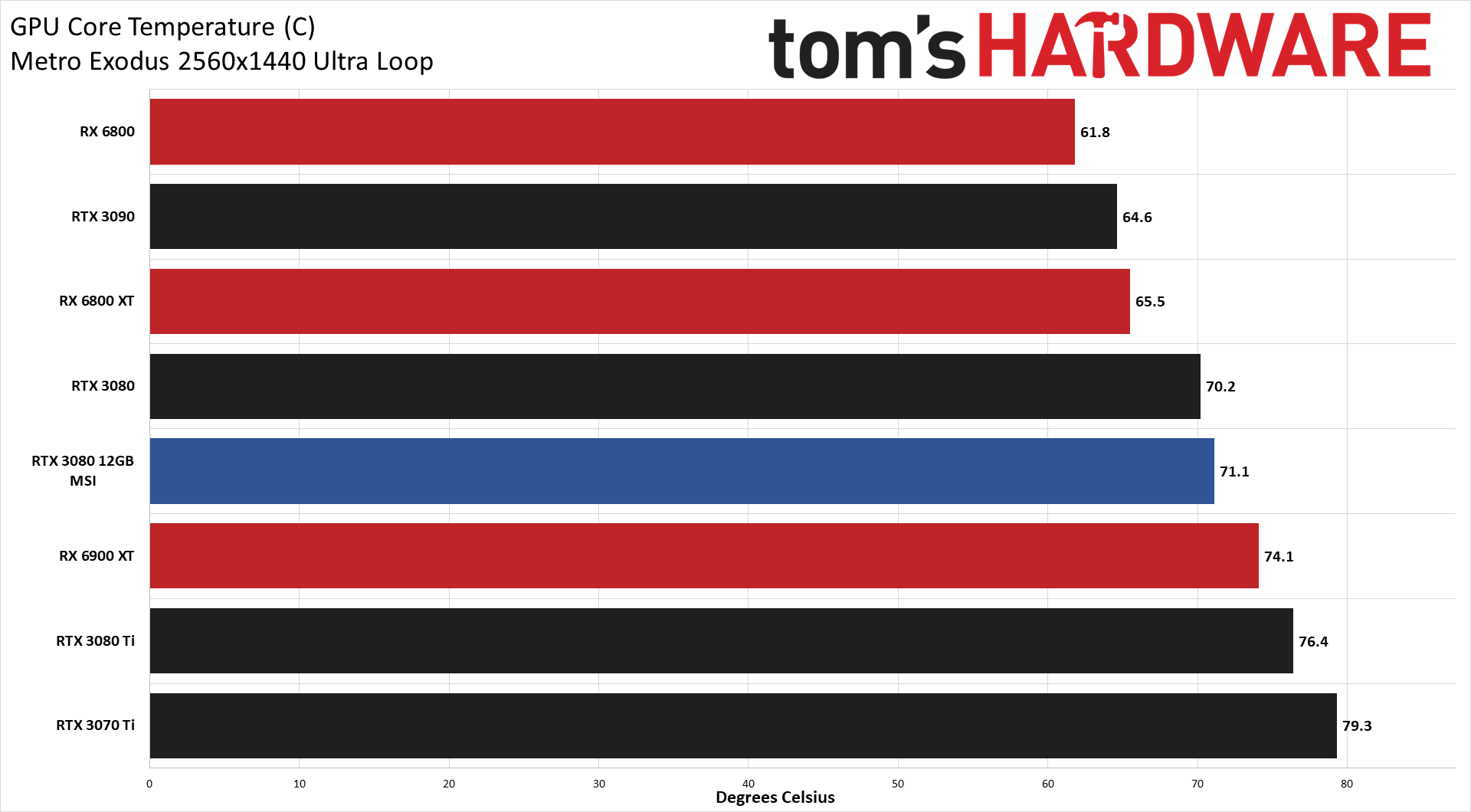

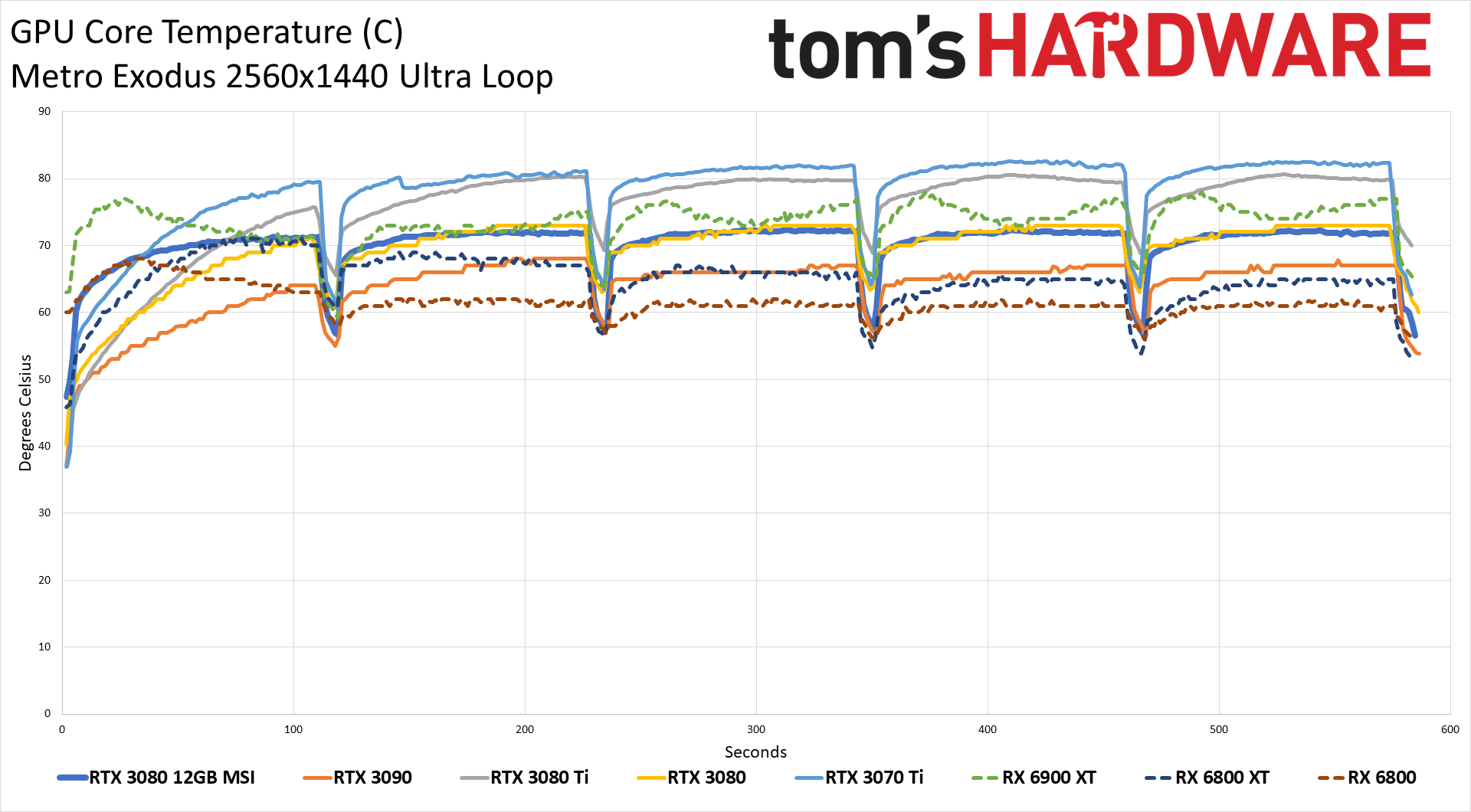

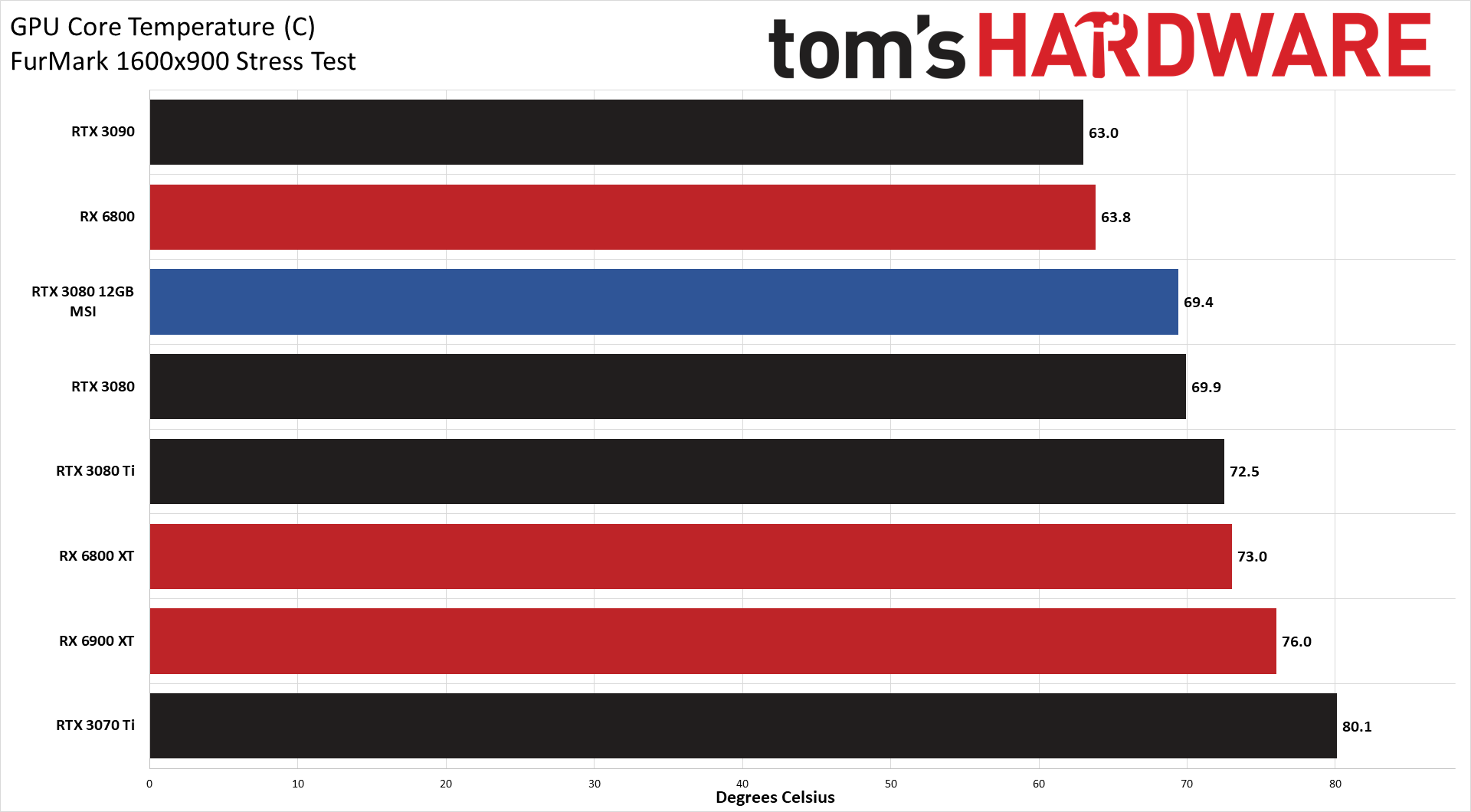

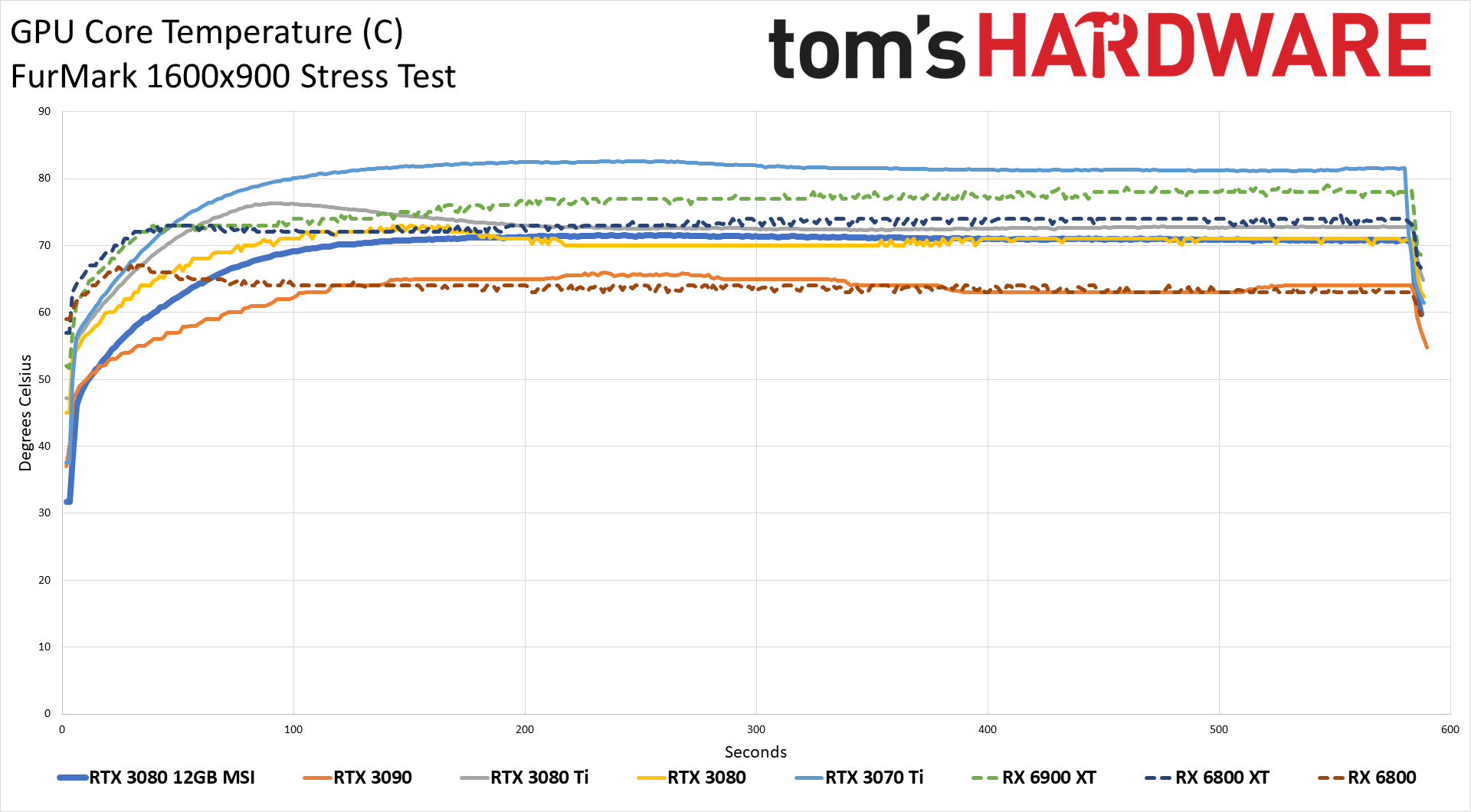

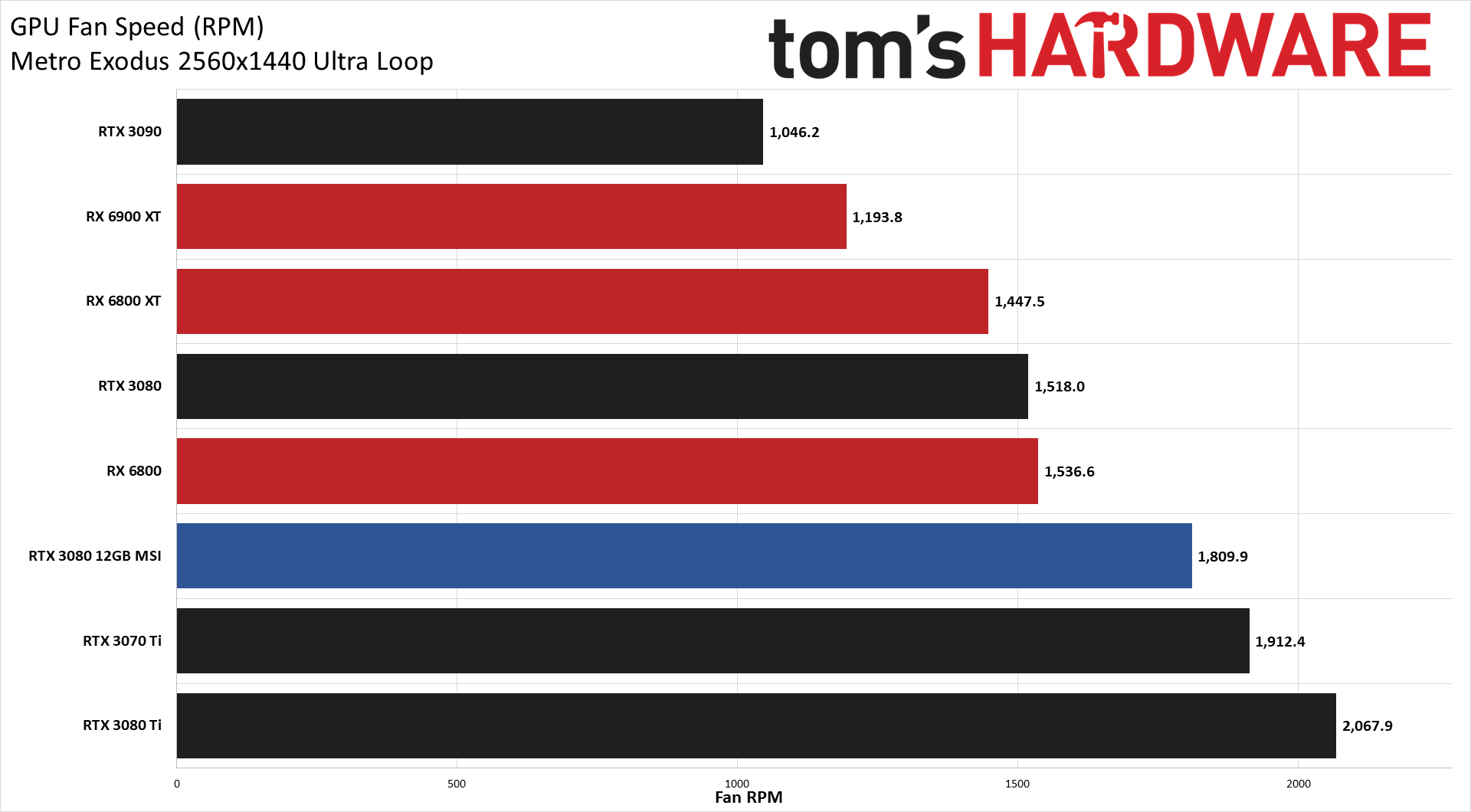

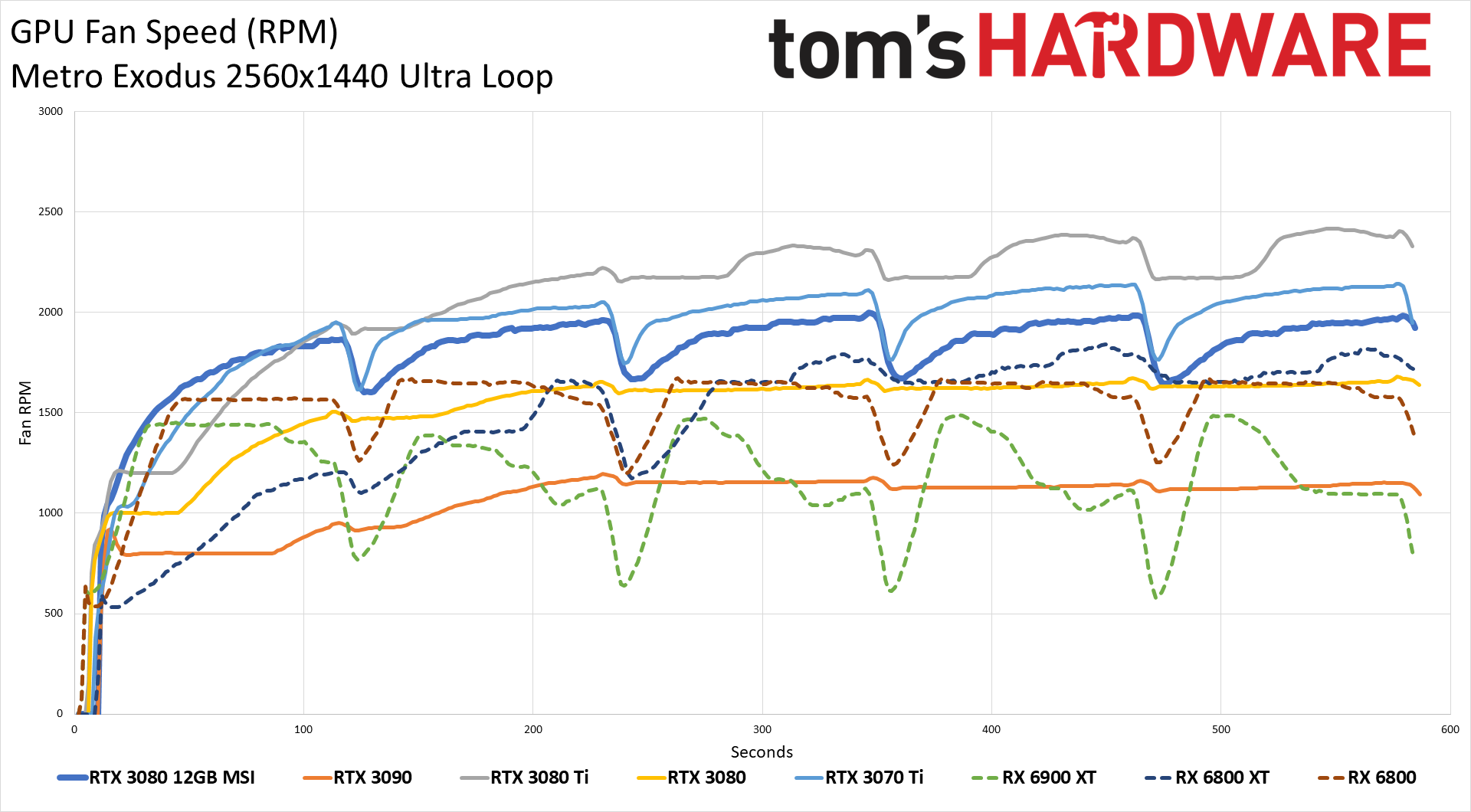

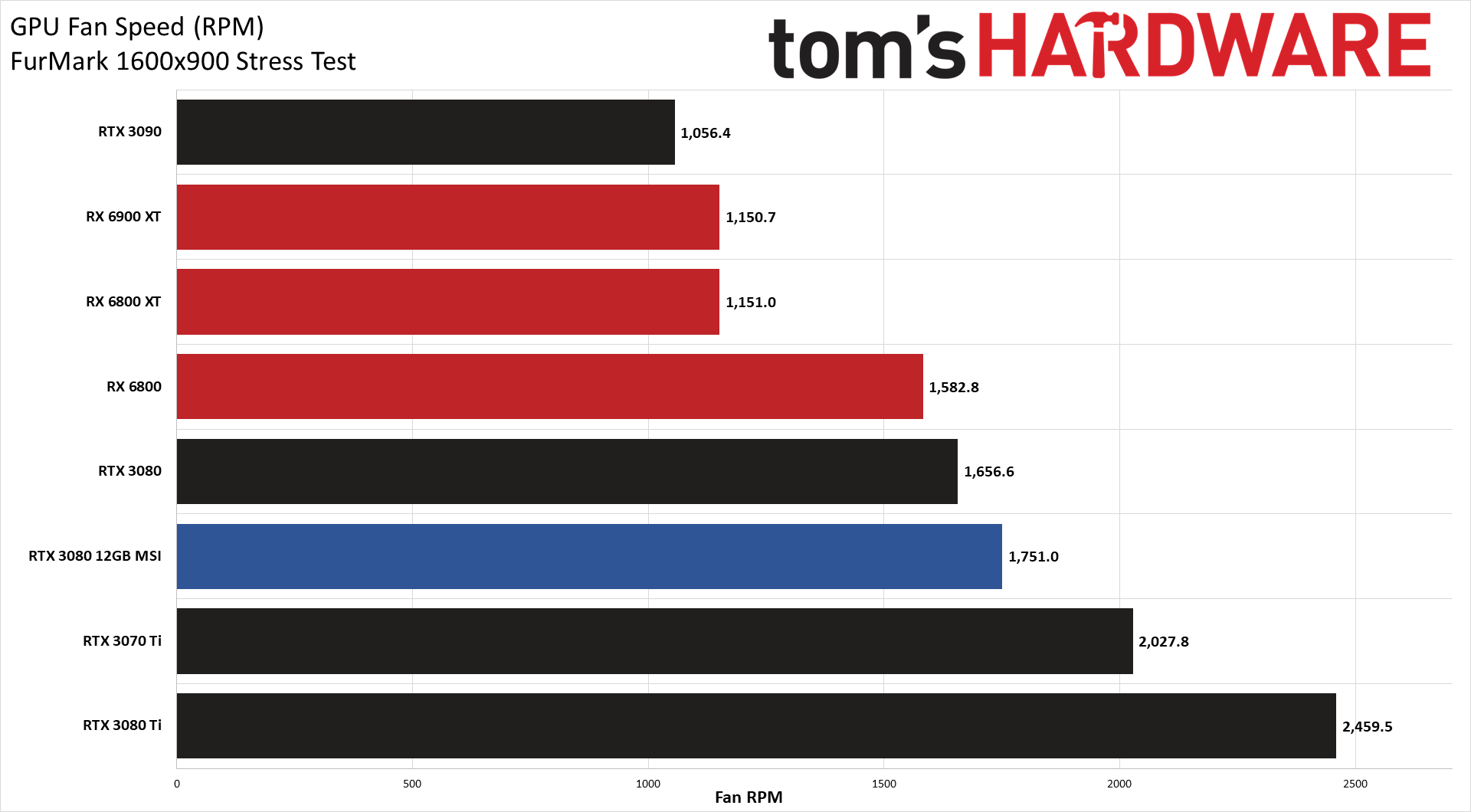

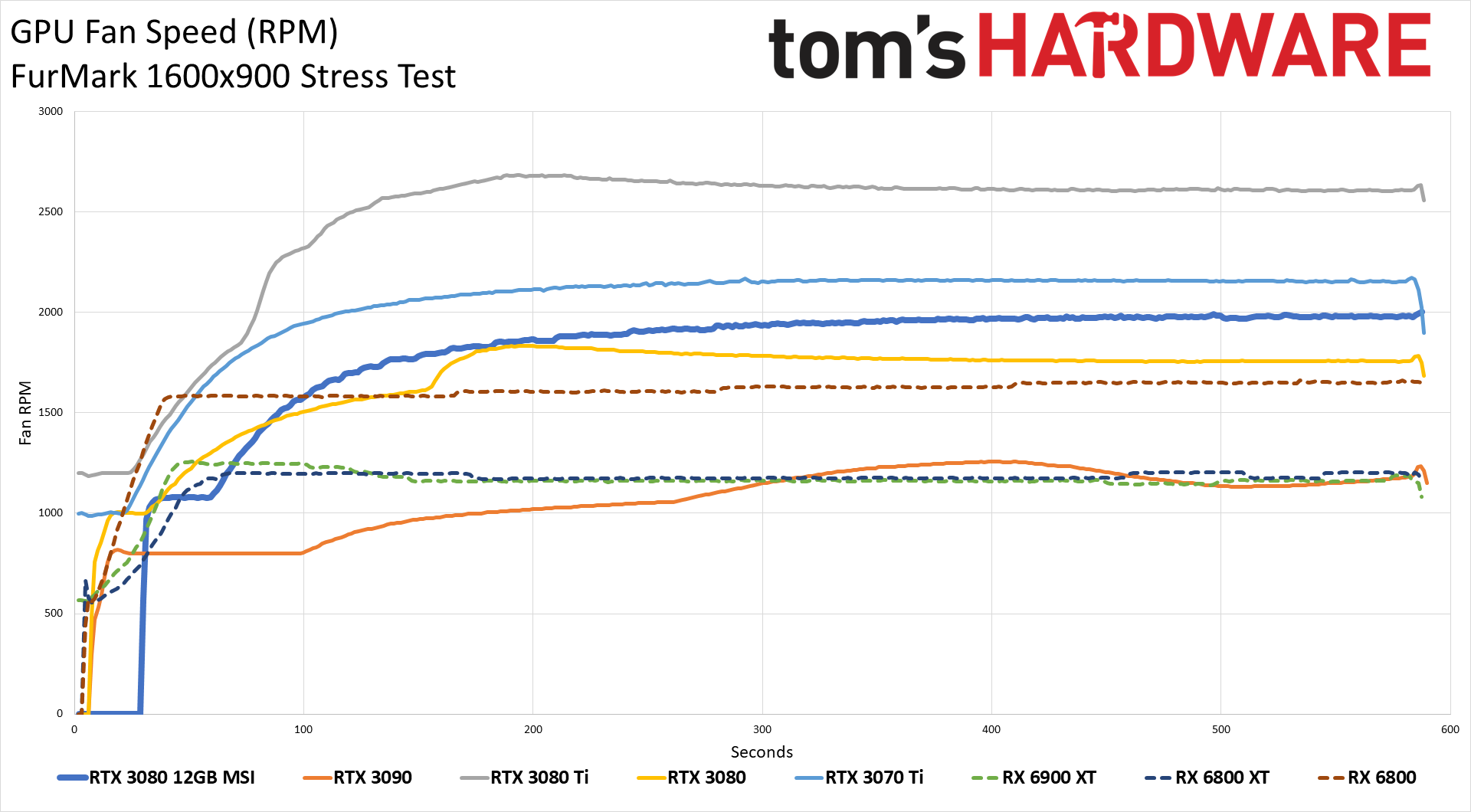

Despite the high power use and high clocks, the MSI card still keeps thermals and fan speeds at reasonable levels. FurMark averaged 69C, largely due to the throttled GPU clocks, while Metro hit 71C. That was just a hair above the RTX 3080, but much lower than the RTX 3080 Ti's 76C while gaming. Fan speeds were also lower than the 3080 Ti FE, which was expected as that card tended to get quite loud.

We measured noise levels at 10cm using an SPL (sound pressure level) meter aimed right at the GPU fans, to minimize the impact of other fans like those on the CPU cooler. The noise floor of our test environment and equipment measures 33 dB(A). The MSI RTX 3080 12GB Suprim X isn't a silent card, but noise levels maxed out at 48.8 dB(A), which isn't bad considering its performance and power use. That corresponded to a 60% fan speed; we also tested with a static 75% fan speed, which bumped the card up to 54.6 dB(A).
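Because decibels are logarithmic, the 5.8 dB jump from the default fan curve to a fixed 75% is larger than it looks. Here's a minimal sketch of the standard dB arithmetic applied to our readings; the function names are hypothetical, and it assumes the card and the background combine as simple incoherent power sums.

```python
import math


def db_difference_to_intensity_ratio(delta_db: float) -> float:
    """Sound intensity (power) ratio for a level difference in dB."""
    return 10 ** (delta_db / 10)


def db_difference_to_pressure_ratio(delta_db: float) -> float:
    """Sound pressure ratio for a level difference in dB."""
    return 10 ** (delta_db / 20)


def subtract_noise_floor(total_db: float, floor_db: float) -> float:
    """Estimate the source's own level with the background removed.

    Levels add as powers, not as decibels, so convert both readings to
    linear units, subtract, and convert back to dB.
    """
    linear = 10 ** (total_db / 10) - 10 ** (floor_db / 10)
    if linear <= 0:
        raise ValueError("measured level must exceed the noise floor")
    return 10 * math.log10(linear)


auto_db = 48.8   # default fan curve (about 60% fan speed)
fixed_db = 54.6  # static 75% fan speed
floor_db = 33.0  # noise floor of the test environment

delta = fixed_db - auto_db
print(f"75% vs. default curve: +{delta:.1f} dB")
print(f"Intensity ratio: {db_difference_to_intensity_ratio(delta):.2f}x")
print(f"Pressure ratio: {db_difference_to_pressure_ratio(delta):.2f}x")
print(f"Card alone (default curve): {subtract_noise_floor(auto_db, floor_db):.1f} dB(A)")
```

With these numbers, the 75% setting works out to roughly 3.8 times the sound intensity of the default curve (about 1.95 times the sound pressure), while the 33 dB(A) noise floor barely matters: the card alone still comes out at about 48.7 dB(A).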


Current page: MSI GeForce RTX 3080 12GB Power, Temps, Noise, Etc.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


King_V: "You know, we have a lot of GA102 chips where all 12 memory controllers are perfectly fine but where we can't hit the necessary 80 SMs for the 3080 Ti. Rather than selling these as an RTX 3080 10GB, what if we just make a 3080 12GB? Then we don't have to directly deal with the supposed $699 MSRP."

That may not be exactly what happened, but it certainly feels that way. MSI has produced a graphics card that effectively ties the RTX 3080 Ti in performance, even though it has 10 fewer SMs and needs more power to get there.

I guess that's one way to hit the price point and raw performance metrics.

10tacle: THANK YOU Jarred for including FS2020 in this hardware review! That's pretty much all I use my rig for as a hardcore flight simmer. I find the gap increase at 4K with the 3080 Ti (my GPU) over this 10GB 3080 interesting. That sim is a strange hardware-demand duck, and I'm still trying to figure out fine-tuning details some 6 months on since my build last August, after winning a Newegg Shuffle to get the GPU (I paid $1399.99 for the EVGA FTW3 edition).

The interesting thing is that it's the only game (sim, to me) that shows a leg-stretch gap between the lower-tiered/VRAM'd cards, including this 10GB 3080, at 4K. My guess is that's where the Ti's wider memory bus bandwidth, CUDA cores (I still call Nvidia GPU cores that), and extra 2GB of VRAM come together to show off the power of the GPU. The same can be said of the 3090 sitting at the top of the FS2020 chart at 4K.

HideOut: I hope the Intel ARC series is competitive and they put so much price pressure on nVidia that they nearly go broke. They're doing nothing but ripping off customers these days with this kinda ridiculous trash.

watzupken: I actually see no point in paying this much for an RTX 3000 series card when we are expecting RDNA3 and RTX 4000 series to be announced later this year. I feel Nvidia released this card with the intention of increasing prices, less to push performance. After all, between the RTX 3080 and RTX 3090, each "new" product is just marginally better than the previous one but costs quite a bit more for a few % improvement.

JarredWaltonGPU (replying to watzupken):

Like I said in the review, Nvidia claims this was an AIB-driven release. Meaning, MSI, Asus, EVGA, Gigabyte, etc. wanted an alternative to the 3080 Ti and 3080 10GB. Probably there were chips that could be used in the "formerly Quadro" line that have similar specs of 70 SMs and a 384-bit memory interface, and Nvidia created this SKU for them to use. But that would assume there's less demand for the professional GPUs right now, as otherwise Nvidia would presumably prefer selling the chips in that more lucrative market.