China Approves Nvidia's Mellanox Deal, Could Help Fight AMD and Intel in Exascale

Nvidia wires up its networking connections

Nvidia announced today that its $6.9 billion acquisition of Mellanox had cleared its final hurdle, approval from Chinese antitrust authorities, paving the way for the graphics card company to take control of the networking giant by April 27. The move could help Nvidia bolster its presence in the HPC and supercomputer segments, where the company has yet to land a notable exascale contract despite its overwhelming presence in GPU-driven AI and compute workloads in the data center. Meanwhile, Intel and AMD have both been tapped for the Department of Energy's (DoE) next generation of world-leading supercomputers.

Mellanox holds a commanding position in data center Ethernet and InfiniBand products. The acquisition obviously diversifies Nvidia's portfolio, but it also creates synergies that are larger than they appear on the surface.

Tying accelerators, like GPUs, together into one seamless architecture that spans a massive number of supercomputer nodes is the winning formula for reaching exascale-class performance within an acceptable power budget and physical footprint. So far, AMD and Intel seem to be winning the battle of these interconnected designs.

Because AMD and Intel design both CPUs and GPUs in-house, they can tie the two together in much more sophisticated ways than Nvidia can. AMD has won two of the three DoE exascale contracts, and its Frontier system is first in line for deployment in 2021. This 1.5-exaflop system will be powered by AMD's EPYC Milan CPUs and Radeon Instinct GPUs, and we recently learned that the CPUs and GPUs will support memory coherency via the Infinity Fabric.

Memory coherency between the CPUs and GPUs reduces data movement, which improves performance, reduces latency, and boosts performance per watt. It also eases programming requirements. Again, this is a capability that Nvidia currently lacks because it doesn't design its own data center CPUs. The company has adopted the CXL specification, which should include that feature in future revisions, but CXL won't arrive in shipping products until PCIe 5.0 comes to market.

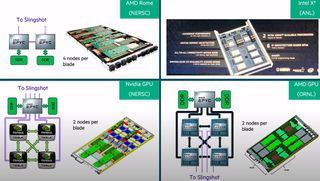

But there's another interesting tidbit. Cray, an HPE company, designs the chassis and Slingshot networking fabric for all three of the DoE's exascale supercomputers, and it has largely taken the lead in the HPC market thanks to its cutting-edge system and networking designs. Cray shared more details, seen in the slide shown here, during a recent presentation at the Rice Oil and Gas HPC Summit.

The block in the lower left-hand corner represents Nvidia's upcoming supercomputer design, which uses Cray's Shasta blades and Slingshot networking for the Perlmutter supercomputer. It shows the Nvidia Volta Next GPUs that power this pre-exascale system, which will come online in spring 2021, making it Nvidia's latest known design. Notice that the GPUs reside on a fabric tied to the EPYC Rome CPUs. However, the "To Slingshot" markings up top indicate that network traffic travels into the CPUs and only then on to the GPUs. Remember, Slingshot is an Ethernet-based fabric, and it is the key to Cray's success in developing highly scalable supercomputers. As a result, data from the network, which flows in and out of supercomputers at tremendous rates, has to pass through the CPU before landing in the Nvidia GPUs.

In contrast, the lower right-hand corner represents AMD's next architecture, which powers the Frontier supercomputer. Here the Slingshot networking fabric never touches the EPYC Milan CPU and instead connects directly to the GPUs. That means those accelerators will enjoy higher throughput and lower latency to the network, all without tying up the fabric that provides memory coherency with the EPYC processor.

Networking technologies like RDMA can cut through the CPU to offer direct memory access without involving the compute cores, but in Nvidia's design that data still has to traverse a secondary fabric. There's no substitute for the capabilities enabled by a high-bandwidth network connection directly into the GPU, which arguably makes the AMD implementation superior, at least conceptually. It's probably no coincidence that AMD's design scales to 1.5 exaflops of performance, while the Nvidia design in Perlmutter hits 100 petaflops.

As such, Nvidia's acquisition of Mellanox, a networking leader with an IP war chest, could give Nvidia access to the technologies it needs to create tighter integrations like this. Granted, Nvidia has its own exceptional switching technology in NVSwitch, which powers what the company calls the "World's Largest GPU," but that interface is primarily designed for GPU-to-GPU transfers. Mellanox's years of experience in networking and topologies should also help with future iterations of NVSwitch.

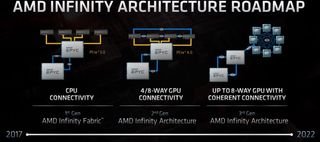

But AMD and Intel aren't sitting still. AMD's next-next-gen EPYC Genoa CPUs and Radeon Instinct GPUs will power what is slated to be the world's fastest supercomputer, the two-exaflop El Capitan, which uses an even more sophisticated third generation of the Infinity Architecture. Again, this relies on an amazing amount of integration between the CPU and GPU. The illustrations show eight GPUs tied together in a mesh/torus-type topology that's connected to the CPUs. Imagine the possibilities if this architecture also gave each of the eight GPUs a direct networking connection.

Intel is on the same path. Its as-yet unproven Ponte Vecchio GPUs come with a new interconnected node design that leans on the new Xe Memory Fabric, complemented by a 'Rambo Cache,' to tie CPUs and GPUs together into a single coherent memory space. This design will power the one-exaflop Aurora supercomputer.

Meanwhile, Nvidia might suffer in the supercomputer realm because it doesn't produce both CPUs and GPUs and, therefore, can't enable similar levels of integration. Are this type of architecture and its underlying unified programming models required to hit exascale-class performance within acceptable power envelopes? That's an open question. But both AMD and Intel have won exceedingly important contracts for the DoE's exascale-class supercomputers, and the broader server ecosystem often adopts the winning HPC techniques. Nvidia, on the other hand, hasn't announced any such wins despite its dominant position in GPU-accelerated compute in the HPC and data center space.

We can therefore expect Nvidia to expand into more holistic rack-level architectures to complement its prowess in GPU compute. Mellanox has plenty of promising new products in the works, including the chiplet-based Spectrum-3 line. Nvidia's recent acquisition of SwiftStack (for an undisclosed sum) also opens the door to complementary storage software for large-scale AI systems. The object-based storage company has plenty of experience with the vast network-attached data repositories used for AI workloads, which ties in nicely with Nvidia's burgeoning portfolio.

The industry is rife with speculation that all that's left for Nvidia to do is to roll out its own CPUs. ARM, anyone?

Nvidia plans to take over Mellanox on April 27th.

Paul Alcorn is the Managing Editor: News and Emerging Tech for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

JayNor: Both Nvidia and AMD have joined the consortium for the PCIe 5/CXL technology that Intel developed to enable asymmetric cache coherence between CPUs and accelerators.

Intel is using that next year in Aurora.

Deicidium369: With both CXL (intra-rack) and Gen-Z (inter-rack), the space for Mellanox is going to shrink. InfiniBand was originally designed to tie multiple machines together in a cluster; IB was a high-speed, ultra-low-latency interconnect (just like Gen-Z). I don't think all servers will be using Gen-Z, or CXL for that matter, but this has been the first real industry-supported challenger to IB.

I see CXL becoming ubiquitous, since Intel is the originator and the dominant data center supplier, and its full range of accelerators, from FPGAs to AI (Nervana, Habana), will all be CXL-ready for PCIe Gen5 and probably, not too long after, Gen6. This move to disaggregation makes at least CXL a sure thing.

jeremyj_83 (replying to Deicidium369):

You are forgetting that Mellanox doesn't make only InfiniBand products. They are currently the only company that makes the ICs, sells their own ICs, and white-boxes ICs for companies like Dell, HP, etc. On top of that, their Ethernet products are always at the same performance level as Broadcom's. Also, IB is primarily used in the HPC systems and supercomputers of the world, granted Ethernet is still more prevalent there. Gen-Z is nice, but for a while it will be a niche product, and during that time Mellanox can make Gen-Z work with its other products.

bit_user: @PaulAlcorn

"Nvidia might suffer in the supercomputer realm because it doesn't produce both CPUs and GPUs"

Nvidia designed several generations of custom ARM cores for their self-driving, tablet, and embedded SoCs. I know it's not the same thing as building server CPUs, but it's also not that far off.

castl3bravo (replying to bit_user):

Sounds like Jensen continues to play catch-up while Lisa has been making the right decisions since taking control "temporarily" as CEO. If Jensen tries to force additional revenue out of its ARM acquisition, I would expect Lisa and Intel to easily counter it with competing products.
