Intel Shares Details on Movidius Keem Bay, Other A.I. Hardware

Earlier this month, Intel provided updates on its AI portfolio, including the company’s new Nervana Neural Network Processors (NNPs) for training and inference. Intel also gave details about Keem Bay, its next-generation Movidius VPU (vision processing unit) for edge computing, which promises large increases in performance and efficiency.

Intel announced the dedicated hardware at its AI Summit event on November 12th. These products are arguably the culmination of more than three years of work since Intel acquired Movidius and Nervana in the second half of 2016 and created its AI Products Group, led by Nervana co-founder Naveen Rao.

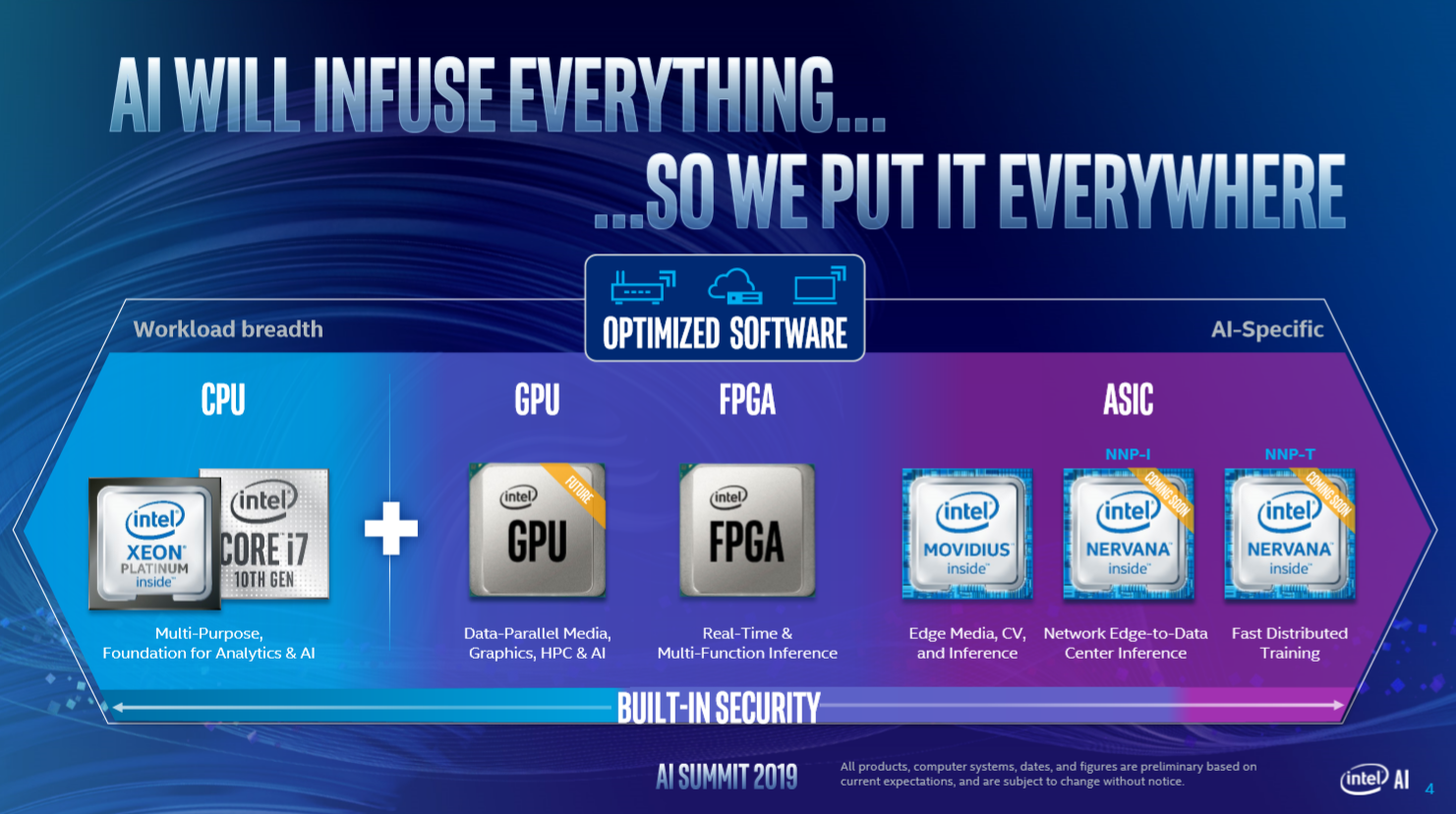

Rao pointed out that Intel is already a big player in AI, stating that its 2019 AI revenue will surpass $3.5 billion, up from over $1 billion in 2017 and larger than Nvidia’s data center business. (Most of that likely stems from Xeons doing inference in the data center.) Because, as Intel puts it, AI will be infused into practically everything, the company’s strategy is to build AI capabilities into its entire portfolio. It has pursued this through its OpenVINO vision toolkit for IoT, Agilex FPGAs, Ice Lake on the PC, Cascade Lake’s DLBoost and, further out, its future discrete graphics.

Processors: DLBoost

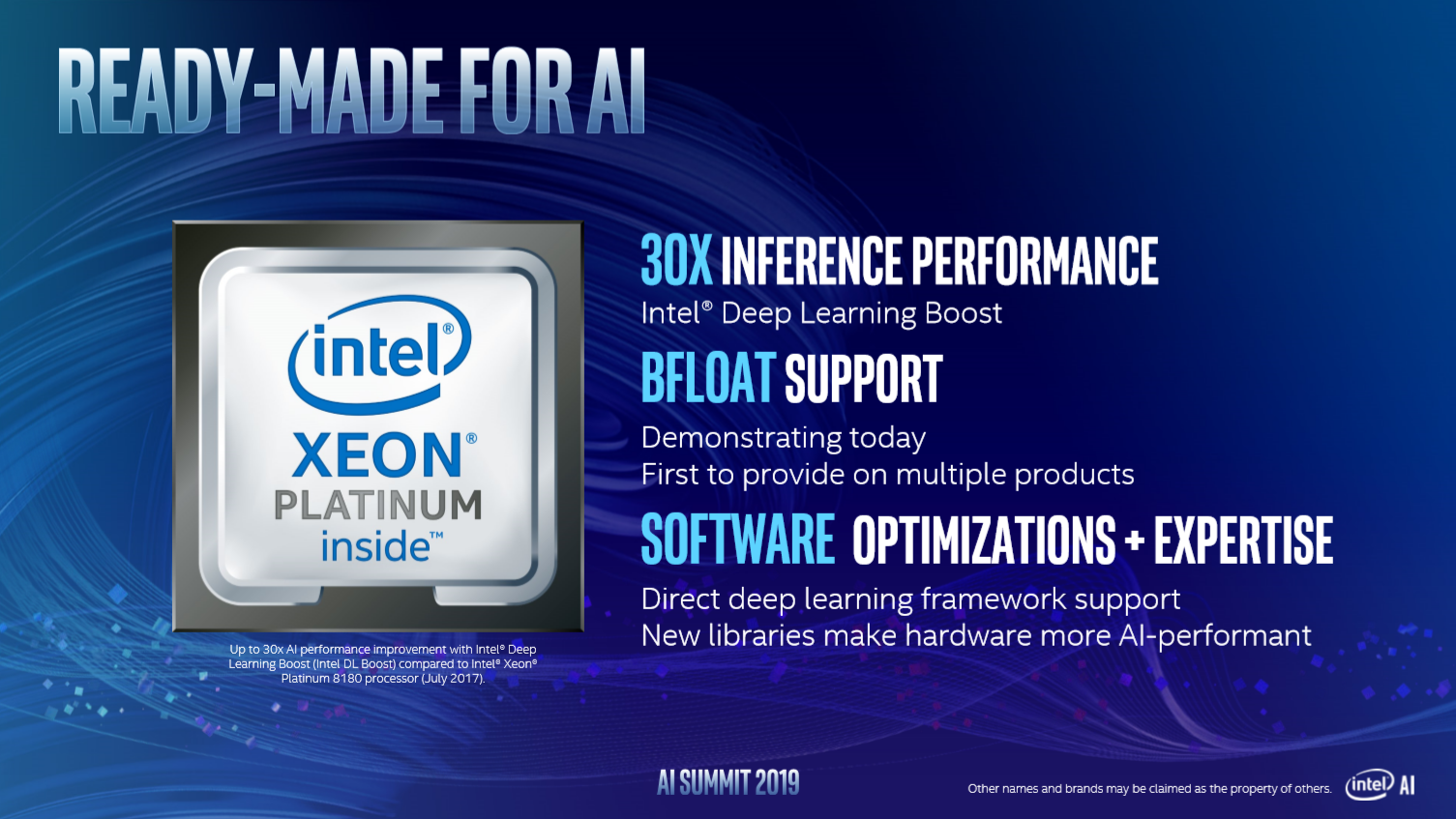

Intel demonstrated bfloat16 support on next year’s 56-core Cooper Lake, part of the DLBoost umbrella of AI features in its processors. Bfloat16 is a numeric format that achieves accuracy similar to single-precision floating point (FP32) in AI training, but with the smaller memory footprint of a 16-bit format. It does this by keeping FP32’s 8-bit exponent, and therefore its dynamic range, while cutting the mantissa to 7 bits.
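
To illustrate why bfloat16 keeps FP32-like range, here is a minimal Python sketch (our own illustration, not Intel’s code) that converts an FP32 value to bfloat16 by keeping its upper 16 bits, using round-to-nearest-even on the bits that are dropped. The helper names (`fp32_to_bf16_bits`, `bf16_bits_to_fp32`, `fits_in_fp16`) are hypothetical, and real hardware may treat rounding and NaNs differently.

```python
import struct


def fp32_to_bf16_bits(x: float) -> int:
    """Round x (as FP32) to bfloat16 with round-to-nearest-even; return the 16-bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # NaN: keep sign and exponent, force a quiet-NaN mantissa bit so it stays a NaN.
    if (bits & 0x7F800000) == 0x7F800000 and (bits & 0x007FFFFF):
        return ((bits >> 16) | 0x0040) & 0xFFFF
    # Round to nearest, ties to even, based on the 16 low bits being discarded.
    lsb = (bits >> 16) & 1
    return ((bits + 0x7FFF + lsb) >> 16) & 0xFFFF


def bf16_bits_to_fp32(b: int) -> float:
    """Widen a bfloat16 bit pattern back to FP32 by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


def fits_in_fp16(x: float) -> bool:
    """True if x can be packed as IEEE half precision (FP16) without overflowing."""
    try:
        struct.pack("<e", x)
        return True
    except OverflowError:
        return False


if __name__ == "__main__":
    for value in (1.0, 3.14159265, 1e38):
        bf = bf16_bits_to_fp32(fp32_to_bf16_bits(value))
        print(f"{value!r:>12} -> bf16 {bf!r}, fits in FP16: {fits_in_fp16(value)}")
```

Because bfloat16 shares FP32’s exponent, a value like 1e38 survives the round trip with well under 1% error, while it overflows FP16, whose largest finite value is 65,504. The cost is precision: pi comes back as 3.140625.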

Intel didn’t quantify the gain from bfloat16, but it did claim that for inference, Cooper Lake is 30x faster than Skylake-SP was at its July 2017 launch, an improvement that comes from both hardware and software.

On the PC side, Ice Lake incorporates the same DLBoost AVX-512_VNNI instructions found in Cascade Lake. Intel showed a Core i3 Ice Lake processor achieving 4.3x higher inference throughput than a Ryzen 7 3700U, although the Intel chip used both its CPU and integrated graphics, whereas the Ryzen processor used only its CPU.
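
The core VNNI instruction, VPDPBUSD, fuses into a single instruction the 8-bit multiply-accumulate that previously took three AVX-512 instructions (VPMADDUBSW, VPMADDWD and VPADDD). The sketch below is a scalar Python model of its semantics as we understand them from Intel’s instruction-set documentation: each 32-bit lane multiplies four unsigned 8-bit values by four signed 8-bit values, sums the products and adds them to a 32-bit accumulator with wraparound (the VPDPBUSDS variant saturates instead). The function names are hypothetical.

```python
from typing import List, Sequence


def wrap_int32(v: int) -> int:
    """Wrap an arbitrary Python int to signed 32-bit two's complement."""
    v &= 0xFFFFFFFF
    return v - (1 << 32) if v >= 0x80000000 else v


def vpdpbusd_lane(acc: int, a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """One 32-bit lane: acc + sum of four u8*s8 products, wrapped (no saturation)."""
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane takes exactly four byte pairs")
    if not all(0 <= a <= 255 for a in a_u8):
        raise ValueError("a_u8 must hold unsigned 8-bit values")
    if not all(-128 <= b <= 127 for b in b_s8):
        raise ValueError("b_s8 must hold signed 8-bit values")
    return wrap_int32(acc + sum(a * b for a, b in zip(a_u8, b_s8)))


def vpdpbusd(acc: Sequence[int], a_u8: Sequence[int], b_s8: Sequence[int]) -> List[int]:
    """Apply the lane operation across a vector; a 512-bit register holds 16 lanes (64 bytes)."""
    return [
        vpdpbusd_lane(acc[i], a_u8[4 * i:4 * i + 4], b_s8[4 * i:4 * i + 4])
        for i in range(len(acc))
    ]


if __name__ == "__main__":
    # 16 accumulators, 64 unsigned activations, 64 signed weights.
    print(vpdpbusd([0] * 16, [1] * 64, [2] * 64))
```

Packing four INT8 products into each 32-bit lane is what lets quantized inference run several times more multiply-accumulates per instruction than FP32 on the same vector width.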

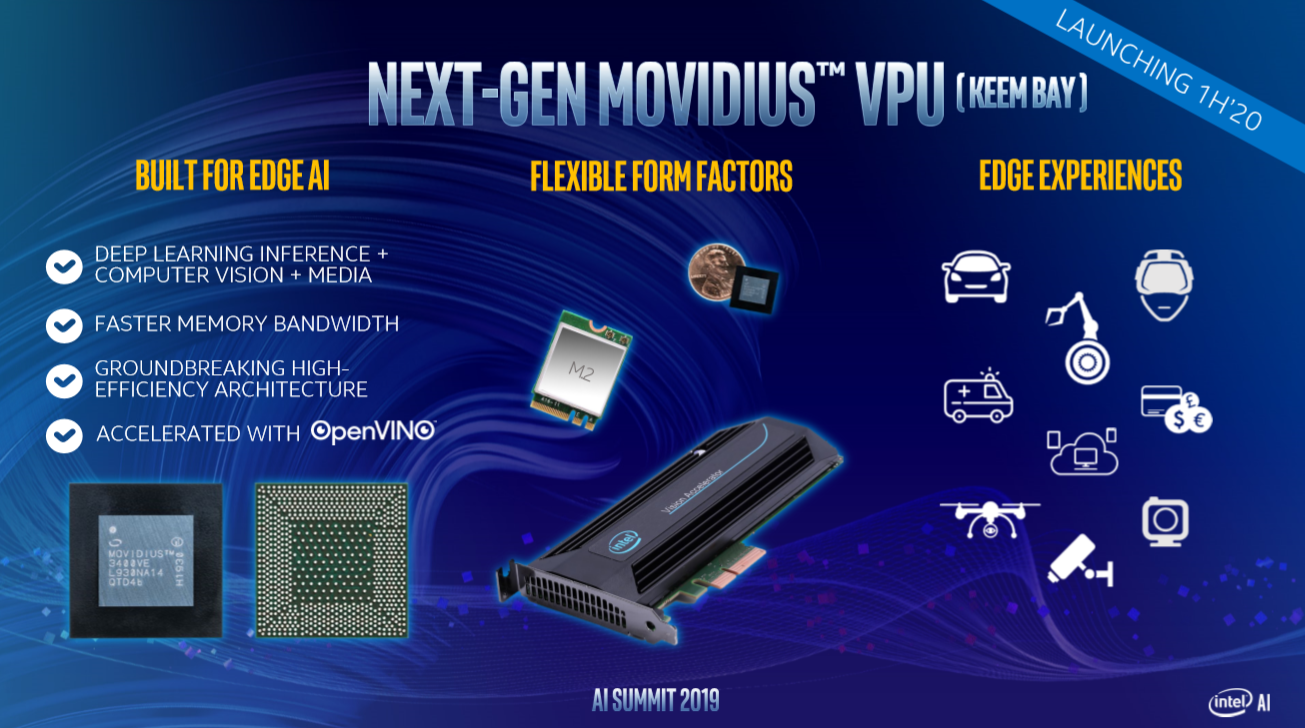

Movidius: Keem Bay VPU

As part of its push into machine vision and edge intelligence, such as smart cameras, robots, drones and VR/AR, Intel acquired Movidius in 2016. Movidius calls its low-power chips "vision processing units" (VPUs). They feature image signal processing (ISP) capabilities, hardware accelerators, RISC processors and programmable 128-bit VLIW vector processors, which Movidius calls SHAVE cores.


Movidius’ VPUs have found their way into several notable products, such as Google’s Clips camera, DJI’s Phantom 4 drone and the DJI-powered, toy-grade Ryze Tello. Movidius also offers its VPUs in the Neural Compute Stick form factor; the latest NCS2 is based on the Myriad X.

As a refresher, the Myriad X, introduced in 2017, added a dedicated 1 TOPS (tera operations per second) neural compute engine that made it 8x faster at inference than the Myriad 2, and it delivers a total of 4 TOPS across all of its compute units. It has 16 SHAVE cores, up from 12 on the Myriad 2, and a die size of 71mm².

Intel has now detailed what it calls the Gen 3 Intel Movidius VPU codenamed Keem Bay. According to Intel, it boasts more than 10x the inference performance compared to the Myriad X while consuming the same amount of power. Intel says this is due to “adding groundbreaking and unique architectural features that provide a leap ahead in both efficiency and raw throughput”. It also has a new on-die memory architecture.

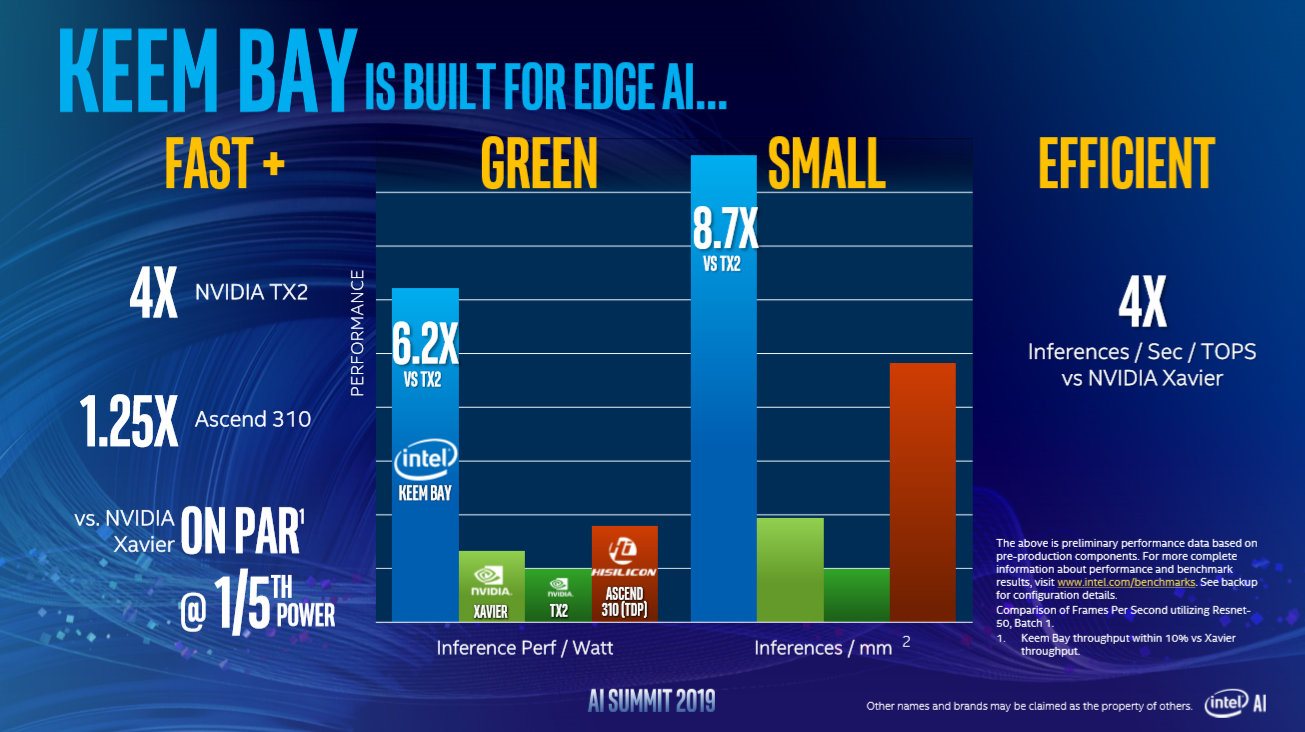

Indicative of those features, Intel claims Keem Bay achieves four times more inferences per second per TOPS than Nvidia’s 12nm, 30 TOPS Xavier, and that its overall throughput is on par with Xavier’s (within 10%) at one-fifth the power consumption. Compared to the Huawei HiSilicon Ascend 310 (16 TOPS at 8W), Intel claims 1.25x higher performance with ~3x higher energy efficiency. Its lead over Nvidia’s TX2 is even larger. Intel said Keem Bay consumes a third less power than the TX2’s 10W and a fifth of Xavier’s 30W, which would put Keem Bay at roughly 6W to 6.7W.
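
The implied Keem Bay power figure can be checked with simple arithmetic. This sketch takes Intel’s two claims at face value, reading "a third less than TX2’s 10W" as two-thirds of 10W and "a fifth of Xavier’s 30W" as 6W, and also turns the "within 10% of Xavier’s throughput at one-fifth the power" claim into a performance-per-watt ratio. The constant and function names are our own.

```python
TX2_POWER_W = 10.0     # Nvidia TX2 power figure quoted by Intel
XAVIER_POWER_W = 30.0  # Nvidia Xavier power figure quoted by Intel


def implied_power_from_tx2(tx2_w: float = TX2_POWER_W) -> float:
    """'A third less power than TX2' -> two-thirds of TX2's power."""
    return tx2_w * (1 - 1 / 3)


def implied_power_from_xavier(xavier_w: float = XAVIER_POWER_W) -> float:
    """'A fifth of Xavier's power' -> one-fifth of Xavier's power."""
    return xavier_w / 5


def perf_per_watt_ratio(relative_throughput: float, relative_power: float) -> float:
    """Perf/W advantage, given throughput and power relative to a baseline chip."""
    return relative_throughput / relative_power


if __name__ == "__main__":
    print(f"from TX2 claim:    {implied_power_from_tx2():.2f} W")
    print(f"from Xavier claim: {implied_power_from_xavier():.2f} W")
    # Throughput between 90% and 100% of Xavier's, at one-fifth of its power.
    lo = perf_per_watt_ratio(0.9, 1 / 5)
    hi = perf_per_watt_ratio(1.0, 1 / 5)
    print(f"perf/W vs Xavier: {lo:.1f}x to {hi:.1f}x")
```

The two readings give about 6.7W and 6W respectively, and a 4.5x to 5x performance-per-watt advantage over Xavier if Intel’s throughput claim holds.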

Intel also claims big gains in inferences per mm². While Intel didn’t disclose its manufacturing process, the chip’s efficiency and die size suggest it is likely TSMC 7nm. At 72mm², Keem Bay’s die is practically unchanged in size from the Myriad X’s 71mm², and compares to Xavier’s 350mm².
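
Intel did not give an inferences-per-mm² number here, but a rough estimate follows from the die sizes. This sketch assumes a Keem Bay die of about 72 mm² (a Myriad X-sized die of roughly 71 to 72 mm²) against Xavier’s 350 mm², and Keem Bay throughput between 90% and 100% of Xavier’s. It is back-of-envelope arithmetic under those assumptions, not an Intel figure; the names are hypothetical.

```python
XAVIER_DIE_MM2 = 350.0   # Xavier die area quoted above
KEEM_BAY_DIE_MM2 = 72.0  # assumption: roughly the same size as the Myriad X


def per_area_advantage(relative_throughput: float,
                       baseline_area_mm2: float = XAVIER_DIE_MM2,
                       chip_area_mm2: float = KEEM_BAY_DIE_MM2) -> float:
    """Inferences/s per mm^2 relative to a baseline: throughput ratio times area ratio."""
    return relative_throughput * baseline_area_mm2 / chip_area_mm2


if __name__ == "__main__":
    for rel in (0.9, 1.0):
        print(f"throughput {rel:.0%} of Xavier -> "
              f"{per_area_advantage(rel):.1f}x inferences/s per mm^2")
```

Under these assumptions Keem Bay would deliver roughly 4.4x to 4.9x the inferences per second per mm² of Xavier.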

Keem Bay will be supported by Intel’s OpenVINO vision AI toolkit (which Intel calls the fastest-growing tool in its history) and its new Edge AI DevCloud, which lets customers test their OpenVINO models on a variety of hardware configurations. Intel also announced an Edge AI nanodegree on Udacity.

Keem Bay will be available in M.2 and PCIe form factors in the first half of 2020. In the PCIe card form factor, multiple chips may be placed on one board, as with the Myriad X.

Nervana Neural Network Processors

Intel has NNPs for both deep neural network training and inference. Intel says deep learning models are growing in complexity far faster than Moore’s Law, doubling every 3.5 months, or roughly an order of magnitude per year. AlexNet in 2012 had 26 million parameters, while today’s ML-NLP models have 100 billion. Intel argues this makes dedicated hardware a necessity.
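
The "order of magnitude per year" figure follows from the doubling period: doubling every 3.5 months compounds to 2^(12/3.5), or about 10.8x per year. The sketch below compares that with a Moore’s Law doubling period, which we assume to be 24 months for illustration; the helper names are hypothetical.

```python
import math


def growth_per_year(doubling_period_months: float) -> float:
    """Annual growth factor for a quantity that doubles every `doubling_period_months`."""
    return 2 ** (12 / doubling_period_months)


def years_to_grow(factor: float, doubling_period_months: float) -> float:
    """Years needed to grow by `factor` at the given doubling period."""
    return math.log2(factor) * doubling_period_months / 12


if __name__ == "__main__":
    print(f"3.5-month doubling: {growth_per_year(3.5):.1f}x per year")
    print(f"24-month doubling (assumed Moore's Law): {growth_per_year(24):.2f}x per year")
    print(f"years to grow 1000x: {years_to_grow(1000, 3.5):.1f} vs "
          f"{years_to_grow(1000, 24):.1f}")
```

At a 24-month doubling period the same formula gives only about 1.41x per year, which is the gap Intel points to when arguing for dedicated hardware.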

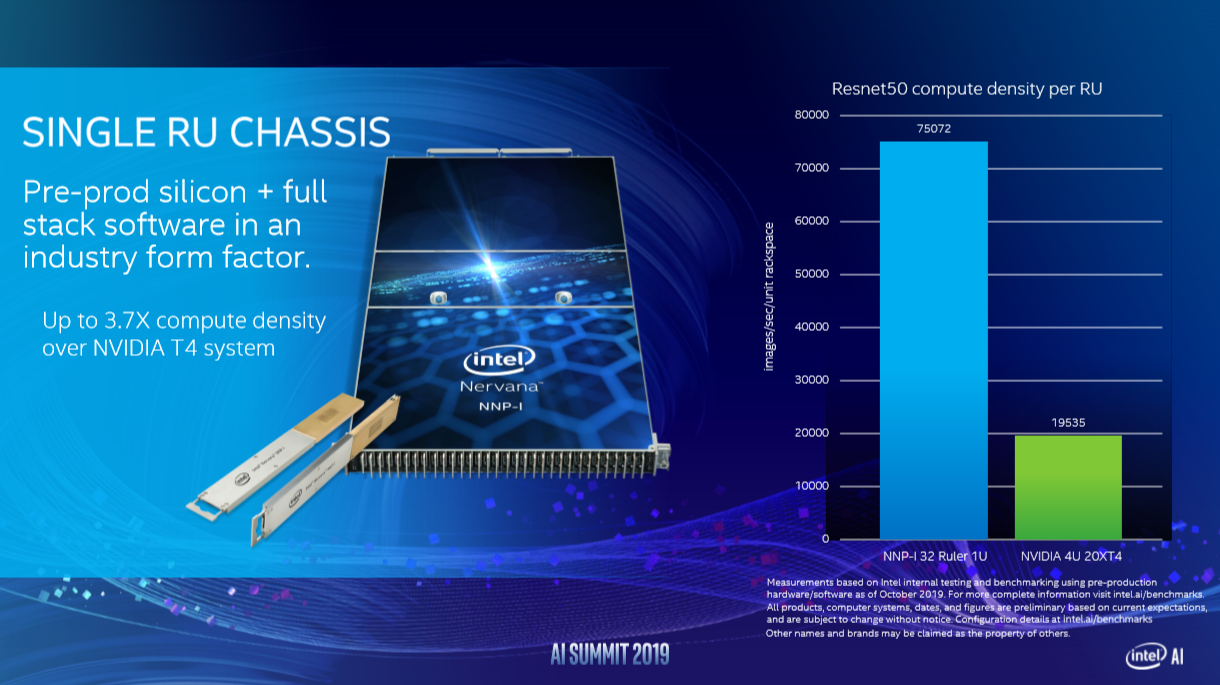

Intel’s NNP-I for inference combines two Ice Lake (Sunny Cove) CPU cores with twelve ICE (Inference Compute Engine) accelerator cores. Intel claims it will deliver leadership performance per watt and compute density. In its M.2 form factor, it delivers 50 TOPS at 12W, as Intel had already announced, which works out to about 4.2 TOPS/W; Intel has also cited peak efficiency of 4.8 TOPS/W. Intel disclosed that the PCIe card form factor draws 75W and delivers up to 170 TOPS (at INT8 precision).
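
Dividing the quoted TOPS by the quoted power gives the efficiency of each NNP-I form factor. A minimal sketch; the dictionary keys are just labels for the two configurations described above.

```python
from typing import Dict, Tuple

# (INT8 TOPS, watts) as quoted for each NNP-I form factor.
NNP_I_CONFIGS: Dict[str, Tuple[float, float]] = {
    "M.2": (50.0, 12.0),
    "PCIe card": (170.0, 75.0),
}


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak compute efficiency in TOPS per watt."""
    return tops / watts


if __name__ == "__main__":
    for name, (tops, watts) in NNP_I_CONFIGS.items():
        print(f"{name:>9}: {tops_per_watt(tops, watts):.2f} TOPS/W")
```

The M.2 figures work out to about 4.2 TOPS/W and the 75W PCIe card to about 2.3 TOPS/W, so a 4.8 TOPS/W figure does not follow directly from either configuration.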

Intel also showed its NNP-I in the "ruler" form factor it originally invented for SSDs, fitting 32 cards into a single rack unit (1U) chassis, and compared this to 20 Nvidia T4s in 4U. Intel claims this achieves 3.7x the compute density in ResNet-50. Assuming perfect performance scaling for both chips, this implies that one 12W NNP-I delivers about 60% of the performance of a 70W T4 (130 TOPS INT8).
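
The 60% figure comes from normalizing the density claim by cards per rack unit: 32 NNP-Is per 1U versus 20 T4s per 4U (5 per U). Under the same perfect-scaling assumption, per-card performance equals the density ratio times 5/32. The function name is hypothetical.

```python
def per_card_ratio(density_ratio: float,
                   cards_a: int, rack_units_a: float,
                   cards_b: int, rack_units_b: float) -> float:
    """Per-card performance of system A relative to system B.

    Assumes perfect scaling, so density = cards * per_card_perf / rack_units and
    density_ratio = (cards_a * p_a / rack_units_a) / (cards_b * p_b / rack_units_b).
    """
    return density_ratio * (cards_b / rack_units_b) / (cards_a / rack_units_a)


if __name__ == "__main__":
    # 32 NNP-Is in 1U vs 20 T4s in 4U, with Intel's claimed 3.7x ResNet-50 density.
    ratio = per_card_ratio(3.7, cards_a=32, rack_units_a=1, cards_b=20, rack_units_b=4)
    print(f"one NNP-I ~ {ratio:.0%} of one T4 on ResNet-50")
```

3.7 × 5 / 32 ≈ 0.58, which rounds to the roughly 60% quoted above.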

It is also cloud-native with Kubernetes support and comes with a full reference solution stack. Production will start this year.

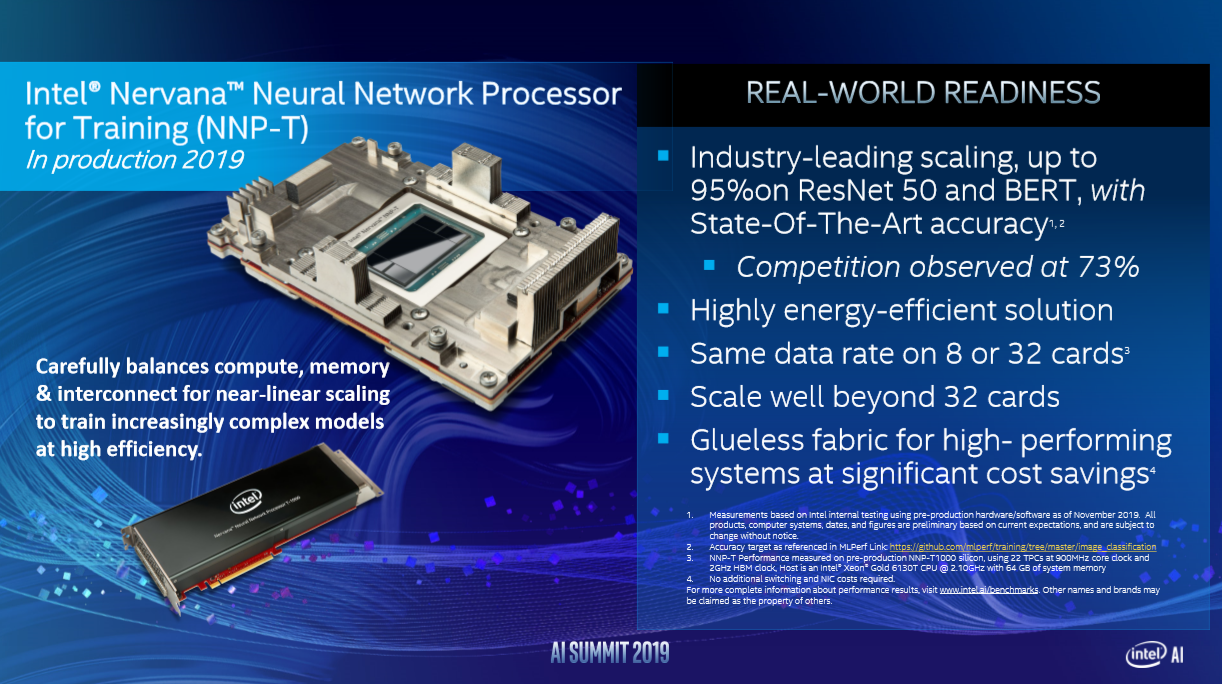

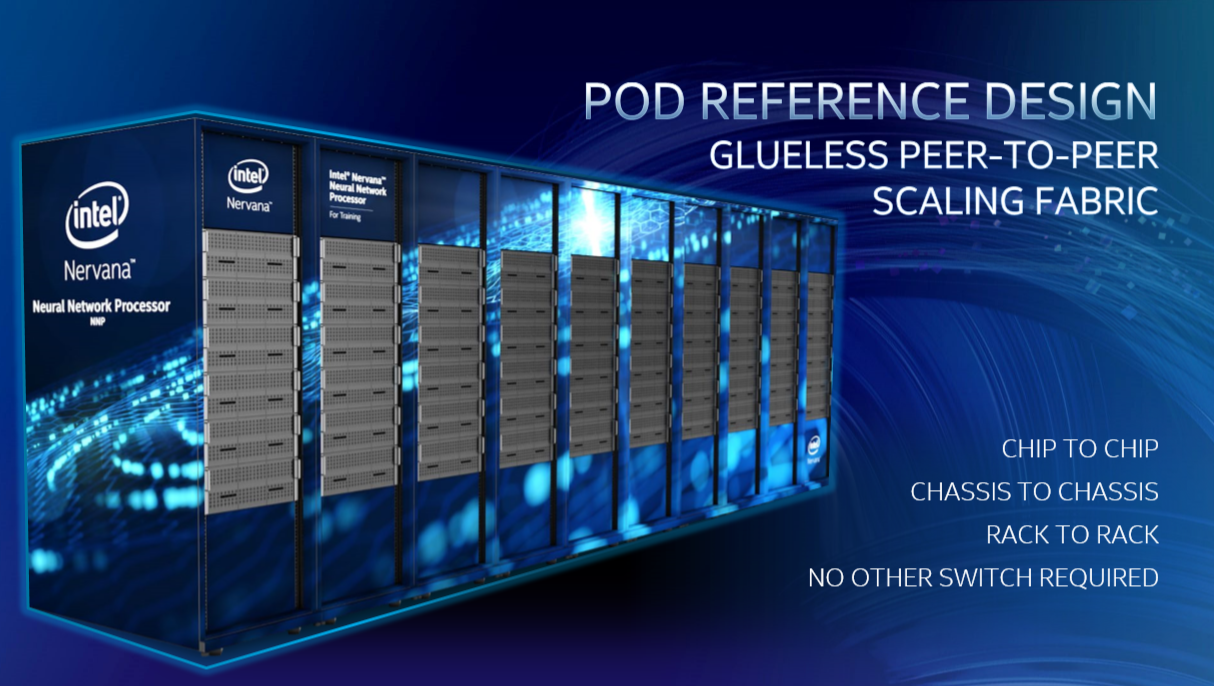

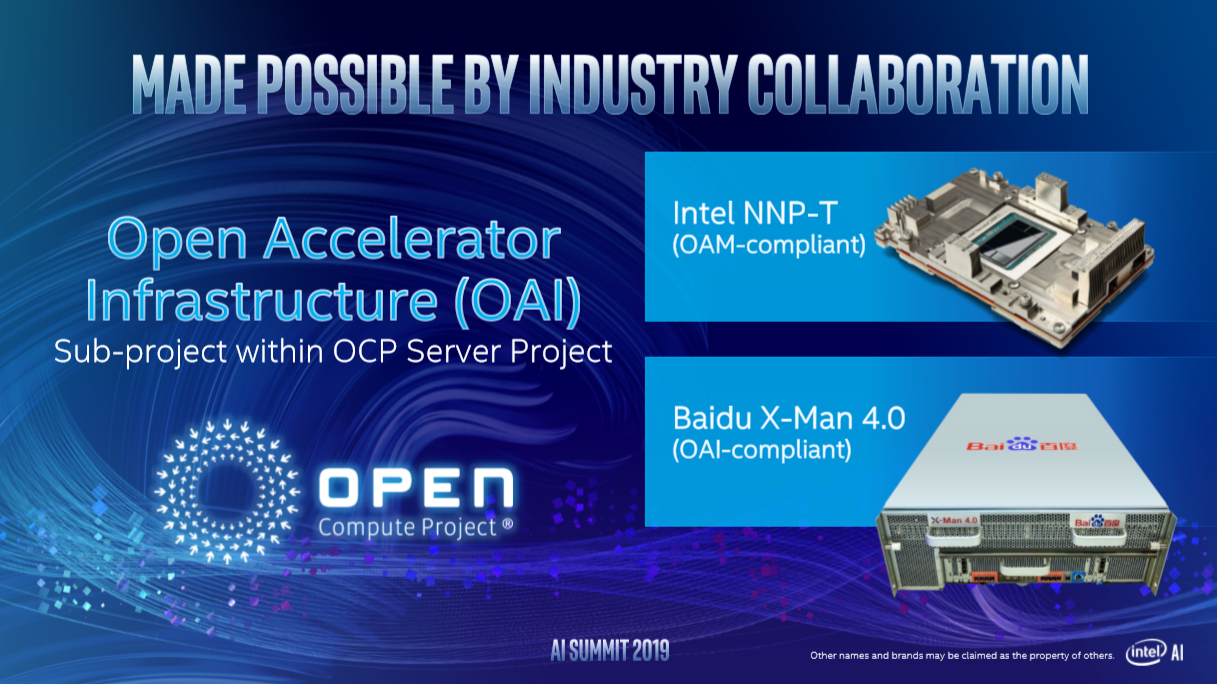

Moving to the NNP-T for training, Intel reiterated its high, near-linear scaling efficiency: 95% at 32 cards, compared to 73% for Nvidia. The NNP-T features a glueless fabric that avoids the cost of additional switches or NICs, and it can scale to hundreds or thousands of cards. To that end, Intel showed a pod reference design, the Nervana NNP Pod, with 480 chips in 10 racks. It also showed a rack designed with Baidu that holds 32 NNP-Ts.
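
Scaling efficiency is commonly defined as the measured speedup over N cards divided by N. Assuming Intel uses that definition, the quoted percentages translate into effective speedups as sketched below; the function names are our own.

```python
def effective_speedup(num_cards: int, scaling_efficiency: float) -> float:
    """Speedup over a single card, given efficiency = speedup / num_cards."""
    return num_cards * scaling_efficiency


def scaling_efficiency(num_cards: int, measured_speedup: float) -> float:
    """Inverse: efficiency from a measured multi-card speedup."""
    return measured_speedup / num_cards


if __name__ == "__main__":
    nnp_t = effective_speedup(32, 0.95)
    nvidia = effective_speedup(32, 0.73)
    print(f"32 cards: NNP-T {nnp_t:.1f}x, Nvidia {nvidia:.1f}x "
          f"({nnp_t / nvidia:.2f}x more throughput at equal card count)")
```

At 32 cards, 95% efficiency corresponds to about 30.4x the throughput of one card versus about 23.4x at 73%, roughly a 30% advantage at equal card count, before accounting for per-card performance differences.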

Final Thoughts

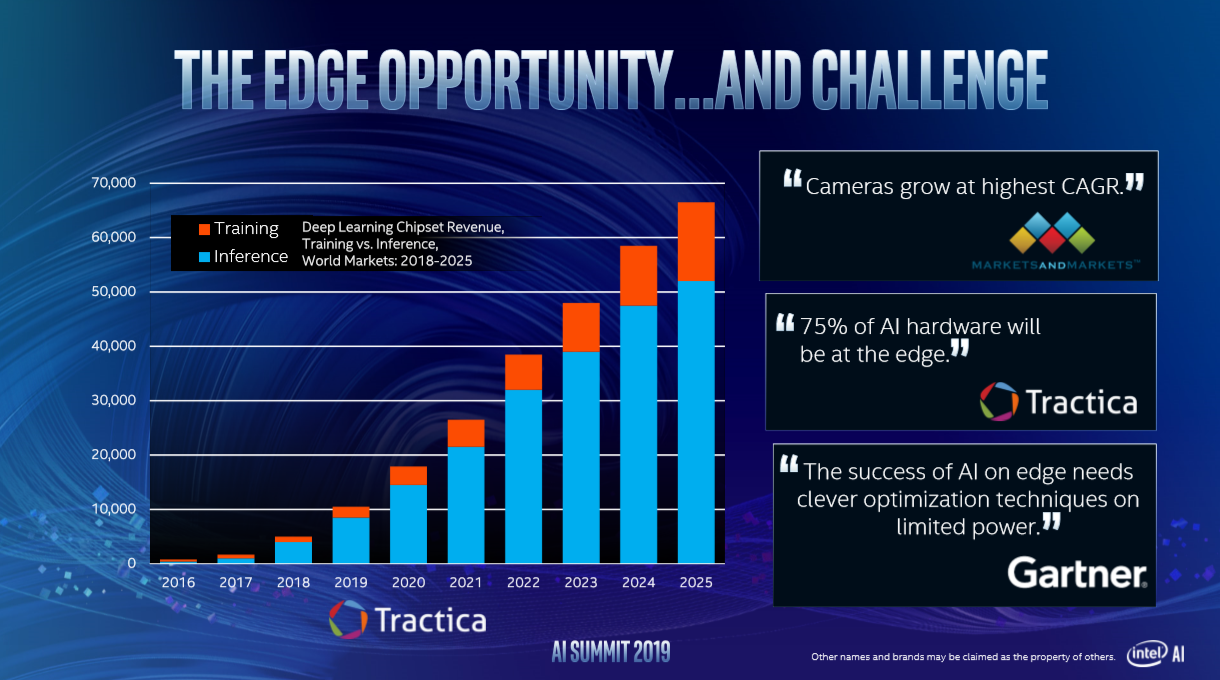

Like 5G and autonomous driving, the deep learning hype has settled down a bit, but it is still a nascent and rapidly growing market, estimated at $10 billion in revenue this year. It is forecast to grow to $40 billion in 2022 (including the edge), with the majority on the inference side.
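
Assuming "this year" means 2019, growing from $10 billion to $40 billion by 2022 implies a compound annual growth rate of 4^(1/3) - 1, or about 59% per year. A short sketch of the calculation, with a hypothetical helper name:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # $10B in 2019 to $40B in 2022 (three years, assuming "this year" is 2019).
    print(f"implied growth: {cagr(10.0, 40.0, 3):.1%} per year")
```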

Movidius has established itself at the edge, and Keem Bay looks to be a significant improvement over the Myriad X, beating the likes of Huawei and Nvidia to market with a (presumably) 7nm edge solution.

With the addition of the NNP-I and NNP-T cards for the data center, Intel is entering a new market and completing its portfolio of AI solutions alongside its AI-infused Xeon and Core processors, Gen graphics, Movidius VPUs, FPGAs and Mobileye EyeQ SoCs. Intel is several years behind Nvidia in the training market, though, and it has yet to show MLPerf results for its NNP-T. But by providing a well-scaling, glueless solution, it is likely to gain at least some traction. Huawei, meanwhile, beat even Nvidia to 7nm earlier this year with the 256 TFLOPS (FP16), 310W Ascend 910.

On the inference side, where competitors are trying to take share from Xeons, Nvidia has a slight time-to-market lead with the T4, and Qualcomm has announced the 7nm Cloud AI 100 for 2020. Intel’s pre-emptive response is the NNP-I, which focuses on energy efficiency. It also differentiates itself by including two Sunny Cove CPU cores, on the rationale that inference workloads involve more than raw throughput.