In Pictures: The Best Graphics Card Values In History

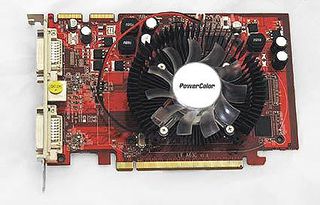

Radeon HD 2600 XT

A few months after the GeForce 8600 GT was introduced, AMD fired back with its Radeon HD 2600 XT. Like the Nvidia cards, the Radeon was overpriced at first. But direct competition between boards with comparable performance sparked a price war that eventually pushed them from over $150 to less than $100.

Radeon HD 2600 XT was based on the RV630 GPU, a 65 nm part with 120 stream processors, 800 MHz synchronous core and memory clocks, and a 128-bit memory interface.

GeForce 8800 GT (9800 GT)

At the end of 2007, roughly one year after the GeForce 8800 GTX emerged, Nvidia unleashed one of the most influential graphics cards of all time: the GeForce 8800 GT.

Based on a new 65 nanometer G92 GPU, the GeForce 8800 GT boasted 112 stream processors (compared to the flagship 8800 GTX's 128), 600 MHz core and 900 MHz memory clocks, and a 256-bit memory bus. Priced between $200 and $250, depending on RAM, this card performed tantalizingly close to the $600 GeForce 8800 GTX and easily beat AMD's Radeon HD 2900 XT.

How successful was it? The GeForce 8800 GT remains a viable gaming card today, four years later. It performs similarly to the Radeon HD 6670, and it outpaces the GeForce GT 240 GDDR5. Nvidia still has no suitable sub-$100 card to take its place. After serving as one of our recommended buys, dropping in price over the years, and then getting rebadged as GeForce 9800 GT, this legendary model only recently fell off our monthly column suggesting best gaming cards for the money (only because it was too hard to find).

Of all the cards in this list, the GeForce 8800 GT is perhaps the most revered.

Radeon HD 3850 And 3870

If the Radeon HD 3870 and 3850 had been launched into a world that didn't know Nvidia's GeForce 8800 GT, they would have dominated the mid-range landscape. But as fate would have it, they landed a couple months after Nvidia's budget-oriented coup. Although their impact was consequently muted, the AMD cards still offered modest performance for what they cost.

The Radeon HD 3850 featured a 55 nm GPU with 320 stream processors, a 670 MHz core, and 833 MHz memory operating on a 256-bit bus. The Radeon HD 3870 featured the same GPU with higher 775 MHz core and 1125 MHz memory frequencies. While the Radeon HD 3870 vied for attention below the GeForce 8800 GT, the Radeon HD 3850 found its niche with a much lower price.

By the end of their product cycles, the Radeon HD 3870 and 3850 were competing against newer GeForce 9600 derivatives at $100 and $85, respectively, setting a new standard in the sub-$100 gaming market.

GeForce 9600 GT/GSO

The Radeon HD 3870 didn't go uncontested under Nvidia's GeForce 8800 GT for very long. Early 2008 saw the introduction of the GeForce 9600 GT. Armed with a 65 nm G94 GPU, 64 shader processors, a 256-bit memory bus, and 650 MHz core/900 MHz memory clocks, this product performed on par with AMD's Radeon HD 3870. Over time, both boards battled it out down to a $100 price point.

As for the GeForce 9600 GSO, it wasn't derived from the same G94 processor. Rather, it was a re-badged GeForce 8800 GS, which in turn was a handicapped GeForce 8800 GT based on G92, sporting 96 of its 128 available shaders. The memory interface was correspondingly cut from 256 bits to 192, and on-board memory dropped to 384 MB. Nevertheless, GeForce 8800 GS (and then the 9600 GSO) delivered enough performance to keep up with AMD's Radeon HD 3850.

To make the market even more confusing, Nvidia later changed its 9600 GSO by using the 9600 GT's G94 GPU, stripping it down to 48 shader processors, and leaving its 256-bit memory bus intact. Given identical clocks (550 MHz core and 800 MHz memory), shader performance dropped, while memory bandwidth increased, yielding a product that usually performed in the same realm as the original, but not quite as well. As such, we don't include the G94-based GeForce 9600 GSO as part of this list.
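The trade-off between the two GeForce 9600 GSO variants can be sketched with simple ratios. This is a rough illustration only: it assumes that, at identical clocks, shader throughput scales with shader count and memory bandwidth scales with bus width, and it ignores any architectural differences between G92 and G94. The dictionary layout and variable names are made up for this example.

```python
from fractions import Fraction

# Figures taken from the text: original G92-based card vs. revised G94-based card.
original_gso = {"shaders": 96, "bus_bits": 192}
revised_gso = {"shaders": 48, "bus_bits": 256}

# Assumption: with identical core and memory clocks, both quantities
# scale linearly with unit count and bus width, respectively.
shader_ratio = Fraction(revised_gso["shaders"], original_gso["shaders"])
bandwidth_ratio = Fraction(revised_gso["bus_bits"], original_gso["bus_bits"])

print(f"Shader throughput, revised vs. original: {float(shader_ratio):.2f}x")
print(f"Memory bandwidth, revised vs. original: {float(bandwidth_ratio):.2f}x")
```

Under those assumptions the revised card has half the shader resources and one-third more memory bandwidth, which fits the description of a product that lands close to the original but slightly behind it.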

Radeon HD 4850 And 4870

The GeForce GTX 200 series was introduced in the middle of 2008, and the GeForce GTX 280 became the fastest graphics card ever seen. Shortly after, AMD introduced its Radeon HD 4850 and 4870. Although they couldn't contend on the performance front, both boards were priced to attract a broader audience able to appreciate solid frame rates at a reasonable price. The Radeon HD 4870 was priced at $300 and the 4850 cost $200, but they did battle against Nvidia's $400 GeForce GTX 260 and $230 GeForce 9800 GTX+.

Both AMD cards owed their speed and affordability to an efficient design. They're derived from the RV770, a 55 nm GPU with 800 shader processors and a 256-bit memory interface. The Radeon HD 4850 had a 625 MHz core and 993 MHz DDR3 memory, while the faster Radeon HD 4870 boasted a 750 MHz core and 900 MHz GDDR5 memory. Of course, GDDR5 gave the 4870 twice as much theoretical memory bandwidth per clock compared to DDR3, and that was part of the reason it did so well against Nvidia's GTX 260.
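To put numbers on that bandwidth difference, here is a minimal sketch of the usual peak-bandwidth estimate. It assumes the listed memory clocks are base clocks, that DDR3-class memory moves two transfers per clock, and that GDDR5 moves four; the function name and its parameters are illustrative, not taken from any vendor tool.

```python
def memory_bandwidth_gbps(memory_clock_mhz, transfers_per_clock, bus_width_bits):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return transfers_per_second * bytes_per_transfer / 1e9


# Assumption: 2 transfers per clock for DDR3-class memory, 4 for GDDR5.
hd4850 = memory_bandwidth_gbps(993, transfers_per_clock=2, bus_width_bits=256)
hd4870 = memory_bandwidth_gbps(900, transfers_per_clock=4, bus_width_bits=256)

print(f"Radeon HD 4850: {hd4850:.1f} GB/s")
print(f"Radeon HD 4870: {hd4870:.1f} GB/s")
print(f"4870 advantage: {hd4870 / hd4850:.2f}x")
```

Under these assumptions, the Radeon HD 4850 works out to roughly 63.6 GB/s and the Radeon HD 4870 to 115.2 GB/s. The 4870 ends up short of a full doubling because its memory clock is lower, even though each clock carries twice as many transfers.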

An inevitable price war followed, but the GeForce GTX 260 was a more expensive card to manufacture, so it never managed to drop as low as AMD's board. Incidentally, the Radeon HD 4870 made it all the way down to around $150 before getting replaced by the Radeon HD 5770. The GeForce 9800 GTX+, however, was later re-branded as the GeForce GTS 250 and managed to keep pressure on the Radeon HD 4850 all the way down to $100, where both cards delivered incredible value for a very long time.

GeForce GTS 250 (GeForce 9800 GTX+)

The GeForce 9800 GTX was introduced in the first quarter of 2008, priced between $300 and $350 and positioned as Nvidia's high-end solution. It was almost as fast as the GeForce 8800 Ultra, but it came in at a much lower price point. AMD's introduction of the Radeon HD 4850 halfway through the same year surprised everyone, and Nvidia fought back as best it could: by overclocking the GeForce 9800 GTX and dropping its price. The new model was called GeForce 9800 GTX+.

Using the same G92 core as the GeForce 8800 GTS 512 with all 128 of its shaders operational, the 55 nanometer GPU was hitched to a 256-bit memory bus. Given a 738 MHz core, this was the highest-clocked GeForce 8800/9800 card ever released, and the respectable 1100 MHz DDR3 memory frequency helped, too.

It performed so well, in fact, that it was re-badged as the GeForce GTS 250 in 2009 to keep it consistent with Nvidia's newer naming scheme. The GeForce GTS 250 battled it out with AMD's Radeon HD 4850 until both were discontinued, but not before they established themselves as value-oriented legends at $100.

Radeon HD 4670 And 4650

At the end of 2008, AMD continued its Radeon HD 4000 series with the mid-range Radeon HD 4670 and 4650. Their specifications were very close to those of the Radeon HD 3870 and 3850; the big differentiator was a memory interface narrowed from 256 bits to 128. All four boards shared the same 320-shader configuration, but the 4600-series cards were set apart by their clock rates: the Radeon HD 4650 came configured with a 600 MHz core and 500 MHz DDR2 memory, while the Radeon HD 4670 boasted up to twice the memory bandwidth with a 750 MHz core and 900-1000 MHz DDR3 memory.

The real strength of the Radeon HD 4670 and 4650 was their $80 and $70 launch prices, allowing the Radeon HD 4670 to instantly replace the aging, discounted Radeon HD 3850 and establishing the Radeon HD 4650 as the budget card to beat. Both models kept the sub-$100 graphics card market interesting until they were replaced by the Radeon HD 5570 and 5670 a year later.

Radeon HD 5770 (6770)

Soon after AMD released its Radeon HD 5800 series at the end of 2009, the company followed up with Radeon HD 5770 and 5750. These cards provided performance comparable to the Radeon HD 4870 and 4850 they replaced at similar prices. Although the Radeon HD 5770's value was clear at its $160 launch price, that number dropped soon after, earning the card recognition as a value leader.

With 800 stream processors and an 850 MHz core, the Radeon HD 5770 sounds an awful lot like an overclocked Radeon HD 4870. Both use GDDR5 memory, and the 5770's ICs run at 1200 MHz. But the Radeon HD 4870 benefited from a 256-bit bus, while the more mainstream Radeon HD 5770 made do with a 128-bit pipeline. Seemingly, the 4870 wasn't able to use all of that throughput, though, as the Radeon HD 5770 managed comparable performance despite its handicap.

Still available, the 5770 maintains a price just over $110, and there's nothing that can touch it at that price. Its closest competition is the GeForce GTX 550 Ti and Radeon HD 6790, both of which start at $130, leaving a significant value gap. The Radeon HD 5750 isn't a recommended option because it barely costs any less than the superior 5770.

The Radeon HD 5770 fits its target market so well that AMD skipped introducing a new product in this price segment, choosing instead to rebadge the 5770 as its Radeon HD 6770 in early 2011. The only difference is that the 6770 supports hardware acceleration of Blu-ray 3D playback.

Radeon HD 5570 And 5670

The Radeon HD 5670 and 5570 were introduced in early 2010, a few weeks apart. Both employ a 40 nm RV830 GPU with 400 stream processors and a 128-bit memory interface. The Radeon HD 5570 has a 650 MHz core and 900 MHz DDR3 memory, and the Radeon HD 5670 runs even faster thanks to its 775 MHz core and 1000 MHz GDDR5 memory. The GDDR5 RAM supplies the 5670 with more than twice the memory bandwidth of the 5570.
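The "more than twice" figure can be checked with a quick back-of-the-envelope calculation. As a sketch, assume theoretical peak bandwidth is

$$
B = f_{\text{mem}} \times n \times \frac{w}{8}
$$

where $f_{\text{mem}}$ is the listed memory clock, $n$ is the number of transfers per clock (assumed here to be 2 for DDR3 and 4 for GDDR5), and $w$ is the bus width in bits. Plugging in the figures above:

$$
B_{5570} = 900\,\text{MHz} \times 2 \times \frac{128}{8}\,\text{B} = 28.8\,\text{GB/s}, \qquad
B_{5670} = 1000\,\text{MHz} \times 4 \times \frac{128}{8}\,\text{B} = 64\,\text{GB/s}
$$

$$
\frac{B_{5670}}{B_{5570}} = \frac{64}{28.8} \approx 2.22
$$

These are theoretical peaks under the stated assumptions, not measured throughput.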

Let's look at the Radeon HD 5570 first. Its $80 launch price wasn't particularly attractive, since the card performed on par with the $10-cheaper Radeon HD 4670. But the Radeon HD 4670 has since been discontinued, letting the Radeon HD 5570 settle at $60. We call this today's baseline for gamers on a tight budget.

The Radeon HD 5670 is much more capable, though. It performs slightly behind the GeForce 8800/9800 GT, so its $100 launch price was again too high. We've seen it settle down at $80, though, creating a much better value argument. This is especially true now that the GeForce 8800/9800 GT and 9600 GT are at the end of their own lives.

GeForce GTX 460

In 2010, Nvidia finally introduced a serious sub-$250 card that wasn't based on its aging GeForce 8800 architecture. The GF104 GPU is a 40 nanometer part based on the Fermi architecture, but with updated and optimized streaming multiprocessors. GF104 first emerged in the form of GeForce GTX 460.

There are two versions of this card: the GeForce GTX 460 1 GB and GeForce GTX 460 768 MB. The difference in capacity affects other specifications. Though both boards include 336 CUDA cores, a 675 MHz core clock, and 900 MHz GDDR5 memory, the 1 GB model enjoys a 256-bit memory interface and 32 ROPs, while the 768 MB model has a 192-bit memory interface and 24 ROPs. Because the shader count and clocks match, performance is close. The gap between them is enough to justify a pair of price points, though. When they were launched, the GeForce GTX 460 1 GB went for $230 and the 768 MB card sold for $200.
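As a rough illustration of how the two models relate on paper, the sketch below compares the 768 MB card to the 1 GB card using only the figures quoted above. It assumes that, with identical memory clocks, bandwidth scales with bus width; the class and field names are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gtx460Variant:
    name: str
    bus_bits: int
    rops: int
    launch_price_usd: int


one_gb = Gtx460Variant("GeForce GTX 460 1 GB", 256, 32, 230)
small = Gtx460Variant("GeForce GTX 460 768 MB", 192, 24, 200)

# Assumption: both cards run the same memory clock, so the bandwidth
# ratio equals the bus-width ratio.
bandwidth_ratio = small.bus_bits / one_gb.bus_bits
rop_ratio = small.rops / one_gb.rops
price_ratio = small.launch_price_usd / one_gb.launch_price_usd

print(f"Bandwidth: {bandwidth_ratio:.0%} of the 1 GB card")
print(f"ROPs:      {rop_ratio:.0%} of the 1 GB card")
print(f"Price:     {price_ratio:.0%} of the 1 GB card")
```

On these numbers, the 768 MB card gives up a quarter of its memory bandwidth and ROPs for a launch price about 13% lower, while shader resources and clocks stay the same.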

After the Radeon HD 6850 was introduced, the GeForce GTX 460 1 GB was forced under the $200 mark and remains there today, fighting AMD's card at the $160 price point and delivering fantastic performance. Unfortunately, the GeForce GTX 460 768 MB was discontinued, its market taken over by the relatively new Radeon HD 6790 with no competition from Nvidia.
