Arm Plans To Make Edge Machine Learning Ubiquitous

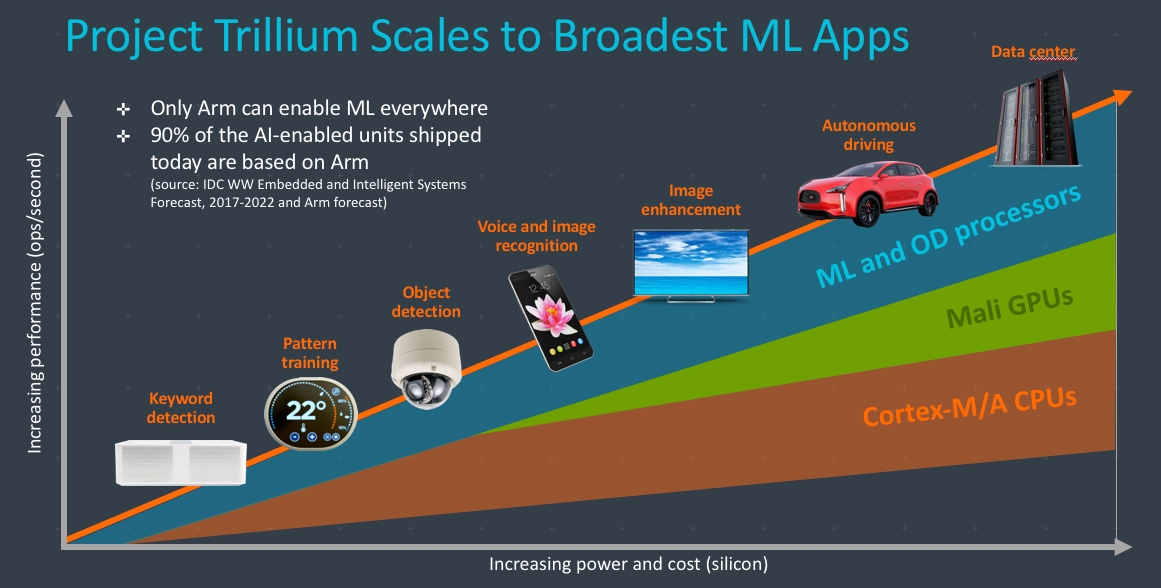

Arm announced Project Trillium, a new IP suite that aims to give every embedded device, from Internet of Things (IoT) products to smartphones and Advanced Driver Assistance Systems (ADAS), machine learning and object detection capabilities.

Arm At The Edge

The popularity of machine learning chips for data centers has exploded over the past few years, and we are still in the early days of artificial intelligence (AI) taking over everything around us.

However, to achieve that future, cloud machine learning is not going to be enough, because we’ll quickly run out of bandwidth if all the “smart objects” around us need to connect to distant servers. Real-time analysis is also difficult when every device needs a high-speed and low-latency connection.

This is where edge, or client-side, machine learning comes into play. Over the past couple of years, smartphone companies have launched increasingly powerful AI accelerators inside their devices to improve the phones’ object recognition and imaging capabilities. Apple, Huawei, and Google have all one-upped each other in the past year, and this trend will likely accelerate in the near future.

Arm seems to have noticed this shift some time ago, too, and is now announcing a family of new IP designed to help device makers put an AI accelerator in almost every device they build from now on. The Project Trillium name comes from the three main components: a machine learning (ML) processor, an object detection (OD) processor, and a low-level neural network (NN) software library.

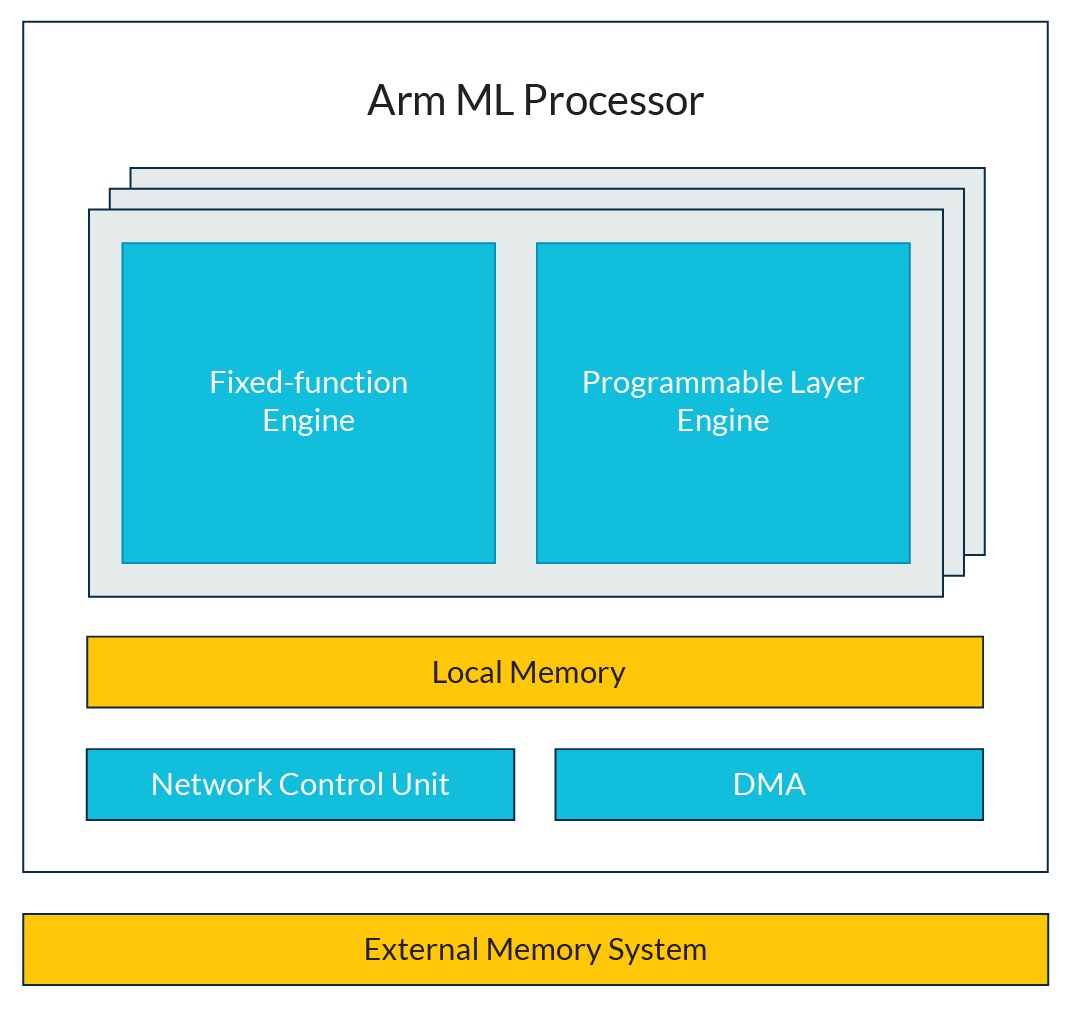

Machine Learning Processor

Arm designed a new, highly efficient machine learning processor rated at 3 trillion operations per second (TOPS) per watt, with total performance of up to 4.6 TOPS and peak power consumption of 2W.
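The three headline figures can be cross-checked with simple arithmetic. The sketch below only divides the quoted numbers and assumes efficiency stays constant across the chip's power range, which is a simplifying assumption; the gap between the two results suggests the efficiency and peak-power figures may describe different operating points rather than one.

```python
# Figures quoted in the article for Arm's ML processor
PEAK_TOPS = 4.6               # peak throughput, trillions of operations per second
EFFICIENCY_TOPS_PER_W = 3.0   # claimed efficiency
PEAK_POWER_W = 2.0            # quoted peak power consumption


def implied_power_w(tops: float, tops_per_watt: float) -> float:
    """Power needed to sustain `tops`, assuming a fixed efficiency."""
    return tops / tops_per_watt


def implied_efficiency(tops: float, watts: float) -> float:
    """Efficiency implied by running `tops` at `watts`."""
    return tops / watts


power_at_claimed_eff = implied_power_w(PEAK_TOPS, EFFICIENCY_TOPS_PER_W)
eff_at_peak_power = implied_efficiency(PEAK_TOPS, PEAK_POWER_W)

print(f"4.6 TOPS at 3 TOPS/W needs about {power_at_claimed_eff:.2f} W")
print(f"4.6 TOPS at 2 W works out to {eff_at_peak_power:.2f} TOPS/W")
```

Running 4.6 TOPS at the claimed 3 TOPS/W would need roughly 1.5W, comfortably inside the 2W peak.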

This would make it the highest-performance embedded AI processor on the market right now, considering that even the current mobile AI king, the Pixel Visual Core, achieves "only" 3 TOPS. However, Arm’s new processor won’t reach the market until at least the second half of 2018, and it’s not clear whether the highest-performance version will be available then or later.


Arm promises another 2x-4x performance uplift in real-world uses through intelligent data management, but again, it may take some time for device makers and app developers to squeeze that kind of performance out of the ML processor. Either way, we should be seeing a “massive” AI performance increase in smartphones over the next 12-18 months as smartphone makers start to adopt these chips.

Similarly, we’re going to see a significant number of IoT devices rapidly adopt less powerful, cheaper versions of this processor. The Arm ML processor can scale down to just 2 giga operations per second (GOPS), roughly three orders of magnitude below what we can expect in smartphones. It can also scale up to 150 TOPS for ADAS and servers.
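To put the scaling range in perspective, here is a quick calculation using only the three performance points quoted above. It expresses each gap as a ratio and as a base-10 logarithm (orders of magnitude).

```python
import math

# Performance points quoted in the article, in operations per second
LOW_END_OPS = 2e9         # 2 GOPS, smallest IoT configuration
SMARTPHONE_OPS = 4.6e12   # 4.6 TOPS, top mobile configuration
HIGH_END_OPS = 150e12     # 150 TOPS, ADAS and server configurations


def orders_of_magnitude(high: float, low: float) -> float:
    """Base-10 logarithm of the ratio between two performance points."""
    return math.log10(high / low)


pairs = [
    ("smartphone vs IoT", SMARTPHONE_OPS, LOW_END_OPS),
    ("ADAS/server vs smartphone", HIGH_END_OPS, SMARTPHONE_OPS),
    ("ADAS/server vs IoT", HIGH_END_OPS, LOW_END_OPS),
]

for name, high, low in pairs:
    print(f"{name}: {high / low:,.0f}x "
          f"({orders_of_magnitude(high, low):.1f} orders of magnitude)")
```

The smartphone-to-IoT gap works out to about 2,300x, or roughly 3.4 orders of magnitude, and the full design spans close to five.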

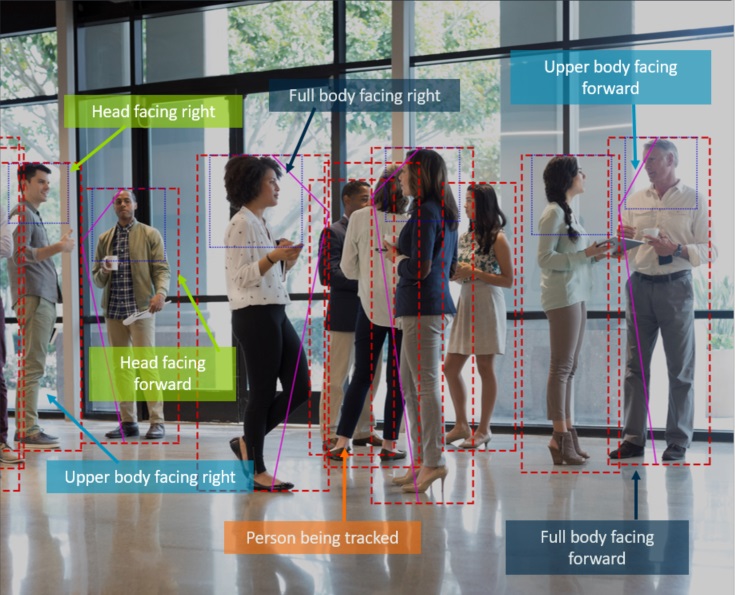

Object Detection Processor

While the ML processor will be programmable, giving developers more flexibility, the OD processor uses fixed-function hardware to gain further efficiency and excel at a single task: image recognition.

The processor will be able to scan every frame in real time at Full HD (1920x1080) resolution and 60 fps, and output a list of detected objects along with their locations within the scene. It will be able to recognize objects as small as 50x60 pixels, and its software will also be able to identify human poses, gestures, behavior, and faces.
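A short sketch of what those numbers imply. The frame-budget and pixel-rate arithmetic follows directly from Full HD at 60 fps. The `Detection` record and `reportable` filter are hypothetical: they only model "a list of detected objects with their locations" and the 50x60 minimum size, assuming that means 50 pixels wide by 60 tall. They say nothing about how the OD hardware actually finds objects.

```python
from dataclasses import dataclass

# Real-time workload for Full HD at 60 fps, as described in the article
FRAME_WIDTH, FRAME_HEIGHT = 1920, 1080
FPS = 60

frame_budget_ms = 1000 / FPS                       # time available per frame
pixels_per_second = FRAME_WIDTH * FRAME_HEIGHT * FPS


@dataclass(frozen=True)
class Detection:
    """Hypothetical entry in the list of detected objects (bounding box)."""
    label: str
    x: int       # left edge, pixels
    y: int       # top edge, pixels
    width: int
    height: int


# Assumption: "50x60 pixels" means 50 px wide by 60 px tall
MIN_WIDTH, MIN_HEIGHT = 50, 60


def reportable(detections: list[Detection]) -> list[Detection]:
    """Keep only boxes at or above the quoted minimum object size."""
    return [d for d in detections
            if d.width >= MIN_WIDTH and d.height >= MIN_HEIGHT]


frame = [
    Detection("person", 400, 200, 120, 300),
    Detection("face", 430, 210, 50, 60),
    Detection("bird", 1500, 90, 30, 24),   # below the minimum size
]

print(f"{frame_budget_ms:.2f} ms per frame, {pixels_per_second:,} pixels/s")
print([d.label for d in reportable(frame)])
```

At 60 fps, each frame must be processed in under about 16.7 ms, which means handling roughly 124 million pixels every second.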

The Arm OD processor will allow surveillance cameras to perform all or most of their image recognition locally and offline, unlike most current “smart cameras,” which need a constant internet connection for object recognition.

The new processors should also enable much better user privacy, because private home recordings won’t need to be stored on the camera company’s servers. Even companies with the best intentions can suffer a data breach that exposes user information to cybercriminals.

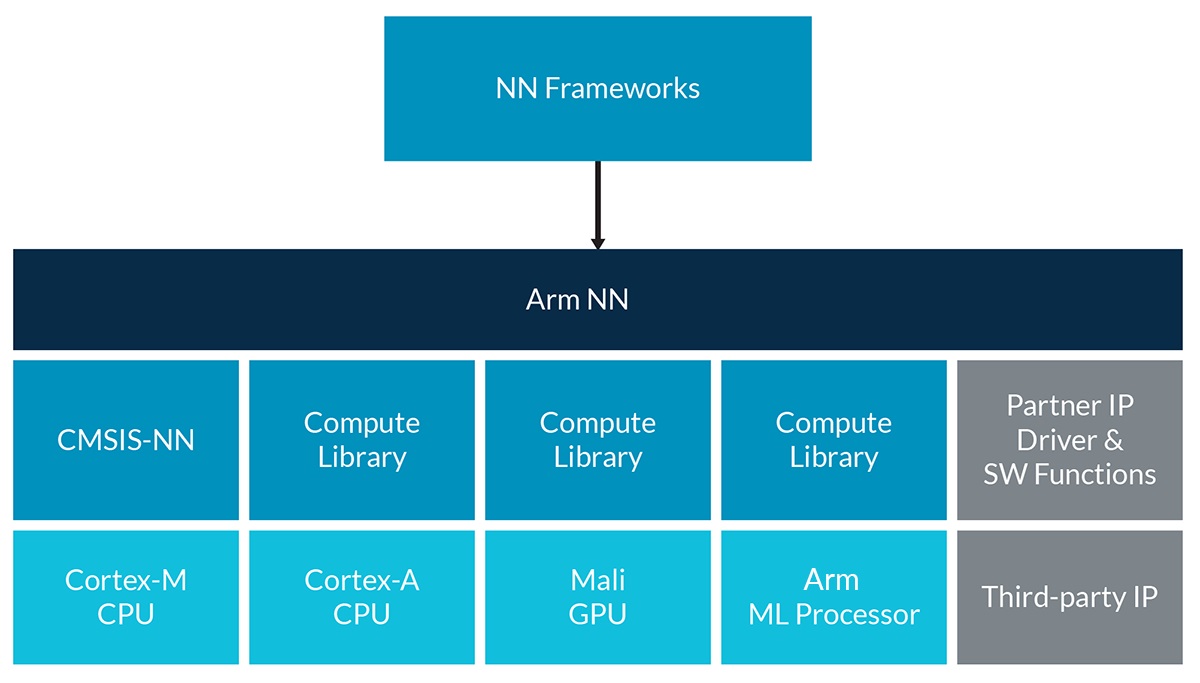

Neural Network SDK

Arm has developed a lower-level software development kit (SDK) that can more efficiently translate models from existing neural network frameworks such as TensorFlow, Caffe, Caffe2, and MXNet. It can also integrate with Android’s NNAPI, which Arm says yields a 4x performance boost. The NN SDK will support Cortex-A and Cortex-M processors, as well as Mali GPUs, and support for the ML processor will follow later this year.
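Conceptually, an SDK like this sits between the framework and whatever Arm hardware a device actually contains. The sketch below illustrates that idea with a simple preference-ordered backend choice. Every name in it is hypothetical and not part of Arm's SDK, and the preference order (dedicated ML processor first, then GPU, then CPU cores) is an assumption.

```python
from enum import Enum


# Hypothetical names: these are not identifiers from Arm's SDK.
class Backend(Enum):
    ML_PROCESSOR = "Arm ML processor"   # support planned for later in the year
    MALI_GPU = "Mali GPU"
    CORTEX_A = "Cortex-A CPU"
    CORTEX_M = "Cortex-M CPU"


# Assumed preference order, most capable target first
PREFERENCE = [Backend.ML_PROCESSOR, Backend.MALI_GPU,
              Backend.CORTEX_A, Backend.CORTEX_M]


def pick_backend(available: set[Backend], supported: set[Backend]) -> Backend:
    """Return the most preferred backend that is present and supported."""
    for backend in PREFERENCE:
        if backend in available and backend in supported:
            return backend
    raise RuntimeError("no supported backend on this device")


# A phone with a Mali GPU and Cortex-A cores, before ML processor support lands
phone = {Backend.MALI_GPU, Backend.CORTEX_A}
sdk_today = {Backend.MALI_GPU, Backend.CORTEX_A, Backend.CORTEX_M}
print(pick_backend(phone, sdk_today).value)
```

Once ML processor support ships, adding it to the supported set would let the same model run on the dedicated accelerator without changing the application code, which is the kind of portability such an SDK aims for.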

Arm seems to be going all-in on machine learning, not wanting to miss the “biggest inflection point in computing for more than a generation.” Because Arm already dominates embedded computing, it will be only a matter of time before most “smart devices” come with their own powerful AI accelerator.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

bit_user The OD processor was a good move. Otherwise, I see their ML block just being covered over by Android’s NNAPI as yet another hardware inferencing accelerator, offering them little chance of differentiation.

The main users of their NN API will probably be embedded apps not running on Android, perhaps including various robots and IoT.