Nvidia's Arm-based N1X-equipped gaming laptops are reportedly set to debut this quarter, with N2 series chips planned for 2027; new roadmap leak finally hints at a consumer release for Windows-on-ARM machines

N1 might finally be here.

More than two years ago, rumors of consumer-focused ARM SoCs from Nvidia first began to circulate, and since then, we've learned about the existence of N1/N1X only through leaks. Most recently, a shipping manifest listing an unreleased Dell laptop with an N1X chip was spotted, reigniting hope for the platform's eventual release. Today, a new report from DigiTimes says that N1 is back on Nvidia's internal roadmap.

"According to supply chain operators, according to NVIDIA's latest technology blueprint, the Windows on Arm (WoA) platform NB model using N1X will debut in the first quarter of 2026, first targeting the consumer market, and the other three versions will go on sale in the second quarter, and the next-generation N2 series is expected to take over in the third quarter of 2027." — DigiTimes Taiwan

The quote above, lifted directly from the aforementioned report, points toward a Q1 2026 release for N1X, with three more variants following in Q2 2026, likely aimed at the enterprise segment. The silicon itself already exists: CEO Jensen Huang has confirmed that the GB10 Superchip inside DGX Spark is powered by N1 silicon. Perhaps it's finally time for that silicon to escape its AI confinement and pop up in consumer products.

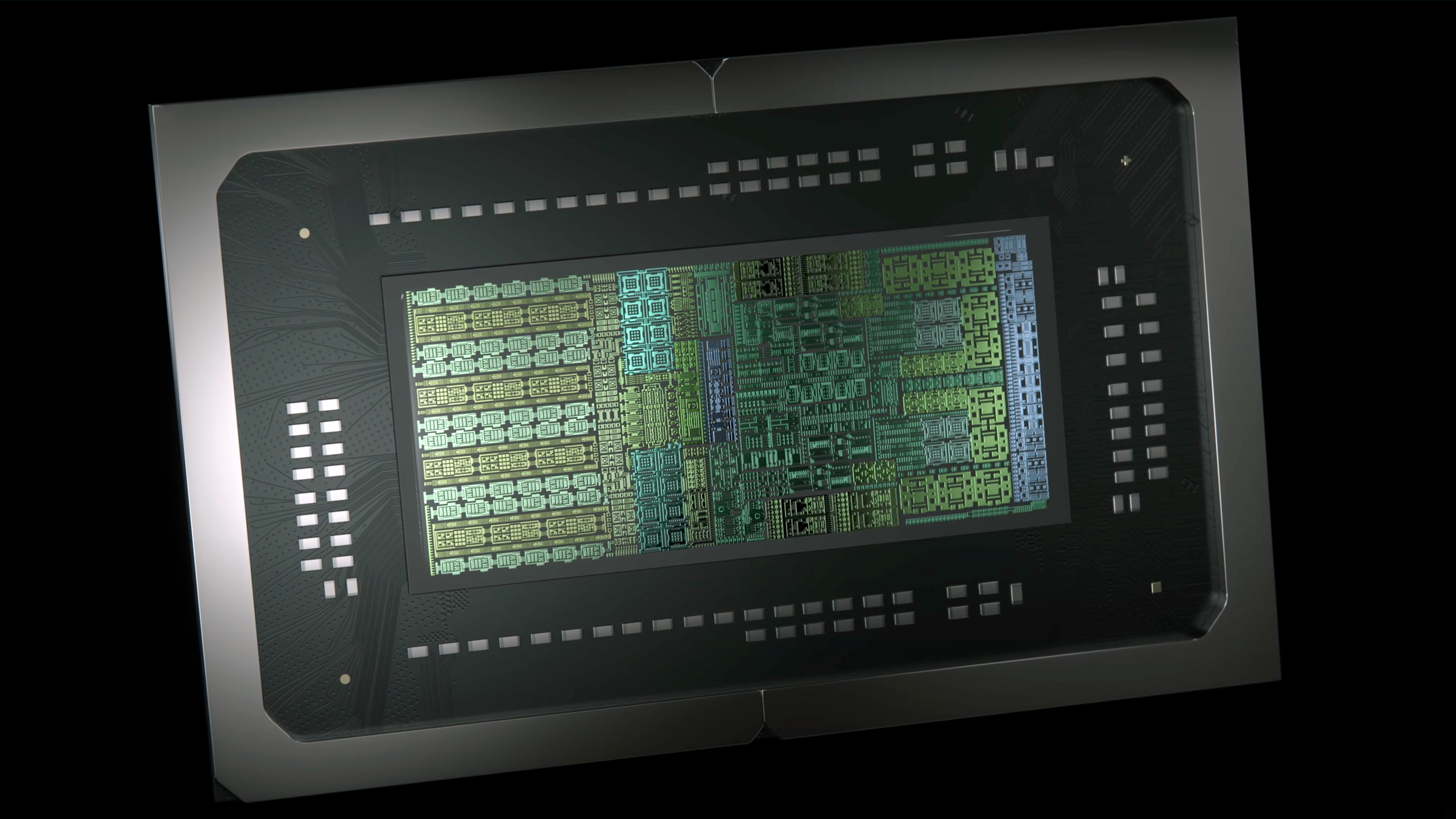

Through that GB10 confirmation, we know that the N1 features a 20-core ARM CPU paired with an RTX 5070-class GPU. Some early benchmark leaks have painted an exciting picture, but their preliminary nature means we can't read too much into them. Still, the fact that any have surfaced at all means the chip is certainly out there, just stuck in prototype hell, at least as far as a mainstream release is concerned.

More interestingly, DigiTimes also mentions that the next-gen N2 series is already under development and planned for Q3 2027. If these timelines are accurate, it's strange that N1 was a no-show at CES 2026, with its purported debut so close. Still, the rumor lines up with the Dell laptop leak we covered recently; could the XPS lineup be the frontrunner to showcase N1?

"In terms of product schedule, NVIDIA has also released a next-generation plan, using the N2X DGX Spark model, which will debut in the fourth quarter of 2027 at the earliest; The N2 model of the WoA platform will be launched in the third quarter of 2027 at the earliest." — DigiTimes Taiwan

Despite Panther Lake's recent impressive showing, AMD's Strix Halo is so far the only fully fledged substitute for discrete graphics on a mobile platform. If the reports are to be believed, that could soon change with a new competitor.


Apple has shown what the ARM architecture is capable of for consumers, and Qualcomm is trying to repeat that magic with its Snapdragon X lineup. Microsoft's efforts to popularize Windows-on-ARM, even with proper x64 emulation now baked in, have been underwhelming so far, but all that could change with the N1/N1X.

That being said, given the AI boom, pricing for the platform may not be as enticing as originally planned. The N1 was initially rumored to launch at Computex 2025, but that ship has sailed, and along with it, fair prices for DRAM and NAND flash. Nvidia is also working with Intel, in a partnership now worth $5 billion, to develop Intel x86 RTX SoCs that pair the Blue Team's CPUs with the Green Team's GPUs in the same package.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

-

bit_user

The article said: "Some early benchmark leaks have painted an exciting picture,"

Jeff Geerling briefly tested gaming performance on GB10. It's about what you'd expect from an RTX 5070 with about 40% of the memory bandwidth of the dGPU version (probably more like 1/3rd, after the CPU cores take their share of the unified memory bandwidth):

Video: https://www.youtube.com/watch?v=FjRKvKC4ntw
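For anyone wanting to check the "about 40%" figure, here is a rough sketch of the peak-bandwidth arithmetic. The memory configurations are assumptions supplied here, not stated in the comment: GB10 is taken as a 256-bit LPDDR5X-8533 interface and the desktop RTX 5070 as 192-bit GDDR7 at 28 Gbps per pin. Both results are theoretical peaks; real-world throughput is lower on either part.

```python
# Rough peak-bandwidth arithmetic behind the "about 40%" estimate.
# Assumed specs (not from the comment): GB10 = 256-bit LPDDR5X-8533,
# desktop RTX 5070 = 192-bit GDDR7 at 28 Gbps (28000 MT/s) per pin.


def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mtps: float) -> float:
    """Theoretical peak in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * transfer_rate_mtps / 1000


gb10 = peak_bandwidth_gbs(256, 8533)      # ~273 GB/s, shared by CPU and GPU
rtx5070 = peak_bandwidth_gbs(192, 28000)  # ~672 GB/s, dedicated to the GPU

ratio = gb10 / rtx5070
print(f"GB10: {gb10:.0f} GB/s, RTX 5070: {rtx5070:.0f} GB/s, ratio: {ratio:.0%}")

# How much of the shared pool the CPU cores would have to consume
# for the GPU's remaining share to fall to one third of the dGPU's.
cpu_share_for_one_third = gb10 - rtx5070 / 3
print(f"CPU share needed for a 1/3 ratio: {cpu_share_for_one_third:.0f} GB/s")
```

The second calculation only shows how large the CPU's share would have to be for the 1/3 figure to hold; it is not a measurement of actual CPU traffic.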

As for the Geekbench score, his GB10 testing did achieve a single-core score of 3123, but he noted what would be quite a high system idle power (31.5 W) for a laptop. So, it wouldn't be surprising to see the scores for the N1 version scale back a bit, as they tame power consumption to be more in line with a laptop. Of course, no laptop will have a ConnectX-7 network card, capable of 200+ Gbps, and I'm sure that's adding to the idle power draw.

https://www.jeffgeerling.com/blog/2025/dells-version-dgx-spark-fixes-pain-points/ -

ekio Good news from Ngreedia? Wow

Not too early to see competition in the Arm64 market for laptops. I was wondering if ARM was exclusively licensed to Qualcomm at this point…

x86 is a bloated, obsolete CISC ISA with instructions and concepts from 50 years ago; AArch64 (Armv9) is a RISC ISA that is barely over a decade old. (In advance: stop confusing Arm the company with Arm the ISA.) -

Roland Of Gilead I've been wondering who would be first to the market with an ARM-based SoC with a more powerful GPU. If Qualcomm's Snapdragon line isn't on the same trajectory, its iGPUs are just too weak to compete. It will be interesting to see real testing and performance numbers.

Question for those in the know: can ARM not use PCIe to make use of modern GPUs? Why does that seem so hard? -

bit_user

Roland Of Gilead said: "I've been wondering who would be first to the market with an ARM-based SoC with a more powerful GPU. If Qualcomm's Snapdragon line isn't on the same trajectory, its iGPUs are just too weak to compete. It will be interesting to see real testing and performance numbers."

Chips & Cheese interviewed the team lead for the newest Adreno GPU in Qualcomm's Snapdragon X2:

https://chipsandcheese.com/p/diving-into-qualcomms-upcoming-adreno

I'm just now noticing this is the same guy who's jumping ship and heading to Intel!

https://www.tomshardware.com/pc-components/gpus/eric-demers-leaves-for-intel-after-14-years-at-qualcomm-father-of-radeon-and-adreno-gpus-now-sits-at-lip-bu-tans-table

Anyway, the point I wanted to make is that not only are they continuing to optimize their GPU IP, but Snapdragon X2 Elite will also expand its memory datapath to 192-bit, which is halfway between the standard laptop/desktop spec of 128-bit and the 256-bit width used by Nvidia GB10/N1 and AMD's Strix Halo (Ryzen AI Max). On top of that, the higher-speed LPDDR5X they're using closes most of the remaining gap.
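A quick sketch of how bus width and memory speed combine into peak bandwidth for the parts named above. Only the bus widths come from the comment; the transfer rates (LPDDR5X-9523 for the X2 Elite, LPDDR5X-8000 for Strix Halo, LPDDR5X-8533 for GB10 and for the illustrative 128-bit laptop) are assumptions based on published specs as I understand them, so check the vendor spec sheets before relying on the exact numbers.

```python
# Peak-bandwidth comparison for the memory bus widths mentioned above.
# Bus widths are from the comment; transfer rates (MT/s) are assumptions.


def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mtps: float) -> float:
    """Theoretical peak in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * transfer_rate_mtps / 1000


configs = {
    "laptop_128": (128, 8533),      # illustrative 128-bit laptop, LPDDR5X-8533
    "x2_elite_192": (192, 9523),    # Snapdragon X2 Elite, assumed LPDDR5X-9523
    "strix_halo_256": (256, 8000),  # AMD Strix Halo, LPDDR5X-8000
    "gb10_256": (256, 8533),        # Nvidia GB10/N1, LPDDR5X-8533
}

results = {name: peak_bandwidth_gbs(w, r) for name, (w, r) in configs.items()}
reference = results["gb10_256"]

for name, bw in results.items():
    # Show each part's theoretical peak and its fraction of GB10's peak.
    print(f"{name:16s} {bw:6.1f} GB/s  ({bw / reference:.0%} of GB10)")
```

Under these assumptions, the 192-bit X2 lands at roughly 84% of GB10's theoretical peak and about 89% of Strix Halo's, versus 50% of GB10's for the 128-bit laptop.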

And if that weren't enough, it will feature a rather large chunk of SRAM. As large iGPUs tend to be rather bottlenecked by memory bandwidth, these changes could result in a decent step up for the X2. You can see more published details of the X2's GPU (and the rest of it) here:

https://chipsandcheese.com/p/qualcomms-snapdragon-x2-elite

Roland Of Gilead said: "Question for those in the know: can ARM not use PCIe to make use of modern GPUs? Why does that seem so hard?"

There needs to be a market in order for such products to make sense. Until a market gets established for ARM-based gaming laptops, I think you won't see any with dGPUs.

On a technical level, yes - dGPUs have been used in various ARM-based machines, ranging from Ampere workstations to Raspberry Pi 4 & 5 and others.

https://www.jeffgeerling.com/blog/2023/ampere-altra-max-windows-gpus-and-gaming/ https://www.jeffgeerling.com/blog/2025/big-gpus-dont-need-big-pcs/ -

abufrejoval I guess if you're Nvidia you can afford to delay gen1 for a few years and then announce gen2 just a year later...

But who'd buy a gen1 product when it's bound to be obsolete before it's dry?

I know they have to show a roadmap and their ability to execute on it, but this isn't inclining me one bit towards Nvidia for an ARM laptop... unless they get the Linux part perfect, which should be a given.

Somehow I fear it won't be on consumer hardware. -

ezst036

hwertz said: "And more importantly it should have good Linux on ARM support."

Almost certainly, no.

Nvidia's biggest problem here is its graphics driver, which is known to be highly unstable in Wayland sessions. Nvidia doesn't bother much with QA/QC on the Wayland side, and it shows. They'll get the performance up, and then: oh, you're having graphical artifacting? We'll get back to you. Monitor shuts off? We'll get back to you. Sound stopped? Let's have a chat two years from now, pal. All sorts of weird, entirely random stuff happens, including random lockups. It doesn't happen on Nvidia/Xorg, and it doesn't happen anywhere on Intel- or AMD-based systems with open-source drivers. Some people incorrectly assume this behavior is because Wayland is still untested, but that's not it.

It's Nvidia's driver. This driver is terrible on the Wayland side.

If Nvidia had any interest in Linux gaming on ARM, they could (or should) have been beefing up their GPU drivers, and it would've been a huge talking point for them, since it is a fact that Linux is now a fast-growing gaming platform.

Poor stability on x86 Nvidia and Wayland means Nvidia isn't going to bother fixing ARM on Wayland in their buggy graphics driver for such a niche use case. -

bit_user Here's how I figure these GPUs compare on paper. Note that I used the RTX 5070 as a stand-in for the GB10. Take the pixels/cycle and texels/cycle specs for AMD and Nvidia with a grain of salt, since I derived them from the per-second specs and I'm just assuming those were computed against the boost clocks.

| GPU | FP32 ALUs | Texels/cycle | Pixels/cycle |
|---|---|---|---|
| Adreno X2 | 2048 | 128 | 64 |
| Radeon 8060S | 2560 | 160 | 64 |
| RTX 5070 | 6144 | 171 | 78 |

-

abufrejoval

bit_user said: "And if that weren't enough, it will feature a rather large chunk of SRAM. As large iGPUs tend to be rather bottlenecked by memory bandwidth, these changes could result in a decent step up for the X2. You can see more published details of the X2's GPU (and the rest of it) here: https://chipsandcheese.com/p/qualcomms-snapdragon-x2-elite"

That caught my attention, too: a full frame buffer in on-die SRAM! That ought to do a lot of good for gaming performance...

...if you can actually hide that from the game designers via the engine. And that's where I'd be sceptical, at least until proven otherwise, that you'd be able to hide this pretty fundamental paradigm difference vs. non-tiled GPU architectures.

And even if a game engine supports it at a low level but requires high-level adjustments to the game to take advantage of it, I'm not very hopeful it would find many adopters, judging by the various DLSS alternatives that see little use. And that's a very small change in comparison.

bit_user said: "There needs to be a market in order for such products to make sense. Until a market gets established for ARM-based gaming laptops, I think you won't see any with dGPUs."

The technical hurdles for dGPU support on ARM are rather low, but I agree that it makes very little sense commercially. You go with ARM for battery runtime, while dGPUs are at best for luggable workstations, where ARM doesn't have an inherent advantage yet; I'm not sure how much Nvidia wants to get it there.

Of course with a modular setup like a Framework laptop, that should just be a matter of picking the parts... -

bit_user

abufrejoval said: "I guess if you're Nvidia you can afford to delay gen1 for a few years and then announce gen2 just a year later..."

It's only launching 3 quarters after it was initially rumored. The second gen is launching about 6 quarters after that. So, a 1.5-year gap, instead of 2 or more.
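The quarter counting in this reply can be checked with a tiny sketch. It treats Computex 2025 (held in May 2025) as Q2 2025 and uses the Q1 2026 and Q3 2027 dates from the DigiTimes report; the helper names are made up for illustration.

```python
# Counting quarters between the dates discussed in the thread.
# Assumption: the original Computex 2025 rumor maps to Q2 2025.


def quarter_index(year: int, quarter: int) -> int:
    """Map (year, quarter) to a running count of quarters."""
    return year * 4 + (quarter - 1)


def quarters_between(start: tuple[int, int], end: tuple[int, int]) -> int:
    """Number of quarters from start to end (positive if end is later)."""
    return quarter_index(*end) - quarter_index(*start)


rumored_n1 = (2025, 2)  # Computex 2025, the original rumored launch
n1x_launch = (2026, 1)  # DigiTimes: N1X laptops in Q1 2026
n2_launch = (2027, 3)   # DigiTimes: N2 WoA model in Q3 2027 at the earliest

delay = quarters_between(rumored_n1, n1x_launch)
gap = quarters_between(n1x_launch, n2_launch)
print(f"N1 delay: {delay} quarters; N1 -> N2 gap: {gap} quarters ({gap / 4} years)")
```

Under those assumptions the delay comes out to 3 quarters and the N1-to-N2 gap to 6 quarters, matching the 1.5-year figure above.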

Intel often launches new laptop CPUs on an annual cadence. I don't hear you complaining about that!

abufrejoval said: "But who'd buy a gen1 product when it's bound to be obsolete before it's dry?"

Well, if it's a more finished product than the Snapdragon X laptops were at launch, then anyone who needs a new laptop this year would be a good candidate.

It's usually the case with tech products that something better will come along, if you can afford to wait. Nothing unusual in that regard.