AMD touts Instinct MI430X, MI440X, and MI455X AI accelerators and Helios rack-scale AI architecture at CES — full MI400-series family fulfills a broad range of infrastructure and customer requirements

There's an Instinct MI400X-series GPU for everyone in 2026

Artificial intelligence is arguably the hottest technology around, so it's not surprising that AMD used part of its CES keynote to reveal new information about its upcoming Helios rack-scale solution for AI as well as its next-generation Instinct MI400-series GPUs for AI and HPC workloads. In addition, the company is rolling out platforms designed to wed next-generation AI and HPC accelerators with existing data centers.

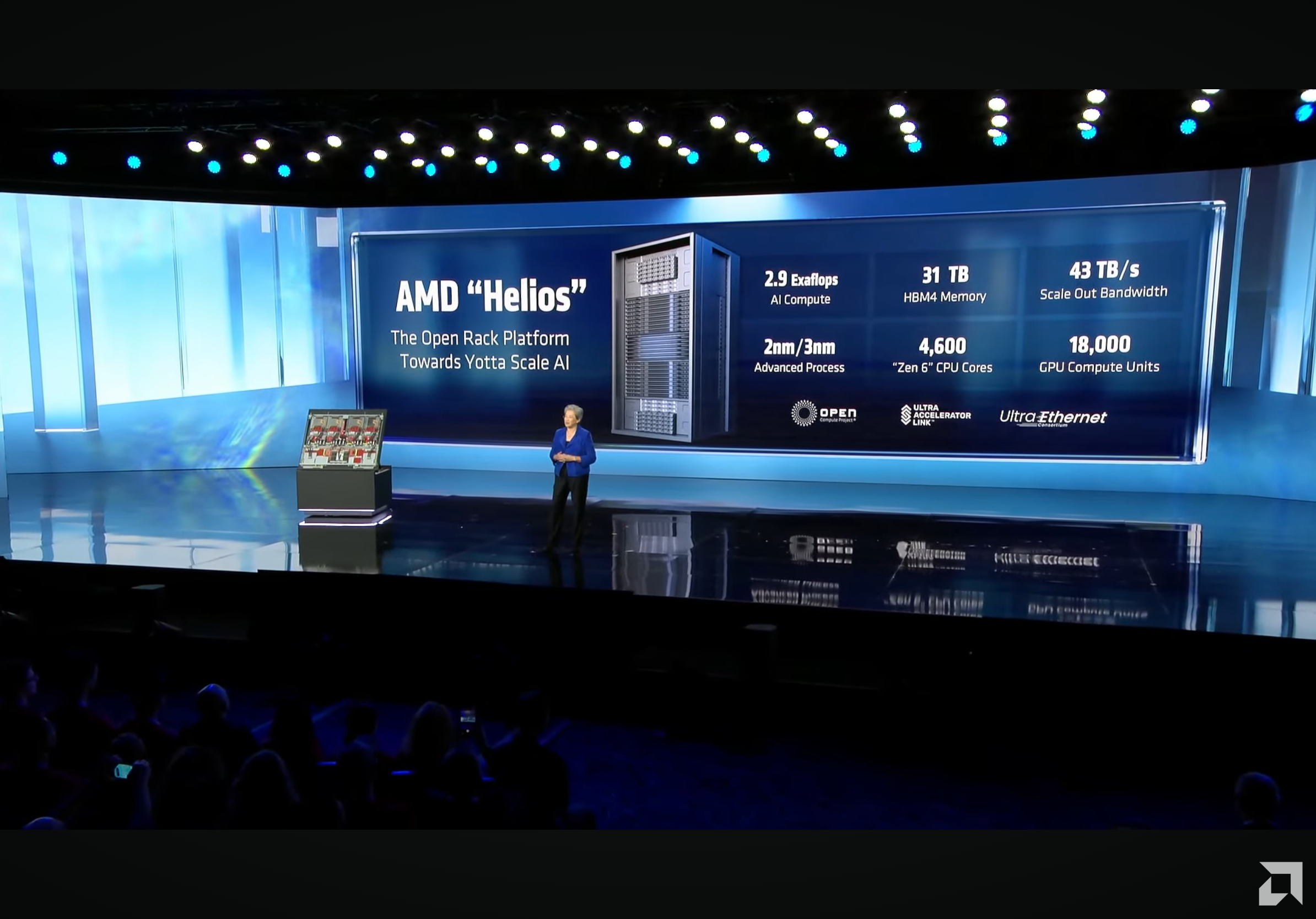

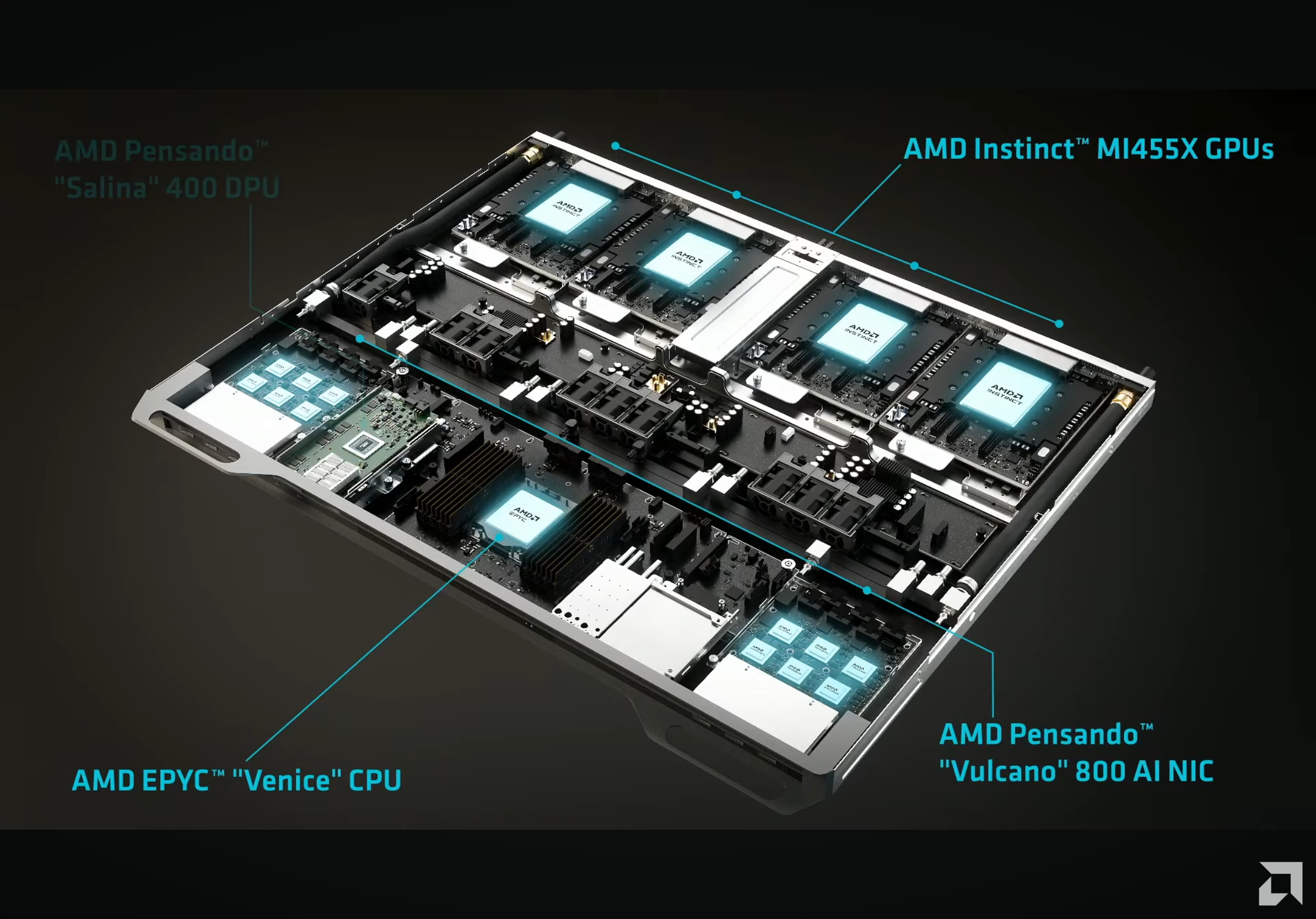

Helios is AMD's first rack-scale system solution for high-performance computing deployments based on AMD's Zen 6 EPYC 'Venice' CPU. It packs 72 Instinct MI455X-series accelerators with 31 TB of HBM4 memory in total with aggregate memory bandwidth of 1.4 PB/s, and it's meant to deliver up to 2.9 FP4 exaFLOPS for AI inference and 1.4 FP8 exaFLOPS for AI training. Helios has formidable power consumption and cooling requirements, so it is meant to be installed into modern AI data centers with sufficient supporting infrastructure.
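For a rough sense of scale, the rack-level figures above can be divided across the 72 accelerators. This is a minimal back-of-the-envelope sketch, not an AMD specification: it assumes the totals are spread evenly across all GPUs and that the quoted units are decimal (1 TB = 1,000 GB). The variable and function names are illustrative only.

```python
# Rack-level Helios figures as quoted in the article.
NUM_GPUS = 72
TOTAL_HBM4_TB = 31.0         # total HBM4 capacity, TB
TOTAL_BANDWIDTH_PBS = 1.4    # aggregate memory bandwidth, PB/s
FP4_EXAFLOPS = 2.9           # FP4 inference throughput, exaFLOPS
FP8_EXAFLOPS = 1.4           # FP8 training throughput, exaFLOPS


def per_gpu(total, count=NUM_GPUS):
    """Split a rack-level total evenly across accelerators (an assumption)."""
    return total / count


# Convert to the next smaller decimal unit (x1000) for readability.
hbm_per_gpu_gb = per_gpu(TOTAL_HBM4_TB) * 1000
bw_per_gpu_tbs = per_gpu(TOTAL_BANDWIDTH_PBS) * 1000
fp4_per_gpu_pflops = per_gpu(FP4_EXAFLOPS) * 1000
fp8_per_gpu_pflops = per_gpu(FP8_EXAFLOPS) * 1000

print(f"HBM4 per GPU:      ~{hbm_per_gpu_gb:.1f} GB")
print(f"Bandwidth per GPU: ~{bw_per_gpu_tbs:.1f} TB/s")
print(f"FP4 per GPU:       ~{fp4_per_gpu_pflops:.1f} PFLOPS")
print(f"FP8 per GPU:       ~{fp8_per_gpu_pflops:.1f} PFLOPS")
```

Because the rack totals are rounded, the per-GPU values are approximate and may differ slightly from any per-accelerator numbers AMD publishes.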

Beyond the MI455X, AMD's broader Instinct MI400X family of accelerators will feature compute chiplets produced on TSMC's N2 (2nm-class) fabrication process, making them the first GPUs to use this manufacturing technology. Also, for the first time the Instinct MI400X family will be split across different subsets of the CDNA 5 architecture.

The newly disclosed MI440X and MI455X are set to be optimized for low-precision workloads, such as FP4, FP8, and BF16. The previously disclosed MI430X targets both sovereign AI and HPC, thus it fully supports FP32 and FP64 technical computing and traditional supercomputing tasks. By tailoring each processor to a specific precision envelope, AMD can eliminate redundant execution logic and therefore improve silicon efficiency in terms of power and costs.

The MI440X powers AMD's new Enterprise AI platform, which is not a rack-scale solution but a standard rack-mounted server with one EPYC 'Venice' CPU and eight MI440X GPUs.

The company positions this system as an on-premises platform for enterprise AI deployments. It is designed to handle training, fine-tuning, and inference workloads while remaining drop-in compatible with the power and cooling of existing data-center infrastructure, without any architectural changes.

Furthermore, the company will offer a sovereign AI and HPC platform based on EPYC 'Venice-X' processors, which have additional cache and extra single-thread performance, paired with Instinct MI430X accelerators that can process both low-precision AI data and high-precision HPC workloads.


The Instinct MI430X, MI440X, and MI455X accelerators are expected to feature Infinity Fabric alongside UALink for scale-up connectivity, thus making them the first accelerators to support the new interconnect. However, practical UALink adoption will depend on ecosystem partners such as Astera Labs, Auradine, Enfabrica, and Xconn.

If these companies deliver UALink switching silicon in the second half of 2026, then we are going to see Helios machines interconnected using UALink. In the absence of such switches, UALink-based systems will use UALink-over-Ethernet (which is not exactly the way UALink was meant to be used) or stick to traditional mesh or torus configurations rather than large-scale fabrics.

As for scale-out connectivity, AMD plans to offer its Helios platform with Ultra Ethernet. Unlike UALink, Ultra Ethernet can rely on existing network adapters, such as AMD’s Pensando Pollara 400G and the forthcoming Pensando Vulcano 800G cards, which can enable advanced connectivity in data centers that already support the latest technology.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Stomx: My impression is that by the next CES, when somebody starts talking about AI, the audience will throw up.

Stomx: By the way, can somebody explain to me how they plan to increase AI performance 10,000x in the next 5 years without at least a 1000x increase in power consumption, when during the last 5 years they were not able to increase Ryzen single-core or multi-core performance even 2x?

George³:

Stomx said: By the way, can somebody explain to me how they plan to increase AI performance 10,000x in the next 5 years without at least a 1000x increase in power consumption, when during the last 5 years they were not able to increase Ryzen single-core or multi-core performance even 2x?

It's easy with zero-precision calculations like FP0 and INT0. /s

Penzi:

George³ said: It's easy with zero-precision calculations like FP0 and INT0. /s

Can’t seem to get the :LOL: instead of the (y)… ah well 🤷♂️

bit_user:

Stomx said: By the way, can somebody explain to me how they plan to increase AI performance 10,000x in the next 5 years without at least a 1000x increase in power consumption

Fair question. The usual answers are things like:

1. Compute-in-memory (or near memory), as most energy in computers is spent on moving data around.
2. Newer data formats that increase information density and are cheaper to process.
3. Possibly more use of compression, although that overlaps with number 2.
4. Dataflow-like processing architectures that don't suffer the inefficiencies of a cache hierarchy or the latency penalties of random access.
5. Exotic forms of computation.

I've lost track of which, but one of the HBM4 variants is supposed to make progress on compute/memory integration. We've already seen several iterations of new data formats, so I'm not sure there's too much more mileage in numbers 2 & 3.

Number 4 is something AMD just started dabbling with, in the PS5 and RDNA4. The upcoming PS6 and UDNA architecture should go a lot further, in this direction. I think that's what "Neural Arrays" is getting at:

https://www.tomshardware.com/video-games/console-gaming/sony-and-amd-tease-likely-playstation-6-gpu-upgrades-radiance-cores-and-a-new-interconnect-for-boosting-ai-rendering-performance

Stomx said: when during the last 5 years they were not able to increase Ryzen single-core or multi-core performance even 2x?

Improving single-thread performance is hard.

As for multi-core, they really did, if you look at their server or workstation CPUs. Not desktop, but those have artificially-restricted core counts, based on the usage patterns and needs of typical desktop users & apps.

However, by focusing on CPUs, you're really missing the point. This isn't about CPUs. You should look at GPU compute performance, instead. That said, I think all of this isn't nearly enough to cover the gap cited in your question.