AMD unwraps Instinct MI500 boasting 1,000X more performance versus MI300X — setting the stage for the era of YottaFLOPS data centers

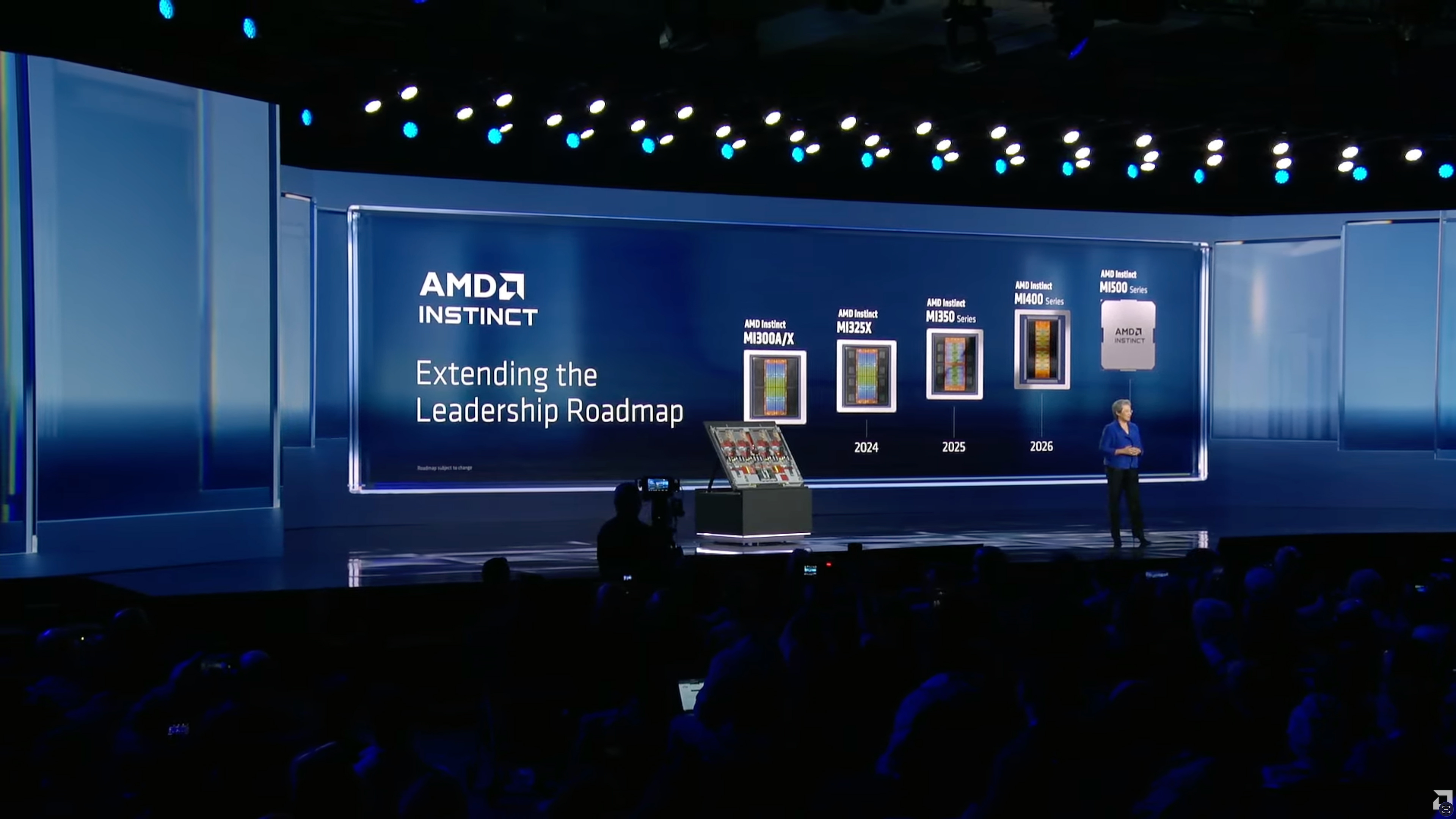

Next-generation CDNA 6 architecture on track for 2027.

The compute demands of AI data centers are set to increase dramatically, from around 100 ZettaFLOPS today to 10+ YottaFLOPS* in the next five years (roughly a 100-fold increase), according to AMD. To stay relevant, hardware makers must therefore increase the performance of their products across the full stack every year. To that end, AMD chief executive Lisa Su used the company's CES keynote to announce the Instinct MI500X-series AI and HPC GPUs, due in 2027.
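As a quick sanity check on those figures, the arithmetic can be sketched in a few lines of Python. The start and end values are AMD's; the smooth compound-growth rate is an assumption made here purely for illustration, not something AMD stated.

```python
# SI prefixes as integers to keep the arithmetic exact
EXA = 10**18
ZETTA = 10**21
YOTTA = 10**24

current_flops = 100 * ZETTA  # "around 100 ZettaFLOPS today" (AMD's figure)
target_flops = 10 * YOTTA    # "10+ YottaFLOPS" (AMD's figure, lower bound)
years = 5                    # "in the next five years"

# Total growth implied by the two endpoints
growth = target_flops / current_flops

# Assumption: demand compounds at a constant rate each year
annual_factor = growth ** (1 / years)

print(f"total growth: {growth:.0f}x")
print(f"implied annual growth: {annual_factor:.2f}x")
print(f"target in ExaFLOPS: {target_flops // EXA:,}")
```

Under that constant-rate assumption, demand would have to grow by roughly two and a half times every year to reach the stated target.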

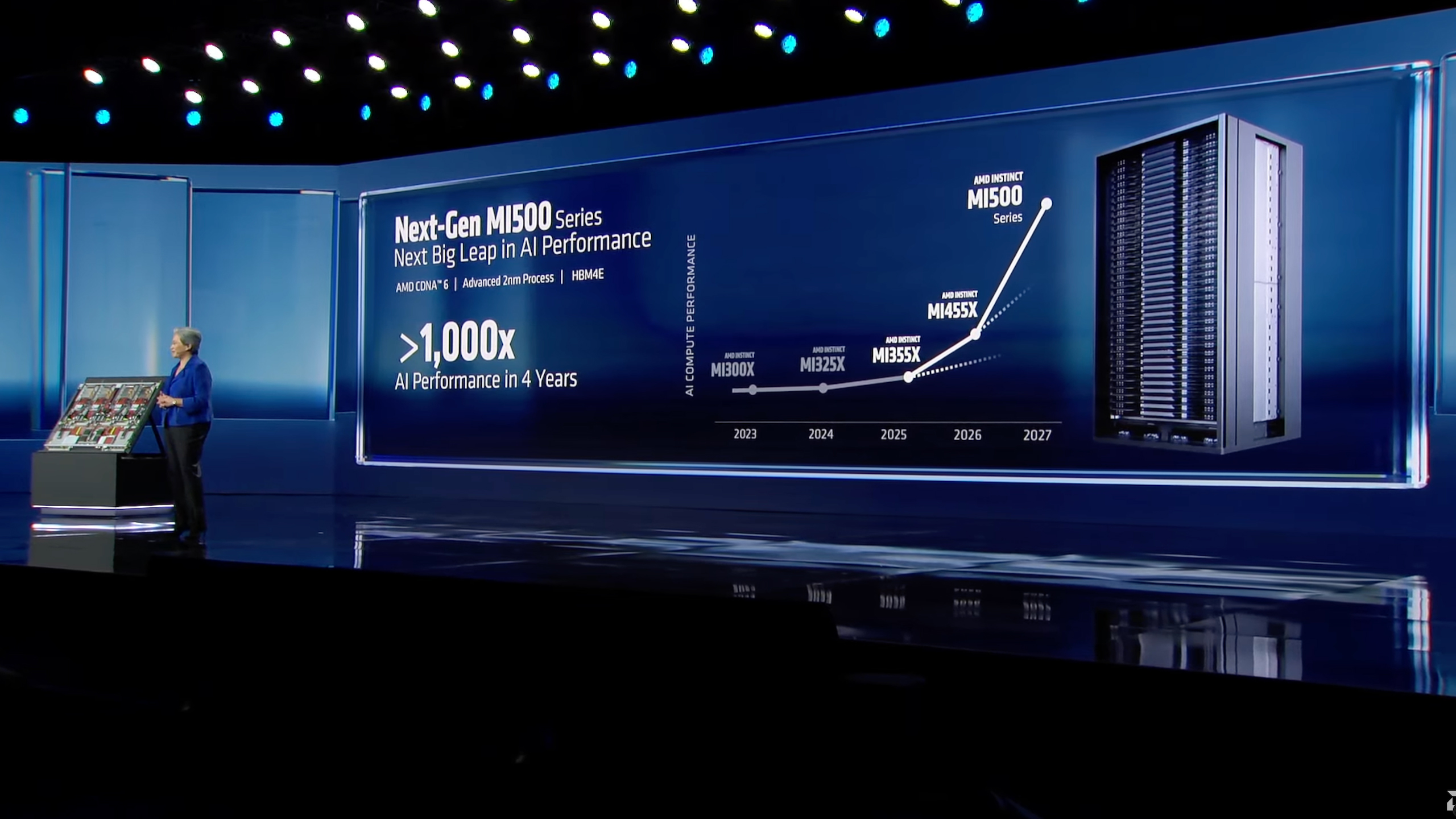

"Demand for compute is growing faster than ever," said Lisa Su, chief executive of AMD. "Meeting that demand means continuing to push the envelope on performance far beyond where we are today. MI400 was the major inflection point in terms of delivering leadership training across all workloads, inference, and scientific computing. We are not stopping there. Development of our next-generation MI500-series is well underway. With MI500, we take another major leap on performance. It is built on our next gen CDNA 6 architecture [and] manufactured on 2nm process technology and uses higher speed HBM4E memory."

AMD's Instinct MI500X-series accelerators are set to be based on the CDNA 6 architecture (no UDNA yet?), with their compute chiplets made on one of TSMC's N2-series (2nm-class) fabrication processes. AMD says that its Instinct MI500X GPUs will offer up to 1,000 times higher AI performance than the Instinct MI300X accelerator from late 2023, but it does not define the comparison metrics.

"With the launch of MI500 in 2027, we are on track to deliver 1000 times increase in AI performance over the last four years, making more powerful AI accessible to all," added Su.

Achieving a 1000X performance increase in four years is a major achievement, though we should keep in mind that between the Instinct MI300X and Instinct MI500 there is a three-generation instruction set architecture (ISA) gap (CDNA 3 => CDNA 6), a three-generation memory gap (HBM3 => HBM4E), the addition of FP4 and other low-precision formats, faster scale-up interconnects, and possibly a PCIe 6.0 connection to the host CPU.
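To put the headline number in perspective, the sketch below computes the average gain per year and per architecture generation that a 1,000-fold total increase would require. The helper `per_step_factor` is a hypothetical name introduced here, and splitting the gain evenly across steps is an illustrative assumption; AMD has not said how the improvement is distributed or what metric it uses.

```python
def per_step_factor(total_gain: float, steps: int) -> float:
    """Geometric-mean gain per step needed to reach total_gain in `steps` steps."""
    return total_gain ** (1.0 / steps)

TOTAL_GAIN = 1000.0  # AMD's "up to 1,000 times" claim
YEARS = 4            # late 2023 (MI300X) to 2027 (MI500)
GENERATIONS = 3      # CDNA 3 => CDNA 6

# Assumption: the gain is spread evenly across years or generations
per_year = per_step_factor(TOTAL_GAIN, YEARS)
per_generation = per_step_factor(TOTAL_GAIN, GENERATIONS)

print(f"required gain per year:       {per_year:.2f}x")
print(f"required gain per generation: {per_generation:.2f}x")
```

An even split works out to about an order of magnitude per generation, which helps explain why the comparison probably leans on lower-precision formats and other changes rather than on like-for-like throughput.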

Nonetheless, the Instinct MI500 will be an all-new generation of AMD's AI and HPC GPUs with major architectural improvements, which probably include substantially higher tensor/matrix-compute density, tighter integration between compute and memory, and significantly improved performance-per-watt, perhaps achieved by a combination of ISA changes and TSMC's N2P fabrication process.

*One YottaFLOPS equals 1,000 ZettaFLOPS, or one million ExaFLOPS.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

usertests:

"Achieving a 1000X performance increase in four years is a major achievement, though we should keep in mind that between the Instinct MI300X and Instinct MI500 there is a three-generational instruction set architecture (ISA) gap (CDNA 3 => CDNA 6), a three generational memory gap (HBM3 => HBM4E), an addition of FP4 and other low-precision formats, faster scale-up interconnects, and possibly PCIe 6.0 interconnection to host CPU."

That's pretty unfathomable marketing. I have to imagine it's some edge case or something that couldn't run well in lower memory capacity, with lower precision added.

edzieba:

"AMD says that its Instinct MI500X GPUs will offer up to 1,000 times higher AI performance compared to the Instinct MI300X accelerator from late 2023, but does not exactly define comparison metrics."

Presumably Bungholiomarks. Anything else would hardly be considered a reputable performance metric!

emerth:

I'm thinking 1000x the FP4 perf compared to Mi300 FP16 perf. That or AMD is implementing 2 bit FP.

bit_user:

emerth said: "I'm thinking 1000x the FP4 perf compared to Mi300 FP16 perf. That or AMD is implementing 2 bit FP."

Well, AMD claims MI300X had up to 5.22 POPS of sparse int8 performance. I wonder if they're comparing theoretical MI500X performance on something like BFP4 vs. the actual achieved performance of the MI300X. Even then, 1000x seems like quite a stretch. I could probably believe 100x, though.

qwertymac93:

The 1000x claim is probably for whole system performance, not just a single card. Taking into account interconnect advancements and larger addressable memory, 1000x seems possible, if unfair in the real world. You'd never use these systems beyond what they are clearly bottlenecked.

bit_user:

qwertymac93 said: "The 1000x claim is probably for whole system performance, not just a single card. Taking into account interconnect advancements and larger addressable memory, 1000x seems possible,"

System performance is determined by your biggest bottleneck. It really doesn't matter what else you do, as that bottleneck will be the limiting factor.

As such, improvements in memory bandwidth, compute, and I/O are never multiplicative. One of them will be the limiting factor and how much you improved the system from whatever was your previous bottleneck is what determines system performance.

qwertymac93 said: "You'd never use these systems beyond what they are clearly bottlenecked."

This statement doesn't make sense. The bottleneck fundamentally constrains actual use. You cannot push it beyond what the bottleneck allows.
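The bottleneck argument in this last comment can be illustrated with a toy model in which system throughput is simply the slowest stage. This is a minimal sketch: the stage names and all speedup numbers are hypothetical and chosen only to show the effect, and real workloads overlap compute, memory, and I/O in more complicated ways.

```python
import math

def system_throughput(stages: dict[str, float]) -> float:
    """Toy model: the slowest stage limits the whole system."""
    return min(stages.values())

# Hypothetical, perfectly balanced baseline (arbitrary units)
baseline = {"compute": 1.0, "memory_bandwidth": 1.0, "io": 1.0}

# Hypothetical per-stage improvements for a newer system
speedups = {"compute": 10.0, "memory_bandwidth": 4.0, "io": 2.0}

upgraded = {name: baseline[name] * speedups[name] for name in baseline}

# What the toy model predicts versus naively multiplying the gains
actual_speedup = system_throughput(upgraded) / system_throughput(baseline)
naive_speedup = math.prod(speedups.values())
limiting_stage = min(upgraded, key=upgraded.get)

print(f"naive multiplied speedup: {naive_speedup:.0f}x")
print(f"bottleneck-limited speedup: {actual_speedup:.0f}x (limited by {limiting_stage})")
```

In this model the system speeds up only as much as its least-improved stage, no matter how large the other gains are, which is the commenter's point about improvements not being multiplicative.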